Environment: three machines:

master:192.168.100.200

node1:192.168.100.201

node2:192.168.100.202

1. Initialize the system:

1). Set up mutual SSH trust between the machines:

    [root@master ~]# ssh-keygen
    [root@master .ssh]# mv id_rsa.pub authorized_keys
    [root@master ~]# for i in 201 202;do scp -r /root/.ssh/ 192.168.100.$i:/root/;done

Set the hostname on each machine (one command per machine, matching its role):

    [root@test1 yum.repos.d]# hostnamectl set-hostname master
    [root@test1 yum.repos.d]# hostnamectl set-hostname node1
    [root@test1 yum.repos.d]# hostnamectl set-hostname node2

    [root@master ~]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.100.200 master
    192.168.100.201 node1
    192.168.100.202 node2
    [root@master .ssh]# for i in 201 202;do scp /etc/hosts 192.168.100.$i:/etc/;done

2). Configure the yum repositories:

    [root@test1 yum.repos.d]# cat > kubernetes.repo << EOF
    > [kubernetes]
    > name=a li yun
    > baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    > gpgcheck=0
    > enabled=1
    > EOF
    [root@test1 yum.repos.d]# cat > docker.repo << EOF
    > [docker]
    > name= a li yun
    > baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/x86_64/stable/
    > gpgcheck=0
    > enabled=1
    > EOF
    [root@test1 yum.repos.d]# yum repolist
    Loaded plugins: fastestmirror
    ............................
    kubernetes                                   421/421
    repo id          repo name        status
    cdrom            local yum        3,831
    docker           a li yun         61
    kubernetes       a li yun         421
    repolist: 4,313
    [root@test1 yum.repos.d]# yum makecache fast

3). Disable the swap partition (turn it off now, then comment out the swap entry in /etc/fstab so it stays off after a reboot):

    [root@master yum.repos.d]# swapoff -a
    [root@master yum.repos.d]# sed -i '/swap/s@\(.*\)@#\1@' /etc/fstab
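The `sed` substitution used to disable swap depends on a capture group, so the escaping matters: `\(.*\)` captures the whole line and `\1` writes it back after the `#`. As a minimal sketch, the function below applies the same idea to any fstab-style file so it can be tried on a copy first. The function name `comment_out_swap` and the sample file contents are illustrative assumptions, and the extra `^[^#]` guard (skip lines that are already commented) is an addition to the original command.

```shell
#!/bin/bash
# comment_out_swap FILE: prefix every not-yet-commented line that mentions
# "swap" with '#'. (Hypothetical helper name, not part of the original steps.)
comment_out_swap() {
    local fstab="$1"
    # \(.*\) captures the whole line; \1 writes it back after the '#'.
    sed -i '/^[^#].*swap/s@\(.*\)@#\1@' "$fstab"
}

# Try it on a throwaway file rather than on /etc/fstab itself.
sample="$(mktemp)"
printf '%s\n' \
    '/dev/mapper/centos-root /    xfs  defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' > "$sample"
comment_out_swap "$sample"
cat "$sample"
```

Running the function a second time leaves the file unchanged, because commented lines no longer match the address pattern.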

2. Install Docker (required on every node):

    [root@master .ssh]# yum list docker-ce.x86_64 --showduplicates | sort -r
    [root@master .ssh]# yum install docker-ce-19.03.4-3.el7 -y
    [root@master yum.repos.d]# systemctl enable docker
    [root@master yum.repos.d]# systemctl restart docker
    [root@master yum.repos.d]# vim /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    [root@master yum.repos.d]# cat > /etc/docker/daemon.json << EOF
    > {
    >   "registry-mirrors": ["https://l6ydvf0r.mirror.aliyuncs.com"],
    >   "exec-opts": ["native.cgroupdriver=cgroupfs"]
    > }
    > EOF

    [root@master yum.repos.d]# systemctl daemon-reload && systemctl restart docker
    [root@master yum.repos.d]# docker info
    .................................
    Labels:
    Experimental: false
    Insecure Registries:
     127.0.0.0/8
    Registry Mirrors:
     https://l6ydvf0r.mirror.aliyuncs.com/
    Live Restore Enabled: false

## Repeat the steps above on the other two nodes.
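The kernel settings in /etc/sysctl.d/k8s.conf only take effect once they are loaded, and the `net.bridge.*` keys exist only after the `br_netfilter` module is loaded. The following is a minimal sketch of writing the file non-interactively instead of through `vim`; the function name `write_k8s_sysctl` is an illustrative assumption, and the demo writes to a temporary file so it can be run safely anywhere.

```shell
#!/bin/bash
# write_k8s_sysctl FILE: write the three kernel settings used in this guide.
# (Hypothetical helper name.)
write_k8s_sysctl() {
    cat > "$1" << 'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Dry run against a temporary file. On a real node the target would be
# /etc/sysctl.d/k8s.conf, followed by (as root):
#   modprobe br_netfilter   # makes the net.bridge.* keys available
#   sysctl --system         # reloads every file under /etc/sysctl.d
conf="$(mktemp)"
write_k8s_sysctl "$conf"
cat "$conf"
```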

3. Deploy Kubernetes with kubeadm:

    [root@master yum.repos.d]# yum install kubelet kubeadm kubectl -y

Install on the worker nodes:

    [root@node1 ~]# yum install kubelet kubeadm -y
    [root@master yum.repos.d]# systemctl enable kubelet

4. Initialize the cluster:

1. Script for pulling the images manually:

    [root@master kubernetes]# cat docker_images.sh
    #!/bin/bash
    echo -e "\033[31m Pulling images... \033[0m"
    IMAGE=(kube-apiserver-amd64:v1.16.0 kube-controller-manager-amd64:v1.16.0 etcd-amd64:3.3.15-0 kube-scheduler-amd64:v1.16.0 kube-proxy-amd64:v1.16.0 pause:3.1)
    # pull every control-plane image from the mirror repository
    for i in ${IMAGE[@]}; do
        docker pull mirrorgooglecontainers/${i}
    done
    # coredns lives in its own repository, so pull it once outside the loop
    docker pull coredns/coredns:1.6.2
    # retag the images with the k8s.gcr.io names that kubeadm expects
    docker tag mirrorgooglecontainers/kube-apiserver-amd64:v1.16.0 k8s.gcr.io/kube-apiserver:v1.16.0
    docker tag mirrorgooglecontainers/kube-controller-manager-amd64:v1.16.0 k8s.gcr.io/kube-controller-manager:v1.16.0
    docker tag mirrorgooglecontainers/kube-scheduler-amd64:v1.16.0 k8s.gcr.io/kube-scheduler:v1.16.0
    docker tag mirrorgooglecontainers/kube-proxy-amd64:v1.16.0 k8s.gcr.io/kube-proxy:v1.16.0
    docker tag mirrorgooglecontainers/etcd-amd64:3.3.15-0 k8s.gcr.io/etcd:3.3.15-0
    docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1
    docker tag coredns/coredns:1.6.2 k8s.gcr.io/coredns:1.6.2
    # remove the mirror-named tags that are no longer needed
    docker rmi mirrorgooglecontainers/kube-apiserver-amd64:v1.16.0
    docker rmi mirrorgooglecontainers/kube-controller-manager-amd64:v1.16.0
    docker rmi mirrorgooglecontainers/etcd-amd64:3.3.15-0
    docker rmi mirrorgooglecontainers/kube-scheduler-amd64:v1.16.0
    docker rmi mirrorgooglecontainers/kube-proxy-amd64:v1.16.0
    docker rmi mirrorgooglecontainers/pause:3.1
    docker rmi coredns/coredns:1.6.2
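The pull, tag, and remove steps in the script follow one naming rule: the mirror image name minus the `-amd64` suffix, prefixed with `k8s.gcr.io/`. A more compact variant could derive the target name instead of listing every command by hand. This is only a sketch: `target_image` and the `DOCKER` variable are hypothetical names, coredns is left out because it comes from a different repository, and by default the loop only prints the commands it would run.

```shell
#!/bin/bash
# target_image NAME:TAG: map a mirror image name to the name kubeadm expects,
# e.g. kube-apiserver-amd64:v1.16.0 -> k8s.gcr.io/kube-apiserver:v1.16.0
target_image() {
    local name="${1%%:*}" tag="${1##*:}"
    echo "k8s.gcr.io/${name%-amd64}:${tag}"
}

# DOCKER defaults to "echo docker", so nothing is pulled unless the script is
# run with DOCKER=docker in the environment.
DOCKER="${DOCKER:-echo docker}"
MIRROR=mirrorgooglecontainers
IMAGES=(kube-apiserver-amd64:v1.16.0 kube-controller-manager-amd64:v1.16.0
        kube-scheduler-amd64:v1.16.0 kube-proxy-amd64:v1.16.0
        etcd-amd64:3.3.15-0 pause:3.1)

for image in "${IMAGES[@]}"; do
    $DOCKER pull "${MIRROR}/${image}"
    $DOCKER tag "${MIRROR}/${image}" "$(target_image "$image")"
    $DOCKER rmi "${MIRROR}/${image}"
done
```

Deriving the name keeps the image list as the single place to edit when the Kubernetes version changes.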

    [root@master kubernetes]# kubeadm config print init-defaults > kubeadm.yaml

Edit the file (vim kubeadm.yaml); the parts that matter are:

    apiVersion: kubeadm.k8s.io/v1beta2
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.100.200    ## changed
    kubernetesVersion: v1.16.0
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
      podSubnet: 10.244.0.0/16    ## added: pod network, under the existing networking block
    scheduler: {}

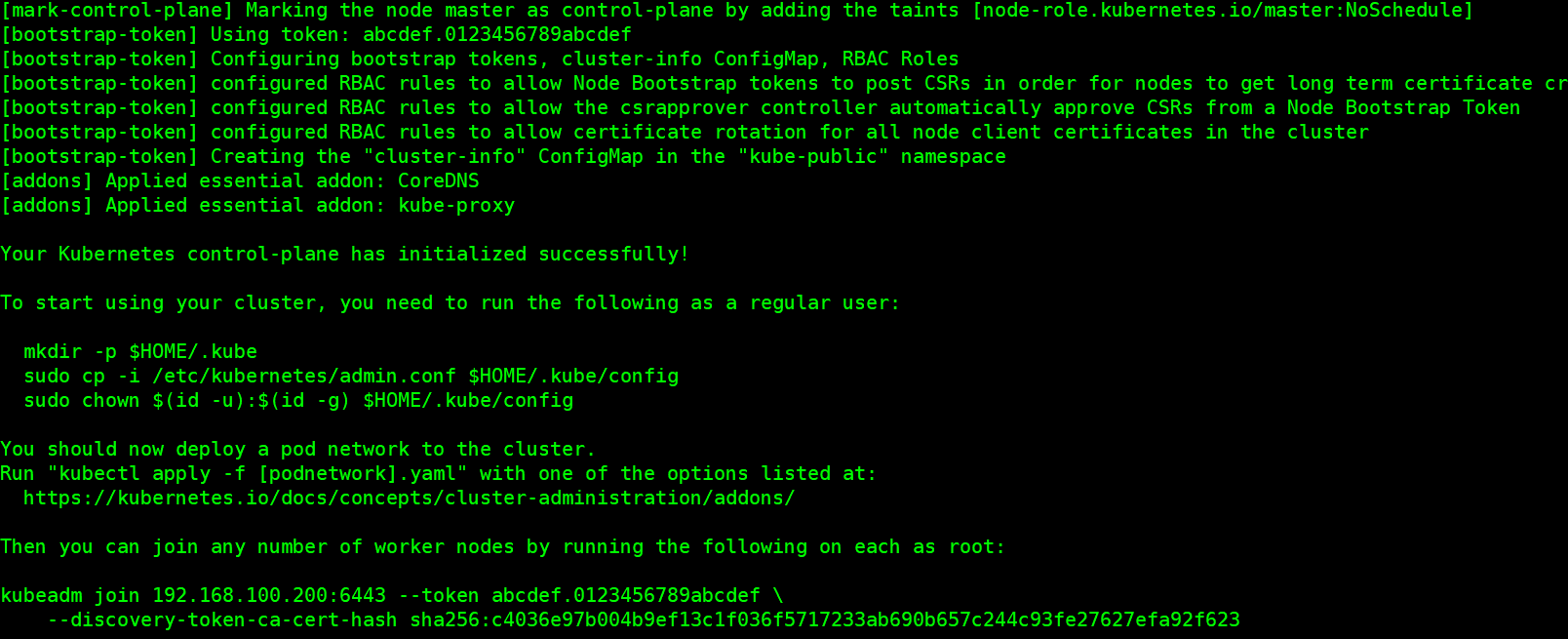

2. Initialize the cluster:

    [root@master kubernetes]# kubeadm init --config kubeadm.yaml

Then run:

    [root@master kubernetes]# mkdir -p $HOME/.kube
    [root@master kubernetes]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    [root@master kubernetes]# chown $(id -u):$(id -g) $HOME/.kube/config
    [root@master kubernetes]# kubectl get cs
    NAME                 AGE
    scheduler            <unknown>
    controller-manager   <unknown>
    etcd-0               <unknown>
    [root@master kubernetes]# kubectl get nodes
    NAME     STATUS     ROLES    AGE     VERSION
    master   NotReady   master   3m31s   v1.16.2

3. Join the worker nodes to the cluster (run on every worker node):

    [root@node1 ~]# kubeadm join 192.168.100.200:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:2b3a8f91a12240d739e2169078c83b75f19898d58fad3347a00fbc0865f7908f
    [preflight] Running pre-flight checks
    [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
    [preflight] Reading configuration from the cluster...
    [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
    [kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.16" ConfigMap in the kube-system namespace
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet-start] Activating the kubelet service
    [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

    This node has joined the cluster:
    * Certificate signing request was sent to apiserver and a response was received.
    * The Kubelet was informed of the new secure connection details.

    Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

    [root@master kubernetes]# kubectl get nodes
    NAME     STATUS     ROLES    AGE     VERSION
    master   NotReady   master   6m59s   v1.16.2
    node1    NotReady   <none>   108s    v1.16.2
    node2    NotReady   <none>   8s      v1.16.2

## Both worker nodes have now joined the cluster.
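The bootstrap token in kubeadm.yaml has a `ttl` of 24h0m0s, so a node added later needs a fresh token and the CA certificate hash again. As a hedged sketch, the value after `sha256:` can be recomputed from the cluster CA certificate with `openssl`; the function name `ca_cert_hash` is an assumption for illustration, and the pipeline assumes an RSA CA key.

```shell
#!/bin/bash
# ca_cert_hash CERT: print the SHA-256 hash of the certificate's public key,
# i.e. the value used as --discovery-token-ca-cert-hash sha256:<hash>.
# (Hypothetical helper name; assumes an RSA key.)
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# On the master, with kubeadm's default CA location:
#   ca_cert_hash /etc/kubernetes/pki/ca.crt
# A new token together with the complete join command can also be printed with:
#   kubeadm token create --print-join-command
```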

5. Deploy kube-proxy on the worker nodes:

    [root@master kubernetes]# kubectl get pod -n kube-system
    NAME                             READY   STATUS              RESTARTS   AGE
    coredns-5644d7b6d9-4hxxk         0/1     Pending             0          91s
    coredns-5644d7b6d9-bbl67         0/1     Pending             0          91s
    etcd-master                      1/1     Running             0          35s
    kube-apiserver-master            1/1     Running             0          43s
    kube-controller-manager-master   1/1     Running             0          25s
    kube-proxy-g9p6p                 1/1     Running             0          91s
    kube-proxy-qvk7d                 0/1     ContainerCreating   0          28s
    kube-proxy-v8wd2                 0/1     ContainerCreating   0          15s
    kube-scheduler-master            1/1     Running             0          46s

    [root@master kubernetes]# docker save -o kube-proxy.tar k8s.gcr.io/kube-proxy:v1.16.0
    [root@master kubernetes]# docker save -o pause.tar k8s.gcr.io/pause:3.1
    [root@master kubernetes]# docker save -o coredns.tar k8s.gcr.io/coredns:1.6.2

    [root@master kubernetes]# ll
    total 86620
    -rwxr-xr-x 1 root root     1319 Nov  3 16:26 docker.sh
    -rw-r--r-- 1 root root      834 Nov  3 17:08 kubeadm.yaml
    -rw------- 1 root root 87930880 Nov  3 17:19 kube-proxy.tar
    -rw------- 1 root root   754176 Nov  3 17:19 pause.tar
    [root@master kubernetes]# for i in node1 node2; do scp kube-proxy.tar pause.tar coredns.tar $i:/root;done

## The worker nodes need the kube-proxy, pause, and coredns images.

Run on each worker node:

    [root@node1 ~]# docker load -i kube-proxy.tar
    [root@node1 ~]# docker load -i coredns.tar
    [root@node1 ~]# docker load -i pause.tar

Check from the master node:

    [root@master kubernetes]# kubectl get pod -n kube-system
    NAME                             READY   STATUS    RESTARTS   AGE
    coredns-5644d7b6d9-4hxxk         0/1     Pending   0          6m42s
    coredns-5644d7b6d9-bbl67         0/1     Pending   0          6m42s
    etcd-master                      1/1     Running   0          5m46s
    kube-apiserver-master            1/1     Running   0          5m54s
    kube-controller-manager-master   1/1     Running   0          5m36s
    kube-proxy-g9p6p                 1/1     Running   0          6m42s
    kube-proxy-qvk7d                 1/1     Running   0          5m39s
    kube-proxy-v8wd2                 1/1     Running   0          5m26s
    kube-scheduler-master            1/1     Running   0          5m57s

## kube-proxy is now running on every node.
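Reading the READY column by eye gets tedious as the pod list grows. A small filter can list only the pods whose ready count differs from the expected count. This is a sketch that assumes the default table layout of `kubectl get pod` (name in column 1, READY in column 2); the function name `not_ready_pods` is hypothetical.

```shell
#!/bin/bash
# not_ready_pods: read `kubectl get pod` table output on stdin and print the
# names of pods whose READY column (such as 0/1) has fewer ready containers
# than expected. The header line is skipped.
not_ready_pods() {
    awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Demo on a few rows copied from the output above; on the master it would be:
#   kubectl get pod -n kube-system | not_ready_pods
not_ready_pods << 'EOF'
NAME                       READY   STATUS    RESTARTS   AGE
coredns-5644d7b6d9-4hxxk   0/1     Pending   0          6m42s
etcd-master                1/1     Running   0          5m46s
kube-proxy-qvk7d           1/1     Running   0          5m39s
EOF
```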

6. Deploy the flannel network:

    [root@master kubernetes]# wget http://k8s.ziji.work/flannel/kube-flannel.yml
    [root@master kubernetes]# docker pull registry.cn-hangzhou.aliyuncs.com/jhr-flannel/flannel:v0.9.0-amd64
    [root@master kubernetes]# docker tag registry.cn-hangzhou.aliyuncs.com/jhr-flannel/flannel:v0.9.0-amd64 quay.io/coreos/flannel:v0.11.0-amd64

Apply the manifest so that the flannel DaemonSet is created (this step is implied by the output below):

    [root@master kubernetes]# kubectl apply -f kube-flannel.yml
    [root@master kubernetes]# kubectl get nodes
    NAME     STATUS   ROLES    AGE     VERSION
    master   Ready    master   4m23s   v1.16.2
    node1    Ready    <none>   2m29s   v1.16.2
    node2    Ready    <none>   2m20s   v1.16.2
    [root@master ~]# kubectl get pod -n kube-system
    NAME                             READY   STATUS    RESTARTS   AGE
    coredns-5644d7b6d9-9gzkp         0/1     Running   0          143m
    coredns-5644d7b6d9-htf7g         0/1     Running   0          143m
    etcd-master                      1/1     Running   0          142m
    kube-apiserver-master            1/1     Running   0          142m
    kube-controller-manager-master   1/1     Running   0          142m
    kube-flannel-ds-amd64-55q22      1/1     Running   0          141m
    kube-flannel-ds-amd64-7hzmd      1/1     Running   0          141m
    kube-flannel-ds-amd64-9x57c      1/1     Running   0          141m
    kube-proxy-h5r4j                 1/1     Running   0          142m
    kube-proxy-sb8cx                 1/1     Running   0          142m
    kube-proxy-xx5vp                 1/1     Running   0          143m
    kube-scheduler-master            1/1     Running   0          142m

## The cluster deployment is now complete.

7. Deploy the cluster dashboard:

    [root@master cert]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

Edit the Service definition in the file:

        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    spec:
      type: NodePort    ## add this field
      ports:
        - port: 443
          targetPort: 8443
      selector:
        k8s-app: kubernetes-dashboard

    [root@master cert]# kubectl apply -f kubernetes-dashboard.yaml

    [root@master kubernetes]# kubectl get svc -n kube-system
    NAME                   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                  AGE
    kube-dns               ClusterIP   10.96.0.10       <none>        53/UDP,53/TCP,9153/TCP   18h
    kubernetes-dashboard   NodePort    10.102.151.131   <none>        443:31596/TCP            6m20s

The dashboard can now be opened in Chrome at https://node_IP:31596
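The NodePort is assigned by the cluster, so the 31596 shown here will differ on another installation. As a small sketch, the port can be cut out of the PORT(S) cell with shell parameter expansion; `node_port` is a hypothetical helper name, and it assumes a single `port:nodePort/protocol` entry.

```shell
#!/bin/bash
# node_port PORTS: extract the NodePort from a PORT(S) cell such as 443:31596/TCP.
node_port() {
    local ports="$1"
    ports="${ports#*:}"     # drop the service port and the colon
    echo "${ports%%/*}"     # drop the protocol suffix
}

node_port "443:31596/TCP"

# The same value can be read from the API without parsing the table:
#   kubectl get svc kubernetes-dashboard -n kube-system -o jsonpath='{.spec.ports[0].nodePort}'
```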

## At this point the browser reports that login is not possible; a certificate has to be created.

# Generate the certificate

    openssl genrsa -out dashboard.key 2048
    openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=serviceip'
    openssl x509 -req -in dashboard.csr -signkey dashboard.key -out dashboard.crt

# Delete the existing certificate secret

    kubectl delete secret kubernetes-dashboard-certs -n kube-system

# Create the new certificate secret

    kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kube-system

# List the pods

    kubectl get pod -n kube-system

# Restart the dashboard pod by deleting it

    kubectl delete pod <pod name> -n kube-system

Now try logging in again.

## Login still fails at this point; a ServiceAccount has to be created and granted permissions:

Create the dashboard admin user:

    kubectl create serviceaccount dashboard-admin -n kube-system

Bind the user to the cluster-admin role:

    kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

8. Get the token used to log in:

    [root@master kubernetes]# kubectl describe secret -n kube-system dashboard-admin
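`kubectl describe` prints the token already decoded, mixed in with other fields. For scripting, the token can instead be read from the Secret's `.data.token` field, which is base64-encoded. The sketch below assumes the service account token Secret that Kubernetes of this version creates automatically; the function name `decode_token` is hypothetical.

```shell
#!/bin/bash
# decode_token: read a base64-encoded value (as stored under a Secret's .data)
# on stdin and print the decoded text.
decode_token() {
    base64 -d
}

# Hypothetical usage on the master. The secret name carries a random suffix,
# so it is looked up from the service account first:
#   name=$(kubectl -n kube-system get sa dashboard-admin -o jsonpath='{.secrets[0].name}')
#   kubectl -n kube-system get secret "$name" -o jsonpath='{.data.token}' | decode_token
```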