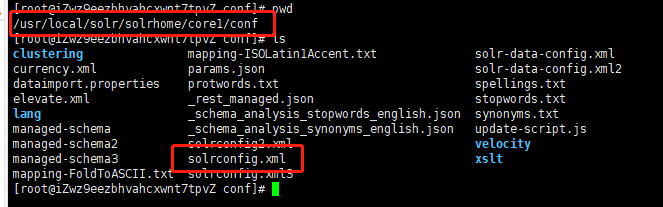

1. Configure solrconfig.xml in the core1 directory of your own solrhome

Open it with vim solrconfig.xml and add the following configuration:

```xml
<requestHandler name="/dataimport" class="solr.DataImportHandler">
  <lst name="defaults">
    <str name="config">solr-data-config.xml</str>
  </lst>
</requestHandler>
```

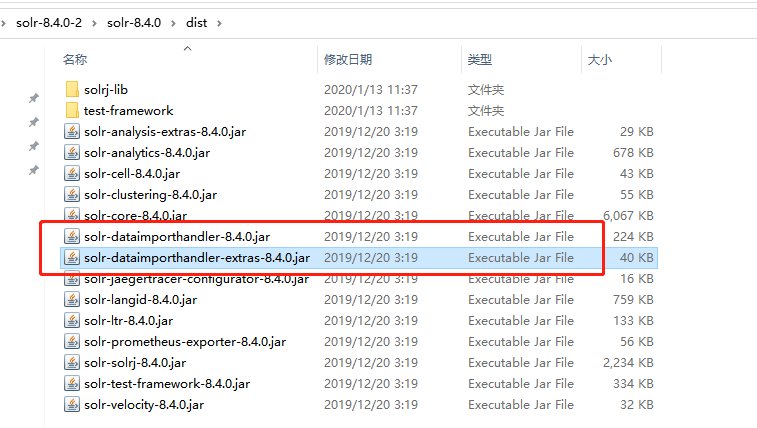

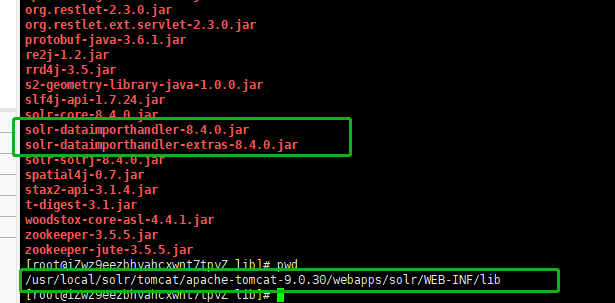
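The handler registered above is driven by HTTP requests to /dataimport with a command parameter (full-import, delta-import, status, and so on). A minimal Python sketch that only builds those URLs, assuming Tomcat serves the solr webapp at localhost:8999 (the port used later in this post) and the core is core1; dataimport_url is a hypothetical helper, not part of Solr:

```python
from urllib.parse import urlencode

# Assumption: Tomcat serves the "solr" webapp on localhost:8999 (adjust to your setup).
SOLR_BASE = "http://localhost:8999/solr"


def dataimport_url(core: str, command: str, **params: str) -> str:
    """Build a request URL for the /dataimport handler registered in solrconfig.xml."""
    query = urlencode({"command": command, **params})
    return f"{SOLR_BASE}/{core}/dataimport?{query}"


if __name__ == "__main__":
    # Full import: clear the index first (clean=true) and commit at the end.
    print(dataimport_url("core1", "full-import", clean="true", commit="true"))
    # Delta import: only rows changed since the last import.
    print(dataimport_url("core1", "delta-import", clean="false", commit="true"))
    # Status: reports whether the handler is idle or busy.
    print(dataimport_url("core1", "status"))
```

Opening the first URL in a browser should have roughly the same effect as running a full import from the admin page.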

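2. Copy solr-dataimporthandler-extras-8.4.0.jar and solr-dataimporthandler-8.4.0.jar (both in the dist directory of the Solr 8.4.0 distribution), plus the MySQL driver mysql-connector-java-5.1.38.jar (you have to download this driver yourself), into the lib directory of the Solr webapp under Tomcat:

/usr/local/solr/tomcat/apache-tomcat-9.0.30/webapps/solr/WEB-INF/lib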
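A missing or misplaced jar usually shows up as an error loading the handler class when the core starts. A small sketch that lists which of the three jars are absent from the lib directory; the path is the one from this post, and missing_jars is a hypothetical helper name:

```python
from pathlib import Path
from typing import Iterable, List

# Lib directory of the Solr webapp inside Tomcat, as used in this post.
LIB_DIR = Path("/usr/local/solr/tomcat/apache-tomcat-9.0.30/webapps/solr/WEB-INF/lib")

# The three jars copied in this step.
REQUIRED_JARS = [
    "solr-dataimporthandler-8.4.0.jar",
    "solr-dataimporthandler-extras-8.4.0.jar",
    "mysql-connector-java-5.1.38.jar",
]


def missing_jars(lib_dir: Path, required: Iterable[str] = REQUIRED_JARS) -> List[str]:
    """Return the required jar names that are not present as files in lib_dir."""
    return [name for name in required if not (lib_dir / name).is_file()]


if __name__ == "__main__":
    missing = missing_jars(LIB_DIR)
    print("all jars present" if not missing else f"missing: {missing}")
```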

3. Edit solr-data-config.xml under solrhome, in /usr/local/solr/solrhome/core1/conf (the file name must match the config value set in solrconfig.xml):

```xml
<?xml version="1.0" encoding="UTF-8" ?>
<!--
 Licensed to the Apache Software Foundation (ASF) under one or more
 contributor license agreements. See the NOTICE file distributed with
 this work for additional information regarding copyright ownership.
 The ASF licenses this file to You under the Apache License, Version 2.0
 (the "License"); you may not use this file except in compliance with
 the License. You may obtain a copy of the License at
 http://www.apache.org/licenses/LICENSE-2.0
 Unless required by applicable law or agreed to in writing, software
 distributed under the License is distributed on an "AS IS" BASIS,
 WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 See the License for the specific language governing permissions and
 limitations under the License.
-->
<dataConfig>
  <!-- Database connection -->
  <dataSource type="JdbcDataSource"
              driver="com.mysql.jdbc.Driver"
              url="jdbc:mysql://localhost:3306/renren"
              user="root"
              password="123456"/>
  <document>
    <!-- Entity: name is the database table; pk is the <uniqueKey>id</uniqueKey> defined in managed-schema -->
    <!-- deletedPkQuery must return the ids of deleted rows (is_deleted=1); delta-import removes them from the index -->
    <entity name="sys_platform" pk="id"
            query="SELECT `id` as id, `parent_id` as parentId, `platform_name` as platformName,
                   `longitude` as longitude, `latitude` as latitude, `en_name` as enName,
                   `gmt_create` as gmtCreate, `gmt_modified` as gmtModified, `is_deleted` as isDeleted,
                   concat( longitude,' ',latitude) as GEO
                   FROM sys_platform where is_deleted=0"
            deltaQuery="select id from sys_platform where gmt_modified > '${dataimporter.last_index_time}'"
            deletedPkQuery="select id from sys_platform where is_deleted=1"
            deltaImportQuery="select `id` as id, `parent_id` as parentId, `platform_name` as platformName,
                   `longitude` as longitude, `latitude` as latitude, `en_name` as enName,
                   `gmt_create` as gmtCreate, `gmt_modified` as gmtModified, `is_deleted` as isDeleted,
                   concat( longitude,' ',latitude) as GEO
                   from sys_platform where id='${dataimporter.delta.id}'">
      <field column="id" name="id"/>
      <field column="parent_id" name="parentId"/>
      <field column="platform_name" name="platformName"/>
      <field column="longitude" name="longitude"/>
      <field column="latitude" name="latitude"/>
      <field column="en_name" name="enName"/>
      <field column="gmt_create" name="gmtCreate"/>
      <field column="gmt_modified" name="gmtModified"/>
      <field column="is_deleted" name="isDeleted"/>
    </entity>
  </document>
</dataConfig>
```

For what each parameter in solr-data-config.xml does, see: https://www.cnblogs.com/davidwang456/p/4744415.html
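During a delta-import, DIH runs deltaQuery with ${dataimporter.last_index_time} replaced by the time of the previous import, then runs deltaImportQuery once per returned id with ${dataimporter.delta.id} filled in, and deletes the documents whose ids deletedPkQuery returns. The sketch below only imitates the placeholder substitution so the generated SQL can be inspected; it is not DIH's own resolver, and the helper resolve plus the sample timestamp and ids are illustrative assumptions:

```python
import re
from typing import Dict

# Two of the queries from solr-data-config.xml (the second trimmed to its WHERE clause).
DELTA_QUERY = "select id from sys_platform where gmt_modified > '${dataimporter.last_index_time}'"
DELTA_IMPORT_QUERY = "select `id` as id from sys_platform where id='${dataimporter.delta.id}'"

_PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def resolve(template: str, variables: Dict[str, str]) -> str:
    """Replace every ${name} with variables[name]; an unknown name raises KeyError."""
    return _PLACEHOLDER.sub(lambda m: variables[m.group(1)], template)


if __name__ == "__main__":
    # Step 1: find ids changed since the last run (illustrative timestamp).
    print(resolve(DELTA_QUERY, {"dataimporter.last_index_time": "2020-01-20 10:00:00"}))
    # Step 2: fetch each changed row by id (illustrative ids).
    for changed_id in ["7", "42"]:
        print(resolve(DELTA_IMPORT_QUERY, {"dataimporter.delta.id": changed_id}))
```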

As for why I use concat( longitude,' ',latitude) as GEO: I need it for spatial search.
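concat( longitude,' ',latitude) yields a space-separated "x y" string, longitude first. As far as I know that is a point format Solr's RPT spatial field types (for example location_rpt) accept, whereas the pt parameter of a {!geofilt} filter takes "lat,lon". A sketch of both strings, assuming GEO is declared as such an RPT field; the coordinates and the helper names geo_value and geofilt_params are hypothetical:

```python
from urllib.parse import urlencode


def geo_value(longitude: float, latitude: float) -> str:
    """Same shape as concat(longitude,' ',latitude): "x y", longitude first."""
    return f"{longitude} {latitude}"


def geofilt_params(field: str, latitude: float, longitude: float, distance_km: float) -> str:
    """Query string for a radius filter; pt is "lat,lon" and d is in kilometres."""
    fq = f"{{!geofilt sfield={field} pt={latitude},{longitude} d={distance_km}}}"
    return urlencode({"q": "*:*", "fq": fq})


if __name__ == "__main__":
    # Hypothetical coordinates, roughly central Beijing.
    print(geo_value(116.4, 39.9))  # value stored in the GEO field
    print(geofilt_params("GEO", 39.9, 116.4, 5))
```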

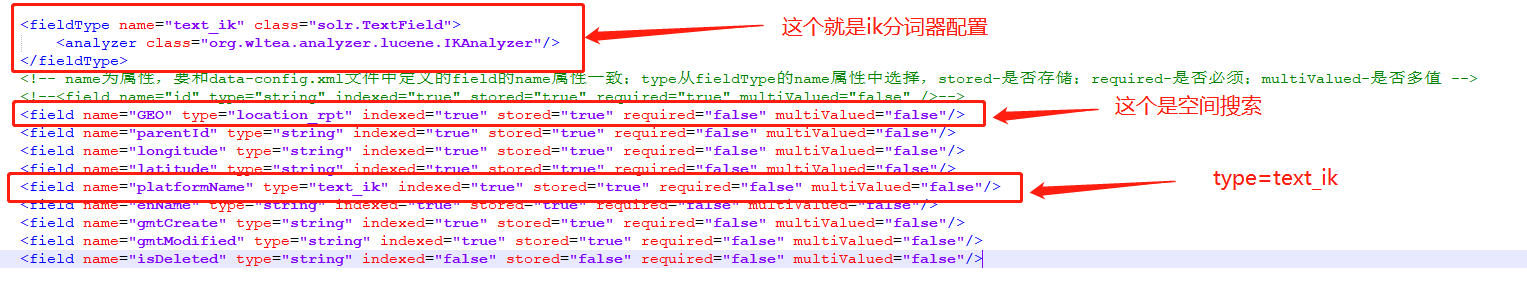

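The fields below must match the fields you define yourself in the managed-schema file.

managed-schema file:

Solr's default managed-schema already defines the id field and declares it as the unique key, so we do not need to configure it ourselves.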

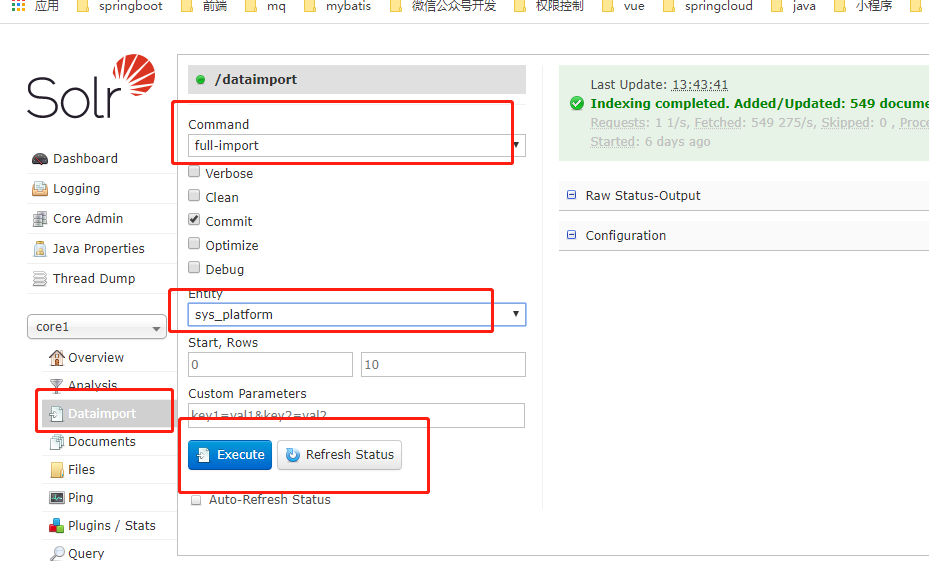
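Because the SQL aliases in the entity's query become the Solr field names, one way to check this step is to compare those aliases with the field names declared in managed-schema. A rough sketch; the regex only handles the simple "expr as name" pattern used here, and the schema field set in the example is a hypothetical stand-in for whatever you defined:

```python
import re
from typing import List, Set

# The entity's query from solr-data-config.xml.
QUERY = (
    "SELECT `id` as id, `parent_id` as parentId, `platform_name` as platformName, "
    "`longitude` as longitude, `latitude` as latitude, `en_name` as enName, "
    "`gmt_create` as gmtCreate, `gmt_modified` as gmtModified, `is_deleted` as isDeleted, "
    "concat( longitude,' ',latitude) as GEO FROM sys_platform where is_deleted=0"
)


def sql_aliases(query: str) -> List[str]:
    """Collect the name following each 'as' (case-insensitive) in the SELECT list."""
    return re.findall(r"\bas\s+(\w+)", query, flags=re.IGNORECASE)


def missing_fields(query: str, schema_fields: Set[str]) -> List[str]:
    """Aliases that have no matching field name in managed-schema."""
    return [alias for alias in sql_aliases(query) if alias not in schema_fields]


if __name__ == "__main__":
    # Hypothetical field names declared in managed-schema (id ships by default).
    schema = {"id", "parentId", "platformName", "longitude", "latitude",
              "enName", "gmtCreate", "gmtModified", "isDeleted", "GEO"}
    print(missing_fields(QUERY, schema) or "every alias has a schema field")
```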

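4. Once everything is configured, you can run the import from the Solr admin page (select core1, then Dataimport). If the Dataimport page loads normally for the core, the configuration succeeded and the data in the MySQL database can be imported.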
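An import can also be monitored with command=status: the JSON response (with wt=json) carries a top-level status of idle or busy, plus a statusMessages object with counters; the key "Total Documents Processed" below is from my memory of DIH responses, so treat it as an assumption. A sketch that only interprets such a response (fetching it is left out); the sample payloads are abbreviated and made up:

```python
import json


def import_finished(response_text: str) -> bool:
    """True when a command=status&wt=json response reports the handler as idle."""
    return json.loads(response_text).get("status") == "idle"


def documents_processed(response_text: str) -> int:
    """Read the 'Total Documents Processed' counter from statusMessages (0 if absent)."""
    messages = json.loads(response_text).get("statusMessages", {})
    return int(messages.get("Total Documents Processed", 0))


if __name__ == "__main__":
    # Abbreviated, made-up responses; real ones carry more keys.
    busy = '{"status": "busy", "statusMessages": {}}'
    done = '{"status": "idle", "statusMessages": {"Total Documents Processed": "12"}}'
    print(import_finished(busy), import_finished(done))  # False True
    print(documents_processed(done))                     # 12
```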

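5. What if changes in the MySQL database should reach Solr automatically? Next, we configure scheduled (near real-time) updates from MySQL into the Solr index.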

(1) Open the web.xml of the Solr webapp in Tomcat, located in /usr/local/solr/tomcat/apache-tomcat-9.0.30/webapps/solr/WEB-INF, and add the following inside the <web-app> element:

```xml
<!-- Solr data import scheduler listener -->
<listener>
  <listener-class>org.apache.solr.handler.dataimport.scheduler.ApplicationListener</listener-class>
</listener>
```
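Conceptually, this listener starts a timer when the Solr webapp starts and, every interval minutes, sends the delta-import request for each configured core. The loop below only models that behaviour, with an injectable sleep so it can be tried without waiting; it is not the scheduler jar's code, and run_every is a hypothetical name:

```python
import time
from typing import Callable, List


def run_every(task: Callable[[], None], interval_minutes: float, max_runs: int,
              sleep: Callable[[float], None] = time.sleep) -> int:
    """Wait one interval, call task(), repeat max_runs times; return the number of runs."""
    runs = 0
    while runs < max_runs:
        sleep(interval_minutes * 60)  # one interval, in seconds
        task()
        runs += 1
    return runs


if __name__ == "__main__":
    waited: List[float] = []
    fired: List[str] = []
    # The fake sleep records the delay instead of blocking, so this finishes instantly.
    run_every(lambda: fired.append("delta-import"), interval_minutes=1, max_runs=3,
              sleep=waited.append)
    print(waited, fired)  # [60, 60, 60] ['delta-import', 'delta-import', 'delta-import']
```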

(2) Add the jar: find apache-solr-dataimportscheduler.jar online. Mine is a recompiled build named apache-solr-dataimportscheduler-recompile.jar; the file name does not matter.

Then copy it into Tomcat: /usr/local/solr/tomcat/apache-tomcat-9.0.30/webapps/solr/WEB-INF/lib

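(3) Under the solrhome directory, create a conf folder (mkdir conf) and create the file dataimport.properties inside it, for example with vim conf/dataimport.properties (mkdir would create a directory, not a file). Note: this conf folder must sit directly under solrhome, at the same level as the Solr core directory core1, i.e. /usr/local/solr/solrhome/conf/dataimport.properties. Add the following content to dataimport.properties: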
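This location is easy to mix up, because by default DIH also keeps its own dataimport.properties (holding last_index_time) inside the core's conf directory. A small sketch that derives the scheduler file's expected path from the solrhome used in this post and checks the layout; scheduler_properties_path and check_layout are hypothetical helpers:

```python
from pathlib import Path

SOLR_HOME = Path("/usr/local/solr/solrhome")  # solrhome used in this post


def scheduler_properties_path(solr_home: Path) -> Path:
    """Scheduler config: <solrhome>/conf/dataimport.properties, a sibling of core1/."""
    return solr_home / "conf" / "dataimport.properties"


def check_layout(solr_home: Path, core: str = "core1") -> bool:
    """True if the scheduler file exists and the core directory sits next to conf/."""
    return scheduler_properties_path(solr_home).is_file() and (solr_home / core).is_dir()


if __name__ == "__main__":
    print(scheduler_properties_path(SOLR_HOME))
    print("layout ok" if check_layout(SOLR_HOME) else "layout not as expected")
```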

```properties
#################################################
#                                               #
#       dataimport scheduler properties         #
#                                               #
#################################################

#  to sync or not to sync
#  1 - active; anything else - inactive
syncEnabled=1

#  which cores to schedule
#  in a multi-core environment you can decide which cores you want synchronized
#  leave empty or comment it out if using single-core deployment
#syncCores=game,resource
#  Solr cores to sync: list your own core names here (for the setup above, core1)
syncCores=appDbDisasterShelter,appDbMaterialAddress,appDbProtectionobject,appDbRisk,appDbTeam

#  solr server name or IP address
#  [defaults to localhost if empty]
server=localhost

#  solr server port
#  [defaults to 80 if empty]
port=8999

#  schedule interval
#  number of minutes between two runs
#  [defaults to 30 if empty]
interval=1

#  application name/context
#  [defaults to current ServletContextListener's context (app) name]
webapp=solr

#  URL params [mandatory]
#  remainder of URL
params=/dataimport?command=delta-import&clean=false&commit=true

#  interval between full index rebuilds, in minutes; default 7200, i.e. 5 days
#  empty, 0, or commented out: never rebuild the index
#  (1 here means a full rebuild every minute)
reBuildIndexInterval=1

#  parameters for the full rebuild
reBuildIndexParams=/dataimport?command=full-import&clean=true&commit=true

#  start time for the rebuild interval; first actual run = reBuildIndexBeginTime + reBuildIndexInterval*60*1000
#  two formats: 2012-04-11 03:10:00 or 03:10:00; the latter is completed with the date on which the service started
reBuildIndexBeginTime=03:10:00
```
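To see how these keys fit together, here is a sketch that parses the file and composes one request URL per core as http://{server}:{port}/{webapp}/{core}{params} (my reading of how the scheduler assembles it, not taken from its source), plus the first full-rebuild time per the formula in the file's comments (reBuildIndexBeginTime + reBuildIndexInterval minutes). The parser ignores Java properties escapes and line continuations, the sample uses core1 instead of the multi-core list, and all function names are hypothetical:

```python
from datetime import date, datetime, timedelta
from typing import Dict, List


def parse_properties(text: str) -> Dict[str, str]:
    """Minimal key=value reader: skips blanks and # comments; no escapes or continuations."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)  # params values contain '=' themselves
        props[key.strip()] = value.strip()
    return props


def sync_urls(props: Dict[str, str]) -> List[str]:
    """One delta-import URL per core in syncCores; webapp and params are required here."""
    server = props.get("server") or "localhost"  # documented default
    port = props.get("port") or "80"             # documented default
    cores = [c.strip() for c in props.get("syncCores", "").split(",") if c.strip()]
    return [f"http://{server}:{port}/{props['webapp']}/{core}{props['params']}" for core in cores]


def first_rebuild(props: Dict[str, str], service_start: date) -> datetime:
    """reBuildIndexBeginTime + reBuildIndexInterval minutes; a bare HH:MM:SS gets the start date."""
    begin = props["reBuildIndexBeginTime"]
    if " " not in begin:
        begin = f"{service_start.isoformat()} {begin}"
    start = datetime.strptime(begin, "%Y-%m-%d %H:%M:%S")
    return start + timedelta(minutes=int(props["reBuildIndexInterval"]))


# Trimmed sample using this post's core1.
SAMPLE = """
syncCores=core1
server=localhost
port=8999
webapp=solr
params=/dataimport?command=delta-import&clean=false&commit=true
reBuildIndexInterval=1
reBuildIndexBeginTime=03:10:00
"""

if __name__ == "__main__":
    props = parse_properties(SAMPLE)
    print(sync_urls(props))
    print(first_rebuild(props, date(2020, 1, 20)))  # 2020-01-20 03:11:00
```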

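6. Restart Tomcat, then add a row to the database; after the next scheduled run (with interval=1, within about a minute) the new record can be queried in the Solr admin.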
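To confirm a new row arrived without watching the admin page, one could poll the core's /select handler until the row's id shows up, allowing at least one scheduler interval. A sketch with the HTTP call injected as a function so it stays testable; wait_for_doc, doc_query_url, and the base URL are assumptions, and the example uses a fake Solr reply:

```python
import json
from typing import Callable
from urllib.parse import urlencode

SELECT_URL = "http://localhost:8999/solr/core1/select"  # assumed core URL


def doc_query_url(doc_id: str) -> str:
    """Query URL that matches one document by its unique id."""
    query = urlencode({"q": f"id:{doc_id}", "wt": "json"})
    return f"{SELECT_URL}?{query}"


def wait_for_doc(doc_id: str, fetch: Callable[[str], str], attempts: int,
                 sleep: Callable[[float], None], delay_seconds: float = 30) -> bool:
    """Poll until response.numFound > 0 or attempts run out; no sleep after the last try."""
    url = doc_query_url(doc_id)
    for attempt in range(attempts):
        if json.loads(fetch(url))["response"]["numFound"] > 0:
            return True
        if attempt < attempts - 1:
            sleep(delay_seconds)
    return False


if __name__ == "__main__":
    # Fake Solr: numFound is 0 on the first two polls and 1 on the third.
    replies = iter([0, 0, 1])

    def fake_fetch(url: str) -> str:
        return json.dumps({"response": {"numFound": next(replies), "docs": []}})

    print(wait_for_doc("42", fake_fetch, attempts=5, sleep=lambda s: None))  # True
```

For a real check, pass lambda u: urllib.request.urlopen(u).read().decode() as fetch and time.sleep as sleep.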

The Solr toolkit I used to get this working, with the key points: https://download.csdn.net/download/caohanren/12116984