Introduction

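This chapter shows how to add custom counters to a MapReduce job. The counters aggregate information from all tasks, and their final values can be viewed in the MapReduce web UI.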

Preparation

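Dataset: ufo (60,000 records). The dataset consists of UFO sighting records with the fields listed below. The fields in each record are separated by tab characters. See http://www.cnblogs.com/cafebabe-yun/p/8679994.html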

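- Sighting date: when the UFO sighting took place

- Recorded date: when the sighting was reported

- Location: where the sighting took place

- Shape: the shape of the UFO

- Duration: how long the sighting lasted

- Description: a short description of the sighting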

Example (fields are tab-separated):

19950915 19950915 Redmond, WA 6 min. Young man w/ 2 co-workers witness tiny, distinctly white round disc drifting slowly toward NE. Flew in dir. 90 deg. to winds.
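As a minimal sketch of the record format above, the class below splits one line into its six fields. It assumes exactly six tab-separated fields per valid record. `UfoRecordParser` and the sample line are hypothetical and are not part of the original code.

```java
// Hypothetical helper (not part of the original code): splits one ufo.tsv
// record into its six tab-separated fields.
public class UfoRecordParser {

    static final String[] FIELD_NAMES = {
        "Sighting date", "Recorded date", "Location", "Shape", "Duration", "Description"
    };

    // Returns the six fields, or null if the line does not have exactly six.
    // Note: String.split drops trailing empty strings, just like the mapper's split call.
    public static String[] parse(String line) {
        String[] fields = line.split("\t");
        return fields.length == FIELD_NAMES.length ? fields : null;
    }

    public static void main(String[] args) {
        // Made-up record, for illustration only.
        String line = "20000101\t20000102\tPhoenix, AZ\tdisk\t5 min.\tBright light hovering.";
        String[] fields = parse(line);
        for (int i = 0; i < fields.length; i++) {
            System.out.println(FIELD_NAMES[i] + ": " + fields[i]);
        }
    }
}
```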

Shared data: the mapping from US state abbreviations to full state names

Data (one abbreviation and its full name per line, separated by a tab):

```
AL	Alabama
AK	Alaska
AZ	Arizona
AR	Arkansas
CA	California
```
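UFOLocation3 loads this file into a map from abbreviation to full name. The sketch below does the same thing outside Hadoop. It reads from any `Reader`, so it can be tried on an in-memory string. It assumes one tab-separated pair per line, and the class name `StateTableLoader` is hypothetical.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper: loads "AL<TAB>Alabama" style lines into a map.
public class StateTableLoader {

    public static Map<String, String> load(Reader source) throws IOException {
        Map<String, String> states = new HashMap<String, String>();
        BufferedReader reader = new BufferedReader(source);
        try {
            String line;
            while ((line = reader.readLine()) != null) {
                String[] parts = line.split("\t");
                if (parts.length == 2) {          // skip blank or malformed lines
                    states.put(parts[0].trim(), parts[1].trim());
                }
            }
        } finally {
            reader.close();
        }
        return states;
    }

    public static void main(String[] args) throws IOException {
        String data = "AL\tAlabama\nAK\tAlaska\nAZ\tArizona\nAR\tArkansas\nCA\tCalifornia\n";
        Map<String, String> states = load(new StringReader(data));
        System.out.println(states.get("AZ"));
    }
}
```

Skipping malformed lines is a choice made for this sketch. The original `setupStateMap` assumes every line has two fields.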

Using custom counters

- Upload the dataset ufo.tsv to HDFS:

hadoop dfs -put ufo.tsv ufo.tsv

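- Upload the shared data file to HDFS in the same way. UFOLocation3.java loads it from /user/root/states.txt, so when running as root, use:

hadoop dfs -put states.txt states.txt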

- Create the file UFOCountingRecordValidationMapper.java with the following code:

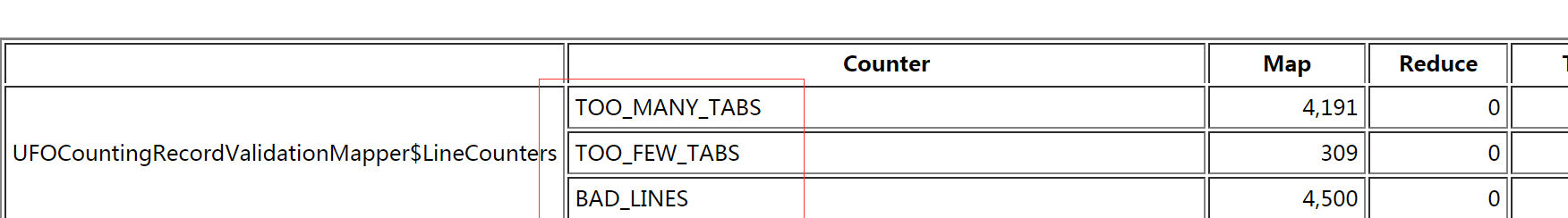

```java
import java.io.IOException;
import org.apache.hadoop.io.*;
import org.apache.hadoop.mapred.*;
import org.apache.hadoop.mapred.lib.*;

// Passes on only well-formed records (exactly six tab-separated fields)
// and counts the malformed ones with custom counters.
public class UFOCountingRecordValidationMapper extends MapReduceBase
        implements Mapper<LongWritable, Text, LongWritable, Text> {

    // Each enum constant is a custom counter; the enum itself names the counter group.
    public enum LineCounters {
        BAD_LINES,
        TOO_MANY_TABS,
        TOO_FEW_TABS
    }

    @Override
    public void map(LongWritable key, Text value,
                    OutputCollector<LongWritable, Text> output, Reporter reporter)
            throws IOException {
        String line = value.toString();
        if (validate(line, reporter)) {
            output.collect(key, value);
        }
    }

    private boolean validate(String line, Reporter reporter) {
        String[] words = line.split("\t");
        if (words.length != 6) {
            if (words.length < 6) {
                reporter.incrCounter(LineCounters.TOO_FEW_TABS, 1);
            } else {
                reporter.incrCounter(LineCounters.TOO_MANY_TABS, 1);
            }
            reporter.incrCounter(LineCounters.BAD_LINES, 1);

            // Update the task status every 10 bad lines seen by this task.
            if ((reporter.getCounter(LineCounters.BAD_LINES).getCounter() % 10) == 0) {
                reporter.setStatus("Got 10 bad lines.");
                System.err.println("Read another 10 bad lines.");
            }
            return false;
        }
        return true;
    }
}
```
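`Reporter` is only available inside a running task. To try the counting rule in `validate()` locally, the sketch below uses a plain `EnumMap` in place of the job's counters. It is an illustration, not Hadoop's counter implementation, and the class and method names are hypothetical.

```java
import java.util.EnumMap;
import java.util.Map;

// Hypothetical local stand-in for the mapper's counter logic (no Hadoop needed).
public class LineCounterSketch {

    enum LineCounters { BAD_LINES, TOO_MANY_TABS, TOO_FEW_TABS }

    private final Map<LineCounters, Long> counters =
            new EnumMap<LineCounters, Long>(LineCounters.class);

    // Plays the role of Reporter.incrCounter(counter, 1).
    private void incr(LineCounters c) {
        Long old = counters.get(c);
        counters.put(c, old == null ? 1L : old + 1);
    }

    public long get(LineCounters c) {
        Long v = counters.get(c);
        return v == null ? 0L : v;
    }

    // Same rule as validate(): a good line has exactly six tab-separated fields.
    public boolean validate(String line) {
        String[] words = line.split("\t");
        if (words.length == 6) {
            return true;
        }
        incr(words.length < 6 ? LineCounters.TOO_FEW_TABS : LineCounters.TOO_MANY_TABS);
        incr(LineCounters.BAD_LINES);
        return false;
    }

    public static void main(String[] args) {
        LineCounterSketch s = new LineCounterSketch();
        s.validate("a\tb\tc\td\te\tf");      // valid
        s.validate("a\tb\tc");               // too few tabs
        s.validate("a\tb\tc\td\te\tf\tg");   // too many tabs
        for (LineCounters c : LineCounters.values()) {
            System.out.println(c + " = " + s.get(c));
        }
    }
}
```

In the real job, Hadoop sums each task's counter values, and the job-wide totals are what the web UI shows.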

- Create the file UFOLocation3.java with the following code:

```java
import java.io.*;
import java.util.*;
import java.net.*;
import java.util.regex.*;
import org.apache.hadoop.conf.*;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.filecache.DistributedCache;
import org.apache.hadoop.io.*;
import org.apache.hadoop.mapred.*;
import org.apache.hadoop.mapred.lib.*;

public class UFOLocation3 {

    public static class MapClass extends MapReduceBase
            implements Mapper<LongWritable, Text, Text, LongWritable> {

        private final static LongWritable one = new LongWritable(1);
        // Two letters followed only by non-letters up to the end of the field,
        // e.g. the "WA" in "Redmond, WA".
        private static Pattern locationPattern = Pattern.compile("[a-zA-Z]{2}[^a-zA-Z]*$");
        private Map<String, String> stateNames;

        @Override
        public void configure(JobConf job) {
            try {
                // states.txt was added to the distributed cache in main().
                Path[] cacheFiles = DistributedCache.getLocalCacheFiles(job);
                setupStateMap(cacheFiles[0].toString());
            } catch (IOException e) {
                System.err.println("Error reading state file.");
                System.exit(1);
            }
        }

        private void setupStateMap(String fileName) throws IOException {
            Map<String, String> stateCache = new HashMap<String, String>();
            BufferedReader reader = new BufferedReader(new FileReader(fileName));
            try {
                String line;
                while ((line = reader.readLine()) != null) {
                    String[] splits = line.split("\t");
                    stateCache.put(splits[0], splits[1]);
                }
            } finally {
                reader.close();
            }
            stateNames = stateCache;
        }

        @Override
        public void map(LongWritable key, Text value,
                        OutputCollector<Text, LongWritable> output, Reporter reporter)
                throws IOException {
            String line = value.toString();
            String[] fields = line.split("\t");
            // Safe: lines without exactly six fields were dropped by the validation mapper.
            String location = fields[2].trim();
            if (location.length() >= 2) {
                Matcher matcher = locationPattern.matcher(location);
                if (matcher.find()) {
                    int start = matcher.start();
                    String state = location.substring(start, start + 2);
                    output.collect(new Text(lookupState(state.toUpperCase())), one);
                }
            }
        }

        // Falls back to the abbreviation when states.txt has no entry for it.
        private String lookupState(String state) {
            String fullName = stateNames.get(state);
            if (fullName == null || "".equals(fullName)) {
                fullName = state;
            }
            return fullName;
        }
    }

    public static void main(String... args) throws Exception {
        Configuration config = new Configuration();
        JobConf conf = new JobConf(config, UFOLocation3.class);
        conf.setJobName("UFOLocation3");
        DistributedCache.addCacheFile(new URI("/user/root/states.txt"), conf);

        conf.setOutputKeyClass(Text.class);
        conf.setOutputValueClass(LongWritable.class);

        // The validation mapper runs first and passes only good lines on to MapClass.
        JobConf mapconf1 = new JobConf(false);
        ChainMapper.addMapper(conf, UFOCountingRecordValidationMapper.class,
                LongWritable.class, Text.class, LongWritable.class, Text.class,
                true, mapconf1);
        JobConf mapconf2 = new JobConf(false);
        ChainMapper.addMapper(conf, MapClass.class,
                LongWritable.class, Text.class, Text.class, LongWritable.class,
                true, mapconf2);
        conf.setMapperClass(ChainMapper.class);
        conf.setCombinerClass(LongSumReducer.class);
        conf.setReducerClass(LongSumReducer.class);

        FileInputFormat.setInputPaths(conf, args[0]);
        FileOutputFormat.setOutputPath(conf, new Path(args[1]));
        JobClient.runJob(conf);
    }
}
```
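The location-to-state step in `MapClass.map` and `lookupState` can be tried without a cluster. The sketch below reuses the same regular expression and fallback rule. The class name `StateExtractionSketch` and the sample locations are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical standalone version of the state extraction done in MapClass.
public class StateExtractionSketch {

    // Same pattern as UFOLocation3: two letters, then only non-letters to the end.
    private static final Pattern LOCATION = Pattern.compile("[a-zA-Z]{2}[^a-zA-Z]*$");

    // Returns the full state name, the upper-cased abbreviation if the map has
    // no entry for it, or null if no abbreviation is found.
    public static String extractState(String location, Map<String, String> names) {
        String trimmed = location.trim();
        if (trimmed.length() < 2) {
            return null;
        }
        Matcher m = LOCATION.matcher(trimmed);
        if (!m.find()) {
            return null;
        }
        String abbrev = trimmed.substring(m.start(), m.start() + 2).toUpperCase();
        String full = names.get(abbrev);
        return (full == null || full.isEmpty()) ? abbrev : full;
    }

    public static void main(String[] args) {
        Map<String, String> names = new HashMap<String, String>();
        names.put("AZ", "Arizona");
        names.put("CA", "California");
        System.out.println(extractState("Phoenix, AZ", names));  // Arizona
        System.out.println(extractState("Redmond, WA", names));  // WA (no entry in the map)
    }
}
```

The second call shows why the job output can contain bare abbreviations: any state missing from states.txt is counted under its two-letter code.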

- Compile the two files (this assumes the Hadoop jars are already on the CLASSPATH):

javac UFOCountingRecordValidationMapper.java UFOLocation3.java

- Package the compiled classes into a jar file:

jar cvf ufo3.jar UFO*class

- Run the jar on Hadoop:

hadoop jar ufo3.jar UFOLocation3 ufo.tsv output

- View the output:

hadoop dfs -cat output/part-00000
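Each line of part-00000 holds a key and its total, separated by a tab. The key is a state name, or an abbreviation that had no entry in states.txt. The sketch below imitates what `LongSumReducer` does with the (state, 1) pairs emitted by `MapClass`. The input list is made up and the class name is hypothetical. A `TreeMap` is used because a single reducer writes its keys in sorted order.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical local imitation of summing a count of 1 per occurrence of each key.
public class StateCountSketch {

    public static Map<String, Long> count(List<String> states) {
        Map<String, Long> totals = new TreeMap<String, Long>();  // sorted by key
        for (String s : states) {
            Long old = totals.get(s);
            totals.put(s, old == null ? 1L : old + 1);
        }
        return totals;
    }

    public static void main(String[] args) {
        Map<String, Long> totals = count(Arrays.asList("Arizona", "California", "Arizona"));
        for (Map.Entry<String, Long> e : totals.entrySet()) {
            // Same "key<TAB>value" layout as the lines in part-00000.
            System.out.println(e.getKey() + "\t" + e.getValue());
        }
        // prints:
        // Arizona	2
        // California	1
    }
}
```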

- View the statistics in the MapReduce web UI:

- Click the corresponding job to open its statistics page.


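- Check the counters: the custom counters BAD_LINES, TOO_MANY_TABS and TOO_FEW_TABS are listed with the job's other counters.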