环境

虚拟机:VMware 10

Linux版本:CentOS-6.5-x86_64

客户端:Xshell4

FTP:Xftp4

jdk1.8

scala-2.10.4(依赖jdk1.8)

spark-1.6

一、搭建集群

组建方案:

master:PCS101,slave:PCS102、PCS103

搭建方式一:Standalone

步骤一:解压文件 改名

[root@PCS101 src]# tar -zxvf spark-1.6.0-bin-hadoop2.6.tgz -C /usr/local [root@PCS101 local]# mv spark-1.6.0-bin-hadoop2.6 spark-1.6.0

步骤二:修改配置文件

1、slaves.template 设置从节点

[root@PCS101 conf]# cd /usr/local/spark-1.6.0/conf && mv slaves.template slaves && vi slaves PCS102 PCS103

2、spark-config.sh 设置java_home

export JAVA_HOME=/usr/local/jdk1.8.0_65

3、spark-env.sh

[root@PCS101 conf]# mv spark-env.sh.template spark-env.sh && vi spark-env.sh #SPARK_MASTER_IP:master的ip export SPARK_MASTER_IP=PCS101 #SPARK_MASTER_PORT:提交任务的端口,默认是7077 export SPARK_MASTER_PORT=7077 #SPARK_WORKER_CORES:每个worker从节点能够支配的core的个数 export SPARK_WORKER_CORES=2 #SPARK_WORKER_MEMORY:每个worker从节点能够支配的内存数 export SPARK_WORKER_MEMORY=3g #SPARK_MASTER_WEBUI_PORT:sparkwebUI端口 默认8080 或者修改spark-master.sh export SPARK_MASTER_WEBUI_PORT=8080

步骤三、分发spark到另外两个节点

[root@PCS101 local]# scp -r /usr/local/spark-1.6.0 root@PCS102:`pwd` [root@PCS101 local]# scp -r /usr/local/spark-1.6.0 root@PCS103:`pwd`

步骤四:启动集群

[root@PCS101 sbin]# /usr/local/spark-1.6.0/sbin/start-all.sh

步骤五:关闭集群

[root@PCS101 sbin]# /usr/local/spark-1.6.0/sbin/stop-all.sh

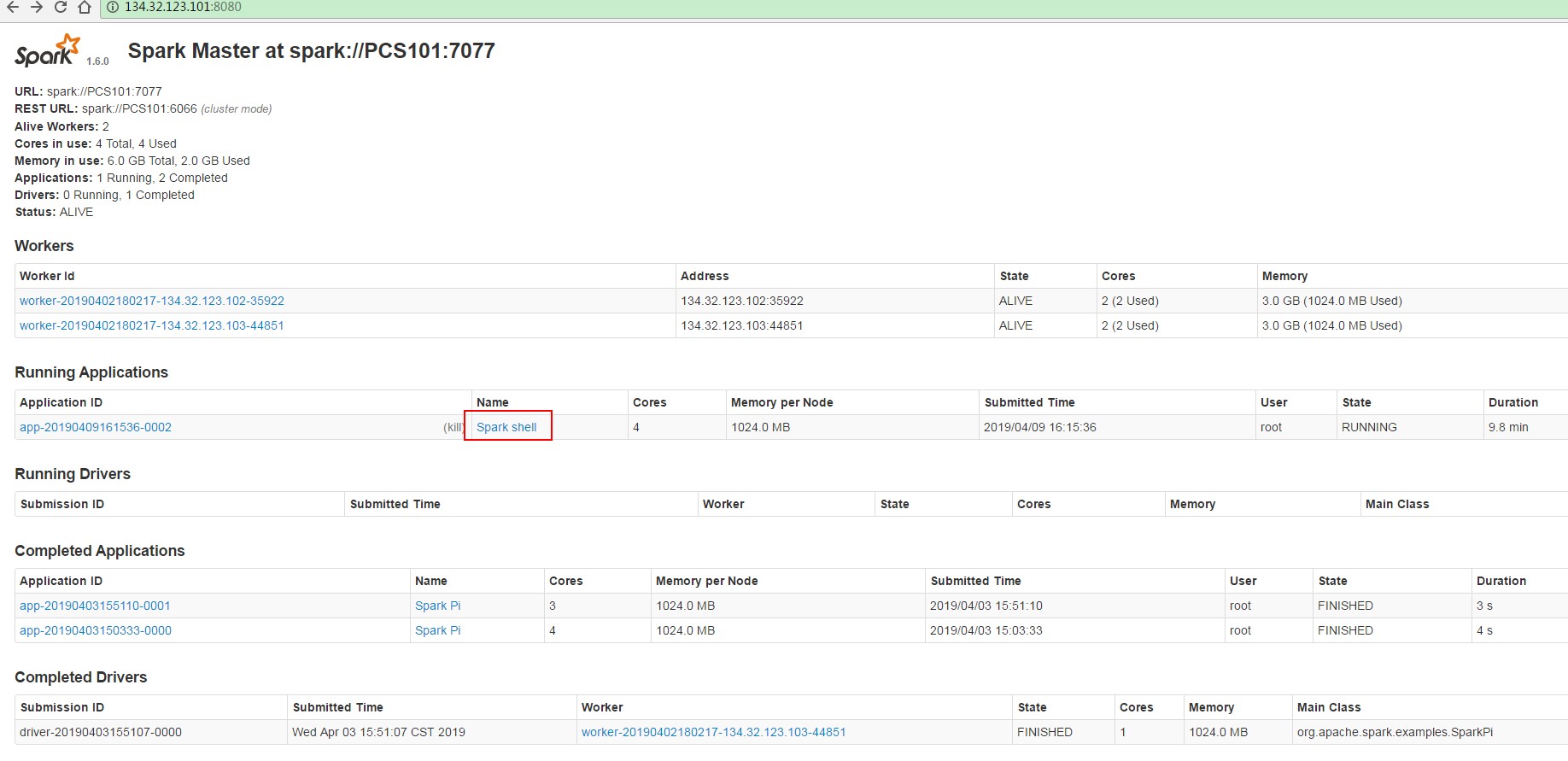

master界面:端口默认8080

job界面,端口是4040:

步骤六:搭建客户端

将spark安装包原封不动的拷贝到一个新的节点上,然后,在新的节点上提交任务即可

提交任务测试:

[root@PCS101 bin]# ./spark-submit Usage: spark-submit [options] <app jar | python file> [app arguments] Usage: spark-submit --kill [submission ID] --master [spark://...] Usage: spark-submit --status [submission ID] --master [spark://...] Options: --master MASTER_URL spark://host:port, mesos://host:port, yarn, or local. --deploy-mode DEPLOY_MODE Whether to launch the driver program locally ("client") or on one of the worker machines inside the cluster ("cluster") (Default: client). --class CLASS_NAME Your application's main class (for Java / Scala apps). --name NAME A name of your application. --jars JARS Comma-separated list of local jars to include on the driver and executor classpaths. --packages Comma-separated list of maven coordinates of jars to include on the driver and executor classpaths. Will search the local maven repo, then maven central and any additional remote repositories given by --repositories. The format for the coordinates should be groupId:artifactId:version. --exclude-packages Comma-separated list of groupId:artifactId, to exclude while resolving the dependencies provided in --packages to avoid dependency conflicts. --repositories Comma-separated list of additional remote repositories to search for the maven coordinates given with --packages. --py-files PY_FILES Comma-separated list of .zip, .egg, or .py files to place on the PYTHONPATH for Python apps. --files FILES Comma-separated list of files to be placed in the working directory of each executor. --conf PROP=VALUE Arbitrary Spark configuration property. --properties-file FILE Path to a file from which to load extra properties. If not specified, this will look for conf/spark-defaults.conf. --driver-memory MEM Memory for driver (e.g. 1000M, 2G) (Default: 1024M). --driver-java-options Extra Java options to pass to the driver. --driver-library-path Extra library path entries to pass to the driver. --driver-class-path Extra class path entries to pass to the driver. Note that jars added with --jars are automatically included in the classpath. --executor-memory MEM Memory per executor (e.g. 1000M, 2G) (Default: 1G). --proxy-user NAME User to impersonate when submitting the application. --help, -h Show this help message and exit --verbose, -v Print additional debug output --version, Print the version of current Spark Spark standalone with cluster deploy mode only: --driver-cores NUM Cores for driver (Default: 1). Spark standalone or Mesos with cluster deploy mode only: --supervise If given, restarts the driver on failure. --kill SUBMISSION_ID If given, kills the driver specified. --status SUBMISSION_ID If given, requests the status of the driver specified. Spark standalone and Mesos only: --total-executor-cores NUM Total cores for all executors. Spark standalone and YARN only: --executor-cores NUM Number of cores per executor. (Default: 1 in YARN mode, or all available cores on the worker in standalone mode) YARN-only: --driver-cores NUM Number of cores used by the driver, only in cluster mode (Default: 1). --queue QUEUE_NAME The YARN queue to submit to (Default: "default"). --num-executors NUM Number of executors to launch (Default: 2). --archives ARCHIVES Comma separated list of archives to be extracted into the working directory of each executor. --principal PRINCIPAL Principal to be used to login to KDC, while running on secure HDFS. --keytab KEYTAB The full path to the file that contains the keytab for the principal specified above. This keytab will be copied to the node running the Application Master via the Secure Distributed Cache, for renewing the login tickets and the delegation tokens periodically.

(1)三台节点选择任意一个节点提交:

#--master指定master 四种方式:

standlone方式:spark://host:port;

mesos方式:mesos://host:port;

yarn方式:yarn

local方式:local

#--class指定任务类名以及所在的jar包路径

#--deploy-mode 提交任务模式:client客户端模式 cluster 集群模式

示例:SparkPi:后面的10000是SparkPi的参数

[root@PCS101 bin]# /usr/local/spark-1.6.0/bin/spark-submit --master spark://PCS101:7077 --class org.apache.spark.examples.SparkPi ../lib/spark-examples-1.6.0-hadoop2.6.0.jar 10000

(2)使用单独节点作为客户端提交任务:

将PCS101上spark-1.6.0安装目录整个拷贝到新节点PCS104上,然后在PCS104上提交任务,效果是一样的;

PCS104与spark集群无关的,仅仅是上面有spark的提交任务脚本,可以将PCS104当作spark客户端。

搭建方式二:Yarn

注意:如果数据来源于HDFS或者需要使用YARN提交任务,那么spark需要依赖HDFS,否则两者没有联系

(1)步骤一、二、三、六同standalone

(2)在客户端中配置:

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

提交任务:

[root@PCS101 bin]# /usr/local/spark-1.6.0/bin/spark-submit --master yarn --class org.apache.spark.examples.SparkPi ../lib/spark-examples-1.6.0-hadoop2.6.0.jar 10000

二、spark-shell

SparkShell是Spark自带的一个快速原型开发工具,也可以说是Spark的scala REPL(Read-Eval-Print-Loop),即交互式shell。支持使用scala语言来进行Spark的交互式编程。

1、运行

步骤一:启动standalone集群和HDFS集群(PCS102上HDFS伪分布),之后启动spark-shell

[root@PCS101 bin]# /usr/local/spark-1.6.0/bin/spark-shell --master spark://PCS101:7077

步骤二:运行wordcount

scala>sc.textFile("hdfs://PCS102:9820/spark/test/wc.txt").flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).foreach(println)

2、配置historyServer

2、配置historyServer

(2.1)临时配置,对本次提交的应用程序起作用

[root@PCS101 bin]# /usr/local/spark-1.6.0/bin/spark-shell --master spark://PCS101:7077 --name myapp1 --conf spark.eventLog.enabled=true --conf spark.eventLog.dir=hdfs://PCS102:9820/spark/test

停止程序,在Web UI中Completed Applications对应的ApplicationID中能查看history。

(2.2)spark-default.conf配置文件中配置HistoryServer,对所有提交的Application都起作用

如果想看历史事件日志,可以新搭建一个HistoryServer专门用来看历史应用日志,跟当前的集群没有关系,

这里我们新启动客户端PCS104节点,进入../spark-1.6.0/conf/spark-defaults.conf最后加入:

//开启记录事件日志的功能 spark.eventLog.enabled true //设置事件日志存储的目录 spark.eventLog.dir hdfs://PCS102:9820/spark/test //设置HistoryServer加载事件日志的位置 恢复查看 spark.history.fs.logDirectory hdfs://PCS102:9820/spark/test //日志优化选项,压缩日志 spark.eventLog.compress true

启动HistoryServer:

[root@PCS104 sbin]# /usr/local/spark-1.6.0/sbin/start-history-server.sh

访问HistoryServer:PCS104:18080,之后所有提交的应用程序运行状况都会被记录。这里的HistoryServer和当前spark集群无关的

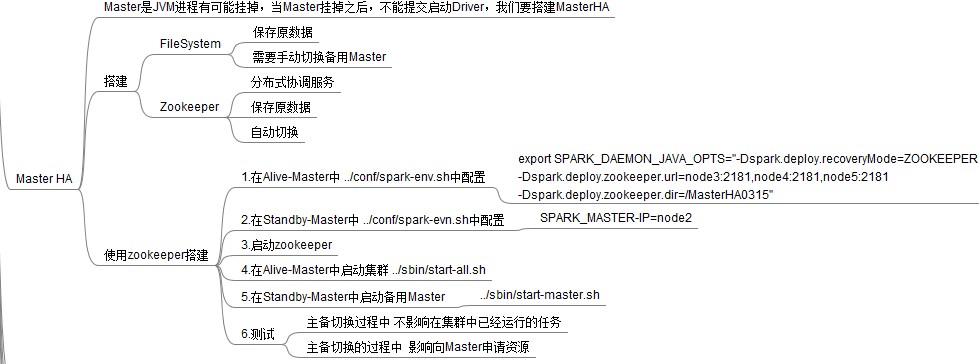

三、Master HA

1、Master的高可用可以使用fileSystem(文件系统)和zookeeper(分布式协调服务),一般使用zookeeper方式

2、Master高可用搭建

搭建方案:

Spark集群:PCS101、PCS102、PCS103

主master:PCS101

备master:PCS102

1)在Spark Master节点上配置主Master,配置/usr/local/spark-1.6.0/conf/spark-env.sh

export SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=PCS101:2181,PCS102:2181,PCS103:2181 -Dspark.deploy.zookeeper.dir=/sparkmaster0409"

2)发送到其他worker节点上

[root@PCS101 conf]# scp spark-env.sh root@PCS102:`pwd` [root@PCS101 conf]# scp spark-env.sh root@PCS103:`pwd`

3)PCS102配置备用 Master,修改spark-env.sh配置节点上的MasterIP

export SPARK_MASTER_IP=PCS102

4)启动集群之前启动zookeeper集群:

../zkServer.sh start

5)启动spark Standalone集群,启动备用Master

[root@PCS101 sbin]# /usr/local/spark-1.6.0/sbin/start-all.sh [root@PCS102 sbin]# /usr/local/spark-1.6.0/sbin/start-master.sh

6) 打开主Master和备用Master WebUI页面,观察状态。

PCS101:Status:ALIVE

PCS102:Status:STANDBY

kill掉PCS101的master,PCS102的Status:STANDBY变为ALIVE

3. 注意点

(1)主备切换过程中不能提交Application。

(2)主备切换过程中不影响已经在集群中运行的Application。因为Spark是粗粒度资源调度。

4. 测试验证

提交SparkPi程序,kill主Master观察现象。

./spark-submit --master spark://PCS101:7077,PCS102:7077 --class org.apache.spark.examples.SparkPi ../lib/spark-examples-1.6.0-hadoop2.6.0.jar 10000