导出数据,写入数据库

| 方法 | 导出用时 | 导入用时 |

|---|---|---|

| 方法1, 导出csv文件写 | 07.28 | 1731K 5s /不是localhost 8s |

| 方法2, 导出sql文件写 | 11.50 | 2093K 12347条数据 10.289m |

导出sql文件:1,借用navicat, 2, mysqldump -uroot -pxxx databasename > xxx.sql, 3.导真实数据,写脚本导出,试试pandas。

navicat连接不上,由于用了跳板机,可以查到真实数据库的连接命令,但还是连不上。项目目录有配置文件信息。

只导表结构:mysqldump -uroot -pxxx -d databasename > xxx.sql

写入sql文件:1,mysql> source 拖入即可(自动路径注意删除引号),2,mysql -uxxx -pxxx databasename< xxx.sql (当前数据库无需写用户名和密码,直接mysql进入)

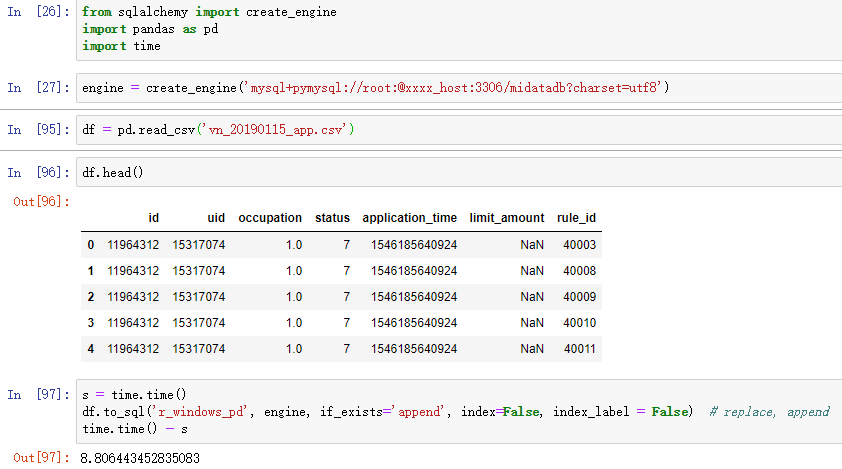

写入csv文件:利用pandas

注意:使用pd要先建好数据库,进入数据库show create database dbnamexxx

导入sql文件:终端进入数据库 mysql> source 拖入sql文件(去掉引号)/sql文件路径

导入csv:利用pandas to_sql

engine = create_engine('mysql+pymysql://root:@xxxx_host:3306/midatadb?charset=utf8')

df.to_sql('r_windows_pd', engine, if_exists='append', index=False, index_label = False) # replace, append

其他创建引擎方式;

# 第二种连接方式,pymysql

import pymysql

user = 'root'

passw = 'my-secret-pw-for-mysql-12ud'

host = 'xxxxx'

port = 3306

database = 'data_2'

conn = pymysql.connect(host=host,

port=port,

user=user,

passwd=passw,

db=database,

charset='utf8')

data.to_sql(name=database, con=conn, if_exists = 'replace', index=False, flavor = 'mysql')

# 或

from pandas.io import sql

import MySQLdb

con = MySQLdb.connect(xxxxx)

sql.write_frame(df, con=con, name='table_name_for_df',

if_exists='replace', flavor='mysql')

Mysql 大量数据快速导入导出

https://blog.csdn.net/xiaobaismiley/article/details/41015783

pd.read_sql

read_sql_table(table_name, con[, schema, …]) Read SQL database table into a DataFrame.

read_sql_query(sql, con[, index_col, …]) Read SQL query into a DataFrame.

read_sql(sql, con[, index_col, …]) Read SQL query or database table into a DataFrame.

pandas.read_sql_table(table_name, con, schema=None, index_col=None, coerce_float=True, parse_dates=None, columns=None, chunksize=None)

pandas.read_sql_query(sql, con, index_col=None, coerce_float=True, params=None, parse_dates=None, chunksize=None)

pandas.read_sql(sql, con, index_col=None, coerce_float=True, params=None, parse_dates=None, columns=None, chunksize=None)

This function is a convenience wrapper around read_sql_table and read_sql_query (for backward compatibility). It will delegate to the specific function depending on the provided input. A SQL query will be routed to read_sql_query, while a database table name will be routed to read_sql_table. Note that the delegated function might have more specific notes about their functionality not listed here.

sql join vs pandas

merge conbine based on key(common values)

pd.merge(df1, df2, on='uid', how='right')

how='inner' default

join default inner join

right -> right join

left -> left join

outer -> outer join

join

a variation of merge(just learn merge)

concat append up down or left right

按列连接 默认axis=0

若列名不同会有两列

| id | some db | other |

|---|---|---|

| 0 | xx | Nan |

| 1 | xx | Nan |

| 2 | Nan | xx |

| 3 | Nan | xx |