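Nginx static/dynamic separation simply means handling dynamic and static resources separately. It should not be read as merely storing dynamic pages and static pages in different physical locations; strictly speaking, it is dynamic requests that are separated from static requests. In practice, Nginx serves the static content, while Tomcat, Resin, PHP, or ASP handle the dynamic pages.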

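From an implementation standpoint there are roughly two approaches. The first moves the static files onto their own domain name, hosted on separate servers; this is the approach most widely recommended today. The second publishes dynamic and static files together and lets Nginx split the requests.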

Nginx-web1

    [root@Nginx-server ~]# useradd -M -s /sbin/nologin nginx
    [root@Nginx-server ~]# yum install -y pcre-devel popt-devel openssl-devel
    [root@Nginx-server ~]# tar zxvf nginx-1.11.2.tar.gz -C /usr/src/
    [root@Nginx-server ~]# cd /usr/src/nginx-1.11.2/
    [root@Nginx-server nginx-1.11.2]# ./configure --prefix=/usr/local/nginx --user=nginx --group=nginx --with-http_stub_status_module --with-http_gzip_static_module --with-http_ssl_module
    [root@Nginx-server nginx-1.11.2]# make
    [root@Nginx-server nginx-1.11.2]# make install
    [root@Nginx-server nginx-1.11.2]# ln -s /usr/local/nginx/sbin/nginx /usr/local/sbin/
    [root@Nginx-server nginx-1.11.2]# nginx -t
    nginx: the configuration file /usr/local/nginx/conf/nginx.conf syntax is ok
    nginx: configuration file /usr/local/nginx/conf/nginx.conf test is successful
    [root@Nginx-server nginx-1.11.2]# nginx

【Tomcat-server01】

    [root@Tomcat-server01 ~]# tar zxvf jdk-7u65-linux-x64.gz
    [root@Tomcat-server01 ~]# mv jdk1.7.0_65/ /usr/
    [root@Tomcat-server01 ~]# vim /etc/profile
    # append the following three lines to /etc/profile
    export JAVA_HOME=/usr/jdk1.7.0_65
    export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib
    export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH:$HOME/bin
    [root@Tomcat-server01 ~]# source /etc/profile
    [root@Tomcat-server01 ~]# java -version
    java version "1.7.0_65"
    Java(TM) SE Runtime Environment (build 1.7.0_65-b17)
    Java HotSpot(TM) 64-Bit Server VM (build 24.65-b04, mixed mode)
    [root@Tomcat-server ~]# wget https://mirrors.tuna.tsinghua.edu.cn/apache/tomcat/tomcat-7/v7.0.88/bin/apache-tomcat-7.0.88.tar.gz
    [root@Tomcat-server ~]# tar zxvf apache-tomcat-7.0.88.tar.gz
    [root@Tomcat-server ~]# mv apache-tomcat-7.0.88 /usr/local/tomcat_v1
    # the extracted directory has already been moved, so copy it for the second instance
    [root@Tomcat-server ~]# cp -a /usr/local/tomcat_v1 /usr/local/tomcat_v2
    # both instances run on one host, so tomcat_v2 needs its own ports in conf/server.xml (the upstream defined later expects 8081)

[root@Tomcat-server ~]# vim /usr/local/tomcat_v1/webapps/ROOT/index.jsp

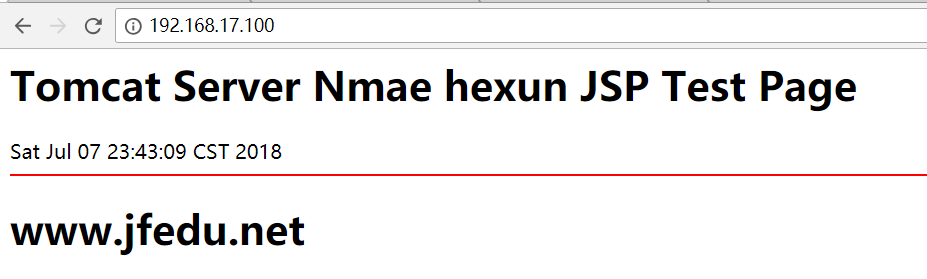

    <html>
    <body>
    <h1>Tomcat Server Name hexun JSP Test Page </h1>
    <%=new java.util.Date()%>
    <hr color="red">
    <h1>www.hexun.net</h1>
    </body>
    </html>

[root@Tomcat-server ~]# vim /usr/local/tomcat_v2/webapps/ROOT/index.jsp

    <html>
    <body>
    <h1>Tomcat Server Name hexun JSP Test Page </h1>
    <%=new java.util.Date()%>
    <hr color="red">
    <h1>www.jfedu.net</h1>
    </body>
    </html>

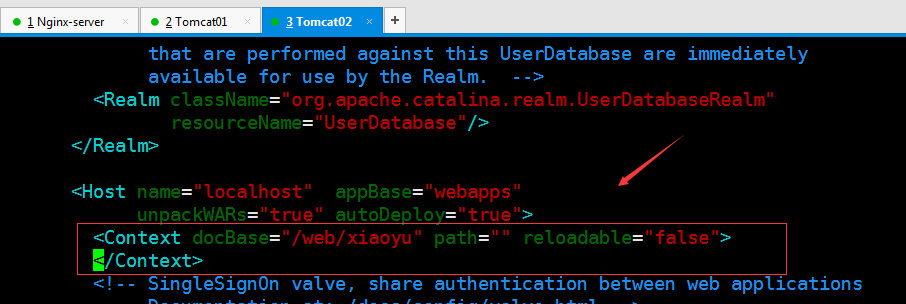

Extension: [root@Tomcat-server02 ~]# vim /usr/local/tomcat/conf/server.xml    # a custom publish directory can also be configured here

[Configure the Nginx server]

Configure load balancing & static/dynamic separation

[root@Nginx-server ~]# vim /usr/local/nginx/conf/nginx.conf

    user nginx nginx;
    worker_processes 8;
    worker_cpu_affinity 00000001 00000010 00000100 00001000 00010000 00100000 01000000 10000000;
    pid /usr/local/nginx/nginx.pid;
    worker_rlimit_nofile 102400;

    events {
        use epoll;
        worker_connections 102400;
    }

    http {
        include mime.types;
        default_type application/octet-stream;
        fastcgi_intercept_errors on;
        charset utf-8;
        server_names_hash_bucket_size 128;
        client_header_buffer_size 4k;
        large_client_header_buffers 4 32k;
        client_max_body_size 300m;
        sendfile on;
        tcp_nopush on;
        keepalive_timeout 60;
        tcp_nodelay on;
        client_body_buffer_size 512k;
        proxy_connect_timeout 5;
        proxy_read_timeout 60;
        proxy_send_timeout 5;
        proxy_buffer_size 16k;
        proxy_buffers 4 64k;
        proxy_busy_buffers_size 128k;
        proxy_temp_file_write_size 128k;
        gzip on;
        gzip_min_length 1k;
        gzip_buffers 4 16k;
        gzip_http_version 1.1;
        gzip_comp_level 2;
        gzip_types text/plain application/x-javascript text/css application/xml;
        gzip_vary on;

        log_format main '$http_x_forwarded_for - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" $request_time $remote_addr';

        upstream tomcat_webapp {
            server 192.168.2.147:8080 weight=1 max_fails=2 fail_timeout=30s;
            server 192.168.2.147:8081 weight=1 max_fails=2 fail_timeout=30s;
        }

        ## include pulls in vhosts.conf, which holds the Nginx virtual host configuration
        include vhosts.conf;
    }
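The two `server` lines in `upstream tomcat_webapp` carry equal weights, so requests should simply alternate between the two Tomcat instances. As a rough illustration, the sketch below models smooth weighted round-robin, the scheme Nginx's default balancer is based on. It is a simplified model, not Nginx's code: it ignores `max_fails`, `fail_timeout`, and connection state, and the function name is made up for this example.

```python
from typing import Dict, List


def smooth_weighted_round_robin(weights: Dict[str, int], n: int) -> List[str]:
    """Return the first n picks of a smooth weighted round-robin schedule."""
    current = {server: 0 for server in weights}
    total = sum(weights.values())
    picks: List[str] = []
    for _ in range(n):
        # Every server gains its configured weight in each round.
        for server, weight in weights.items():
            current[server] += weight
        # The server with the highest running score is chosen
        # (ties go to the server listed first).
        chosen = max(current, key=current.get)
        # The chosen server pays back the total weight so the others catch up.
        current[chosen] -= total
        picks.append(chosen)
    return picks


# Weights taken from the upstream block above (weight=1 on both instances).
equal_picks = smooth_weighted_round_robin(
    {"192.168.2.147:8080": 1, "192.168.2.147:8081": 1}, 4
)
print(equal_picks)
```

With unequal weights, for example 3 and 1, the heavier server receives three of every four requests, spread out rather than sent back to back.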

[root@Nginx-server ~]# vim /usr/local/nginx/conf/vhosts.conf

    server {
        listen 80;
        server_name www.xiaoyu.com;
        index index.html index.htm;
        # publish directory is /data/www/webapp
        root /data/www/webapp;

        # default: requests not matched by the regex locations below go to the upstream
        location / {
            proxy_next_upstream http_502 http_504 error timeout invalid_header;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_pass http://tomcat_webapp;
            expires 3d;
        }

        # Dynamic pages go to http://tomcat_webapp, the upstream pool defined earlier in nginx.conf.
        # The dot is escaped and the extension group is mandatory: the looser form
        # ".*.(php|jsp|cgi|shtml)?$" matches almost any URI and would also swallow static files.
        location ~ .*\.(php|jsp|cgi|shtml)$ {
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_pass http://tomcat_webapp;
        }

        # Static/dynamic separation: the static types listed here are read directly from the Nginx publish directory.
        location ~ .*\.(html|htm|gif|jpg|jpeg|bmp|png|ico|txt|js|css)$ {
            root /data/www/webapp;
            # expires sets the browser cache lifetime to 3 days; if static pages rarely change,
            # a longer value saves bandwidth and reduces load on the server
            expires 3d;
        }

        # Nginx log output paths
        access_log /usr/local/nginx/logs/nginx_xiaoyu/access.log main;
        error_log /usr/local/nginx/logs/nginx_xiaoyu/error.log crit;
    }

【Verification】

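In vhosts.conf, the location block whose regex lists php, jsp, cgi, and shtml matches dynamic page requests and hands them to the backend servers with proxy_pass, while the location block whose regex lists html, htm, gif, jpg, jpeg, bmp, png, ico, txt, js, and css matches static requests, which Nginx answers from its local publish directory.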
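How the extension regex is written matters. A pattern with an unescaped dot and an optional group, such as `.*.(php|jsp|cgi|shtml)?$`, matches almost any URI, and since Nginx uses the first regex location that matches, such a block would also catch requests for static files. The sketch below uses Python's `re` module as a stand-in for Nginx's PCRE matching (the two behave the same for these simple patterns) to compare the loose form with a strict one. The `classify` helper is hypothetical and only mirrors the order of the location blocks.

```python
import re

# Loose form: unescaped dot, optional extension group.
LOOSE_DYNAMIC = re.compile(r".*.(php|jsp|cgi|shtml)?$")
# Strict form: literal dot, mandatory extension group.
STRICT_DYNAMIC = re.compile(r".*\.(php|jsp|cgi|shtml)$")
STRICT_STATIC = re.compile(r".*\.(html|htm|gif|jpg|jpeg|bmp|png|ico|txt|js|css)$")


def classify(uri: str) -> str:
    """Mimic the location order: dynamic regex, then static regex, then 'location /'."""
    if STRICT_DYNAMIC.search(uri):
        return "dynamic"
    if STRICT_STATIC.search(uri):
        return "static"
    return "default"


for uri in ["/index.jsp", "/img/logo.png", "/app.js", "/"]:
    loose_hit = bool(LOOSE_DYNAMIC.search(uri))
    print(f"{uri:15} loose-dynamic={loose_hit!s:5} strict={classify(uri)}")
```

Every URI in the loop is matched by the loose pattern, while the strict patterns send only `/index.jsp` to the backends.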

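Once the backend Tomcat services are configured and running, the published application must also be synchronized to the matching directory under /data/www/webapp on the Nginx host. With static/dynamic separation in place, requests for the static file types defined above are looked up in Nginx's publish directory by default, so the data on the backend Tomcat servers and on the front-end Nginx must be kept consistent.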
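One way to picture the synchronization step is a small script that copies only the static file types into the Nginx publish directory. This is a minimal sketch under assumptions: the function name is hypothetical, the extension list is copied from the static location block, and the demo runs against a temporary directory rather than the real /usr/local/tomcat_v1/webapps/ROOT and /data/www/webapp paths. In a real deployment a tool such as rsync would usually do this job.

```python
import shutil
import tempfile
from pathlib import Path
from typing import List

# Extensions taken from the static location block in vhosts.conf.
STATIC_SUFFIXES = {".html", ".htm", ".gif", ".jpg", ".jpeg", ".bmp",
                   ".png", ".ico", ".txt", ".js", ".css"}


def sync_static_files(webapp_dir: Path, nginx_root: Path) -> List[str]:
    """Copy static files from webapp_dir to nginx_root, keeping relative paths."""
    copied: List[str] = []
    for src in sorted(webapp_dir.rglob("*")):
        if src.is_file() and src.suffix.lower() in STATIC_SUFFIXES:
            rel = src.relative_to(webapp_dir)
            dst = nginx_root / rel
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, dst)
            copied.append(rel.as_posix())
    return copied


# Demo in a throwaway directory: the JSP stays behind, the stylesheet is copied.
with tempfile.TemporaryDirectory() as tmp:
    webapp = Path(tmp) / "ROOT"
    (webapp / "css").mkdir(parents=True)
    (webapp / "index.jsp").write_text("<%= new java.util.Date() %>")
    (webapp / "css" / "site.css").write_text("h1 { color: red; }")
    demo_copied = sync_static_files(webapp, Path(tmp) / "www")
    demo_css_exists = (Path(tmp) / "www" / "css" / "site.css").is_file()
    demo_jsp_exists = (Path(tmp) / "www" / "index.jsp").exists()

print(demo_copied)
```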

Nginx serves the static files from its local publish directory /data/www/webapp; the two backend Tomcat instances hold the dynamic JSP files.

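Check that the Nginx configuration is valid, then test whether the separation works. On server 192.168.149.130, start the Tomcat services on ports 8080 and 8081 (or a LAMP service), delete one static file from the backend Tomcat or LAMP server, and request that file through Nginx. If the file is still served, Nginx is returning the static resource directly; if the request fails, static/dynamic separation is not working.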

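Put differently: both backend Tomcat servers respond normally and the static files local to Nginx are also reachable, but because the Nginx configuration load-balances the backends, any request that does not match a static file type is treated as dynamic by default and proxied to them.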

【Extension】

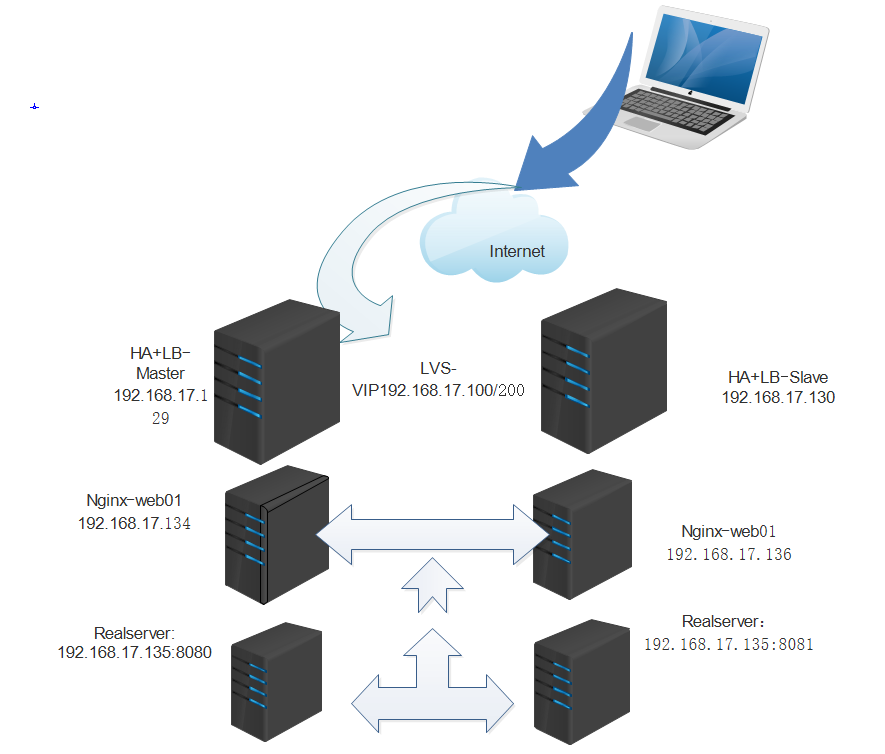

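In the environment above, the front-end Nginx-web servers load-balance two backend Tomcat instances and separate static from dynamic requests. If an Nginx node fails, neither dynamic nor static requests can be served. One might suggest simply adding more Nginx servers that all proxy the same Tomcat backends, but on its own that is not an ideal solution.

What we want from load balancing with static/dynamic separation is that the two front-end Nginx nodes proxy the two backend Tomcat nodes in a stable, balanced way, and that users get a single, unified entry point. If one of the two front-end Nginx nodes goes down, user access must not be affected. That is the goal.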

[root@HA_LB-master ~]# yum install -y kernel-devel openssl-devel popt-devel keepalived

[root@HA_LB-master ~]# yum install -y ipvsadm

[root@HA+LB-Master ~]# vim /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        notification_email {
            bxy@163.com
        }
        notification_email_from bxy@163.com
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id LVS_DEVEL
    }
    ################################### VIP1
    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        lvs_sync_daemon_inteface eth0
        virtual_router_id 50
        priority 150
        advert_int 5
        nopreempt    # note: takes effect only when the initial state is BACKUP
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.17.100
        }
    }
    virtual_server 192.168.17.100 80 {    # virtual server address (VIP, port)
        delay_loop 6                      # health-check interval
        lb_algo wrr                       # weighted round-robin (wrr) scheduling algorithm
        lb_kind DR
        persistence_timeout 60            # persistence timeout
        protocol TCP                      # protocol used by the application servers, TCP here
        real_server 192.168.17.134 80 {   # first backend web node
            weight 150                    # node weight
            TCP_CHECK {                   # health-check method
                connect_timeout 10        # connection timeout (seconds)
                nb_get_retry 3            # number of retries
                delay_before_retry 3      # delay between retries (seconds)
                connect_port 80           # port to connect to
            }
        }
        real_server 192.168.17.136 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }
    ############################### VIP2
    vrrp_instance VI_2 {
        state BACKUP
        interface eth0
        lvs_sync_daemon_inteface eth0
        virtual_router_id 51
        priority 100
        advert_int 5
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.17.200
        }
    }
    virtual_server 192.168.17.200 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        persistence_timeout 60
        protocol TCP
        real_server 192.168.17.134 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 192.168.17.136 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

[root@HA+LB-Slave ~]# vim /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        notification_email {
            bxy@163.com
        }
        notification_email_from bxy@163.com
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id LVS_DEVEL
    }
    ############################ VIP1
    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        lvs_sync_daemon_inteface eth0
        virtual_router_id 50
        priority 100
        advert_int 5
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.17.100
        }
    }
    virtual_server 192.168.17.100 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        persistence_timeout 60
        protocol TCP
        real_server 192.168.17.134 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 192.168.17.136 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }
    ######################## VIP2
    vrrp_instance VI_2 {
        state MASTER
        interface eth0
        lvs_sync_daemon_inteface eth0
        virtual_router_id 51
        priority 150
        advert_int 5
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.17.200
        }
    }
    virtual_server 192.168.17.200 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        persistence_timeout 60
        protocol TCP
        real_server 192.168.17.134 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 192.168.17.136 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

At this point the keepalived dual-instance, dual-master configuration is essentially complete.
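In the dual-master layout each node is MASTER for one VRRP instance and BACKUP for the other, so each normally holds one VIP and takes over the second when its peer disappears. The sketch below is a toy model of that outcome, using the priorities and VIPs from the two keepalived.conf files. It assumes the highest-priority live node always wins and deliberately ignores `nopreempt`, advertisement timers, and health checks; the function name is hypothetical.

```python
from typing import Dict, Optional, Set

# Priorities per VRRP instance, as configured on the two nodes.
PRIORITIES: Dict[str, Dict[str, int]] = {
    "VI_1": {"HA+LB-Master": 150, "HA+LB-Slave": 100},
    "VI_2": {"HA+LB-Master": 100, "HA+LB-Slave": 150},
}
VIPS: Dict[str, str] = {"VI_1": "192.168.17.100", "VI_2": "192.168.17.200"}


def vip_owners(alive: Set[str]) -> Dict[str, Optional[str]]:
    """Return which live node holds each VIP (highest priority wins)."""
    owners: Dict[str, Optional[str]] = {}
    for instance, priorities in PRIORITIES.items():
        candidates = {node: p for node, p in priorities.items() if node in alive}
        winner = max(candidates, key=candidates.get) if candidates else None
        owners[VIPS[instance]] = winner
    return owners


print(vip_owners({"HA+LB-Master", "HA+LB-Slave"}))  # each node holds one VIP
print(vip_owners({"HA+LB-Slave"}))                  # the survivor holds both
```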

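【Configure keepalived log output】

Next, split the keepalived log out into its own file so that it is not mixed into the system messages log. The `-S 0` option used below makes keepalived log to the syslog facility local0, which rsyslog can then route to a dedicated file (/var/log/keepalived.log in the output further down).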

    # sed -i '14 s#KEEPALIVED_OPTIONS="-D"#KEEPALIVED_OPTIONS="-D -d -S 0"#g' /etc/sysconfig/keepalived
    # sed -n '14p' /etc/sysconfig/keepalived
    KEEPALIVED_OPTIONS="-D -d -S 0"
    # sed -n '54p;$p' /etc/rsyslog.conf    # show line 54 and the last line of rsyslog.conf, the two lines that were modified
    # /etc/init.d/rsyslog restart          # restart rsyslog once the configuration is in place

keepalived log output on HA-LB-Master

    [root@HA+LB-Master ~]# ip add show dev eth0
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:9e:fd:f4 brd ff:ff:ff:ff:ff:ff
        inet 192.168.17.129/24 brd 192.168.17.255 scope global eth0
        inet 192.168.17.100/32 scope global eth0
        inet6 fe80::20c:29ff:fe9e:fdf4/64 scope link
           valid_lft forever preferred_lft forever
    [root@HA+LB-Master ~]# tail /var/log/keepalived.log
    Oct 28 06:38:40 HA+LB-Master Keepalived_healthcheckers[9894]: Removing service [0.0.0.0]:80 from VS [192.168.17.200]:80
    Oct 28 06:38:40 HA+LB-Master Keepalived_healthcheckers[9894]: IPVS: No such destination
    Oct 28 06:38:40 HA+LB-Master Keepalived_healthcheckers[9894]: Remote SMTP server [127.0.0.1]:25 connected.
    Oct 28 06:38:44 HA+LB-Master Keepalived_vrrp[9895]: VRRP_Instance(VI_1) Entering MASTER STATE
    Oct 28 06:38:44 HA+LB-Master Keepalived_vrrp[9895]: VRRP_Instance(VI_1) setting protocol VIPs.
    Oct 28 06:38:44 HA+LB-Master Keepalived_vrrp[9895]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.17.100
    Oct 28 06:38:44 HA+LB-Master Keepalived_healthcheckers[9894]: Netlink reflector reports IP 192.168.17.100 added
    Oct 28 06:38:49 HA+LB-Master Keepalived_vrrp[9895]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.17.100
    Oct 28 06:39:08 HA+LB-Master Keepalived_healthcheckers[9894]: Timeout reading data to remote SMTP server [127.0.0.1]:25.
    Oct 28 06:39:10 HA+LB-Master Keepalived_healthcheckers[9894]: Timeout reading data to remote SMTP server [127.0.0.1]:25.

keepalived log output on HA-LB-Slave

    [root@HA+LB-Slave ~]# ip add show dev eth0
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:35:75:bc brd ff:ff:ff:ff:ff:ff
        inet 192.168.17.130/24 brd 192.168.17.255 scope global eth0
        inet 192.168.17.200/32 scope global eth0
        inet6 fe80::20c:29ff:fe35:75bc/64 scope link
           valid_lft forever preferred_lft forever
    [root@HA+LB-Slave ~]# tail /var/log/keepalived.log
    Nov 3 02:22:19 HA+LB-Slave Keepalived_healthcheckers[35711]: Activating healthchecker for service [192.168.17.134]:80
    Nov 3 02:22:19 HA+LB-Slave Keepalived_healthcheckers[35711]: Activating healthchecker for service [192.168.17.136]:80
    Nov 3 02:22:19 HA+LB-Slave Keepalived_healthcheckers[35711]: Activating healthchecker for service [192.168.17.134]:80
    Nov 3 02:22:19 HA+LB-Slave Keepalived_healthcheckers[35711]: Activating healthchecker for service [192.168.17.136]:80
    Nov 3 02:22:24 HA+LB-Slave Keepalived_vrrp[35712]: VRRP_Instance(VI_2) Transition to MASTER STATE
    Nov 3 02:22:29 HA+LB-Slave Keepalived_vrrp[35712]: VRRP_Instance(VI_2) Entering MASTER STATE
    Nov 3 02:22:29 HA+LB-Slave Keepalived_vrrp[35712]: VRRP_Instance(VI_2) setting protocol VIPs.
    Nov 3 02:22:29 HA+LB-Slave Keepalived_vrrp[35712]: VRRP_Instance(VI_2) Sending gratuitous ARPs on eth0 for 192.168.17.200
    Nov 3 02:22:29 HA+LB-Slave Keepalived_healthcheckers[35711]: Netlink reflector reports IP 192.168.17.200 added
    Nov 3 02:22:34 HA+LB-Slave Keepalived_vrrp[35712]: VRRP_Instance(VI_2) Sending gratuitous ARPs on eth0 for 192.168.17.200

Script configuration on Nginx-web01 and web02

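A client browser sends its request to the LVS VIP. LVS receives the request and forwards it to a backend Realserver according to the configured forwarding mode and scheduling algorithm. Because we configured DR mode, LVS rewrites only the MAC addresses of the request frame during forwarding: the destination MAC becomes the Realserver's MAC and the source MAC becomes the MAC of the LVS node itself. The Realserver receives and processes the request, then sends the response directly back to the client without passing through the LVS node.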
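The key point of DR mode is that only the layer-2 addresses change while the IP header is left alone. The sketch below models that with a small immutable record. The director MAC is the eth0 address of HA+LB-Master shown in the `ip add show` output; the client address, client MAC, and Realserver MAC are made-up placeholders, and `dr_forward` is a hypothetical helper, not part of LVS.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Frame:
    src_mac: str
    dst_mac: str
    src_ip: str
    dst_ip: str


def dr_forward(frame: Frame, director_mac: str, realserver_mac: str) -> Frame:
    """Rewrite only the MAC addresses, as the director does in DR mode."""
    return replace(frame, src_mac=director_mac, dst_mac=realserver_mac)


DIRECTOR_MAC = "00:0c:29:9e:fd:f4"    # eth0 of HA+LB-Master
REALSERVER_MAC = "00:0c:29:00:00:34"  # placeholder for 192.168.17.134

incoming = Frame(
    src_mac="00:50:56:c0:00:08",      # placeholder client (or gateway) MAC
    dst_mac=DIRECTOR_MAC,
    src_ip="192.168.17.1",            # placeholder client IP
    dst_ip="192.168.17.100",          # the VIP
)
forwarded = dr_forward(incoming, DIRECTOR_MAC, REALSERVER_MAC)
print(forwarded)
```

Because the destination IP is still the VIP when the frame arrives, the Realserver must own the VIP locally in order to accept it.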

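PS: LVS and the Realservers sit in the same physical network segment. When LVS forwards a request straight to a Realserver and the Realserver sees that the packet's destination address is the VIP rather than one of its own addresses, it drops the packet and does not respond. So how do we solve this?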

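The answer is to configure the VIP on every Realserver so that the packets are not dropped. Since having the same VIP on all backend Realservers would cause an IP conflict, the VIP is bound to the lo interface and the kernel ARP parameters are tuned so that the Realservers do not answer ARP requests for it.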
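The ARP suppression relies on the kernel's `arp_ignore` setting. The sketch below is a simplified model of its two lowest modes, covering only the decision "should this host answer an ARP request for a given IP arriving on a given interface". The function name and the address table are illustrative assumptions, and `arp_announce` is not modeled.

```python
from typing import Dict, Set


def should_reply_to_arp(target_ip: str, incoming_iface: str,
                        addresses: Dict[str, Set[str]], arp_ignore: int) -> bool:
    """Simplified model of arp_ignore modes 0 and 1."""
    if arp_ignore == 0:
        # Mode 0: reply for any local address, whichever interface holds it.
        return any(target_ip in ips for ips in addresses.values())
    if arp_ignore == 1:
        # Mode 1: reply only if the address is configured on the receiving interface.
        return target_ip in addresses.get(incoming_iface, set())
    raise ValueError("only arp_ignore 0 and 1 are modeled here")


# A Realserver with the VIP bound to lo and its own address on eth0.
realserver = {
    "lo": {"127.0.0.1", "192.168.17.100"},
    "eth0": {"192.168.17.134"},
}

print(should_reply_to_arp("192.168.17.100", "eth0", realserver, arp_ignore=0))
print(should_reply_to_arp("192.168.17.100", "eth0", realserver, arp_ignore=1))
print(should_reply_to_arp("192.168.17.134", "eth0", realserver, arp_ignore=1))
```

With `arp_ignore` set to 1 the Realserver stays silent for the VIP on eth0, so only the LVS node answers ARP for it, while the Realserver's own address remains reachable.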

    #!/bin/sh
    # LVS Client Server
    # Only VIP1 is handled here; the second VIP (192.168.17.200) would need
    # the same setup on another alias, for example lo:1.
    VIP=192.168.17.100
    case $1 in
    start)
        # bind the VIP to the loopback alias with a host netmask
        ifconfig lo:0 $VIP netmask 255.255.255.255 broadcast $VIP
        /usr/sbin/route add -host $VIP dev lo:0
        # suppress ARP replies and announcements for the VIP
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        sysctl -p >/dev/null 2>&1
        echo "RealServer Start OK"
        exit 0
        ;;
    stop)
        ifconfig lo:0 down
        route del $VIP >/dev/null 2>&1
        # restore the default ARP behaviour
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "RealServer Stopped OK"
        exit 0
        ;;
    *)
        echo "Usage: $0 {start|stop}"
        exit 1
        ;;
    esac

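At this point the setup is essentially done, so let's verify it. From a client, the page content should be reachable through either VIP of the LVS+Keepalived cluster (192.168.17.100 or 192.168.17.200), and the web site should stay reachable when one of the master/backup director nodes fails. In addition, there are two Nginx nodes in the middle with identical configurations (both do load balancing and static/dynamic separation), so the failure of one Nginx node does not affect the backend Tomcat instances or the site as a whole. Accessing the VIP repeatedly confirms that load balancing is working.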