Lab environment preparation

k8s-master 192.168.2.156

k8s-node 192.168.2.161

PS: Both hosts must have synchronized clocks, the firewalld firewall stopped, and SELinux disabled. The OS is CentOS 7.5.
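Here is a minimal sketch of those preparation steps. It assumes CentOS 7 defaults: firewalld runs under systemd, SELinux is configured in /etc/selinux/config, and chrony handles time sync. The original does not say which time service was used. The helper names `disable_selinux_config` and `prep_host` are hypothetical. Only `disable_selinux_config` edits a file you pass in, so you can try it on a copy first. `prep_host` changes the running system and must be run as root on both hosts.

```shell
#!/usr/bin/env bash
# Sketch: host preparation for k8s-master and k8s-node1 (CentOS 7 assumed).
# Helper names are hypothetical, not part of the original tutorial.

# Set SELINUX=disabled in the given file (normally /etc/selinux/config).
# This only takes effect after a reboot; setenforce 0 covers the current boot.
# The pattern ^SELINUX= does not touch the SELINUXTYPE= line.
disable_selinux_config() {
    local file="$1"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

prep_host() {
    systemctl stop firewalld
    systemctl disable firewalld
    setenforce 0 || true    # ignore the error if SELinux is already disabled
    disable_selinux_config /etc/selinux/config
    # Time sync via chronyd (assumption: the default NTP pool servers are reachable).
    yum install -y chrony
    systemctl enable chronyd
    systemctl start chronyd
}
# Usage (as root, on both hosts):
#   prep_host
```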

[k8s-master deployment]

[root@k8s-master ~]# yum install kubernetes-master etcd flannel -y

[root@k8s-master ~]# cp /etc/etcd/etcd.conf /etc/etcd/etcd.conf.back

[root@k8s-master ~]# egrep -v "#|^$" /etc/etcd/etcd.conf.back > /etc/etcd/etcd.conf

[root@k8s-master ~]# cat /etc/etcd/etcd.conf

ETCD_DATA_DIR="/data/etcd/"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_NAME="default"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.2.156:2379"
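The four settings listed above differ from the etcd package defaults, so the file has to be edited after the comments are stripped. Below is a sketch that writes the same four lines non-interactively for a given master IP. `write_etcd_conf` is a hypothetical helper. Keep the backup made with `cp` above in case you need the original comments.

```shell
#!/usr/bin/env bash
# Sketch: write the four etcd settings shown above for a given master IP.
# write_etcd_conf is a hypothetical helper; the values mirror the listing above.
write_etcd_conf() {
    local file="$1" ip="$2"
    cat > "$file" <<EOF
ETCD_DATA_DIR="/data/etcd/"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
ETCD_NAME="default"
ETCD_ADVERTISE_CLIENT_URLS="http://${ip}:2379"
EOF
}
# Usage on k8s-master:
#   write_etcd_conf /etc/etcd/etcd.conf 192.168.2.156
```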

[root@k8s-master ~]# mkdir -p /data/etcd

[root@k8s-master ~]# chmod 757 -R /data/etcd/

[root@k8s-master ~]# systemctl restart etcd

[root@k8s-master ~]# systemctl enable etcd

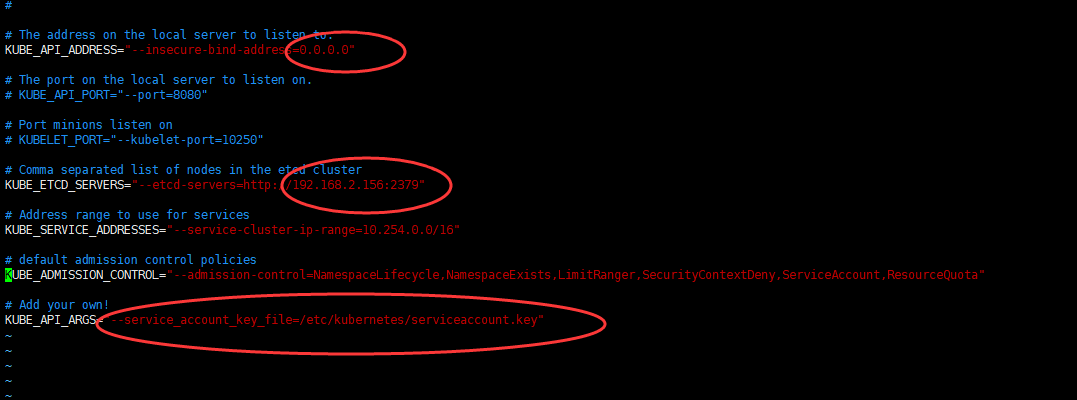

Configure the API server and controller-manager files and generate the service-account signing key

[root@k8s-master ~]# openssl genrsa -out /etc/kubernetes/serviceaccount.key 2048

[root@k8s-master ~]# sed -i 's#KUBE_API_ARGS=""#KUBE_API_ARGS="--service_account_key_file=/etc/kubernetes/serviceaccount.key"#g' /etc/kubernetes/apiserver

[root@k8s-master ~]# sed -i 's#KUBE_CONTROLLER_MANAGER_ARGS=""#KUBE_CONTROLLER_MANAGER_ARGS="--service_account_private_key_file=/etc/kubernetes/serviceaccount.key"#g' /etc/kubernetes/controller-manager
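The two `sed` commands above only match the exact line `KEY=""`. If the line has already been edited, they silently do nothing. Below is a sketch of a more forgiving edit that replaces whatever value the key has and appends the key if it is missing. `set_kv` is a hypothetical helper, and it assumes the value contains neither `#` (the sed delimiter) nor `&`.

```shell
#!/usr/bin/env bash
# Sketch: set KEY="VALUE" in a sysconfig-style file regardless of its current value.
# set_kv is hypothetical; VALUE must not contain '#' (sed delimiter) or '&'.
set_kv() {
    local file="$1" key="$2" value="$3"
    if grep -q "^${key}=" "$file"; then
        # Replace the whole existing line for this key.
        sed -i "s#^${key}=.*#${key}=\"${value}\"#" "$file"
    else
        # Key absent: append it at the end of the file.
        printf '%s="%s"\n' "$key" "$value" >> "$file"
    fi
}
# Usage on k8s-master (same values as the sed commands above):
#   set_kv /etc/kubernetes/apiserver KUBE_API_ARGS "--service_account_key_file=/etc/kubernetes/serviceaccount.key"
#   set_kv /etc/kubernetes/controller-manager KUBE_CONTROLLER_MANAGER_ARGS "--service_account_private_key_file=/etc/kubernetes/serviceaccount.key"
```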

[root@k8s-master ~]# egrep -v "#|^$" /etc/kubernetes/apiserver

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.2.156:2379"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

KUBE_API_ARGS="--service_account_key_file=/etc/kubernetes/serviceaccount.key"

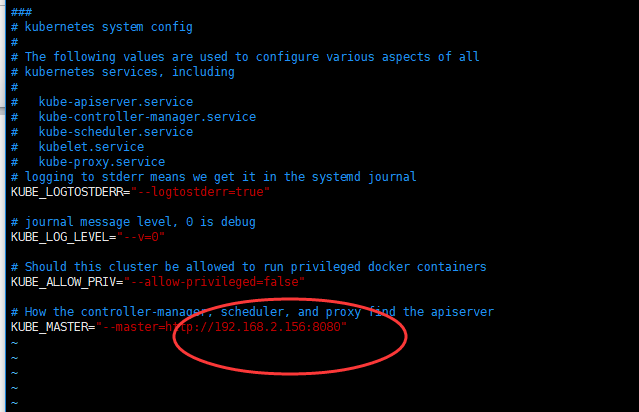

[root@k8s-master ~]# vim /etc/kubernetes/config
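The `vim` session above is not shown. The setting that usually needs changing in /etc/kubernetes/config is `KUBE_MASTER`, which tells the Kubernetes components where the apiserver listens. The same edit is needed on k8s-node1. The sketch below assumes the packaged file already contains a `KUBE_MASTER="--master=..."` line (the CentOS RPM default points at 127.0.0.1:8080). `set_kube_master` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: point KUBE_MASTER in /etc/kubernetes/config at the master's insecure port 8080.
# Assumes an existing KUBE_MASTER= line; set_kube_master is hypothetical.
set_kube_master() {
    local file="$1" master_ip="$2"
    sed -i "s#^KUBE_MASTER=.*#KUBE_MASTER=\"--master=http://${master_ip}:8080\"#" "$file"
}
# Usage on k8s-master and on k8s-node1:
#   set_kube_master /etc/kubernetes/config 192.168.2.156
```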

[root@k8s-master ~]# systemctl restart kube-apiserver

[root@k8s-master ~]# systemctl enable kube-apiserver

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

[root@k8s-master ~]# systemctl restart kube-controller-manager

[root@k8s-master ~]# systemctl enable kube-controller-manager

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

[root@k8s-master ~]# systemctl restart kube-scheduler

[root@k8s-master ~]# systemctl enable kube-scheduler

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

[root@k8s-master ~]# iptables -P FORWARD ACCEPT

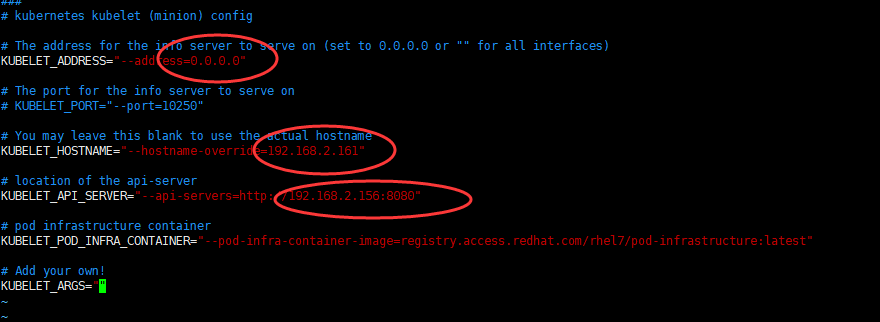
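The restart/enable pairs above follow the same pattern for every service, so you can run them in one loop. The sketch below stops at the first failure so that a broken service is not hidden. The `SYSTEMCTL` override (for a dry run such as `SYSTEMCTL=echo`) and the `restart_and_enable` name are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: restart and enable several systemd services, stopping at the first failure.
# SYSTEMCTL (default: systemctl) and restart_and_enable are hypothetical names.
restart_and_enable() {
    local svc
    for svc in "$@"; do
        ${SYSTEMCTL:-systemctl} restart "$svc" || return 1
        ${SYSTEMCTL:-systemctl} enable "$svc" || return 1
    done
}
# Usage on k8s-master:
#   restart_and_enable etcd kube-apiserver kube-controller-manager kube-scheduler
# and on k8s-node1:
#   restart_and_enable kube-proxy kubelet docker
```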

[k8s-node configuration]

[root@k8s-node1 ~]# yum install -y kubernetes-node flannel docker '*rhsm*'

[root@k8s-node1 ~]# vim /etc/kubernetes/config

[root@k8s-node1 ~]# vim /etc/kubernetes/kubelet
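The kubelet edits are not shown either. Typically the kubelet is made to listen on all interfaces, register under the node's IP, and talk to the master's apiserver. The sketch below assumes the key names of the CentOS 7 kubernetes-node package (`KUBELET_ADDRESS`, `KUBELET_HOSTNAME`, `KUBELET_API_SERVER`) and the `--api-servers` flag of that Kubernetes generation. Check them against your file. `configure_kubelet` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: kubelet settings on k8s-node1. Key names assume the CentOS 7
# kubernetes-node package layout; configure_kubelet is hypothetical.
configure_kubelet() {
    local file="$1" node_ip="$2" master_ip="$3"
    sed -i \
        -e "s#^KUBELET_ADDRESS=.*#KUBELET_ADDRESS=\"--address=0.0.0.0\"#" \
        -e "s#^KUBELET_HOSTNAME=.*#KUBELET_HOSTNAME=\"--hostname-override=${node_ip}\"#" \
        -e "s#^KUBELET_API_SERVER=.*#KUBELET_API_SERVER=\"--api-servers=http://${master_ip}:8080\"#" \
        "$file"
}
# Usage on k8s-node1:
#   configure_kubelet /etc/kubernetes/kubelet 192.168.2.161 192.168.2.156
```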

[root@k8s-node1 ~]# systemctl restart kube-proxy

[root@k8s-node1 ~]# systemctl restart kubelet

[root@k8s-node1 ~]# systemctl restart docker

[root@k8s-node1 ~]# iptables -P FORWARD ACCEPT

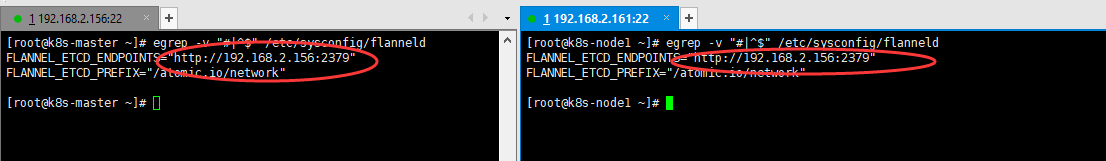

[Configure the flanneld network]

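Edit the flanneld configuration file (/etc/sysconfig/flanneld on CentOS 7) on both hosts. In both cases, the etcd address is the master's IP.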

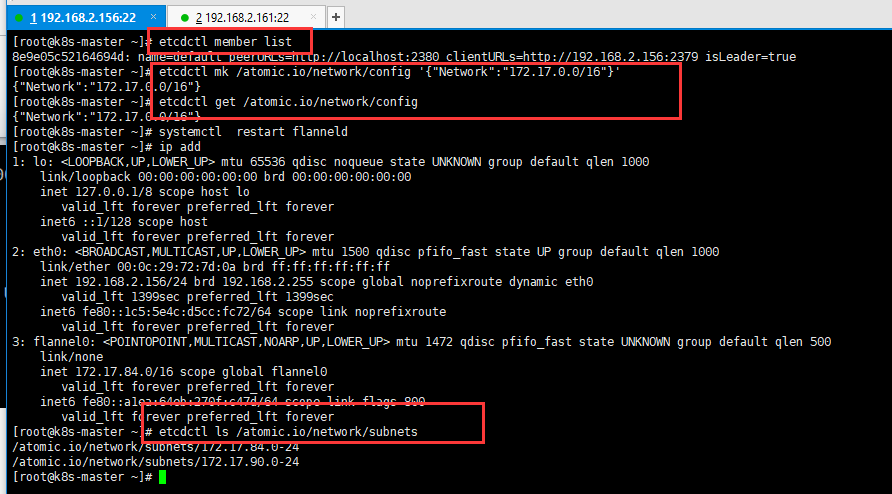
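The sketch below reproduces the flanneld edit non-interactively. It assumes the CentOS 7 flannel package's key names `FLANNEL_ETCD_ENDPOINTS` and `FLANNEL_ETCD_PREFIX`; older packages used different names. The prefix must match the key that the `etcdctl mk` command in the next step writes (/atomic.io/network/config), so the sketch also includes a helper that reads the prefix back. `configure_flanneld` and `prefix_of` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: point flanneld at the master's etcd (run on both hosts).
# Key names assume the CentOS 7 flannel package; helper names are hypothetical.
configure_flanneld() {
    local file="$1" master_ip="$2"
    sed -i "s#^FLANNEL_ETCD_ENDPOINTS=.*#FLANNEL_ETCD_ENDPOINTS=\"http://${master_ip}:2379\"#" "$file"
}

# Print the configured etcd key prefix so it can be compared with the etcdctl key.
prefix_of() {
    sed -n 's#^FLANNEL_ETCD_PREFIX="\(.*\)"#\1#p' "$1"
}
# Usage:
#   configure_flanneld /etc/sysconfig/flanneld 192.168.2.156
#   prefix_of /etc/sysconfig/flanneld    # expected to be /atomic.io/network
```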

[root@k8s-master ~]# etcdctl member list

8e9e05c52164694d: name=default peerURLs=http://localhost:2380 clientURLs=http://192.168.2.156:2379 isLeader=true

[root@k8s-master ~]# etcdctl mk /atomic.io/network/config '{"Network":"172.17.0.0/16"}'

{"Network":"172.17.0.0/16"}

[root@k8s-master ~]# etcdctl get /atomic.io/network/config

{"Network":"172.17.0.0/16"}

Restart the flanneld service on each host:

# systemctl restart flanneld
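After flanneld starts, it leases each host a subnet of 172.17.0.0/16. On the node, Docker should be restarted after flanneld so that docker0 is placed in that subnet. The sketch below reads the leased subnet and the address docker0 is expected to carry. It assumes flanneld writes /run/flannel/subnet.env with a `FLANNEL_SUBNET=` line, as the CentOS 7 package does. `flannel_subnet` and `flannel_gateway` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: read the subnet flanneld leased to this host.
# Assumes /run/flannel/subnet.env contains FLANNEL_SUBNET=a.b.c.d/len.
flannel_subnet() {
    local env_file="${1:-/run/flannel/subnet.env}"
    sed -n 's/^FLANNEL_SUBNET=//p' "$env_file"
}

# Strip the prefix length, e.g. 172.17.23.1/24 -> 172.17.23.1.
# On the node, docker0 is expected to carry this address after docker is restarted.
flannel_gateway() {
    local subnet
    subnet=$(flannel_subnet "$@")
    printf '%s\n' "${subnet%/*}"
}
# Usage on k8s-node1 after restarting flanneld and then docker:
#   flannel_gateway
#   ip addr show docker0
```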

Verify with ping that the hosts can reach each other:

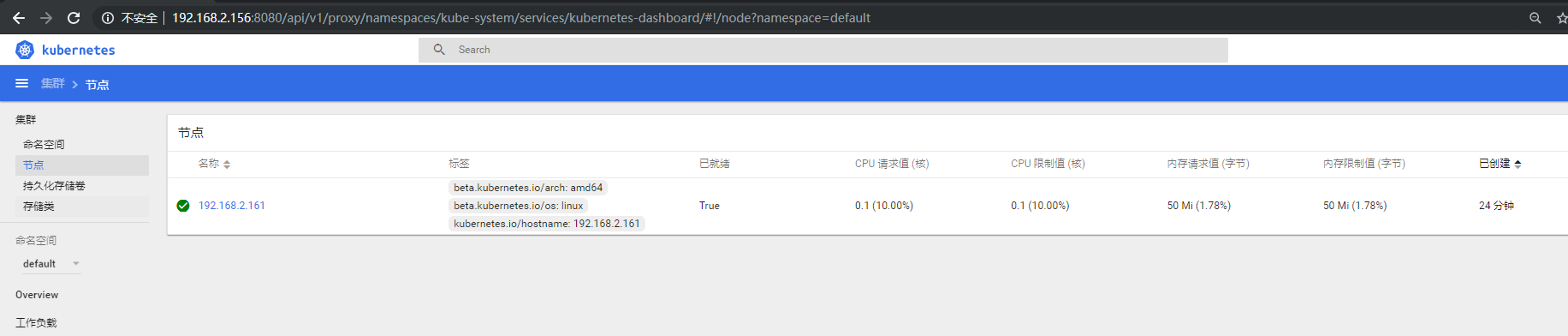

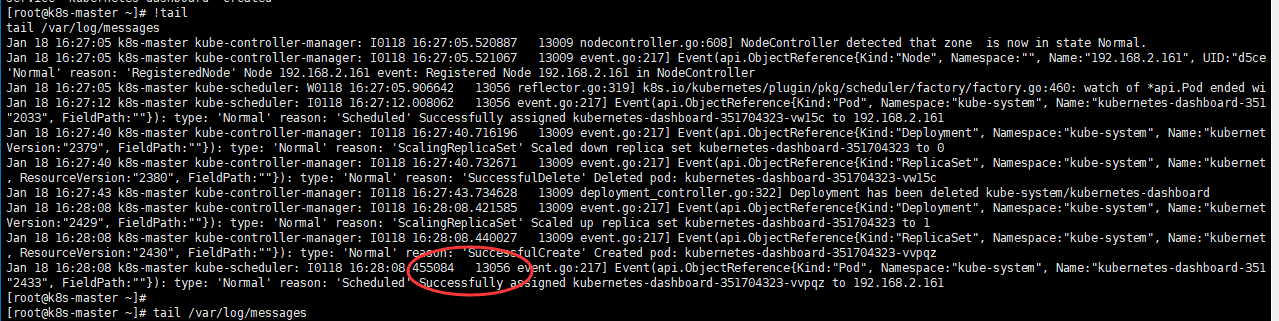

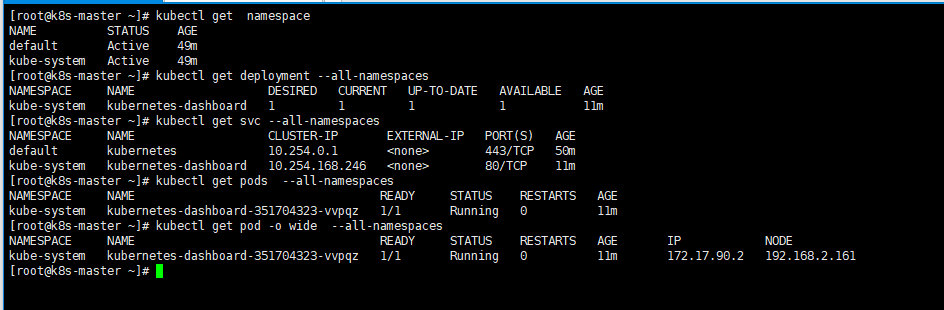
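The sketch below runs the same connectivity check over several addresses at once, such as the node's host IP and the flannel/docker0 address shown by `ip addr` on the node. It pings each address once and returns non-zero if any of them failed. The `PING` override (for a dry run) and the `ping_all` name are hypothetical. The 172.17.x.x address in the usage line is only an example.

```shell
#!/usr/bin/env bash
# Sketch: ping each address once (2 s timeout) and report reachability.
# PING (default: ping) and ping_all are hypothetical names.
ping_all() {
    local ip failed=0
    for ip in "$@"; do
        if ${PING:-ping} -c 1 -W 2 "$ip" >/dev/null 2>&1; then
            echo "OK   $ip"
        else
            echo "FAIL $ip"
            failed=1
        fi
    done
    return "$failed"
}
# Usage from k8s-master (example addresses):
#   ping_all 192.168.2.161 172.17.23.1
```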

[Kubernetes Dashboard UI]

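The core job of Kubernetes is to manage and schedule a cluster of Docker containers centrally. The cluster and its nodes are usually operated from the command line, but the Dashboard offers a web UI for the same tasks. Running it requires two base images on the node: the pod-infrastructure image and the dashboard image.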

[root@k8s-node1 ~]# docker load < pod-infrastructure.tgz

[root@k8s-node1 ~]# docker tag $(docker images|grep none|awk '{print $3}') registry.access.redhat.com/rhel7/pod-infrastructure

[root@k8s-node1 ~]# docker load < kubernetes-dashboard-amd64.tgz

[root@k8s-node1 ~]# docker tag $(docker images|grep none|awk '{print $3}') bestwu/kubernetes-dashboard-amd64:v1.6.3
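The `docker images|grep none` trick above only works while exactly one untagged image exists. After the first image is tagged this holds, but any stray `<none>` image breaks it. The sketch below tags the image by the ID that `docker load` itself reports. It assumes each archive holds a single untagged image, so that `docker load` prints a line of the form `Loaded image ID: sha256:...`. The `DOCKER` override and the `tag_loaded_image` name are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: load an image archive and tag the image ID reported by docker load.
# Assumes output "Loaded image ID: sha256:<hex>"; DOCKER and tag_loaded_image are hypothetical.
tag_loaded_image() {
    local archive="$1" target="$2" id
    id=$(${DOCKER:-docker} load -i "$archive" | sed -n 's/^Loaded image ID: //p' | head -n 1)
    id=${id#sha256:}    # keep only the hex ID
    if [ -z "$id" ]; then
        echo "no untagged image ID in output of docker load -i $archive" >&2
        return 1
    fi
    ${DOCKER:-docker} tag "$id" "$target"
}
# Usage on k8s-node1:
#   tag_loaded_image pod-infrastructure.tgz registry.access.redhat.com/rhel7/pod-infrastructure
#   tag_loaded_image kubernetes-dashboard-amd64.tgz bestwu/kubernetes-dashboard-amd64:v1.6.3
```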

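On the master, create two YAML files, dashboard-controller.yaml and dashboard-service.yaml. They describe the dashboard Deployment and the Service in front of it, and Kubernetes then runs the dashboard image on the node.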

[root@k8s-master ~]# cat > dashboard-controller.yaml <<'EOF'
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        scheduler.alpha.kubernetes.io/tolerations: '[{"key":"CriticalAddonsOnly", "operator":"Exists"}]'
    spec:
      containers:
      - name: kubernetes-dashboard
        image: bestwu/kubernetes-dashboard-amd64:v1.6.3
        resources:
          # keep request = limit to keep this container in guaranteed class
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
        ports:
        - containerPort: 9090
        args:
        - --apiserver-host=http://192.168.2.156:8080
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
EOF

[root@k8s-master ~]# cat > dashboard-service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090
EOF

[root@k8s-master ~]# kubectl apply -f dashboard-controller.yaml

[root@k8s-master ~]# kubectl apply -f dashboard-service.yaml
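After the two `kubectl apply` commands, the dashboard pod may need some time to pull its image and start. The sketch below polls until the pod reports Running. It assumes the pod carries the `k8s-app=kubernetes-dashboard` label from the Deployment above, and that the third column of `kubectl get pods --no-headers` is STATUS. `wait_for_dashboard` and the `KUBECTL`/`SLEEP_SECS` overrides are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: poll until the dashboard pod is Running (default: 30 checks, 5 s apart).
# wait_for_dashboard, KUBECTL and SLEEP_SECS are hypothetical names.
wait_for_dashboard() {
    local tries="${1:-30}" i=0
    while [ "$i" -lt "$tries" ]; do
        # Column 3 of `kubectl get pods --no-headers` is STATUS.
        if ${KUBECTL:-kubectl} get pods --namespace=kube-system \
            -l k8s-app=kubernetes-dashboard --no-headers 2>/dev/null |
            awk '$3 == "Running" { found = 1 } END { exit !found }'; then
            echo "kubernetes-dashboard is Running"
            return 0
        fi
        i=$((i + 1))
        sleep "${SLEEP_SECS:-5}"
    done
    echo "kubernetes-dashboard not Running after $tries checks" >&2
    return 1
}
# Usage on k8s-master:
#   wait_for_dashboard
```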

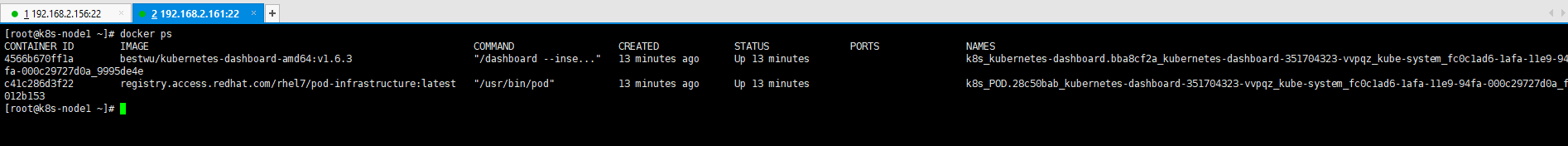

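The most direct check is to run docker ps on the node and confirm that the two containers (the pod-infrastructure container and the dashboard container) are running.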

Then open http://192.168.2.156:8080/ui in a browser.