Environment: CentOS 7

master 192.168.94.11

node1 192.168.94.22

node2 192.168.94.33

Disable the firewall and SELinux
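The post does not show the commands for this step. A minimal sketch, assuming the stock CentOS 7 layout (firewalld as the firewall service, SELinux configured in /etc/selinux/config); the helper name `disable_selinux_in_config` is hypothetical and takes the file path as an argument so it can be tried on a copy first:

```shell
# Hypothetical helper: set SELINUX=disabled in the given config file.
# sed keeps the pre-edit file next to it with a .bak suffix.
disable_selinux_in_config() {
    local config_file="$1"
    sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$config_file"
}

# On a real node, as root, the full step would look like this:
#   systemctl stop firewalld && systemctl disable firewalld
#   setenforce 0                                   # permissive until the next reboot
#   disable_selinux_in_config /etc/selinux/config  # takes effect after a reboot
```

Only the `SELINUX=` line is rewritten; `SELINUXTYPE=` is left alone.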

Installation package: https://pan.baidu.com/s/1_Jjpfhly5fvA6ICf4zrYCQ (extraction code: gaic)

Perform the following steps on all nodes

Upload the installation package to every node

[root@master ~]# unzip k8s-offline-install.zip
[root@master ~]# cd k8s-images
# Install Docker
[root@master k8s-images]# yum -y localinstall docker-ce*
# Point Docker at a registry mirror, or use the Alibaba Cloud Docker registry accelerator
[root@master k8s-images]# curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://a58c8480.m.daocloud.io
# Start Docker and enable it at boot
[root@master k8s-images]# systemctl start docker
[root@master k8s-images]# systemctl enable docker

Set the kernel routing parameters so that kubeadm does not report a routing warning

[root@master k8s-images]# cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

[root@master k8s-images]# sysctl --system

* Applying /usr/lib/sysctl.d/00-system.conf ...

net.bridge.bridge-nf-call-ip6tables = 0

net.bridge.bridge-nf-call-iptables = 0

net.bridge.bridge-nf-call-arptables = 0

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

* Applying /etc/sysctl.conf ...

Disable swap

[root@master k8s-images]# swapoff -a

# Comment out the swap line in /etc/fstab so that swap stays off after a reboot

[root@master k8s-images]# sed -i 's/.*swap/#&/' /etc/fstab
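The sed command above prefixes another `#` every time it is run, and it also matches lines that are already comments. A sketch of a variant that can be re-run safely; the function name `comment_out_swap` is hypothetical, and the pattern assumes the usual fstab layout where `swap` appears as a whitespace-separated field:

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Lines that already start with '#' are skipped, so repeated runs do not stack
# comment markers. sed keeps the pre-edit file as <file>.bak.
comment_out_swap() {
    local fstab_file="$1"
    sed -i.bak -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab_file"
}

# On a real node, as root:
#   swapoff -a
#   comment_out_swap /etc/fstab
```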

Import the images

# Unpack the Docker images
[root@master k8s-images]# unzip docker_images.zip

# If extraction fails, repair the archive with the zip command

# Example: zip -F (or -FF) file_old.zip --out file_new.zip, then try extracting again

# Load the images
docker load < /root/k8s-images/docker_images/etcd-amd64_v3.1.10.tar
docker load < /root/k8s-images/docker_images/flannel_v0.9.1-amd64.tar
docker load < /root/k8s-images/docker_images/k8s-dns-dnsmasq-nanny-amd64_v1.14.7.tar
docker load < /root/k8s-images/docker_images/k8s-dns-kube-dns-amd64_1.14.7.tar
docker load < /root/k8s-images/docker_images/k8s-dns-sidecar-amd64_1.14.7.tar
docker load < /root/k8s-images/docker_images/kube-apiserver-amd64_v1.9.0.tar
docker load < /root/k8s-images/docker_images/kube-controller-manager-amd64_v1.9.0.tar
docker load < /root/k8s-images/docker_images/kube-scheduler-amd64_v1.9.0.tar
docker load < /root/k8s-images/docker_images/kube-proxy-amd64_v1.9.0.tar
docker load < /root/k8s-images/docker_images/pause-amd64_3.0.tar
docker load < /root/k8s-images/docker_images/kubernetes-dashboard_v1.8.1.tar
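Typing eleven load commands by hand is error-prone, so the same step can be written as a loop over the extracted directory. This is only a sketch: the function name `load_all_images` is hypothetical, and it uses `docker load -i`, which reads the archive from a file instead of stdin:

```shell
# Hypothetical helper: load every .tar archive in a directory into Docker.
# Stops at the first archive that fails to load.
load_all_images() {
    local image_dir="$1" tarball
    for tarball in "$image_dir"/*.tar; do
        [ -e "$tarball" ] || continue   # directory has no .tar files
        echo "loading $tarball"
        docker load -i "$tarball" || return 1
    done
}

# On a node:
#   load_all_images /root/k8s-images/docker_images
```

Running `docker images` afterwards shows whether all images arrived.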

Install the kubelet, kubeadm, and kubectl packages

[root@master k8s-images]# yum -y localinstall socat-1.7.3.2-2.el7.x86_64.rpm kubernetes-cni-0.6.0-0.x86_64.rpm kubelet-1.9.9-9.x86_64.rpm kubectl-1.9.0-0.x86_64.rpm kubeadm-1.9.0-0.x86_64.rpm
[root@master k8s-images]# systemctl enable kubelet

Set up SSH trust between the master and the nodes

[root@master k8s-images]# ssh-keygen -t rsa -f ~/.ssh/id_rsa -N "" -q
[root@master k8s-images]# ssh-copy-id node1
[root@master k8s-images]# ssh-copy-id node2

kubelet's default cgroup driver differs from Docker's: Docker defaults to cgroupfs, while kubelet defaults to systemd. Switch kubelet to cgroupfs so the two match.

[root@master k8s-images]# cp -a /etc/systemd/system/kubelet.service.d/10-kubeadm.conf /etc/systemd/system/kubelet.service.d/10-kubeadm.conf_bak
[root@master k8s-images]# sed -i "s/systemd/cgroupfs/g" /etc/systemd/system/kubelet.service.d/10-kubeadm.conf
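Because the sed above replaces every occurrence of "systemd" in the file, it is worth confirming afterwards that the two drivers really agree. A sketch, assuming the drop-in carries the setting as a `--cgroup-driver=<name>` argument (which is what the substitution implies); the function name `kubelet_cgroup_driver` is hypothetical:

```shell
# Hypothetical helper: print the value of --cgroup-driver found in a kubelet
# systemd drop-in file (first occurrence only).
kubelet_cgroup_driver() {
    local dropin_file="$1"
    sed -n 's/.*--cgroup-driver=\([a-z]*\).*/\1/p' "$dropin_file" | head -n 1
}

# On a node, compare it with what Docker reports:
#   docker_driver=$(docker info --format '{{.CgroupDriver}}')
#   kubelet_driver=$(kubelet_cgroup_driver /etc/systemd/system/kubelet.service.d/10-kubeadm.conf)
#   [ "$docker_driver" = "$kubelet_driver" ] || echo "mismatch: $docker_driver vs $kubelet_driver"
```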

Reload the systemd configuration

[root@master k8s-images]# systemctl daemon-reload
# Reset the environment
[root@master k8s-images]# kubeadm reset

# Initialize the master
[root@master k8s-images]# kubeadm init --kubernetes-version=v1.9.0 --pod-network-cidr=10.244.0.0/16

kubeadm join --token b209aa.249f0b51592e58bb 192.168.94.11:6443 --discovery-token-ca-cert-hash sha256:b3537b3ecf6e7febd8fec7b4a635c740a0bd52fe2fb1606b026a938d52fa9e60

# Save the kubeadm join --token ... command printed above; the nodes will need it shortly. The token itself can be listed on the master with kubeadm token list
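`kubeadm token list` shows tokens but not the `--discovery-token-ca-cert-hash` value. That hash is the SHA-256 of the cluster CA's public key, so it can be recomputed from the CA certificate with the openssl pipeline from the kubeadm documentation. A sketch; the wrapper name `ca_cert_hash` is hypothetical, and /etc/kubernetes/pki/ca.crt is assumed to be where kubeadm placed the CA certificate:

```shell
# Hypothetical helper: print the discovery hash for a CA certificate in the
# "sha256:<hex>" form that kubeadm join expects.
ca_cert_hash() {
    local ca_cert="$1"
    openssl x509 -pubkey -noout -in "$ca_cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //; s/^/sha256:/'
}

# On the master:
#   ca_cert_hash /etc/kubernetes/pki/ca.crt
```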

Configure the environment variable

[root@master k8s-images]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
[root@master k8s-images]# . ~/.bash_profile
# Test
[root@master k8s-images]# kubectl version

Install a pod network. flannel, calico, weave, and macvlan are all options; flannel is used here

[root@master k8s-images]# kubectl create -f kube-flannel.yml
# Install the dashboard
[root@master k8s-images]# kubectl apply -f kubernetes-dashboard.yaml
# Check that everything deployed correctly
[root@master k8s-images]# kubectl get pods --all-namespaces

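On the worker nodes:

The token in the join command printed by kubeadm init is valid for only 24 hours. Once it has expired, generate a new one with kubeadm token create --print-join-command

# Use the join command printed on the master earlier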

[root@node1 k8s-images]# kubeadm join --token b209aa.249f0b51592e58bb 192.168.94.11:6443 --discovery-token-ca-cert-hash sha256:b3537b3ecf6e7febd8fec7b4a635c740a0bd52fe2fb1606b026a938d52fa9e60

Verify on the master:

[root@master k8s-images]# kubectl get nodes
NAME      STATUS    ROLES     AGE       VERSION
master    Ready     master    1h        v1.9.0
node1     Ready     <none>    58m       v1.9.0
node2     Ready     <none>    58m       v1.9.0
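Right after joining, nodes usually show NotReady until the network pods are up. A small sketch for spotting the stragglers; the filter name `not_ready_nodes` is hypothetical, and it relies on STATUS being the second column of `kubectl get nodes` output, as in the listing above:

```shell
# Hypothetical filter: read `kubectl get nodes --no-headers` output on stdin
# and print the name of every node whose STATUS is not exactly "Ready".
# A cordoned node ("Ready,SchedulingDisabled") is reported as well.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# On the master:
#   kubectl get nodes --no-headers | not_ready_nodes
```

Empty output means every node reports Ready.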

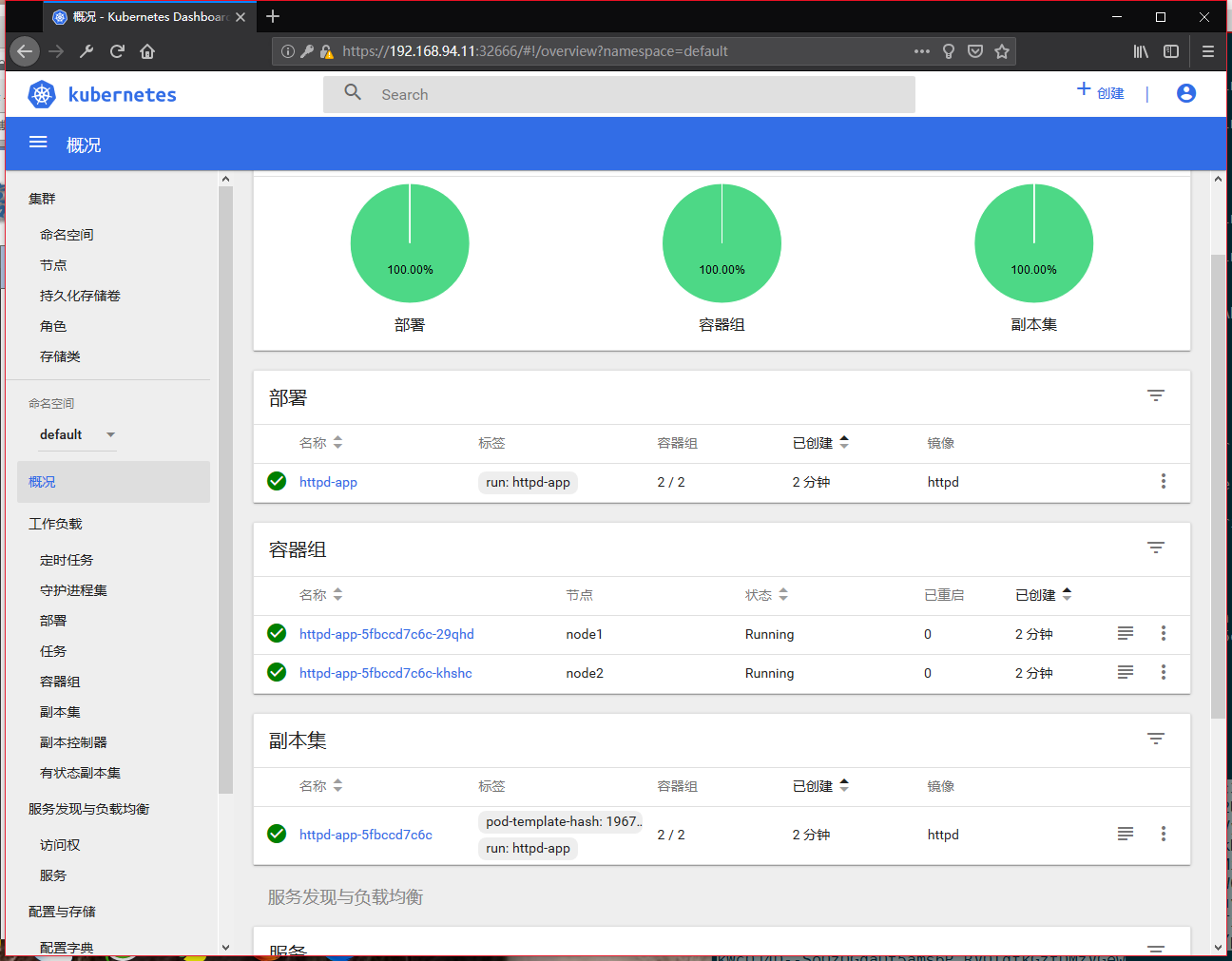

Test the cluster

[root@master k8s-images]# kubectl run httpd-app --image=httpd --replicas=2
[root@master k8s-images]# kubectl get deployment
NAME        DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
httpd-app   2         2         2            2           58m
# Check the pods; one runs on node1 and the other on node2
[root@master k8s-images]# kubectl get pods
NAME                         READY     STATUS    RESTARTS   AGE
httpd-app-5fbccd7c6c-b4pzp   1/1       Running   0          58m
httpd-app-5fbccd7c6c-pjmx2   1/1       Running   0          58m
[root@master k8s-images]# kubectl get pods -o wide
NAME                         READY     STATUS    RESTARTS   AGE       IP           NODE
httpd-app-5fbccd7c6c-b4pzp   1/1       Running   0          59m       10.244.1.2   node1
httpd-app-5fbccd7c6c-pjmx2   1/1       Running   0          59m       10.244.2.2   node2
# The resource created is not a Service, so kube-proxy is not involved
# Access the pods directly to test
[root@master k8s-images]# curl 10.244.1.2
<html><body><h1>It works!</h1></body></html>
[root@master k8s-images]# curl 10.244.2.2
<html><body><h1>It works!</h1></body></html>

Create a user

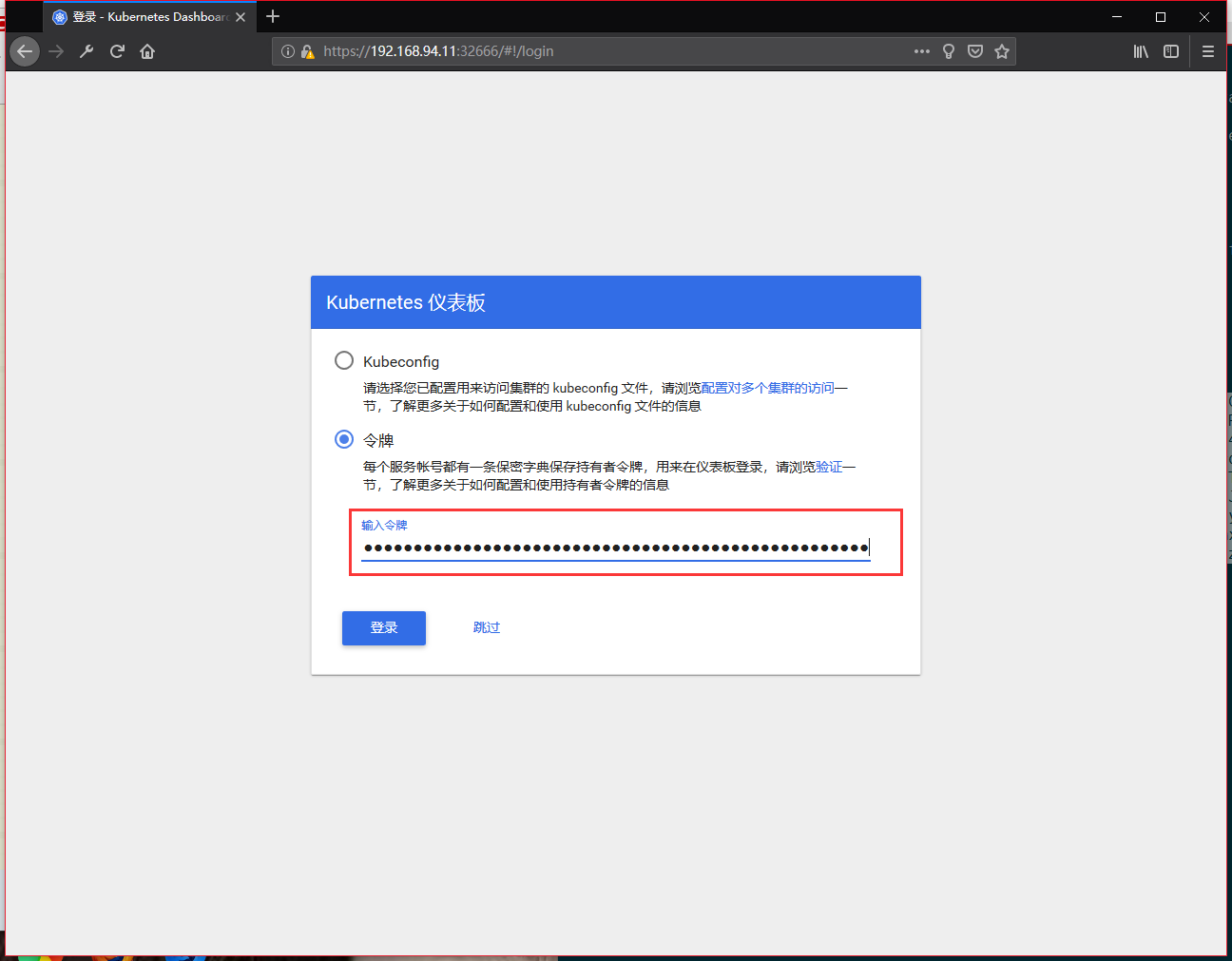

# Create the service account in the kube-system namespace
[root@master k8s-images]# kubectl create -f admin-user.yaml
# By default, kubeadm has already created the admin role when it set up the cluster, so we only need to bind it
[root@master k8s-images]# kubectl create -f admin-user-role-binding.yaml
# Get the new user's token, which is needed to log in to the dashboard
[root@master k8s-images]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')
# The output looks like this
Name: admin-user-token-j46b8
Namespace: kube-system
Labels: <none>
Annotations: kubernetes.io/service-account.name=admin-user
             kubernetes.io/service-account.uid=0915f27c-d0a2-11e8-bf22-000c29b353bc
Type: kubernetes.io/service-account-token
Data
====
ca.crt: 1025 bytes
namespace: 11 bytes
token: eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWo0NmI4Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIwOTE1ZjI3Yy1kMGEyLTExZTgtYmYyMi0wMDBjMjliMzUzYmMiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.dpKrpQPNeixzyFOBRGOaCAnLzORoM300FRfXa0bZEByTEUz5o7Ti9oKVGNOaNOIqsDXJ_HU16DWbkYR58Dnu6UaIy_Ya1_Ro5zRFHPUUkc1PSfCJxIMOxRas4irKy8pL9QMY6evQCtQDKzrVF7xNmUIyxCKtm9d7h7RKxL7xADODz7Sr7HgPOaMtu6MyxHT1EjoXwlXbl4WylTquuMfj1EjXBU1E_6ScUtkJ2yX_MkTNshAWbpFufUW4cTgQ1GumabXPhTmnr4r1HDRXVbJNDd_gGnj_2GwM72YEee-W-iq1dJtDTrbwGgG3mbkiJdkze22F-Ec_twGjfuYuR04Rmw
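Only the `token:` line of that output is needed for the login form. A sketch of a filter that prints just the token so it can be copied without the surrounding fields; the name `extract_token` is hypothetical:

```shell
# Hypothetical filter: read `kubectl describe secret` output on stdin and
# print only the value of the "token:" field.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# On the master:
#   kubectl -n kube-system describe secret \
#       $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}') \
#       | extract_token
```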

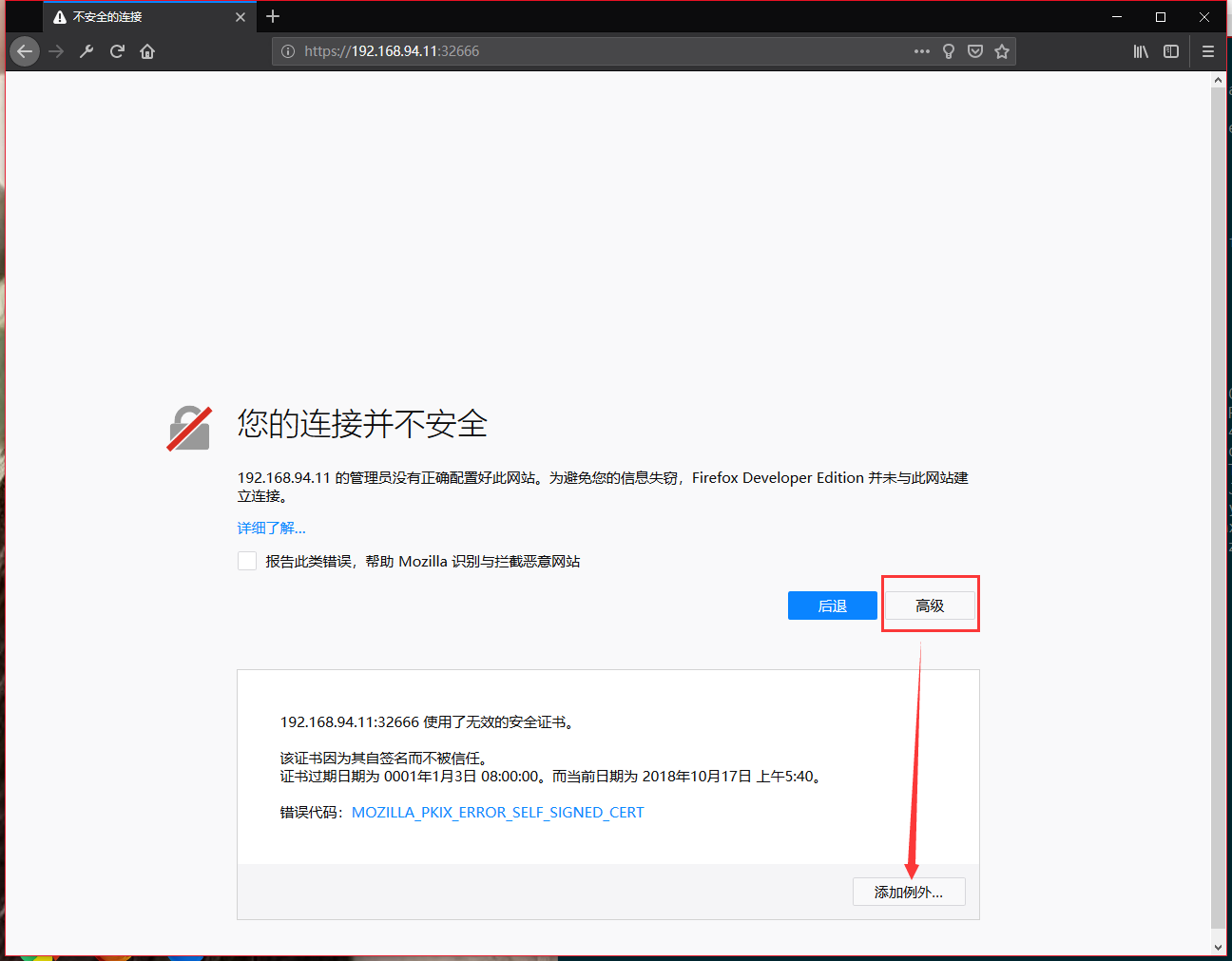

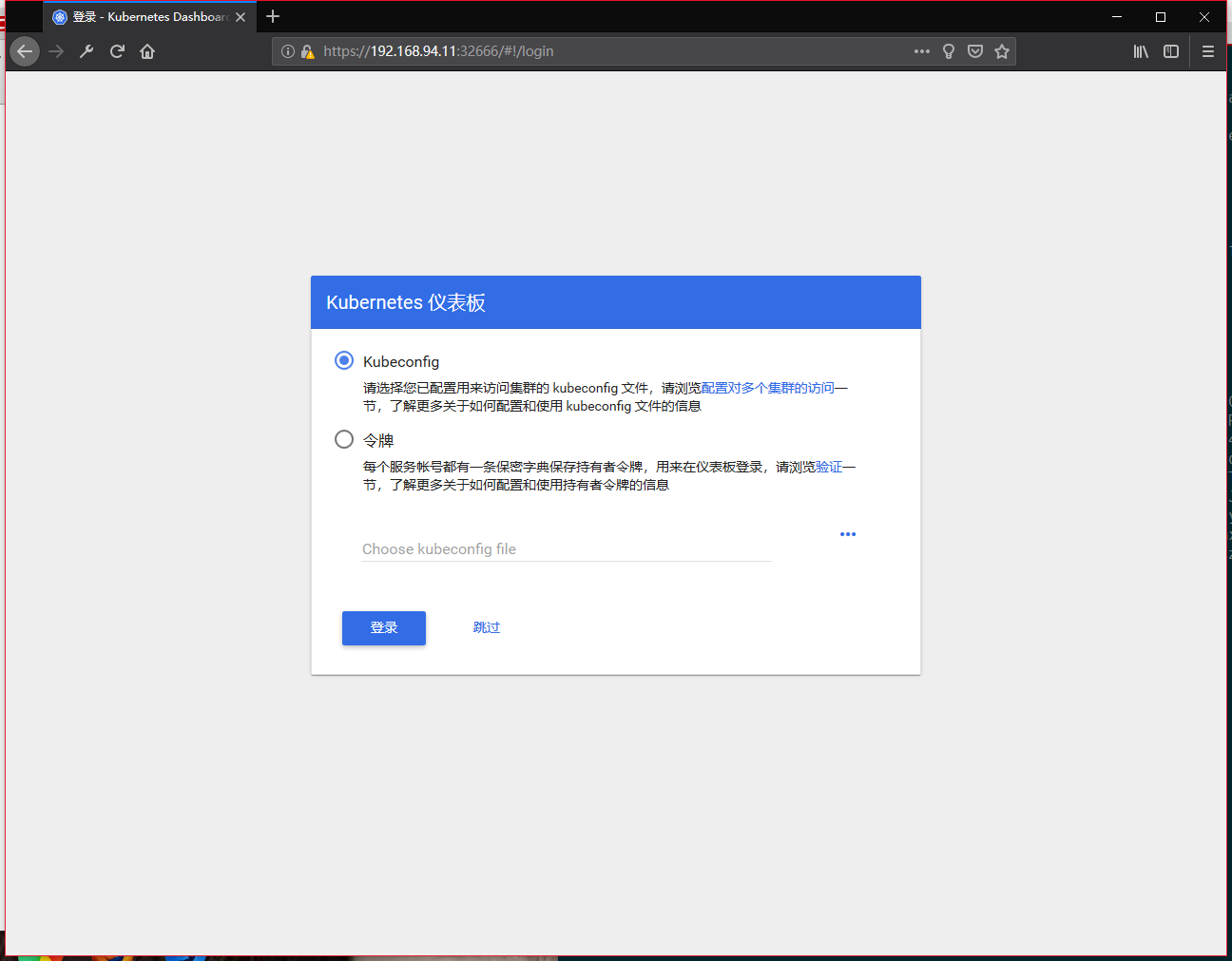

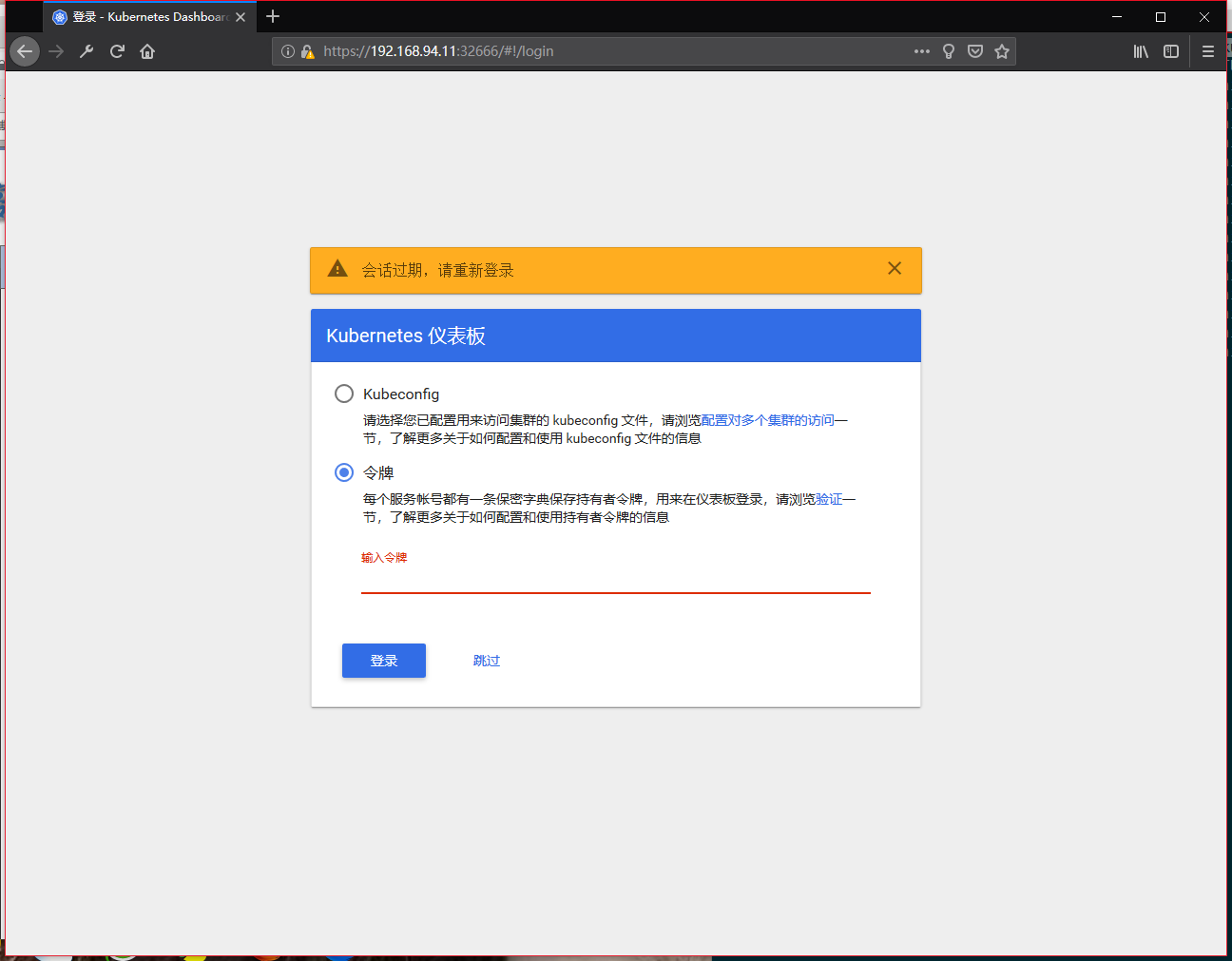

Access kubernetes-dashboard

Open https://master_ip:32666 in Firefox
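Port 32666 presumably comes from the NodePort defined in the bundled kubernetes-dashboard.yaml. If a different manifest is used, the port can be read back from the Service. A sketch, assuming the Service is named `kubernetes-dashboard` and lives in `kube-system` (as in the standard dashboard manifest); the function name `dashboard_url` is hypothetical:

```shell
# Hypothetical helper: build the dashboard URL from the NodePort of the
# kubernetes-dashboard Service (assumed name and namespace).
dashboard_url() {
    local master_ip="$1" node_port
    node_port=$(kubectl -n kube-system get service kubernetes-dashboard \
        -o jsonpath='{.spec.ports[0].nodePort}') || return 1
    echo "https://${master_ip}:${node_port}"
}

# On the master:
#   dashboard_url 192.168.94.11
```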

Enter the token obtained above

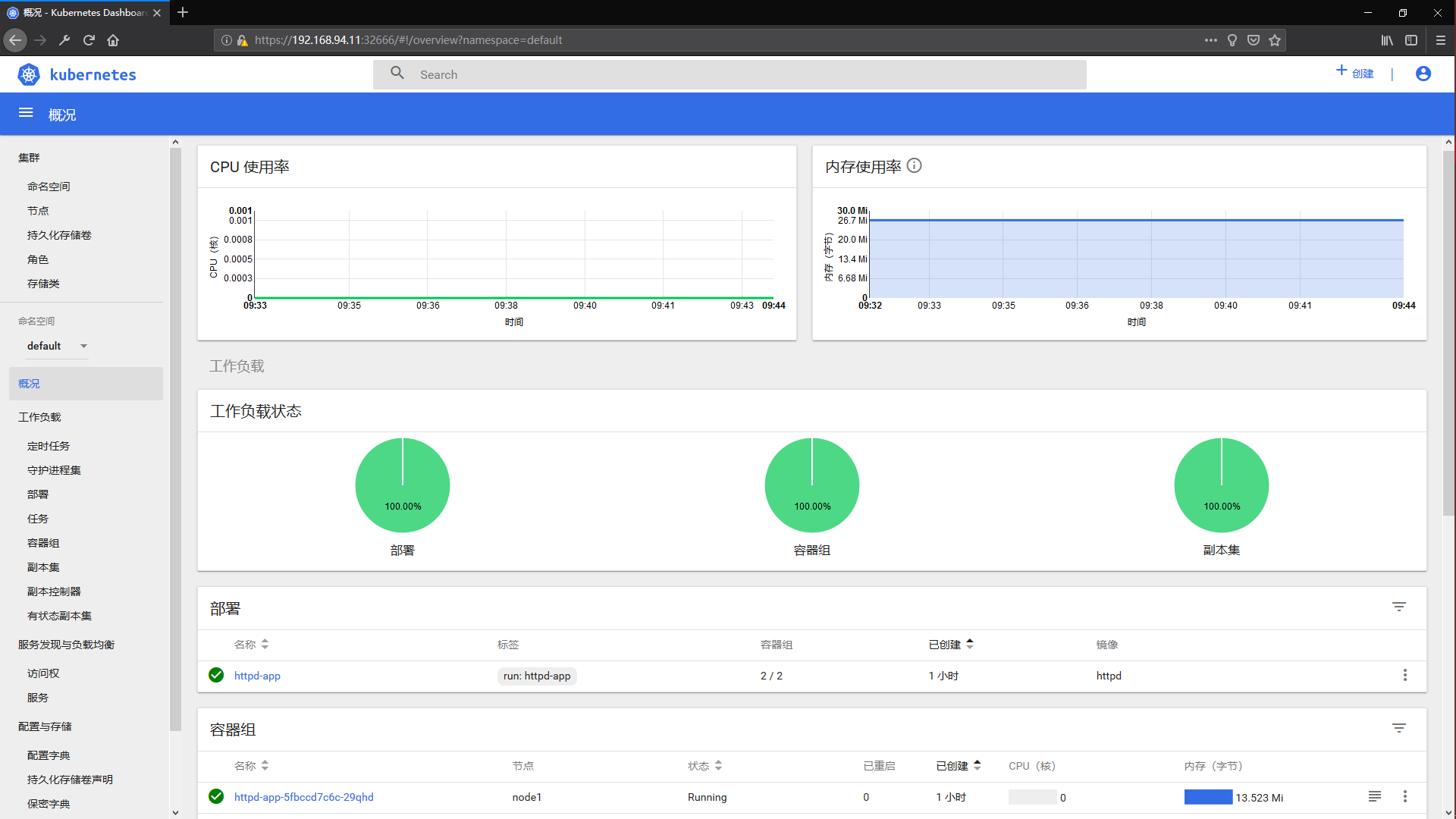

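After logging in

Integrate Heapster

Heapster is a monitoring and performance analysis tool for container clusters, with native support for Kubernetes and CoreOS

Heapster supports several storage backends; this example uses InfluxDB. Run the following commands: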

[root@master k8s-images]# mkdir heapster
[root@master k8s-images]# cd heapster/
[root@master heapster]# wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/grafana.yaml
[root@master heapster]# wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/heapster.yaml
[root@master heapster]# wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/influxdb.yaml
[root@master heapster]# wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/rbac/heapster-rbac.yaml

In each YAML file, change the image values by replacing every occurrence of k8s.gcr.io with registry.cn-hangzhou.aliyuncs.com/google_containers
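The replacement can be done in one pass with sed rather than by editing each file. A sketch; the function name `rewrite_image_registry` is hypothetical, and it rewrites every `*.yaml` file in the directory it is given:

```shell
# Hypothetical helper: replace k8s.gcr.io with the Alibaba Cloud mirror in
# every .yaml file of a directory. The temporary .bak copy made by sed is
# removed again so the directory contains only manifests.
rewrite_image_registry() {
    local manifest_dir="$1" manifest
    for manifest in "$manifest_dir"/*.yaml; do
        [ -e "$manifest" ] || continue   # directory has no .yaml files
        sed -i.bak 's#k8s\.gcr\.io#registry.cn-hangzhou.aliyuncs.com/google_containers#g' "$manifest" \
            && rm -f "$manifest.bak"
    done
}

# From /root/k8s-images:
#   rewrite_image_registry heapster
#   grep -n 'image:' heapster/*.yaml   # confirm the result
```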

Deploy Heapster

[root@master heapster]# cd ..
[root@master k8s-images]# kubectl create -f heapster/
# Check the status; once every pod is Running, the deployment is done
[root@master k8s-images]# kubectl get pods --namespace=kube-system

Refresh the browser to see the result

Retrieve the token again

[root@master k8s-images]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

CPU and memory information now appears