Create three files:

- XJTRunner
- XJTReducer
- XJTMapper

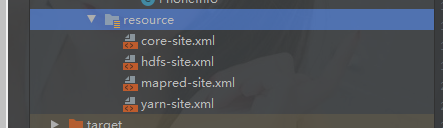

Add the HDFS connection configuration files to the resources directory.
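A quick way to confirm that the connection files actually ended up on the classpath (in a Maven-style layout, `src/main/resources` is copied there at build time). This is a minimal sketch that assumes the files are the standard Hadoop client configs `core-site.xml` and `hdfs-site.xml`, which the post does not name. It uses only `ClassLoader.getResource`, so it runs without any Hadoop dependency; `ConfigOnClasspathCheck` is a hypothetical class name.

```java
import java.util.ArrayList;
import java.util.List;

class ConfigOnClasspathCheck {

    // Returns the names of the resources that the given class loader cannot find.
    static List<String> missingResources(ClassLoader loader, String... names) {
        List<String> missing = new ArrayList<>();
        for (String name : names) {
            if (loader.getResource(name) == null) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Assumed file names: the post only says "HDFS connection files"
        List<String> missing = missingResources(
                ConfigOnClasspathCheck.class.getClassLoader(),
                "core-site.xml", "hdfs-site.xml");
        if (missing.isEmpty()) {
            System.out.println("All config files found on the classpath");
        } else {
            System.out.println("Missing from classpath: " + missing);
        }
    }
}
```

Running this from the same module as `XJTRunner` shows whether the job will pick up the cluster settings or silently fall back to defaults.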

The full code:

XJTRunner class

    package com.ke.xjt;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

    public class XJTRunner {
        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration(true);
            // HBase access requires the ZooKeeper quorum
            conf.set("hbase.zookeeper.quorum", "node04,node02,node03");
            // Tell the framework the job is submitted from a different platform (Windows)
            conf.set("mapreduce.app-submission.cross-platform", "true");
            // Run the job in local mode
            conf.set("mapreduce.framework.name", "local");

            // Create the Job object
            Job job = Job.getInstance(conf);
            job.setJarByClass(XJTRunner.class);

            // Mapper class and its output key/value types
            job.setMapperClass(XJTMapper.class);
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            // Reducer class; "wordcount" is the target HBase table.
            // initTableReducerJob also sets TableOutputFormat and the job's
            // output key/value classes, so they are not set again here.
            TableMapReduceUtil.initTableReducerJob("wordcount", XJTReducer.class, job,
                    null, null, null, null, false);

            // HDFS directory that holds the input data
            FileInputFormat.addInputPath(job, new Path("/data/godno"));

            // Exit with a non-zero status if the job fails
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
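The runner wires together three phases. The plain-Java sketch below simulates them on made-up input so the expected result is easy to see: map emits `(word, 1)` for every non-empty whitespace-separated token, the shuffle groups values by word (a reducer receives keys in sorted order, mimicked here with a `TreeMap`), and reduce sums each group. It is an illustration only, with no Hadoop or HBase involved; `WordCountFlow` is a hypothetical class name.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class WordCountFlow {

    // Map phase: one (word, 1) pair per non-empty token
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String s : line.split("\\s+")) {
            if (!s.isEmpty()) {
                out.add(new SimpleEntry<>(s, 1));
            }
        }
        return out;
    }

    // Shuffle phase: group every emitted value by its key, keys kept sorted
    static TreeMap<String, List<Integer>> shuffle(List<String> lines) {
        TreeMap<String, List<Integer>> groups = new TreeMap<>();
        for (String line : lines) {
            for (Map.Entry<String, Integer> kv : map(line)) {
                groups.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
            }
        }
        return groups;
    }

    // Reduce phase: sum the values of each group
    static TreeMap<String, Integer> run(List<String> lines) {
        TreeMap<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : shuffle(lines).entrySet()) {
            int num = 0;
            for (int v : group.getValue()) {
                num += v;
            }
            counts.put(group.getKey(), num);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> input = List.of("hello hbase", "hello  hadoop");
        System.out.println(run(input)); // prints {hadoop=1, hbase=1, hello=2}
    }
}
```

Each entry of the result corresponds to one HBase row the reducer would write: row key = word, column `cf:ct` = count.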

XJTMapper class

    package com.ke.xjt;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    public class XJTMapper extends Mapper<LongWritable, Text, Text, IntWritable> {
        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Debug output: key is the byte offset of the line, value is the line itself
            System.out.println("key - - -" + key);
            System.out.println("value - - -" + value);
            System.out.println("context - - -" + context);

            // Split on runs of whitespace and skip empty tokens, which would
            // otherwise become empty HBase row keys in the reducer
            for (String s : value.toString().split("\\s+")) {
                if (!s.isEmpty()) {
                    context.write(new Text(s), new IntWritable(1));
                }
            }
        }
    }
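A pitfall when tokenizing with `split(" ")`: leading or repeated spaces produce empty tokens, and an empty word would end up as an empty HBase row key, which `Put` rejects with an `IllegalArgumentException`. Splitting on the regex `\s+` (written `"\\s+"` in Java source, matching any run of whitespace including tabs) and skipping empty strings avoids this. A small stdlib comparison, using the hypothetical class name `TokenizeDemo`:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class TokenizeDemo {

    // Naive tokenizing on single spaces
    static List<String> splitOnSpace(String line) {
        return Arrays.asList(line.split(" "));
    }

    // Tokenize on runs of whitespace and drop empty strings
    // (a leading space still yields one empty first element from split)
    static List<String> splitOnWhitespace(String line) {
        List<String> tokens = new ArrayList<>();
        for (String s : line.split("\\s+")) {
            if (!s.isEmpty()) {
                tokens.add(s);
            }
        }
        return tokens;
    }

    public static void main(String[] args) {
        String line = " hello  world";
        System.out.println(splitOnSpace(line));      // prints [, hello, , world]
        System.out.println(splitOnWhitespace(line)); // prints [hello, world]
    }
}
```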

XJTReducer class

    package com.ke.xjt;

    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.mapreduce.TableReducer;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;

    import java.io.IOException;

    public class XJTReducer extends TableReducer<Text, IntWritable, ImmutableBytesWritable> {
        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // Debug output
            System.out.println("XJTReducer-key - - -" + key);
            System.out.println("XJTReducer-value - - -" + values);
            System.out.println("XJTReducer-context - - -" + context);

            // Sum the 1s emitted by the mapper for this word
            // (plain assignment would only keep the last value)
            int num = 0;
            for (IntWritable value : values) {
                num += value.get();
            }

            // Row key = word; column cf:ct = count, stored as a 4-byte int
            Put put = new Put(Bytes.toBytes(key.toString()));
            put.addColumn(Bytes.toBytes("cf"), Bytes.toBytes("ct"), Bytes.toBytes(num));
            // TableOutputFormat ignores the key, so null is acceptable here
            context.write(null, put);
        }
    }
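`Bytes.toBytes(num)` stores the count as a 4-byte big-endian int rather than as text, so in the HBase shell a count of 2 typically appears in `scan 'wordcount'` output as `\x00\x00\x00\x02`, and a client reading it back should decode it with `Bytes.toInt`. The stdlib sketch below reproduces that byte layout with `ByteBuffer` (big-endian by default) to show the round trip; it does not call HBase itself, and `CountEncoding` is a hypothetical class name.

```java
import java.nio.ByteBuffer;

class CountEncoding {

    // Same layout as HBase Bytes.toBytes(int): 4 bytes, big-endian
    static byte[] encode(int count) {
        return ByteBuffer.allocate(Integer.BYTES).putInt(count).array();
    }

    // Inverse of encode, matching Bytes.toInt for a 4-byte value
    static int decode(byte[] value) {
        return ByteBuffer.wrap(value).getInt();
    }

    // Hex-escape every byte for display
    static String toEscaped(byte[] value) {
        StringBuilder sb = new StringBuilder();
        for (byte b : value) {
            sb.append(String.format("\\x%02X", b & 0xFF));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        byte[] stored = encode(2);
        System.out.println(toEscaped(stored)); // prints \x00\x00\x00\x02
        System.out.println(decode(stored));    // prints 2
    }
}
```

If readable values in the shell matter more than compactness, writing `Bytes.toBytes(String.valueOf(num))` is a common alternative, at the cost of parsing the string on read.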

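Note: the `wordcount` table must exist in HBase before the job runs. Because the reducer writes to column `cf:ct`, the table needs a column family named `cf`, e.g. in the HBase shell: `create 'wordcount', 'cf'`.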

Code: https://gitee.com/Xiaokeworksveryhard/big-data.git