1.1 Testing a Hadoop Cluster with Benchmark Programs

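Run benchmark jobs, then judge the cluster's performance from their results together with resource-monitoring data, and tune the configuration accordingly. This is best done right after the cluster has been built. Running IO-intensive benchmarks also helps uncover failing hard disks.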

1.1.1 Hadoop Benchmark Programs

(1) Testing IO read/write performance

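Hadoop ships with its own benchmark programs. TestDFSIO is in hadoop-mapreduce-client-jobclient-2.8.3-tests.jar under D:\hadoop\hadoop-2.8.3\share\hadoop\mapreduce. Running it without arguments prints a "Missing arguments" message followed by the usage. Add the arguments it lists to run the IO read/write test: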

D:\hadoop\hadoop-2.8.3\share\hadoop\mapreduce>hadoop jar hadoop-mapreduce-client-jobclient-2.8.3-tests.jar TestDFSIO

20/03/01 21:59:12 INFO fs.TestDFSIO: TestDFSIO.1.8

Missing arguments.

Usage: TestDFSIO [genericOptions] -read [-random | -backward | -skip [-skipSize Size]] | -write | -append | -truncate | -clean [-compression codecClassName] [-nrFiles N] [-size Size[B|KB|MB|GB|TB]] [-resFile resultFileName] [-bufferSize Bytes] [-rootDir]
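For example, using only flags from this usage message, you could run a write test (TestDFSIO -write -nrFiles 10 -size 128MB), then a read test with the same options (-read), and finally -clean to remove the test files. In the summary, TestDFSIO prints figures such as "Throughput mb/sec" and "Average IO rate mb/sec". As we understand its result analysis, throughput is the total megabytes processed divided by the summed task time. The average IO rate is the mean of the per-file rates.

The Java sketch below reproduces that arithmetic under those assumptions. It shows why one slow file lowers throughput more than it lowers the average rate. DfsioMetrics and FileStat are hypothetical names that are not part of Hadoop, and MB is assumed to be 2^20 bytes.

```java
import java.util.List;

/**
 * Sketch (assumed definitions, not Hadoop code) of TestDFSIO-style summary
 * metrics computed from per-file results reported by map tasks.
 */
public class DfsioMetrics {
    static final double MB = 1024.0 * 1024.0; // assumption: MB = 2^20 bytes

    /** Hypothetical per-file result: bytes processed and elapsed milliseconds. */
    static final class FileStat {
        final long bytes;
        final long millis;

        FileStat(long bytes, long millis) {
            this.bytes = bytes;
            this.millis = millis;
        }

        /** Rate of this single file in MB/s. */
        double mbPerSec() {
            return (bytes / MB) / (millis / 1000.0);
        }
    }

    /** Total MB divided by the summed task time in seconds. */
    static double throughput(List<FileStat> stats) {
        long bytes = 0;
        long millis = 0;
        for (FileStat s : stats) {
            bytes += s.bytes;
            millis += s.millis;
        }
        return (bytes / MB) / (millis / 1000.0);
    }

    /** Arithmetic mean of the per-file MB/s rates. */
    static double averageIoRate(List<FileStat> stats) {
        double sum = 0;
        for (FileStat s : stats) {
            sum += s.mbPerSec();
        }
        return sum / stats.size();
    }

    public static void main(String[] args) {
        // Two illustrative 128 MB files: one took 2 s, the other 8 s.
        List<FileStat> stats = List.of(
                new FileStat(128L * 1024 * 1024, 2000),
                new FileStat(128L * 1024 * 1024, 8000));
        System.out.printf("Throughput mb/sec: %.2f%n", throughput(stats));
        System.out.printf("Average IO rate mb/sec: %.2f%n", averageIoRate(stats));
    }
}
```

With these inputs, throughput is 256 MB / 10 s = 25.6 MB/s. The average IO rate is (64 + 16) / 2 = 40 MB/s. A large gap between the two figures hints that some disks or nodes are much slower than the rest.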

(2) Benchmarking HDFS with a TeraSort total sort

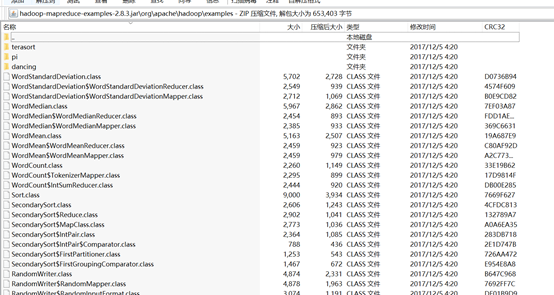

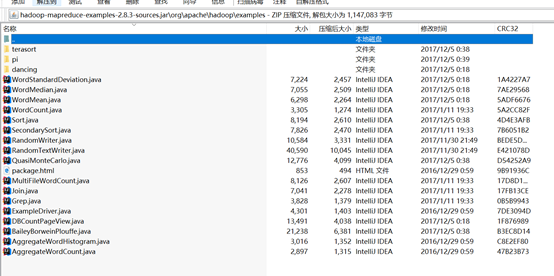

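TeraSort is in hadoop-mapreduce-examples-2.8.3.jar under D:\hadoop\hadoop-2.8.3\share\hadoop\mapreduce. Before sorting, generate random input data with the TeraGen class, then run a total sort on it with the TeraSort class. The source code is in hadoop-mapreduce-examples-2.8.3-sources.jar under D:\hadoop\hadoop-2.8.3\share\hadoop\mapreduce\sources.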

1) Generate 10 trillion rows of random data

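TeraGen is a map-only job, and you specify how many rows of random data it generates. In the command below, mapreduce.job.maps=1000 sets the number of map tasks, 10t means 10 trillion rows, and random-data is the output directory. Each row is 100 bytes (see the record format in the class Javadoc), so 10 trillion rows come to about 1 PB. Run the following command to generate the data: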

hadoop jar hadoop-mapreduce-examples-2.8.3.jar teragen -D mapreduce.job.maps=1000 10t random-data
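The 10t argument is a row count with a decimal suffix: k, m, b and t stand for 10^3, 10^6, 10^9 and 10^12. The parseHumanLong method in the TeraGen source expands this suffix.

The standalone sketch below mirrors that parsing using long arithmetic. It then multiplies the row count by the 100-byte record size taken from the class Javadoc (10 + 2 + 32 + 4 + 48 + 4 bytes). The sketch also shows why the multiplier must be computed as a long: the int expression 1000 * 1000 * 1000 * 1000 overflows. TeraGenSize is a hypothetical class name used only for illustration.

```java
/**
 * Sketch of TeraGen-style row-count parsing ({k,m,b,t} suffixes) using
 * long arithmetic, plus the data volume at 100 bytes per row.
 * TeraGenSize is a hypothetical name, not a Hadoop class.
 */
public class TeraGenSize {
    // 10-byte key + 2 + 32-byte rowid + 4 + 48-byte filler + 4 = 100 bytes per row
    static final long ROW_BYTES = 10 + 2 + 32 + 4 + 48 + 4;

    /** Expands "10t" to 10 * 10^12, "5k" to 5000; no suffix means base 1. */
    static long parseHumanLong(String str) {
        char tail = str.charAt(str.length() - 1);
        long base;
        switch (tail) {
            case 't': base = 1000L * 1000 * 1000 * 1000; break; // long literal avoids int overflow
            case 'b': base = 1000L * 1000 * 1000; break;
            case 'm': base = 1000L * 1000; break;
            case 'k': base = 1000L; break;
            default:  base = 1;
        }
        if (base != 1) {
            str = str.substring(0, str.length() - 1); // drop the suffix character
        }
        return Long.parseLong(str) * base;
    }

    public static void main(String[] args) {
        for (String arg : new String[] {"10k", "10m", "10b", "10t"}) {
            long rows = parseHumanLong(arg);
            System.out.printf("%s -> %,d rows, %,d bytes%n", arg, rows, rows * ROW_BYTES);
        }
        // With int literals only, the 't' multiplier wraps around:
        System.out.println(1000 * 1000 * 1000 * 1000); // prints -727379968
    }
}
```

For 10t, this gives 10^15 bytes (about 1 PB). By comparison, 10m rows would be 1 GB.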

The TeraGen class is defined as follows:

    /**
     * Licensed to the Apache Software Foundation (ASF) under one
     * or more contributor license agreements.  See the NOTICE file
     * distributed with this work for additional information
     * regarding copyright ownership.  The ASF licenses this file
     * to you under the Apache License, Version 2.0 (the
     * "License"); you may not use this file except in compliance
     * with the License.  You may obtain a copy of the License at
     *
     *     http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */

    package org.apache.hadoop.examples.terasort;

    import java.io.DataInput;
    import java.io.DataOutput;
    import java.io.IOException;
    import java.util.ArrayList;
    import java.util.List;
    import java.util.zip.Checksum;

    import org.apache.commons.logging.Log;
    import org.apache.commons.logging.LogFactory;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.io.Writable;
    import org.apache.hadoop.io.WritableUtils;
    import org.apache.hadoop.mapreduce.Cluster;
    import org.apache.hadoop.mapreduce.Counter;
    import org.apache.hadoop.mapreduce.InputFormat;
    import org.apache.hadoop.mapreduce.InputSplit;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.JobContext;
    import org.apache.hadoop.mapreduce.MRJobConfig;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.RecordReader;
    import org.apache.hadoop.mapreduce.TaskAttemptContext;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.PureJavaCrc32;
    import org.apache.hadoop.util.Tool;
    import org.apache.hadoop.util.ToolRunner;

    /**
     * Generate the official GraySort input data set.
     * The user specifies the number of rows and the output directory and this
     * class runs a map/reduce program to generate the data.
     * The format of the data is:
     * <ul>
     * <li>(10 bytes key) (constant 2 bytes) (32 bytes rowid)
     *     (constant 4 bytes) (48 bytes filler) (constant 4 bytes)
     * <li>The rowid is the right justified row id as a hex number.
     * </ul>
     *
     * <p>
     * To run the program:
     * <b>bin/hadoop jar hadoop-*-examples.jar teragen 10000000000 in-dir</b>
     */
    public class TeraGen extends Configured implements Tool {
      private static final Log LOG = LogFactory.getLog(TeraGen.class);

      public static enum Counters {CHECKSUM}

      /**
       * An input format that assigns ranges of longs to each mapper.
       */
      static class RangeInputFormat
          extends InputFormat<LongWritable, NullWritable> {

        /**
         * An input split consisting of a range on numbers.
         */
        static class RangeInputSplit extends InputSplit implements Writable {
          long firstRow;
          long rowCount;

          public RangeInputSplit() { }

          public RangeInputSplit(long offset, long length) {
            firstRow = offset;
            rowCount = length;
          }

          public long getLength() throws IOException {
            return 0;
          }

          public String[] getLocations() throws IOException {
            return new String[]{};
          }

          public void readFields(DataInput in) throws IOException {
            firstRow = WritableUtils.readVLong(in);
            rowCount = WritableUtils.readVLong(in);
          }

          public void write(DataOutput out) throws IOException {
            WritableUtils.writeVLong(out, firstRow);
            WritableUtils.writeVLong(out, rowCount);
          }
        }

        /**
         * A record reader that will generate a range of numbers.
         */
        static class RangeRecordReader
            extends RecordReader<LongWritable, NullWritable> {
          long startRow;
          long finishedRows;
          long totalRows;
          LongWritable key = null;

          public RangeRecordReader() {
          }

          public void initialize(InputSplit split, TaskAttemptContext context)
              throws IOException, InterruptedException {
            startRow = ((RangeInputSplit)split).firstRow;
            finishedRows = 0;
            totalRows = ((RangeInputSplit)split).rowCount;
          }

          public void close() throws IOException {
            // NOTHING
          }

          public LongWritable getCurrentKey() {
            return key;
          }

          public NullWritable getCurrentValue() {
            return NullWritable.get();
          }

          public float getProgress() throws IOException {
            return finishedRows / (float) totalRows;
          }

          public boolean nextKeyValue() {
            if (key == null) {
              key = new LongWritable();
            }
            if (finishedRows < totalRows) {
              key.set(startRow + finishedRows);
              finishedRows += 1;
              return true;
            } else {
              return false;
            }
          }
        }

        public RecordReader<LongWritable, NullWritable>
            createRecordReader(InputSplit split, TaskAttemptContext context)
            throws IOException {
          return new RangeRecordReader();
        }

        /**
         * Create the desired number of splits, dividing the number of rows
         * between the mappers.
         */
        public List<InputSplit> getSplits(JobContext job) {
          long totalRows = getNumberOfRows(job);
          int numSplits = job.getConfiguration().getInt(MRJobConfig.NUM_MAPS, 1);
          LOG.info("Generating " + totalRows + " using " + numSplits);
          List<InputSplit> splits = new ArrayList<InputSplit>();
          long currentRow = 0;
          for (int split = 0; split < numSplits; ++split) {
            // split i ends at ceil(totalRows * (i + 1) / numSplits)
            long goal =
                (long) Math.ceil(totalRows * (double)(split + 1) / numSplits);
            splits.add(new RangeInputSplit(currentRow, goal - currentRow));
            currentRow = goal;
          }
          return splits;
        }
      }

      static long getNumberOfRows(JobContext job) {
        return job.getConfiguration().getLong(TeraSortConfigKeys.NUM_ROWS.key(),
            TeraSortConfigKeys.DEFAULT_NUM_ROWS);
      }

      static void setNumberOfRows(Job job, long numRows) {
        job.getConfiguration().setLong(TeraSortConfigKeys.NUM_ROWS.key(), numRows);
      }

      /**
       * The Mapper class that given a row number, will generate the appropriate
       * output line.
       */
      public static class SortGenMapper
          extends Mapper<LongWritable, NullWritable, Text, Text> {

        private Text key = new Text();
        private Text value = new Text();
        private Unsigned16 rand = null;
        private Unsigned16 rowId = null;
        private Unsigned16 checksum = new Unsigned16();
        private Checksum crc32 = new PureJavaCrc32();
        private Unsigned16 total = new Unsigned16();
        private static final Unsigned16 ONE = new Unsigned16(1);
        private byte[] buffer = new byte[TeraInputFormat.KEY_LENGTH +
                                         TeraInputFormat.VALUE_LENGTH];
        private Counter checksumCounter;

        public void map(LongWritable row, NullWritable ignored,
            Context context) throws IOException, InterruptedException {
          if (rand == null) {
            rowId = new Unsigned16(row.get());
            rand = Random16.skipAhead(rowId);
            checksumCounter = context.getCounter(Counters.CHECKSUM);
          }
          Random16.nextRand(rand);
          GenSort.generateRecord(buffer, rand, rowId);
          key.set(buffer, 0, TeraInputFormat.KEY_LENGTH);
          value.set(buffer, TeraInputFormat.KEY_LENGTH,
                    TeraInputFormat.VALUE_LENGTH);
          context.write(key, value);
          crc32.reset();
          crc32.update(buffer, 0,
                       TeraInputFormat.KEY_LENGTH + TeraInputFormat.VALUE_LENGTH);
          checksum.set(crc32.getValue());
          total.add(checksum);
          rowId.add(ONE);
        }

        @Override
        public void cleanup(Context context) {
          if (checksumCounter != null) {
            checksumCounter.increment(total.getLow8());
          }
        }
      }

      private static void usage() throws IOException {
        System.err.println("teragen <num rows> <output dir>");
      }

      /**
       * Parse a number that optionally has a postfix that denotes a base.
       * @param str an string integer with an option base {k,m,b,t}.
       * @return the expanded value
       */
      private static long parseHumanLong(String str) {
        char tail = str.charAt(str.length() - 1);
        long base = 1;
        switch (tail) {
        case 't':
          // 1000L forces long arithmetic; with int literals 1000^4 overflows
          base *= 1000L * 1000 * 1000 * 1000;
          break;
        case 'b':
          base *= 1000 * 1000 * 1000;
          break;
        case 'm':
          base *= 1000 * 1000;
          break;
        case 'k':
          base *= 1000;
          break;
        default:
        }
        if (base != 1) {
          str = str.substring(0, str.length() - 1);
        }
        return Long.parseLong(str) * base;
      }

      /**
       * @param args the cli arguments
       */
      public int run(String[] args)
          throws IOException, InterruptedException, ClassNotFoundException {
        Job job = Job.getInstance(getConf());
        if (args.length != 2) {
          usage();
          return 2;
        }
        setNumberOfRows(job, parseHumanLong(args[0]));
        Path outputDir = new Path(args[1]);
        FileOutputFormat.setOutputPath(job, outputDir);
        job.setJobName("TeraGen");
        job.setJarByClass(TeraGen.class);
        job.setMapperClass(SortGenMapper.class);
        job.setNumReduceTasks(0); // map-only job: no reduce phase
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);
        job.setInputFormatClass(RangeInputFormat.class);
        job.setOutputFormatClass(TeraOutputFormat.class);
        return job.waitForCompletion(true) ? 0 : 1;
      }

      public static void main(String[] args) throws Exception {
        int res = ToolRunner.run(new Configuration(), new TeraGen(), args);
        System.exit(res);
      }
    }
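With mapreduce.job.maps=1000, RangeInputFormat.getSplits() in the TeraGen source divides the requested rows into 1000 contiguous ranges, and each map task generates only its own range. The sketch below copies that arithmetic into a standalone class with no Hadoop dependency, so you can inspect the split boundaries for small inputs. TeraGenSplits is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Standalone copy of the row-range arithmetic in TeraGen's getSplits():
 * split i covers rows [ceil(total*i/n), ceil(total*(i+1)/n)).
 * TeraGenSplits is a hypothetical name, not a Hadoop class.
 */
public class TeraGenSplits {
    /** Returns {firstRow, rowCount} pairs, one per split. */
    static List<long[]> splits(long totalRows, int numSplits) {
        List<long[]> result = new ArrayList<>();
        long currentRow = 0;
        for (int split = 0; split < numSplits; ++split) {
            long goal = (long) Math.ceil(totalRows * (double) (split + 1) / numSplits);
            result.add(new long[] {currentRow, goal - currentRow});
            currentRow = goal; // the next split starts where this one ended
        }
        return result;
    }

    public static void main(String[] args) {
        for (long[] s : splits(10, 3)) {
            System.out.println("firstRow=" + s[0] + " rowCount=" + s[1]);
        }
    }
}
```

For 10 rows and 3 splits, the ranges start at rows 0, 4 and 7 and contain 4, 3 and 3 rows. Because each split starts where the previous one ended, every row is generated exactly once.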

2) Run TeraSort to sort the data

hadoop jar hadoop-mapreduce-examples-2.8.3.jar terasort random-data sorted-data
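TeraSort produces a total (global) order, not just sorted output within each reducer. It works in three steps:

1. It samples the input to choose split points.
2. It sends each key to the reducer whose range contains that key.
3. Each reducer sorts its own range.

Concatenating the reducer outputs in order therefore yields globally sorted data. The in-memory sketch below illustrates only this idea and is not TeraSort's code: it uses int keys instead of 10-byte keys, and plain lists stand in for reducers. TotalOrderSketch, cutPoints, partition and totalOrderSort are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Random;

/**
 * Simplified illustration of total-order sorting with sampled split points.
 * Not TeraSort's implementation; all names here are hypothetical.
 */
public class TotalOrderSketch {
    /** Picks numPartitions - 1 non-decreasing cut points from a sorted sample. */
    static int[] cutPoints(int[] sample, int numPartitions) {
        int[] sorted = sample.clone();
        Arrays.sort(sorted);
        int[] cuts = new int[numPartitions - 1];
        for (int i = 1; i < numPartitions; i++) {
            cuts[i - 1] = sorted[(int) ((long) sorted.length * i / numPartitions)];
        }
        return cuts;
    }

    /** Partition index = number of cut points <= key (upper-bound binary search). */
    static int partition(int key, int[] cuts) {
        int lo = 0, hi = cuts.length;
        while (lo < hi) {
            int mid = (lo + hi) >>> 1;
            if (cuts[mid] <= key) lo = mid + 1; else hi = mid;
        }
        return lo;
    }

    static List<Integer> totalOrderSort(int[] keys, int numPartitions, int sampleSize, long seed) {
        Random rnd = new Random(seed);
        int[] sample = new int[sampleSize];
        for (int i = 0; i < sampleSize; i++) sample[i] = keys[rnd.nextInt(keys.length)];
        int[] cuts = cutPoints(sample, numPartitions);

        // "Map" side: route every key to the partition whose range contains it.
        List<List<Integer>> parts = new ArrayList<>();
        for (int p = 0; p < numPartitions; p++) parts.add(new ArrayList<>());
        for (int k : keys) parts.get(partition(k, cuts)).add(k);

        // "Reduce" side: sort each partition on its own, then concatenate in order.
        List<Integer> out = new ArrayList<>();
        for (List<Integer> part : parts) {
            Collections.sort(part);
            out.addAll(part);
        }
        return out;
    }

    public static void main(String[] args) {
        Random rnd = new Random(42);
        int[] keys = new int[1000];
        for (int i = 0; i < keys.length; i++) keys[i] = rnd.nextInt(1_000_000);
        List<Integer> out = totalOrderSort(keys, 4, 100, 7);
        int[] expected = keys.clone();
        Arrays.sort(expected);
        boolean same = true;
        for (int i = 0; i < expected.length; i++) same &= out.get(i) == expected[i];
        System.out.println("globally sorted: " + same);
    }
}
```

The partition function is monotonic in the key, so every key in partition i is no larger than any key in partition i + 1. This property is what makes the concatenated output globally sorted. A good sample keeps the partitions, and therefore the reducers' workloads, roughly equal in size.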

3) Check the job's progress and running time in the YARN ResourceManager web UI on port 8088

4) Use the TeraValidate class to verify that the sorted-data output is actually sorted

hadoop jar hadoop-mapreduce-examples-2.8.3.jar teravalidate sorted-data report
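teravalidate reads the sorted output and writes its findings to the report directory. The core ordering condition has two parts: keys must never decrease within a file, and each file must begin at or after the last key of the previous file. The sketch below checks both conditions in one pass by comparing keys as unsigned bytes and carrying the last key across file boundaries. It covers ordering only and does not model any checksum handling. SortedCheck is a hypothetical name, not the real TeraValidate code.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;

/**
 * Simplified ordering check over a sequence of output files, each given as
 * a list of keys. SortedCheck is hypothetical, not Hadoop's TeraValidate.
 */
public class SortedCheck {
    /** Returns a description of the first violation, or null if globally sorted. */
    static String findViolation(List<List<byte[]>> files) {
        byte[] prev = null; // last key seen, carried across file boundaries
        for (int f = 0; f < files.size(); f++) {
            for (byte[] key : files.get(f)) {
                // Unsigned byte-wise lexicographic comparison (Java 9+).
                if (prev != null && Arrays.compareUnsigned(prev, key) > 0) {
                    return "misordered key in file " + f;
                }
                prev = key;
            }
        }
        return null;
    }

    static byte[] k(String s) {
        return s.getBytes(StandardCharsets.US_ASCII);
    }

    public static void main(String[] args) {
        List<List<byte[]>> ok = List.of(List.of(k("aa"), k("ab")), List.of(k("ba"), k("zz")));
        List<List<byte[]>> bad = List.of(List.of(k("aa"), k("ca")), List.of(k("ba")));
        System.out.println(findViolation(ok));  // null
        System.out.println(findViolation(bad)); // misordered key in file 1
    }
}
```

Comparing keys as unsigned bytes matters: with signed comparison, a byte such as 0x80 would incorrectly sort before 0x7f.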

(3) Other benchmarks

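MRBench: benchmarks small jobs.

NNBench: load-tests namenode hardware.

Gridmix: a suite of benchmarks that models cluster workloads.

SWIM (Statistical Workload Injector for MapReduce): generates representative test workloads for the system under test.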

1.1.2 User Jobs

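Benchmark with representative jobs: you can use your own jobs as benchmarks, run against a fixed dataset. After upgrading the cluster, run the same jobs on the same dataset to compare the performance of the old and new clusters.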
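One simple way to make the old-versus-new comparison less sensitive to run-to-run noise is to run each job several times on each cluster and compare the median elapsed times. The sketch below assumes you have already collected those times, for example from the 8088 web UI. ClusterComparison is a hypothetical helper, and the numbers in main are placeholders, not measurements.

```java
import java.util.Arrays;

/**
 * Hypothetical helper: compares median elapsed times of the same job on the
 * same dataset, run several times on the old and the new cluster.
 */
public class ClusterComparison {
    static double median(double[] xs) {
        double[] s = xs.clone();
        Arrays.sort(s);
        int n = s.length;
        return n % 2 == 1 ? s[n / 2] : (s[n / 2 - 1] + s[n / 2]) / 2.0;
    }

    /** Positive result: the new cluster needs that many percent less time. */
    static double percentFaster(double[] oldSecs, double[] newSecs) {
        double oldMed = median(oldSecs);
        double newMed = median(newSecs);
        return (oldMed - newMed) / oldMed * 100.0;
    }

    public static void main(String[] args) {
        // Placeholder elapsed times in seconds, for illustration only.
        double[] oldRuns = {620, 600, 640};
        double[] newRuns = {480, 450, 470};
        System.out.printf("median old=%.0fs new=%.0fs, new cluster %.1f%% faster%n",
                median(oldRuns), median(newRuns), percentFaster(oldRuns, newRuns));
    }
}
```

With the placeholder values, the medians are 620 s and 470 s, so the new cluster would be about 24.2% faster. The median is used instead of the mean so that a single unusually slow run does not dominate the comparison.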
