通过oozie job id可以查看流程详细信息,命令如下:

oozie job -info 0012077-180830142722522-oozie-hado-W

流程详细信息如下:

Job ID : 0012077-180830142722522-oozie-hado-W

------------------------------------------------------------------------------------------------------------------------------------

Workflow Name : test_wf

App Path : hdfs://hdfs_name/oozie/test_wf.xml

Status : KILLED

Run : 0

User : hadoop

Group : -

Created : 2018-09-25 02:51 GMT

Started : 2018-09-25 02:51 GMT

Last Modified : 2018-09-25 02:53 GMT

Ended : 2018-09-25 02:53 GMT

CoordAction ID: -

Actions

------------------------------------------------------------------------------------------------------------------------------------

ID Status Ext ID Ext Status Err Code

------------------------------------------------------------------------------------------------------------------------------------

0012077-180830142722522-oozie-hado-W@:start: OK - OK -

------------------------------------------------------------------------------------------------------------------------------------

0012077-180830142722522-oozie-hado-W@test_spark_task ERROR application_1537326594090_5663FAILED/KILLEDJA018

------------------------------------------------------------------------------------------------------------------------------------

0012077-180830142722522-oozie-hado-W@Kill OK - OK E0729

------------------------------------------------------------------------------------------------------------------------------------

失败的任务定义如下

<action name="test_spark_task">

<spark xmlns="uri:oozie:spark-action:0.1">

<job-tracker>${job_tracker}</job-tracker>

<name-node>${name_node}</name-node>

<master>${jobmaster}</master>

<mode>${jobmode}</mode>

<name>${jobname}</name>

<class>${jarclass}</class>

<jar>${jarpath}</jar>

<spark-opts>--executor-memory 4g --executor-cores 2 --num-executors 4 --driver-memory 4g</spark-opts>

</spark>

在yarn上可以看到application_1537326594090_5663对应的application如下

application_1537326594090_5663 hadoop oozie:launcher:T=spark:W=test_wf:A=test_spark_task:ID=0012077-180830142722522-oozie-hado-W Oozie Launcher

查看application_1537326594090_5663日志发现

2018-09-25 10:52:05,237 [main] INFO org.apache.hadoop.yarn.client.api.impl.YarnClientImpl - Submitted application application_1537326594090_5664

yarn上application_1537326594090_5664对应的application如下

application_1537326594090_5664 hadoop TestSparkTask SPARK

即application_1537326594090_5664才是Action对应的spark任务,为什么中间会多一步,类结构和核心代码详见 https://www.cnblogs.com/barneywill/p/9895225.html

简要来说,Oozie执行Action时,即ActionExecutor(最主要的子类是JavaActionExecutor,hive、spark等action都是这个类的子类),JavaActionExecutor首先会提交一个LauncherMapper(map任务)到yarn,其中会执行LauncherMain(具体的action是其子类,比如JavaMain、SparkMain等),spark任务会执行SparkMain,在SparkMain中会调用org.apache.spark.deploy.SparkSubmit来提交任务

如果提交的是spark任务,那么按照上边的方法就可以跟踪到实际任务的applicationId;

如果你提交的hive2任务,实际是用beeline启动,从hive2开始,beeline命令的日志已经简化,不像hive命令可以看到详细的applicationId和进度,这时有两种方法:

1)修改hive代码,使得beeline命令和hive命令一样有详细日志输出

详见:https://www.cnblogs.com/barneywill/p/10185949.html

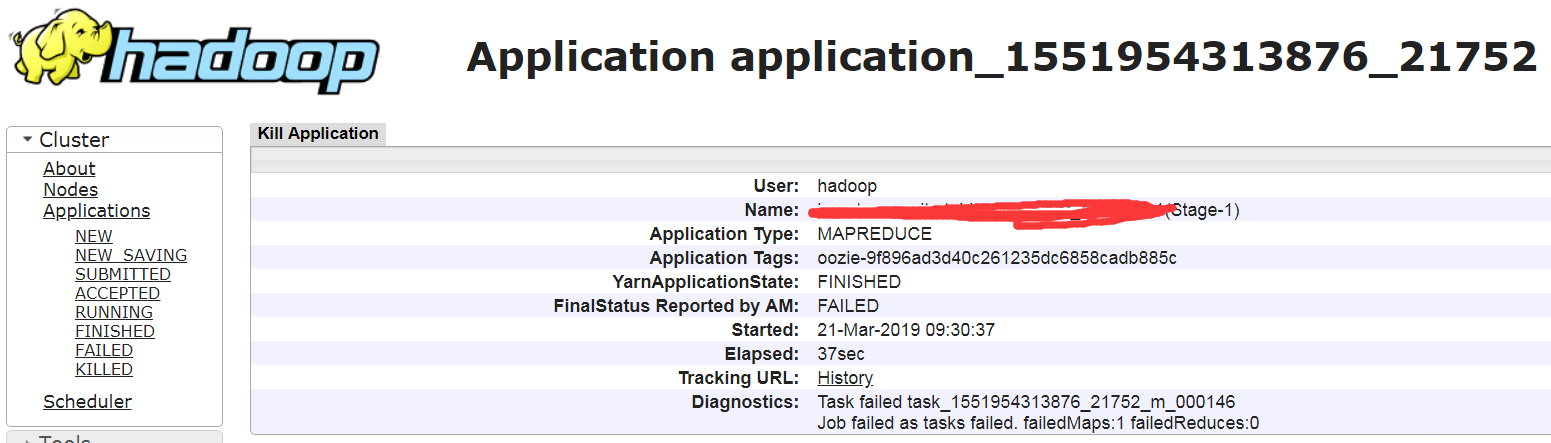

2)根据application tag手工查找任务

oozie在使用beeline提交任务时,会添加一个mapreduce.job.tags参数,比如

--hiveconf

mapreduce.job.tags=oozie-9f896ad3d40c261235dc6858cadb885c

但是这个tag从yarn application命令中查不到,只能手工逐个查找(实际启动的任务会在当前LuancherMapper的applicationId上递增),

然后就可以看到实际启动的applicationId了

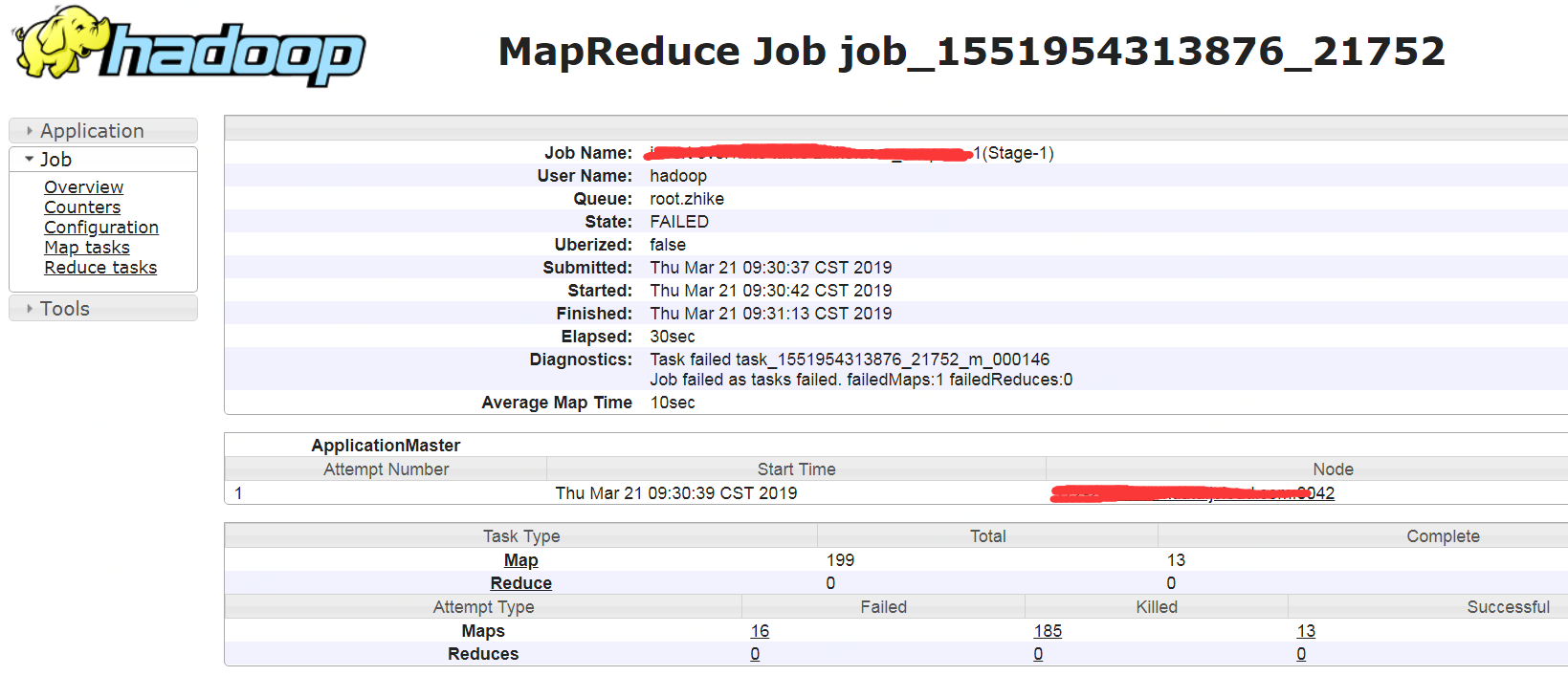

另外还可以从job history server上看到application的详细信息,比如configuration、task等

查看hive任务执行的完整sql详见:https://www.cnblogs.com/barneywill/p/10083731.html