I. Environment preparation

1. Servers

| Hostname | IP | Kernel version / role |
| --- | --- | --- |
| k8s-master01 | 10.0.0.81 | 5.4.156-1.el7.elrepo.x86_64 |
| k8s-master02 | 10.0.0.82 | 5.4.156-1.el7.elrepo.x86_64 |
| k8s-node01 | 10.0.0.84 | 5.4.156-1.el7.elrepo.x86_64 |
| SLB (VIP) | 10.0.0.90 | Load balances kube-apiserver |

2. Required components

# Master nodes:

kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, docker, flannel, DNS

# Worker nodes:

kubelet, kube-proxy, docker, flannel

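3. System settings (all nodes)

# Disable SELinux

# Permanently (takes effect after a reboot)

[root@k8s-master01 ~]# sed -i 's#enforcing#disabled#g' /etc/selinux/config

# Immediately, for the running system (switches SELinux to permissive mode)

[root@k8s-master01 ~]# setenforce 0

# Check: until the next reboot the status stays "enabled", with the current mode "permissive"

[root@k8s-master01 ~]# /usr/sbin/sestatus -v

SELinux status: enabled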

# Disable firewalld

[root@k8s-master01 ~]# systemctl disable --now firewalld

# Disable swap now, and permanently by commenting out the swap entry in /etc/fstab

[root@k8s-master01 ~]# swapoff -a

[root@k8s-master01 ~]# sed -i 's/^.*centos-swap/# &/g' /etc/fstab

[root@k8s-master01 ~]# echo 'KUBELET_EXTRA_ARGS="--fail-swap-on=false"' > /etc/sysconfig/kubelet
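The sed above only matches the default CentOS LVM volume name `centos-swap`. Below is a hedged sketch that comments out any active swap entry instead. It assumes the standard fstab layout, in which the third field is the filesystem type. It then checks /proc/swaps to confirm swap is off. Both function names are hypothetical.

```shell
# Comment out every uncommented fstab line whose 3rd field (fs type) is "swap".
# Assumption: standard whitespace-separated fstab layout.
comment_swap_entries() {
  fstab=$1
  awk '!/^[ \t]*#/ && $3 == "swap" { print "# " $0; next } { print }' \
    "$fstab" > "$fstab.tmp" && mv "$fstab.tmp" "$fstab"
}

# /proc/swaps always has a header line; more than one line means swap is active.
swap_active() {
  swaps=${1:-/proc/swaps}
  [ "$(wc -l < "$swaps")" -gt 1 ]
}
```

For example, run `cp /etc/fstab /etc/fstab.bak && comment_swap_entries /etc/fstab`, then `swap_active || echo "swap is off"`.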

# Replace the yum repositories with the Aliyun CentOS 7 and EPEL mirrors

[root@k8s-master01 ~]# rm -f /etc/yum.repos.d/*

[root@k8s-master01 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

[root@k8s-master01 ~]# curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@k8s-master01 ~]# yum makecache

# Add all nodes to /etc/hosts

[root@k8s-master01 ~]# cat >> /etc/hosts << EOF

10.0.0.81 k8s-master01 m1

10.0.0.82 k8s-master02 m2

10.0.0.84 k8s-node01 n1

EOF

# Set up passwordless SSH from k8s-master01 to all nodes

[root@k8s-master01 ~]# ssh-keygen

[root@k8s-master01 ~]# ssh-copy-id root@10.0.0.81

[root@k8s-master01 ~]# ssh-copy-id root@10.0.0.82

[root@k8s-master01 ~]# ssh-copy-id root@10.0.0.84

# Upgrade the kernel. Packages: https://elrepo.org/linux/kernel/el7/x86_64/RPMS/

# Install the downloaded kernel RPMs

[root@k8s-master01 ~]# yum localinstall -y kernel*

# Make the new kernel the default boot entry (reboot afterwards to run it)

[root@k8s-master01 ~]# grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

# Check the default kernel

[root@k8s-master01 ~]# grubby --default-kernel

/boot/vmlinuz-5.4.155-1.el7.elrepo.x86_64

# Install the IPVS tools and other dependencies

[root@k8s-master01 ~]# yum install -y conntrack-tools ipvsadm ipset libseccomp wget expect vim net-tools ntp ntpdate bash-completion jq iptables conntrack sysstat yum-utils device-mapper-persistent-data lvm2

# Load the IPVS kernel modules. Inside this unquoted heredoc, $? must be escaped like the other variables; otherwise it is expanded (to 0) when the file is written.

[root@k8s-master01 ~]# cat > /etc/sysconfig/modules/ipvs.modules << EOF

#!/bin/bash

ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"

for kernel_module in \${ipvs_modules}; do

    /sbin/modinfo -F filename \${kernel_module} > /dev/null 2>&1

    if [ \$? -eq 0 ]; then

        /sbin/modprobe \${kernel_module}

    fi

done

EOF

[root@k8s-master01 ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs
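A quoted heredoc delimiter (`<<'EOF'`) avoids escaping `$` altogether: it disables expansion, so the file is written verbatim. Below is a minimal sketch using the module list above. The function name `write_ipvs_loader` is hypothetical. It also replaces the separate `$?` test with a direct `if modinfo ...`.

```shell
# Write the IPVS module loader without any shell expansion at write time.
write_ipvs_loader() {
  target=$1
  cat > "$target" <<'EOF'
#!/bin/bash
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
for kernel_module in ${ipvs_modules}; do
    # Load the module only if the running kernel provides it.
    if /sbin/modinfo -F filename "${kernel_module}" > /dev/null 2>&1; then
        /sbin/modprobe "${kernel_module}"
    fi
done
EOF
  chmod 755 "$target"
}
```

Usage: `write_ipvs_loader /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs`.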

# Kernel parameters for Kubernetes (br_netfilter must be loaded for the net.bridge.* keys to exist)

[root@k8s-master01 ~]# modprobe br_netfilter

[root@k8s-master01 ~]# cat > /etc/sysctl.d/k8s.conf <<EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl = 15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.netfilter.nf_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

# Apply. "sysctl -p" only reads /etc/sysctl.conf; "--system" also loads /etc/sysctl.d/*.conf

[root@k8s-master01 ~]# sysctl --system
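`sysctl --system` does print "cannot stat" errors for unknown keys, but they are easy to miss in the output. The sketch below lists the keys in a sysctl drop-in whose /proc/sys path does not exist. That catches typos such as `tcp.keepaliv.probes` as well as keys whose module is not loaded yet. The function name is hypothetical.

```shell
# Print keys from a sysctl .conf file that have no /proc/sys entry.
missing_sysctl_keys() {
  awk -F= '
    /^[ \t]*$/    { next }   # blank line
    /^[ \t]*[#;]/ { next }   # comment
    { key = $1; gsub(/[ \t]/, "", key); if (key != "") print key }
  ' "$1" |
  while IFS= read -r key; do
    # sysctl maps "a.b.c" to /proc/sys/a/b/c.
    path="/proc/sys/$(printf '%s' "$key" | tr . /)"
    [ -e "$path" ] || printf '%s\n' "$key"
  done
}
```

After the fixes above and `modprobe br_netfilter`, `missing_sysctl_keys /etc/sysctl.d/k8s.conf` should ideally print nothing.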

# Set the time zone

[root@k8s-master01 ~]# ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' > /etc/timezone

# Sync the clock once now

[root@k8s-master01 ~]# ntpdate ntp.aliyun.com

# Add a cron job

[root@k8s-master01 ~]# crontab -e

# Sync every two hours at minute 0 ("* */2 * * *" would run every minute during those hours)

0 */2 * * * /usr/sbin/ntpdate ntp.aliyun.com > /dev/null 2>&1
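To avoid editing the crontab interactively, the job can also be installed as a file under /etc/cron.d. In that format each line has a user field after the schedule. This is a sketch only. The file name `ntpdate-sync` and the function name are assumptions.

```shell
# Install the time-sync job as a cron.d drop-in (note the extra "root" user field).
write_ntp_cron() {
  dir=${1:-/etc/cron.d}
  # Minute 0 of every second hour.
  printf '%s\n' '0 */2 * * * root /usr/sbin/ntpdate ntp.aliyun.com > /dev/null 2>&1' \
    > "$dir/ntpdate-sync"
  chmod 644 "$dir/ntpdate-sync"
}
```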

# Install Docker

# Install dependencies

[root@k8s-master01 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

# Add the Aliyun docker-ce repository

[root@k8s-master01 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s-master01 ~]# sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

# List the available Docker versions

[root@k8s-master01 ~]# yum list docker-ce --showduplicates | sort -r

# Install a 19.03 release (19.03.9 here)

[root@k8s-master01 ~]# yum install -y docker-ce-19.03.9
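If you want the newest 19.03 patch release rather than a fixed one, the version can be pulled from the `yum list` output. This sketch assumes yum's usual `name  epoch:version-release  repo` columns. `latest_docker_1903` is a hypothetical helper.

```shell
# Read "yum list docker-ce --showduplicates" output on stdin and print the
# highest 19.03.x version (epoch and release suffix stripped).
latest_docker_1903() {
  awk '$1 ~ /^docker-ce\./ { v = $2; sub(/^[0-9]+:/, "", v); sub(/-.*/, "", v); print v }' |
    grep '^19\.03\.' | sort -V | tail -n 1
}
```

Usage: `ver=$(yum list docker-ce --showduplicates | latest_docker_1903) && yum install -y "docker-ce-${ver}"`.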

[root@k8s-master01 ~]# mkdir -p /etc/docker

[root@k8s-master01 ~]# cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://dp0vxr93.mirror.aliyuncs.com"]

}

EOF

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl enable --now docker

II. Prepare the certificates on the master

1. Install the certificate tools

1) CA

The CA certificate is used to sign the application certificates.

2) Application certificates

Application certificates are issued to, and used by, the individual components.

# Download page: https://github.com/cloudflare/cfssl/releases

[root@k8s-master01 ~]# wget https://github.com/cloudflare/cfssl/releases/download/v1.6.0/cfssl_1.6.0_linux_amd64

[root@k8s-master01 ~]# wget https://github.com/cloudflare/cfssl/releases/download/v1.6.0/cfssljson_1.6.0_linux_amd64

[root@k8s-master01 ~]# chmod +x cfssl*

[root@k8s-master01 ~]# mv cfssl_1.6.0_linux_amd64 /usr/local/bin/cfssl

[root@k8s-master01 ~]# mv cfssljson_1.6.0_linux_amd64 /usr/local/bin/cfssljson

[root@k8s-master01 ~]# cfssl

No command is given.

Usage:

Available commands:

(output omitted)

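2. Generate the etcd certificates

1) Self-signed certificate authority (CA)

# Create the certificate working directories

[root@k8s-master01 ~]# mkdir -p /opt/{etcd,k8s}

[root@k8s-master01 ~]# cd /opt/etcd

# CA signing config (profile "kubernetes", certificates valid for 8760h)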

[root@k8s-master01 /opt/etcd]# cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "8760h"

}

}

}

}

EOF

[root@k8s-master01 /opt/etcd]# cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names":[{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai"

}]

}

EOF

# Generate the CA certificate and key (ca.pem, ca-key.pem)

[root@k8s-master01 /opt/etcd]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2021/11/06 10:06:59 [INFO] generating a new CA key and certificate from CSR

2021/11/06 10:06:59 [INFO] generate received request

2021/11/06 10:06:59 [INFO] received CSR

2021/11/06 10:06:59 [INFO] generating key: rsa-2048

2021/11/06 10:06:59 [INFO] encoded CSR

2021/11/06 10:06:59 [INFO] signed certificate with serial number 613459344032981532294523362615024309659184234885

# CSR for the etcd HTTPS certificate, to be signed by the self-signed CA

[root@k8s-master01 /opt/etcd]# cat > etcd-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"10.0.0.81",

"10.0.0.82",

"10.0.0.84"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai"

}

]

}

EOF

# Note: the hosts field must contain the internal IP of every etcd node; none may be missing. Add a few spare IPs if you plan to scale out later.
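The CSR files in this guide all have the same shape, so they can be generated from arguments. That makes adding reserved IPs less error-prone. A minimal sketch: `make_csr` is hypothetical, the C/ST/L values are the ones used above, and host entries are assumed not to contain quotes.

```shell
# Usage: make_csr CN [host...]  -> prints a cfssl CSR JSON on stdout.
make_csr() {
  cn=$1; shift
  hosts=""
  for h in "$@"; do
    # Append ,"host" (no leading comma for the first entry).
    hosts="$hosts${hosts:+,}\"$h\""
  done
  printf '{"CN":"%s","hosts":[%s],"key":{"algo":"rsa","size":2048},"names":[{"C":"CN","ST":"ShangHai","L":"ShangHai"}]}\n' \
    "$cn" "$hosts"
}
```

For example, `make_csr etcd 127.0.0.1 10.0.0.81 10.0.0.82 10.0.0.84 > etcd-csr.json` produces the same content as the file above, in compact JSON.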

# Generate the etcd certificate

[root@k8s-master01 /opt/etcd]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

2021/11/06 10:16:14 [INFO] generate received request

2021/11/06 10:16:14 [INFO] received CSR

2021/11/06 10:16:14 [INFO] generating key: rsa-2048

2021/11/06 10:16:14 [INFO] encoded CSR

2021/11/06 10:16:14 [INFO] signed certificate with serial number 178581506183035585018851502308100317958063154518

# Distribute the certificates to the master nodes

[root@k8s-master01 /opt/etcd]# for ip in m1 m2

do

ssh root@${ip} "mkdir -pv /etc/etcd/ssl"

scp /opt/etcd/ca*.pem root@${ip}:/etc/etcd/ssl

scp /opt/etcd/etcd*.pem root@${ip}:/etc/etcd/ssl

done

[root@k8s-master01 /opt/etcd]# for ip in m1 m2

do

ssh root@${ip} "ls -l /etc/etcd/ssl";

done

2) Deploy etcd on the master nodes

[root@k8s-master01 /opt/etcd]# mkdir /opt/data

[root@k8s-master01 /opt/etcd]# cd /opt/data

# Download and extract the release

[root@k8s-master01 /opt/data]# wget https://mirrors.huaweicloud.com/etcd/v3.3.24/etcd-v3.3.24-linux-amd64.tar.gz

[root@k8s-master01 /opt/data]# tar xf etcd-v3.3.24-linux-amd64.tar.gz

# Copy the etcd binaries to the master nodes

[root@k8s-master01 /opt/data]# for i in m2 m1

do

scp /opt/data/etcd-v3.3.24-linux-amd64/etcd* root@$i:/usr/local/bin/

done

# Create the etcd systemd unit

# Run this on EVERY master node: ETCD_NAME and INTERNAL_IP come from the local host. The ExecStart continuation lines must not be separated by blank lines.

ETCD_NAME=`hostname`

INTERNAL_IP=`hostname -i`

INITIAL_CLUSTER=k8s-master01=https://10.0.0.81:2380,k8s-master02=https://10.0.0.82:2380

[root@k8s-master01 /opt/data]# cat << EOF | sudo tee /usr/lib/systemd/system/etcd.service
[Unit]
Description=etcd
Documentation=https://github.com/coreos

[Service]
ExecStart=/usr/local/bin/etcd \\
  --name ${ETCD_NAME} \\
  --cert-file=/etc/etcd/ssl/etcd.pem \\
  --key-file=/etc/etcd/ssl/etcd-key.pem \\
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \\
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \\
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-client-cert-auth \\
  --client-cert-auth \\
  --initial-advertise-peer-urls https://${INTERNAL_IP}:2380 \\
  --listen-peer-urls https://${INTERNAL_IP}:2380 \\
  --listen-client-urls https://${INTERNAL_IP}:2379,https://127.0.0.1:2379 \\
  --advertise-client-urls https://${INTERNAL_IP}:2379 \\
  --initial-cluster-token etcd-cluster \\
  --initial-cluster ${INITIAL_CLUSTER} \\
  --initial-cluster-state new \\
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
Type=notify

[Install]
WantedBy=multi-user.target
EOF

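# Parameter reference:

--name: member name

--data-dir: data directory of this member

--listen-peer-urls: address for communication with the other cluster members

--listen-client-urls: addresses on which client requests are served

--initial-advertise-peer-urls: this member's peer URLs, advertised to the rest of the cluster

--advertise-client-urls: this member's client URLs, advertised to the rest of the cluster

--initial-cluster: all members of the initial cluster

--initial-cluster-token: cluster token; must be identical on every member

--initial-cluster-state: initial cluster state (default: new)

--cert-file: path to the TLS certificate for client-server communication

--key-file: path to the TLS key for client-server communication

--peer-cert-file: path to the TLS certificate for peer communication

--peer-key-file: path to the TLS key for peer communication

--trusted-ca-file: CA certificate used to verify client certificates

--peer-trusted-ca-file: CA certificate used to verify peer certificates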
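When members are added, `INITIAL_CLUSTER` has to list each of them as `name=https://ip:2380`. Below is a sketch that builds the value from `name=ip` pairs. `build_initial_cluster` is hypothetical; the https scheme and port 2380 match the unit above.

```shell
# Usage: build_initial_cluster name=ip [name=ip...]
build_initial_cluster() {
  out=""
  for pair in "$@"; do
    # ${pair%%=*} = member name, ${pair#*=} = peer IP
    out="$out${out:+,}${pair%%=*}=https://${pair#*=}:2380"
  done
  printf '%s\n' "$out"
}
```

For example, `INITIAL_CLUSTER=$(build_initial_cluster k8s-master01=10.0.0.81 k8s-master02=10.0.0.82)` reproduces the value used above.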

# Enable and start etcd on both masters (in a new cluster the first member waits until its peer is up)

[root@k8s-master01 /opt/data]# systemctl daemon-reload

[root@k8s-master01 /opt/data]# systemctl enable --now etcd;systemctl status etcd

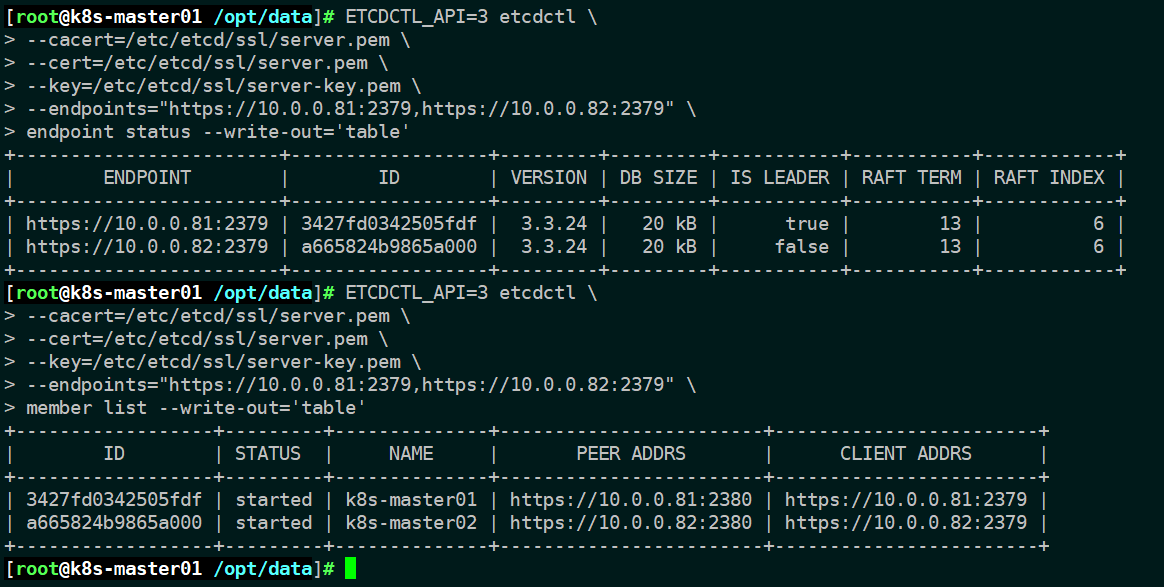

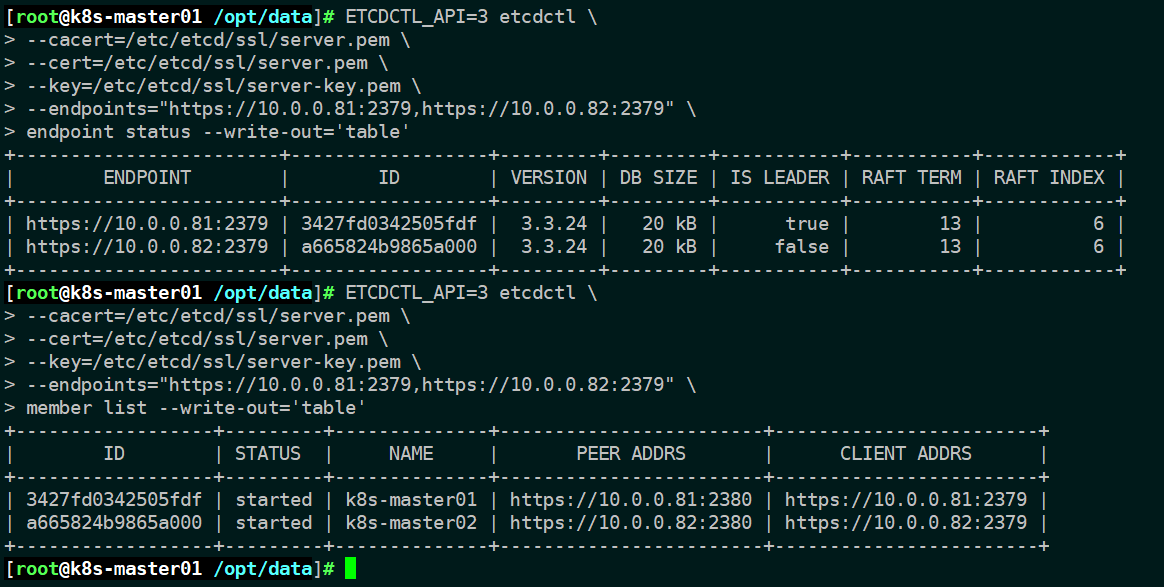

# Verify the cluster (--cacert must be the CA, ca.pem; etcd.pem is the member's own certificate)

ETCDCTL_API=3 etcdctl \
--cacert=/etc/etcd/ssl/ca.pem \
--cert=/etc/etcd/ssl/etcd.pem \
--key=/etc/etcd/ssl/etcd-key.pem \
--endpoints="https://10.0.0.81:2379,https://10.0.0.82:2379" \
endpoint status --write-out='table'

ETCDCTL_API=3 etcdctl \
--cacert=/etc/etcd/ssl/ca.pem \
--cert=/etc/etcd/ssl/etcd.pem \
--key=/etc/etcd/ssl/etcd-key.pem \
--endpoints="https://10.0.0.81:2379,https://10.0.0.82:2379" \
member list --write-out='table'
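The member list above contains two members. etcd uses Raft, which needs a majority (quorum) of members to commit writes. The quorum is floor(n/2)+1, so a cluster of n members survives n minus that many failures. A small sketch of that arithmetic (function names hypothetical):

```shell
# Raft majority for an n-member etcd cluster, and how many members may fail.
etcd_quorum()          { echo $(( $1 / 2 + 1 )); }
etcd_fault_tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }
```

With two members the quorum is 2 and the fault tolerance is 0, so losing either master makes etcd unavailable. Three members tolerate one failure.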

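3. Generate the kube-apiserver certificates

1) Self-signed certificate authority (CA)

# CA signing config for the Kubernetes components (certificates valid for 87600h)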

[root@k8s-master01 /opt/data]# cd ../k8s/

[root@k8s-master01 /opt/k8s]# cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF
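Note the different validity periods. The etcd ca-config.json above signs certificates for 8760h, while this one uses 87600h. cfssl expresses `expiry` in hours, and a tiny conversion makes the difference explicit. The helper name is hypothetical.

```shell
# Convert a cfssl expiry such as "8760h" (whole hours) to days.
expiry_days() {
  h=${1%h}
  echo $(( h / 24 ))
}
```

`expiry_days 8760h` gives 365, so the etcd certificates expire after one year unless renewed; `expiry_days 87600h` gives 3650. The end date of an issued certificate can be read with `openssl x509 -in etcd.pem -noout -enddate`.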

[root@k8s-master01 /opt/k8s]# cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai"

}

]

}

EOF

# Generate the Kubernetes CA certificate and key

[root@k8s-master01 /opt/k8s]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2021/11/06 11:53:38 [INFO] generating a new CA key and certificate from CSR

2021/11/06 11:53:38 [INFO] generate received request

2021/11/06 11:53:38 [INFO] received CSR

2021/11/06 11:53:38 [INFO] generating key: rsa-2048

2021/11/06 11:53:39 [INFO] encoded CSR

2021/11/06 11:53:39 [INFO] signed certificate with serial number 366988660322959184208668209387097490923322703608

2) Issue the kube-apiserver HTTPS certificate with the self-signed CA

[root@k8s-master01 /opt/k8s]# cat > server-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"10.0.0.81",

"10.0.0.82",

"10.0.0.90",

"10.96.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai"

}

]

}

EOF

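# Note: the hosts field must list every master IP and the SLB/VIP address, plus 10.96.0.1 (the first address of --service-cluster-ip-range, used by the in-cluster "kubernetes" Service). None may be missing; add a few spare IPs if you plan to add masters later.

# Generate the certificate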

[root@k8s-master01 /opt/k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

2021/11/06 11:58:55 [INFO] generate received request

2021/11/06 11:58:55 [INFO] received CSR

2021/11/06 11:58:55 [INFO] generating key: rsa-2048

2021/11/06 11:58:55 [INFO] encoded CSR

2021/11/06 11:58:55 [INFO] signed certificate with serial number 605090051078916633653585216288258509934433433424
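A missing SAN only surfaces later as TLS errors, so it can help to check the issued certificate right away. The sketch below uses the openssl CLI. `cert_has_san` is hypothetical and expects the entry exactly as openssl prints it (`IP Address:...` or `DNS:...`).

```shell
# Succeed if the certificate's Subject Alternative Name list contains ENTRY.
# Usage: cert_has_san CERT.pem "IP Address:10.0.0.90"
cert_has_san() {
  cert=$1 entry=$2
  openssl x509 -in "$cert" -noout -text |
    grep -A1 'X509v3 Subject Alternative Name' | tail -n 1 |
    tr ',' '\n' | sed 's/^[[:space:]]*//; s/[[:space:]]*$//' |
    grep -qxF "$entry"
}
```

For example: `cert_has_san server.pem "IP Address:10.0.0.90" && cert_has_san server.pem "DNS:kubernetes.default.svc.cluster.local" && echo ok`.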

4. Issue the kube-controller-manager certificate

# Certificate signing request

[root@k8s-master01 /opt/k8s]# cat > kube-controller-manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"hosts": [

"127.0.0.1",

"10.0.0.81",

"10.0.0.82",

"10.0.0.90"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:kube-controller-manager",

"OU": "System"

}

]

}

EOF

# Note: the hosts field lists all master and SLB IPs

# Generate the certificate

[root@k8s-master01 /opt/k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

2021/11/06 12:06:23 [INFO] generate received request

2021/11/06 12:06:23 [INFO] received CSR

2021/11/06 12:06:23 [INFO] generating key: rsa-2048

2021/11/06 12:06:23 [INFO] encoded CSR

2021/11/06 12:06:23 [INFO] signed certificate with serial number 694330250436490692683340848243604432030903814050

5. Issue the kube-scheduler certificate

# Certificate signing request

[root@k8s-master01 /opt/k8s]# cat > kube-scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"10.0.0.81",

"10.0.0.82",

"10.0.0.90"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:kube-scheduler",

"OU": "System"

}

]

}

EOF

# Note: the hosts field lists all master and SLB IPs

# Generate the certificate

[root@k8s-master01 /opt/k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

2021/11/06 12:07:55 [INFO] generate received request

2021/11/06 12:07:55 [INFO] received CSR

2021/11/06 12:07:55 [INFO] generating key: rsa-2048

2021/11/06 12:07:56 [INFO] encoded CSR

2021/11/06 12:07:56 [INFO] signed certificate with serial number 481690545072639220914991608235750425011097835557

6. Issue the kube-proxy certificate

# Certificate signing request

[root@k8s-master01 /opt/k8s]# cat > kube-proxy-csr.json << EOF

{

"CN":"system:kube-proxy",

"hosts":[],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"system:kube-proxy",

"OU":"System"

}

]

}

EOF

# Generate the certificate (the "hosts" warning is expected for a client certificate)

[root@k8s-master01 /opt/k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2021/11/06 12:09:50 [INFO] generate received request

2021/11/06 12:09:50 [INFO] received CSR

2021/11/06 12:09:50 [INFO] generating key: rsa-2048

2021/11/06 12:09:51 [INFO] encoded CSR

2021/11/06 12:09:51 [INFO] signed certificate with serial number 246343755155369121131238609726259158176230709260

2021/11/06 12:09:51 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

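7. Issue the administrator certificate

# Certificate signing request (O "system:masters" grants cluster-admin rights)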

[root@k8s-master01 /opt/k8s]# cat > admin-csr.json << EOF

{

"CN":"admin",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"system:masters",

"OU":"System"

}

]

}

EOF

# Generate the certificate

[root@k8s-master01 /opt/k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2021/11/06 12:12:03 [INFO] generate received request

2021/11/06 12:12:03 [INFO] received CSR

2021/11/06 12:12:03 [INFO] generating key: rsa-2048

2021/11/06 12:12:03 [INFO] encoded CSR

2021/11/06 12:12:03 [INFO] signed certificate with serial number 132018959260043977966121317527729855821294541387

2021/11/06 12:12:03 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

# Copy the certificates to /etc/kubernetes/ssl and distribute them to the other master

[root@k8s-master01 /opt/k8s]# mkdir -pv /etc/kubernetes/ssl

[root@k8s-master01 /opt/k8s]# cp -p ./{ca*pem,server*pem,kube-controller-manager*pem,kube-scheduler*.pem,kube-proxy*pem,admin*.pem} /etc/kubernetes/ssl

[root@k8s-master01 /opt/k8s]# for i in m2

do

ssh root@$i "mkdir -pv /etc/kubernetes/ssl"

scp /etc/kubernetes/ssl/* root@$i:/etc/kubernetes/ssl

done

III. Deploy the masters

1. Install the Kubernetes binaries

# Download and extract the server binaries

[root@k8s-master01 /opt/data]# wget https://dl.k8s.io/v1.18.8/kubernetes-server-linux-amd64.tar.gz

[root@k8s-master01 /opt/data]# tar xf kubernetes-server-linux-amd64.tar.gz

[root@k8s-master01 /opt/data]# cd kubernetes/server/bin/

# Copy the binaries to the master nodes

[root@k8s-master01 /opt/data/kubernetes/server/bin]# for i in m1 m2

do

scp kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kube-proxy root@$i:/usr/local/bin/

done

2. Create the kubeconfig files

1) kube-controller-manager.kubeconfig

[root@k8s-master01 /opt/data/kubernetes/server/bin]# cd /opt/k8s

# API server address: the VIP 10.0.0.90 on HAProxy port 8443

[root@k8s-master01 /opt/k8s]# export KUBE_APISERVER="https://10.0.0.90:8443"

# Set the cluster parameters

[root@k8s-master01 /opt/k8s]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-controller-manager.kubeconfig

# Set the client credentials

[root@k8s-master01 /opt/k8s]# kubectl config set-credentials "kube-controller-manager" \

--client-certificate=/etc/kubernetes/ssl/kube-controller-manager.pem \

--client-key=/etc/kubernetes/ssl/kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=kube-controller-manager.kubeconfig

# Set the context (links the cluster and the user)

[root@k8s-master01 /opt/k8s]# kubectl config set-context default \

--cluster=kubernetes \

--user="kube-controller-manager" \

--kubeconfig=kube-controller-manager.kubeconfig

# Use this context by default

[root@k8s-master01 /opt/k8s]# kubectl config use-context default --kubeconfig=kube-controller-manager.kubeconfig

2) kube-scheduler.kubeconfig

[root@k8s-master01 /opt/k8s]# export KUBE_APISERVER="https://10.0.0.90:8443"

# Set the cluster parameters

[root@k8s-master01 /opt/k8s]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-scheduler.kubeconfig

# Set the client credentials

[root@k8s-master01 /opt/k8s]# kubectl config set-credentials "kube-scheduler" \

--client-certificate=/etc/kubernetes/ssl/kube-scheduler.pem \

--client-key=/etc/kubernetes/ssl/kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=kube-scheduler.kubeconfig

# Set the context (links the cluster and the user)

[root@k8s-master01 /opt/k8s]# kubectl config set-context default \

--cluster=kubernetes \

--user="kube-scheduler" \

--kubeconfig=kube-scheduler.kubeconfig

# Use this context by default

[root@k8s-master01 /opt/k8s]# kubectl config use-context default --kubeconfig=kube-scheduler.kubeconfig

3) kube-proxy.kubeconfig

[root@k8s-master01 /opt/k8s]# export KUBE_APISERVER="https://10.0.0.90:8443"

# Set the cluster parameters

[root@k8s-master01 /opt/k8s]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

# Set the client credentials

[root@k8s-master01 /opt/k8s]# kubectl config set-credentials "kube-proxy" \

--client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \

--client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

# Set the context (links the cluster and the user)

[root@k8s-master01 /opt/k8s]# kubectl config set-context default \

--cluster=kubernetes \

--user="kube-proxy" \

--kubeconfig=kube-proxy.kubeconfig

# Use this context by default

[root@k8s-master01 /opt/k8s]# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

4) admin.kubeconfig

[root@k8s-master01 /opt/k8s]# export KUBE_APISERVER="https://10.0.0.90:8443"

# Set the cluster parameters

[root@k8s-master01 /opt/k8s]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=admin.kubeconfig

# Set the client credentials

[root@k8s-master01 /opt/k8s]# kubectl config set-credentials "admin" \

--client-certificate=/etc/kubernetes/ssl/admin.pem \

--client-key=/etc/kubernetes/ssl/admin-key.pem \

--embed-certs=true \

--kubeconfig=admin.kubeconfig

# Set the context (links the cluster and the user)

[root@k8s-master01 /opt/k8s]# kubectl config set-context default \

--cluster=kubernetes \

--user="admin" \

--kubeconfig=admin.kubeconfig

# Use this context by default

[root@k8s-master01 /opt/k8s]# kubectl config use-context default --kubeconfig=admin.kubeconfig
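The four set-cluster / set-credentials / set-context / use-context sequences above differ only in the user name, so they can share one function. This sketch assumes the certificate naming used in this guide (`/etc/kubernetes/ssl/<user>.pem` and `<user>-key.pem`). `make_kubeconfig` is hypothetical, and `KUBECTL` can be set to `echo` for a dry run.

```shell
# Create <user>.kubeconfig in the current directory.
make_kubeconfig() {
  user=$1
  out="${user}.kubeconfig"
  kubectl=${KUBECTL:-kubectl}
  server=${KUBE_APISERVER:-https://10.0.0.90:8443}
  "$kubectl" config set-cluster kubernetes \
    --certificate-authority=/etc/kubernetes/ssl/ca.pem \
    --embed-certs=true \
    --server="$server" \
    --kubeconfig="$out" &&
  "$kubectl" config set-credentials "$user" \
    --client-certificate="/etc/kubernetes/ssl/${user}.pem" \
    --client-key="/etc/kubernetes/ssl/${user}-key.pem" \
    --embed-certs=true \
    --kubeconfig="$out" &&
  "$kubectl" config set-context default \
    --cluster=kubernetes \
    --user="$user" \
    --kubeconfig="$out" &&
  "$kubectl" config use-context default --kubeconfig="$out"
}
```

Usage, in /opt/k8s: `for u in kube-controller-manager kube-scheduler kube-proxy admin; do make_kubeconfig "$u"; done`.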

5) TLS bootstrapping

- Generate the token used for TLS bootstrapping

[root@k8s-master01 /opt/k8s]# TLS_BOOTSTRAPPING_TOKEN=`head -c 16 /dev/urandom | od -An -t x | tr -d ' '`

[root@k8s-master01 /opt/k8s]# cat > token.csv << EOF

${TLS_BOOTSTRAPPING_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF
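The token must be identical in token.csv and in kubelet-bootstrap.kubeconfig. The sketch below generates a token the same way as above, checks its format, and reads it back from the file, so it never has to be copied by hand. All function names are hypothetical.

```shell
# 16 random bytes as 32 lowercase hex characters (same pipeline as above).
gen_token() { head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'; }

# Write token.csv in the format kube-apiserver expects for --token-auth-file.
write_token_csv() {
  dir=$1 token=$2
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token" > "$dir/token.csv"
}

# The token is the first comma-separated field.
read_token() { cut -d, -f1 "$1/token.csv"; }
```

For example, `kubectl config set-credentials kubelet-bootstrap --token="$(read_token /opt/k8s)" --kubeconfig=kubelet-bootstrap.kubeconfig`.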

- Create the TLS bootstrapping kubeconfig

[root@k8s-master01 /opt/k8s]# export KUBE_APISERVER="https://10.0.0.90:8443"

# Set the cluster parameters

[root@k8s-master01 /opt/k8s]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kubelet-bootstrap.kubeconfig

# Check the token

[root@k8s-master01 /opt/k8s]# cat token.csv

188196ad998dba1830f4214c3a08294d,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

# Set the client credentials. The token must be the one stored in token.csv, so read it from the file instead of pasting it

[root@k8s-master01 /opt/k8s]# kubectl config set-credentials "kubelet-bootstrap" \
--token=$(cut -d, -f1 token.csv) \
--kubeconfig=kubelet-bootstrap.kubeconfig

# Set the context (links the cluster and the user)

[root@k8s-master01 /opt/k8s]# kubectl config set-context default \

--cluster=kubernetes \

--user="kubelet-bootstrap" \

--kubeconfig=kubelet-bootstrap.kubeconfig

# Use this context by default

[root@k8s-master01 /opt/k8s]# kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig

6) Distribute token.csv and the kubeconfig files to the masters

[root@k8s-master01 /opt/k8s]# for i in m1 m2

do

ssh root@$i "mkdir -p /etc/kubernetes/cfg";

scp token.csv kube-scheduler.kubeconfig kube-controller-manager.kubeconfig admin.kubeconfig kube-proxy.kubeconfig kubelet-bootstrap.kubeconfig root@$i:/etc/kubernetes/cfg;

done

3. High availability for kube-apiserver (HAProxy + Keepalived)

[root@k8s-master01 /opt/k8s]# yum install -y keepalived haproxy

# Configure HAProxy: port 8443 balances across the kube-apiservers on port 6443

[root@k8s-master01 /opt/k8s]# cat > /etc/haproxy/haproxy.cfg <<EOF

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

listen stats

bind *:8006

mode http

stats enable

stats hide-version

stats uri /stats

stats refresh 30s

stats realm Haproxy\ Statistics

stats auth admin:admin

frontend k8s-master

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 10.0.0.81:6443 check inter 2000 fall 2 rise 2 weight 100

server k8s-master02 10.0.0.82:6443 check inter 2000 fall 2 rise 2 weight 100

EOF

# Configure Keepalived (holds the VIP 10.0.0.90)

[root@k8s-master01 /opt/k8s]# mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf_bak

[root@k8s-master01 /opt/k8s]# KUBE_APISERVER_IP=`hostname -i`

[root@k8s-master01 /opt/k8s]# cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER

interface eth0

mcast_src_ip ${KUBE_APISERVER_IP}

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.0.0.90

}

}

EOF

# Start

[root@k8s-master01 /opt/k8s]# systemctl enable --now keepalived.service haproxy.service

# Distribute to the other master

[root@k8s-master01 /opt/k8s]# for i in m2

do

ssh root@$i "yum install -y keepalived haproxy"

scp /etc/haproxy/haproxy.cfg $i:/etc/haproxy/

ssh root@$i "mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf_bak"

scp /etc/keepalived/keepalived.conf $i:/etc/keepalived/

done

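# On k8s-master02: switch to BACKUP, use the local source IP and a lower priority, then start

[root@k8s-master02 ~]# sed -i 's/state MASTER/state BACKUP/; s/mcast_src_ip 10.0.0.81/mcast_src_ip 10.0.0.82/; s/priority 100/priority 90/' /etc/keepalived/keepalived.conf

[root@k8s-master02 ~]# systemctl enable --now keepalived.service haproxy.service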
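Instead of copying keepalived.conf and patching it with sed, each master can render its own file from a few parameters. This sketch reproduces the configuration above. `render_keepalived` and its argument order are assumptions, and the interface defaults to eth0 as in the original.

```shell
# Usage: render_keepalived STATE SRC_IP PRIORITY VIP [INTERFACE]
render_keepalived() {
  state=$1 src_ip=$2 priority=$3 vip=$4 iface=${5:-eth0}
  cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    mcast_src_ip ${src_ip}
    virtual_router_id 51
    priority ${priority}
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        ${vip}
    }
}
EOF
}
```

On k8s-master02, for example: `render_keepalived BACKUP 10.0.0.82 90 10.0.0.90 > /etc/keepalived/keepalived.conf`.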

4. Deploy kube-apiserver

[root@k8s-master01 /etc/kubernetes/cfg]# KUBE_APISERVER_IP=`hostname -i`

# Create the kube-apiserver configuration file

[root@k8s-master01 /etc/kubernetes/cfg]# cat > kube-apiserver.conf << EOF

KUBE_APISERVER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/var/log/kubernetes \\

--advertise-address=${KUBE_APISERVER_IP} \\

--default-not-ready-toleration-seconds=360 \\

--default-unreachable-toleration-seconds=360 \\

--max-mutating-requests-inflight=2000 \\

--max-requests-inflight=4000 \\

--default-watch-cache-size=200 \\

--delete-collection-workers=2 \\

--bind-address=0.0.0.0 \\

--secure-port=6443 \\

--allow-privileged=true \\

--service-cluster-ip-range=10.96.0.0/16 \\

--service-node-port-range=30000-60000 \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\

--authorization-mode=RBAC,Node \\

--enable-bootstrap-token-auth=true \\

--token-auth-file=/etc/kubernetes/cfg/token.csv \\

--kubelet-client-certificate=/etc/kubernetes/ssl/server.pem \\

--kubelet-client-key=/etc/kubernetes/ssl/server-key.pem \\

--tls-cert-file=/etc/kubernetes/ssl/server.pem \\

--tls-private-key-file=/etc/kubernetes/ssl/server-key.pem \\

--client-ca-file=/etc/kubernetes/ssl/ca.pem \\

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/var/log/kubernetes/k8s-audit.log \\

--etcd-servers=https://10.0.0.81:2379,https://10.0.0.82:2379 \\

--etcd-cafile=/etc/etcd/ssl/ca.pem \\

--etcd-certfile=/etc/etcd/ssl/etcd.pem \\

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem"

EOF

# systemd unit for kube-apiserver

[root@k8s-master01 /etc/kubernetes/cfg]# cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-apiserver.conf

ExecStart=/usr/local/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

RestartSec=10

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

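# Create the log directory (the process exits if --log-dir does not exist), then start kube-apiserver and enable it at boot

mkdir -p /var/log/kubernetes

systemctl daemon-reload

systemctl enable --now kube-apiserver.service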

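# Copy the configuration to k8s-master02. token.csv stays the same; only --advertise-address must be changed to that node's own IP (--bind-address is 0.0.0.0, and clients reach the apiservers through the VIP 10.0.0.90:8443)

# Distribute to the other master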

[root@k8s-master01 /etc/kubernetes/cfg]# for i in m2

do

scp -r /etc/kubernetes/cfg/kube-apiserver.conf $i:/etc/kubernetes/cfg

scp -r /usr/lib/systemd/system/kube-apiserver.service $i:/usr/lib/systemd/system/

done

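# On k8s-master02

[root@k8s-master02 ~]# sed -i 's/--advertise-address=10.0.0.81/--advertise-address=10.0.0.82/' /etc/kubernetes/cfg/kube-apiserver.conf

[root@k8s-master02 ~]# mkdir -p /var/log/kubernetes; systemctl daemon-reload; systemctl enable --now kube-apiserver.service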

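# Bind the low-privilege kubelet-bootstrap user to the system:node-bootstrapper role so kubelets can request certificates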

kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

--user=kubelet-bootstrap

# Verify

1. Open in a browser:

https://10.0.0.81:6443/version

https://10.0.0.82:6443/version

https://10.0.0.90:8443/version

# Each address should return the Kubernetes version information; the VIP is checked on port 8443 so the request goes through HAProxy

2. From the shell:

[root@k8s-master01 /opt/k8s]# curl -k https://10.0.0.90:8443/version

[root@k8s-master01 /opt/k8s]# curl -k https://10.0.0.81:6443/version

[root@k8s-master01 /opt/k8s]# curl -k https://10.0.0.82:6443/version

{

"major": "1",

"minor": "18",

"gitVersion": "v1.18.8",

"gitCommit": "9f2892aab98fe339f3bd70e3c470144299398ace",

"gitTreeState": "clean",

"buildDate": "2020-08-13T16:04:18Z",

"goVersion": "go1.13.15",

"compiler": "gc",

"platform": "linux/amd64"

}
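To compare all endpoints in one go without jq, the `gitVersion` field can be cut out of each response. This sketch relies on the one-field-per-line JSON shown above. `git_version` is a hypothetical helper.

```shell
# Print the value of "gitVersion" from /version JSON read on stdin.
git_version() {
  sed -n 's/^[[:space:]]*"gitVersion":[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

For example: `for ep in 10.0.0.81:6443 10.0.0.82:6443 10.0.0.90:8443; do echo "$ep $(curl -sk "https://$ep/version" | git_version)"; done`. Every line should show the same version.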

5. Deploy kube-controller-manager

# Configuration file

[root@k8s-master01 ~]# cat > /etc/kubernetes/cfg/kube-controller-manager.conf << EOF

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/var/log/kubernetes \\

--leader-elect=true \\

--cluster-name=kubernetes \\

--bind-address=127.0.0.1 \\

--allocate-node-cidrs=true \\

--cluster-cidr=10.244.0.0/12 \\

--service-cluster-ip-range=10.96.0.0/16 \\

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \\

--root-ca-file=/etc/kubernetes/ssl/ca.pem \\

--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \\

--kubeconfig=/etc/kubernetes/cfg/kube-controller-manager.kubeconfig \\

--tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \\

--tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \\

--experimental-cluster-signing-duration=87600h0m0s \\

--controllers=*,bootstrapsigner,tokencleaner \\

--use-service-account-credentials=true \\

--node-monitor-grace-period=10s \\

--horizontal-pod-autoscaler-use-rest-clients=true"

EOF

# Register the systemd service

[root@k8s-master01 ~]# cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/usr/local/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

# Start the service

[root@k8s-master01 ~]# systemctl daemon-reload; systemctl enable --now kube-controller-manager.service

# Copy to the other master and start it there

[root@k8s-master01 ~]# for i in m2

do

scp /etc/kubernetes/cfg/kube-controller-manager.conf $i:/etc/kubernetes/cfg/

scp /usr/lib/systemd/system/kube-controller-manager.service $i:/usr/lib/systemd/system/

ssh root@$i "systemctl daemon-reload; systemctl enable --now kube-controller-manager.service"

done

6. Deploy kube-scheduler

# Configuration file

[root@k8s-master01 ~]# cat > /etc/kubernetes/cfg/kube-scheduler.conf << EOF

KUBE_SCHEDULER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/var/log/kubernetes \\

--kubeconfig=/etc/kubernetes/cfg/kube-scheduler.kubeconfig \\

--leader-elect=true \\

--master=http://127.0.0.1:8080 \\

--bind-address=127.0.0.1 "

EOF

# Register the systemd service

[root@k8s-master01 ~]# cat > /usr/lib/systemd/system/kube-scheduler.service << EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-scheduler.conf

ExecStart=/usr/local/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

# Start

[root@k8s-master01 ~]# systemctl daemon-reload; systemctl enable --now kube-scheduler.service

# Copy to the other master and start it there

[root@k8s-master01 ~]# for i in m2

do

scp /etc/kubernetes/cfg/kube-scheduler.conf $i:/etc/kubernetes/cfg/

scp /usr/lib/systemd/system/kube-scheduler.service $i:/usr/lib/systemd/system/

ssh root@$i "systemctl daemon-reload; systemctl enable --now kube-scheduler.service"

done
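The distribution loops for kube-controller-manager and kube-scheduler follow the same pattern: copy the .conf and the unit file, then reload and enable the service remotely. Below is a sketch of a shared helper. The name `push_component` is hypothetical; `SCP`/`SSH` can be set to `echo` for a dry run.

```shell
# Usage: push_component NAME HOST...
push_component() {
  name=$1; shift
  scp=${SCP:-scp} ssh=${SSH:-ssh}
  for host in "$@"; do
    "$scp" "/etc/kubernetes/cfg/${name}.conf" "root@${host}:/etc/kubernetes/cfg/" &&
    "$scp" "/usr/lib/systemd/system/${name}.service" "root@${host}:/usr/lib/systemd/system/" &&
    "$ssh" "root@${host}" "systemctl daemon-reload && systemctl enable --now ${name}.service" ||
      return 1
  done
}
```

For example: `push_component kube-controller-manager m2 && push_component kube-scheduler m2`.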

7. Deploy kubelet

# Configuration files (KUBE_HOSTNAME and KUBE_HOSTNAME_IP are taken from the local host)

KUBE_HOSTNAME=`hostname`

KUBE_HOSTNAME_IP=`hostname -i`

[root@k8s-master01 ~]# cat > /etc/kubernetes/cfg/kubelet.conf << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/var/log/kubernetes \\
--hostname-override=${KUBE_HOSTNAME} \\
--container-runtime=docker \\
--kubeconfig=/etc/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/etc/kubernetes/cfg/kubelet-bootstrap.kubeconfig \\
--config=/etc/kubernetes/cfg/kubelet-config.yml \\
--cert-dir=/etc/kubernetes/ssl \\
--image-pull-progress-deadline=15m \\
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/k8sos/pause:3.2"
EOF

[root@k8s-master01 ~]# cat > /etc/kubernetes/cfg/kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: ${KUBE_HOSTNAME_IP}
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.96.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
EOF

#Register the systemd service

[root@k8s-master01 ~]# cat > /usr/lib/systemd/system/kubelet.service << EOF

[Unit]

Description=Kubernetes Kubelet

After=docker.service

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kubelet.conf

ExecStart=/usr/local/bin/kubelet \$KUBELET_OPTS

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

#Start the service

[root@k8s-master01 ~]# systemctl daemon-reload;systemctl enable --now kubelet; systemctl status kubelet.service

#Copy the files to the other master node

[root@k8s-master01 ~]# for i in m2

do

scp /etc/kubernetes/cfg/kubelet.conf $i:/etc/kubernetes/cfg/

scp /etc/kubernetes/cfg/kubelet-config.yml $i:/etc/kubernetes/cfg/

scp /usr/lib/systemd/system/kubelet.service $i:/usr/lib/systemd/system/

done

#Adapt the copied files on k8s-master02 (run these commands on k8s-master02)

sed -i '4s/k8s-master01/k8s-master02/g' /etc/kubernetes/cfg/kubelet.conf

sed -i '3s/10.0.0.81/10.0.0.82/g' /etc/kubernetes/cfg/kubelet-config.yml

systemctl daemon-reload;systemctl enable --now kubelet;systemctl status kubelet.service
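The `4s`/`3s` line addresses above only work while the copied files keep exactly the line layout generated on k8s-master01. A sketch of a layout-independent alternative, assuming GNU sed (for `\b` word boundaries); `retarget_config` is a hypothetical helper that can be run on master02, or later on a worker node, against each copied file:

```shell
# Sketch: retarget a copied kubelet/kube-proxy config to another host without line numbers.
# retarget_config is a hypothetical helper; it requires GNU sed for \b word boundaries.
# Usage: retarget_config FILE OLD_NAME NEW_NAME OLD_IP NEW_IP
retarget_config() {
  local file=$1 old_name=$2 new_name=$3 old_ip=$4 new_ip=$5 old_ip_re
  # Escape the dots so the old IP is matched literally, not as "any character".
  old_ip_re=$(printf '%s' "$old_ip" | sed 's/\./\\./g')
  # \b stops 10.0.0.81 from matching inside 10.0.0.810 (and likewise for hostnames).
  sed -i \
    -e "s/\\b${old_name}\\b/${new_name}/g" \
    -e "s/\\b${old_ip_re}\\b/${new_ip}/g" \
    "$file"
}
```

For example, on k8s-master02: `retarget_config /etc/kubernetes/cfg/kubelet.conf k8s-master01 k8s-master02 10.0.0.81 10.0.0.82`, then the same call for kubelet-config.yml.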

8. Deploy kube-proxy

#Configuration files

[root@k8s-master01 ~]# cat > /etc/kubernetes/cfg/kube-proxy.conf << EOF
KUBE_PROXY_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/var/log/kubernetes \\
--config=/etc/kubernetes/cfg/kube-proxy-config.yml"
EOF

KUBE_HOSTNAME=`hostname`

KUBE_HOSTNAME_IP=`hostname -i`

[root@k8s-master01 ~]# cat > /etc/kubernetes/cfg/kube-proxy-config.yml << EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: ${KUBE_HOSTNAME_IP}
healthzBindAddress: ${KUBE_HOSTNAME_IP}:10256
metricsBindAddress: ${KUBE_HOSTNAME_IP}:10249
clientConnection:
  burst: 200
  kubeconfig: /etc/kubernetes/cfg/kube-proxy.kubeconfig
  qps: 100
hostnameOverride: ${KUBE_HOSTNAME}
clusterCIDR: 10.96.0.0/16
enableProfiling: true
mode: "ipvs"
iptables:
  masqueradeAll: false
ipvs:
  scheduler: rr
  excludeCIDRs: []
EOF

#Register the systemd service

[root@k8s-master01 ~]# cat > /usr/lib/systemd/system/kube-proxy.service << EOF

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-proxy.conf

ExecStart=/usr/local/bin/kube-proxy \$KUBE_PROXY_OPTS

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

#Start the service

[root@k8s-master01 ~]# systemctl daemon-reload; systemctl enable --now kube-proxy; systemctl status kube-proxy

#Copy the files to the other master node

[root@k8s-master01 ~]# for i in m2

do

scp /etc/kubernetes/cfg/kube-proxy.conf $i:/etc/kubernetes/cfg

scp /etc/kubernetes/cfg/kube-proxy-config.yml $i:/etc/kubernetes/cfg

scp /usr/lib/systemd/system/kube-proxy.service $i:/usr/lib/systemd/system/

done

#Adapt the copied file on k8s-master02 (run these commands on k8s-master02)

sed -i 's/10.0.0.81/10.0.0.82/g;s/k8s-master01/k8s-master02/g' /etc/kubernetes/cfg/kube-proxy-config.yml

systemctl daemon-reload; systemctl enable --now kube-proxy; systemctl status kube-proxy

9. Approve the nodes joining the cluster

[root@k8s-master01 /opt/k8s]# kubectl certificate approve `kubectl get csr | grep "Pending" | awk '{print $1}'`
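`grep "Pending"` matches the word anywhere on a line, and `kubectl certificate approve` fails when the backquoted list comes back empty. A slightly stricter sketch, assuming that `kubectl get csr --no-headers` prints the CONDITION column last (as it does in v1.18 output); `pending_csrs` and `approve_pending_csrs` are hypothetical helper names:

```shell
# Sketch: approve only CSRs whose last column (CONDITION) is exactly "Pending".
# pending_csrs / approve_pending_csrs are hypothetical helpers; the column layout is
# an assumption based on `kubectl get csr` output in v1.18.
pending_csrs() {
  # Reads `kubectl get csr --no-headers` output on stdin, prints matching CSR names.
  awk '$NF == "Pending" { print $1 }'
}

approve_pending_csrs() {
  # xargs -r (GNU) skips the approve call entirely when nothing is pending.
  kubectl get csr --no-headers | pending_csrs | xargs -r kubectl certificate approve
}
```

Because nothing runs when no CSR is pending, `approve_pending_csrs` can also be reused when the worker node joins.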

#Label both masters with the master role

kubectl label nodes k8s-master01 node-role.kubernetes.io/master=k8s-master01

kubectl label nodes k8s-master02 node-role.kubernetes.io/master=k8s-master02

#Check

[root@k8s-master01 /etc/kubernetes/cfg]# kubectl get nodes

NAME           STATUS   ROLES    AGE     VERSION
k8s-master01   Ready    master   7m4s    v1.18.8
k8s-master02   Ready    master   5m39s   v1.18.8

#Master nodes normally should not run ordinary Pods, so taint them to keep the scheduler from placing Pods there

kubectl taint nodes k8s-master01 node-role.kubernetes.io/master=k8s-master01:NoSchedule --overwrite

kubectl taint nodes k8s-master02 node-role.kubernetes.io/master=k8s-master02:NoSchedule --overwrite

10. Deploy the flannel network plugin

#Download the network plugin

[root@k8s-master01 /opt/data]# wget https://github.com/coreos/flannel/releases/download/v0.13.1-rc1/flannel-v0.13.1-rc1-linux-amd64.tar.gz

[root@k8s-master01 /opt/data]# tar xf flannel-v0.13.1-rc1-linux-amd64.tar.gz

[root@k8s-master01 /opt/data]# for i in m1 m2

do

scp flanneld mk-docker-opts.sh root@$i:/usr/local/bin

done

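#Write flannel's network config into etcd (run once, on a master node)
#flannel v0.13 reads this key through the etcd v2 API, so etcdctl must use v2 as well; with etcd 3.4 or later the etcd server also has to run with --enable-v2
ETCDCTL_API=2 etcdctl \
--ca-file=/etc/etcd/ssl/ca.pem \
--cert-file=/etc/etcd/ssl/etcd.pem \
--key-file=/etc/etcd/ssl/etcd-key.pem \
--endpoints="https://10.0.0.81:2379,https://10.0.0.82:2379" \
mk /coreos.com/network/config '{"Network":"10.244.0.0/12", "SubnetLen": 21, "Backend": {"Type": "vxlan", "DirectRouting": true}}'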

#Read the key back to check it
ETCDCTL_API=2 etcdctl \
--ca-file=/etc/etcd/ssl/ca.pem \
--cert-file=/etc/etcd/ssl/etcd.pem \
--key-file=/etc/etcd/ssl/etcd-key.pem \
--endpoints="https://10.0.0.81:2379,https://10.0.0.82:2379" \
get /coreos.com/network/config
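The config written above asks flannel to lease each host a /21 (`SubnetLen: 21`) out of a /12 `Network`. That allows at most 2^(21-12) = 512 host subnets of 2^(32-21) = 2048 addresses each; flannel's own SubnetMin/SubnetMax defaults may reserve some of those subnets. Also worth noticing: 10.244.0.0 does not sit on a /12 boundary, and the /12 block that contains it starts at 10.240.0.0. A small bash sketch of that arithmetic (`ip_to_int`, `int_to_ip`, `network_base` and `flannel_capacity` are hypothetical helper names):

```shell
# Sketch: the subnet arithmetic behind {"Network":"10.244.0.0/12","SubnetLen":21}.
# All helpers are hypothetical and use plain bash (64-bit) arithmetic.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# network_base IP PREFIX -> first address of the PREFIX-sized block containing IP
network_base() {
  local n mask
  n=$(ip_to_int "$1")
  mask=$(( (0xFFFFFFFF << (32 - $2)) & 0xFFFFFFFF ))
  int_to_ip $(( n & mask ))
}

# flannel_capacity NETWORK_PREFIX SUBNET_LEN -> "<host subnets> <addresses per subnet>"
flannel_capacity() {
  echo "$(( 1 << ($2 - $1) )) $(( 1 << (32 - $2) ))"
}
```

For this cluster, `flannel_capacity 12 21` prints `512 2048` and `network_base 10.244.0.0 12` prints `10.240.0.0`.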

#Register the flanneld systemd service

[root@k8s-master01 /opt/data]# cat > /usr/lib/systemd/system/flanneld.service << EOF

[Unit]

Description=Flanneld address

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

Before=docker.service

[Service]

Type=notify

ExecStart=/usr/local/bin/flanneld \\
-etcd-cafile=/etc/etcd/ssl/ca.pem \\
-etcd-certfile=/etc/etcd/ssl/etcd.pem \\
-etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \\
-etcd-endpoints=https://10.0.0.81:2379,https://10.0.0.82:2379 \\
-etcd-prefix=/coreos.com/network \\
-ip-masq

ExecStartPost=/usr/local/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=always

RestartSec=5

StartLimitInterval=0

[Install]

WantedBy=multi-user.target

RequiredBy=docker.service

EOF

#Distribute the flanneld unit file

[root@k8s-master01 /opt/data]# for i in m2

do

scp /usr/lib/systemd/system/flanneld.service root@$i:/usr/lib/systemd/system

done

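#Change docker.service so dockerd starts with the network options derived from flannel's subnet ($DOCKER_NETWORK_OPTIONS)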

sed -i '/ExecStart/s/\(.*\)/#\1/' /usr/lib/systemd/system/docker.service

sed -i '/ExecReload/a ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock' /usr/lib/systemd/system/docker.service

sed -i '/ExecReload/a EnvironmentFile=-/run/flannel/subnet.env' /usr/lib/systemd/system/docker.service
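The three `sed` edits above are not idempotent: running them a second time comments out the `ExecStart` they added, then appends a new `ExecStart` plus a second `EnvironmentFile` line. A guarded sketch of the same edits, assuming GNU sed; `patch_docker_unit` is a hypothetical helper that also keeps a `.bak` copy of the original unit:

```shell
# Sketch: apply the same docker.service edits, but only once.
# patch_docker_unit is a hypothetical helper; requires GNU sed ("a text" one-liners).
patch_docker_unit() {
  local unit=${1:-/usr/lib/systemd/system/docker.service}
  [ -f "$unit" ] || { echo "no such unit file: $unit" >&2; return 1; }
  if grep -q 'DOCKER_NETWORK_OPTIONS' "$unit"; then
    echo "already patched: $unit" >&2
    return 0
  fi
  cp -p "$unit" "$unit.bak"                       # keep the packaged unit for rollback
  sed -i '/ExecStart/s/\(.*\)/#\1/' "$unit"       # comment out the original ExecStart
  sed -i '/ExecReload/a ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock' "$unit"
  sed -i '/ExecReload/a EnvironmentFile=-/run/flannel/subnet.env' "$unit"
}
```

On k8s-master01, `patch_docker_unit` (no argument) edits /usr/lib/systemd/system/docker.service; run `systemctl daemon-reload` afterwards as before.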

#Distribute the docker unit file

[root@k8s-master01 ~]# for ip in m2

do

scp /usr/lib/systemd/system/docker.service root@${ip}:/usr/lib/systemd/system

done

#Enable flanneld at boot, start it, and restart Docker on each master

[root@k8s-master01 ~]# for i in m1 m2

do

echo ">>> $i"

ssh root@$i "systemctl daemon-reload"

ssh root@$i "systemctl enable --now flanneld"

ssh root@$i "systemctl restart docker"

done

11. Deploy CoreDNS

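#Upload deployment-master.zip (an archive of the coredns/deployment repository on GitHub) with rz, then unzip it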

[root@k8s-master01 /opt/data]# rz deployment-master.zip

[root@k8s-master01 /opt/data]# unzip deployment-master.zip

[root@k8s-master01 /opt/data]# cd /opt/data/deployment-master/kubernetes/

#Grant the cluster-admin role to the user kubernetes (binding name: cluster-system-anonymous)

[root@k8s-master01 /opt/data/deployment-master/kubernetes]# kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=kubernetes

#Point the CoreDNS image at the Aliyun mirror

[root@k8s-master01 /opt/data/deployment-master/kubernetes]# sed -i 's#coredns/coredns#registry.cn-hangzhou.aliyuncs.com/k8sos/coredns#g' coredns.yaml.sed

#Deploy, using 10.96.0.2 (the clusterDNS value in kubelet-config.yml) as the DNS Service IP

[root@k8s-master01 /opt/data/deployment-master/kubernetes]# ./deploy.sh -i 10.96.0.2 -s | kubectl apply -f -


#Test cluster DNS

[root@k8s-master01 ~]# kubectl run test -it --rm --image=busybox:1.28.3

If you don't see a command prompt, try pressing enter.

/ # nslookup kubernetes

Server: 10.96.0.2

Address 1: 10.96.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

IV. Deploy the worker node

1. Distribute the binaries

[root@k8s-master01 /opt/data]# cd /opt/data/kubernetes/server/bin

[root@k8s-master01 /opt/data/kubernetes/server/bin]# for i in n1

do

scp kubelet kube-proxy kubectl root@$i:/usr/local/bin/

done

2. Distribute certificates

[root@k8s-master01 ~]# for i in n1

do

ssh root@$i "mkdir -pv /etc/kubernetes/ssl"

scp -pr /etc/kubernetes/ssl/{ca*.pem,admin*pem,kube-proxy*pem} root@$i:/etc/kubernetes/ssl

done

3. Deploy the kubelet service

[root@k8s-master01 ~]# for ip in n1

do

ssh root@${ip} "mkdir -pv /var/log/kubernetes"

ssh root@${ip} "mkdir -pv /etc/kubernetes/cfg/"

scp /etc/kubernetes/cfg/{kubelet-config.yml,kubelet.conf,kubelet-bootstrap.kubeconfig} root@${ip}:/etc/kubernetes/cfg

scp /usr/lib/systemd/system/kubelet.service root@${ip}:/usr/lib/systemd/system

done

#Replace the IP and hostname in the copied configs (run on the node itself)

sed -i 's#10.0.0.81#10.0.0.84#g' /etc/kubernetes/cfg/kubelet-config.yml

sed -i 's#k8s-master01#k8s-node01#g' /etc/kubernetes/cfg/kubelet.conf

#Enable at boot and start

[root@k8s-node01 ~]# systemctl daemon-reload;systemctl enable --now kubelet;systemctl status kubelet.service

4. Configure kube-proxy

[root@k8s-master01 ~]# for ip in n1

do

scp /etc/kubernetes/cfg/{kube-proxy-config.yml,kube-proxy.conf,kube-proxy.kubeconfig} root@${ip}:/etc/kubernetes/cfg/

scp /usr/lib/systemd/system/kube-proxy.service root@${ip}:/usr/lib/systemd/system/

done

#Replace the hostname and IP in the copied config (run on the node itself)

sed -i 's#k8s-master01#k8s-node01#g' /etc/kubernetes/cfg/kube-proxy-config.yml

sed -i 's#10.0.0.81#10.0.0.84#g' /etc/kubernetes/cfg/kube-proxy-config.yml

#Enable at boot and start

[root@k8s-node01 ~]# systemctl daemon-reload; systemctl enable --now kube-proxy; systemctl status kube-proxy

5. Deploy the network plugin

#Distribute the flannel binaries

[root@k8s-master01 ~]# cd /opt/data

[root@k8s-master01 /opt/data]# for i in n1

do

scp flanneld mk-docker-opts.sh root@$i:/usr/local/bin

done

#Distribute the etcd certificates (flanneld uses them to reach etcd)

[root@k8s-master01 /opt/data]# for i in n1

do

ssh root@$i "mkdir -pv /etc/etcd/ssl"

scp -p /etc/etcd/ssl/*.pem root@$i:/etc/etcd/ssl

done

#Distribute the flanneld unit file

[root@k8s-master01 /opt/data]# for i in n1

do

scp /usr/lib/systemd/system/flanneld.service root@$i:/usr/lib/systemd/system

done

#Distribute the docker unit file

[root@k8s-master01 /opt/data]# for ip in n1

do

scp /usr/lib/systemd/system/docker.service root@${ip}:/usr/lib/systemd/system

done

#Start flanneld and restart Docker

[root@k8s-master01 /opt/data]# for i in n1

do

echo ">>> $i"

ssh root@$i "systemctl daemon-reload"

ssh root@$i "systemctl enable --now flanneld"

ssh root@$i "systemctl restart docker"

done

6. Approve the node joining the cluster

#Approve the node's certificate signing request

[root@k8s-master01 /opt/data]# kubectl certificate approve `kubectl get csr | grep "Pending" | awk '{print $1}'`

#Check the nodes

[root@k8s-master01 /opt/data]# kubectl get nodes

NAME           STATUS     ROLES    AGE     VERSION
k8s-master01   Ready      master   4h58m   v1.18.8
k8s-master02   Ready      master   4h58m   v1.18.8
k8s-node01     NotReady   <none>   3s      v1.18.8

#Set the node role label

[root@k8s-master01 /opt/data]# for i in 1

do

kubectl label nodes k8s-node0$i node-role.kubernetes.io/node=k8s-node0$i

done

[root@k8s-master01 ~]# kubectl get nodes

NAME           STATUS   ROLES    AGE     VERSION
k8s-master01   Ready    master   4h58m   v1.18.8
k8s-master02   Ready    master   4h58m   v1.18.8
k8s-node01     Ready    node     147m    v1.18.8

7. Verify the cluster

[root@k8s-master01 ~]# kubectl run test -it --rm --image=busybox:1.28.3

If you don't see a command prompt, try pressing enter.

/ # nslookup kubernetes

Server: 10.96.0.2

Address 1: 10.96.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

8. kubectl tab completion

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

V. Deploy the Kubernetes web UI (Dashboard)

1. Install the Dashboard

[root@k8s-master01 ~]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.5/aio/deploy/recommended.yaml

[root@k8s-master01 ~]# kubectl get pods -n kubernetes-dashboard

NAME                                         READY   STATUS    RESTARTS   AGE
dashboard-metrics-scraper-78f5d9f487-lckpq   1/1     Running   0          46m
kubernetes-dashboard-54445cdd96-wfsdl        1/1     Running   1          46m

2. Expose the port

[root@k8s-master01 ~]# kubectl edit -n kubernetes-dashboard svc kubernetes-dashboard

#In the editor, change the Service type under spec from ClusterIP to:

type: NodePort

3. Check the assigned port

[root@k8s-master01 ~]# kubectl get svc -n kubernetes-dashboard

NAME                        TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
dashboard-metrics-scraper   ClusterIP   10.96.219.246   <none>        8000/TCP        49m
kubernetes-dashboard        NodePort    10.96.194.127   <none>        443:35903/TCP   49m

4. Open it in a browser

https://10.0.0.81:35903
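Rather than reading the NodePort (35903 here) off the table, it can be queried with kubectl's jsonpath output. A sketch, assuming kubectl works on this host and the Service has a single port; `dashboard_url` is a hypothetical helper, and 10.0.0.81 is only a default, since a NodePort is reachable on any node's IP:

```shell
# Sketch: build the Dashboard URL from the Service's NodePort.
# dashboard_url is a hypothetical helper; it assumes the kubernetes-dashboard
# Service exposes a single port and has already been switched to NodePort.
dashboard_url() {
  local node_ip=${1:-10.0.0.81} port
  port=$(kubectl -n kubernetes-dashboard get svc kubernetes-dashboard \
           -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  [ -n "$port" ] || { echo "Service has no NodePort yet" >&2; return 1; }
  printf 'https://%s:%s\n' "$node_ip" "$port"
}
```

`dashboard_url 10.0.0.84` would print the same port on the worker node's address.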

5. Create a login token

[root@k8s-master01 ~]# cat > token.yaml << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system
EOF

[root@k8s-master01 ~]# kubectl apply -f token.yaml

[root@k8s-master01 ~]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}') | grep 'token:' | awk -F: '{print $2}' | tr -d ' '
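The `describe | grep | awk` pipeline works, but the token can also be read straight from the Secret with jsonpath. A sketch assuming v1.18 behaviour, where each ServiceAccount gets an auto-generated token Secret listed under `.secrets`; from Kubernetes 1.24 such Secrets are no longer created automatically and `kubectl create token admin-user -n kube-system` is the usual route. `admin_user_token` is a hypothetical helper:

```shell
# Sketch: print the admin-user login token via jsonpath instead of parsing `describe`.
# admin_user_token is a hypothetical helper; it relies on the auto-created token
# Secret that v1.18 attaches to every ServiceAccount.
admin_user_token() {
  local ns=${1:-kube-system} sa=${2:-admin-user} secret
  secret=$(kubectl -n "$ns" get sa "$sa" -o jsonpath='{.secrets[0].name}') || return 1
  [ -n "$secret" ] || { echo "no token secret for $ns/$sa" >&2; return 1; }
  # Secret data is base64-encoded; decode it to get the bearer token.
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
  echo
}
```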