Hadoop Environment Setup

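About downloading Hadoop and the JDK: if you downloaded them on Windows, you need to move them to the virtual machine. Usually you can simply drag and drop the files. If drag-and-drop does not work, upload them with a remote-connection tool instead. I recommend MobaXterm; for installing and using it, see: https://www.cnblogs.com/cainiao-chuanqi/p/11366726.html

After uploading, you need to create directories such as simple/soft yourself (the command is mkdir xxx). There is no hard rule about where to create them; pick whatever you like, as long as you can find the directories again afterwards.

The environment variables must point to the exact absolute paths where the JDK and Hadoop are stored.

The IP address used in this article is the IP address of my virtual machine. When you follow along, use your own virtual machine's IP address (check it with ifconfig).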

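Installing and Configuring the JDK

First, choosing a JDK: JDK 1.8 is recommended, to avoid compatibility problems, because Hadoop calls many jar packages during installation (Hadoop itself is written in Java).

Download the JDK

Download it into a folder; here I use /home/cai/simple/soft. Copy the downloaded JDK into this folder (download the Linux version of the JDK from the web). PS: use cp or mv to move files.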

cd /home/cai/simple/soft

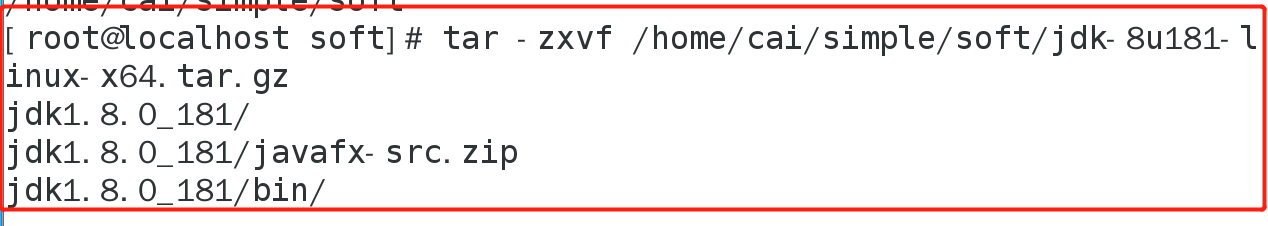

Extract the JDK

If what you downloaded is a compressed archive, you need to extract it:

tar -zxvf /home/cai/simple/soft/jdk-8u181-linux-x64.tar.gz

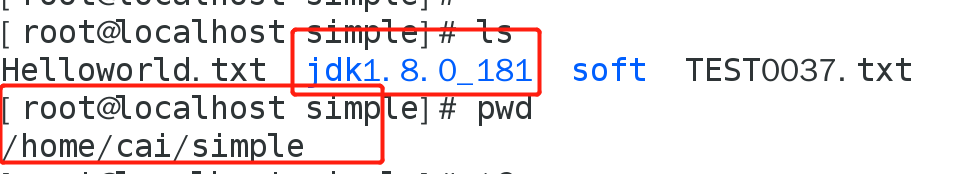

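Note: I extracted the archive into the /home/cai/simple/ directory, so keep that location in mind when setting the environment variables later. The -C option tells tar which directory to extract into:

tar -zxvf /home/cai/simple/soft/jdk-8u181-linux-x64.tar.gz -C /home/cai/simple/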
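tar extracts into the current directory unless told otherwise, and a directory given with -C must already exist. As a rough sketch, the helper below creates the target directory first and then extracts into it. The name unpack_to is hypothetical (it is not part of the JDK or Hadoop), and the example call just reuses the paths from this article.

```shell
# unpack_to ARCHIVE DEST_DIR
# Hypothetical helper: create DEST_DIR if needed, then extract a .tar.gz into it.
unpack_to() {
  mkdir -p "$2" && tar -zxf "$1" -C "$2"
}

# Example call with this article's paths (adjust to your own):
# unpack_to /home/cai/simple/soft/jdk-8u181-linux-x64.tar.gz /home/cai/simple
```

The same helper can be reused for the Hadoop archive later on.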

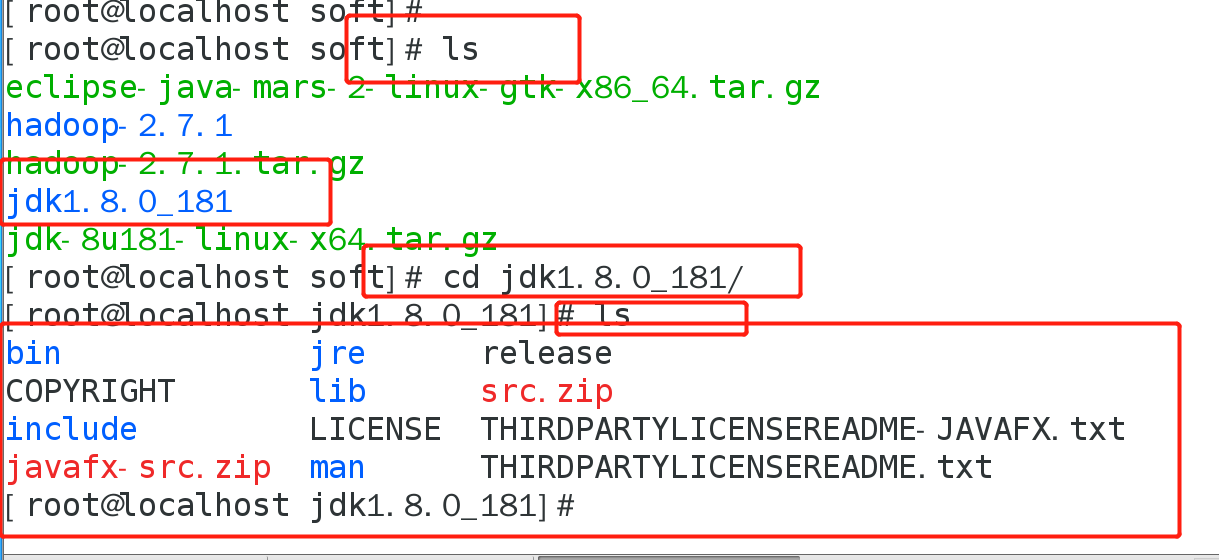

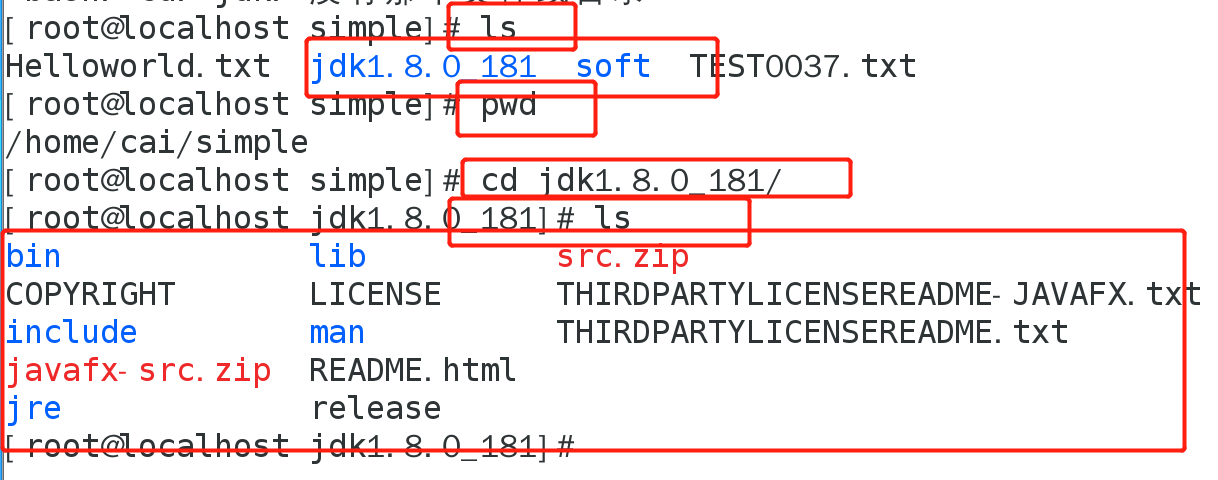

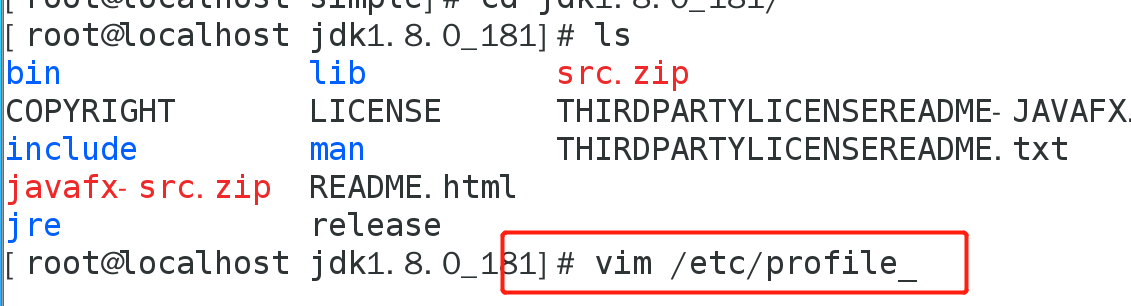

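Enter the JDK directory

cd /home/cai/simple/soft/jdk1.8.0_181

In my case the JDK directory is under:

cd /home/cai/simple/soft/

Adjust this to wherever your own files are, and confirm that the archive extracted without errors.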

Configure the JDK environment

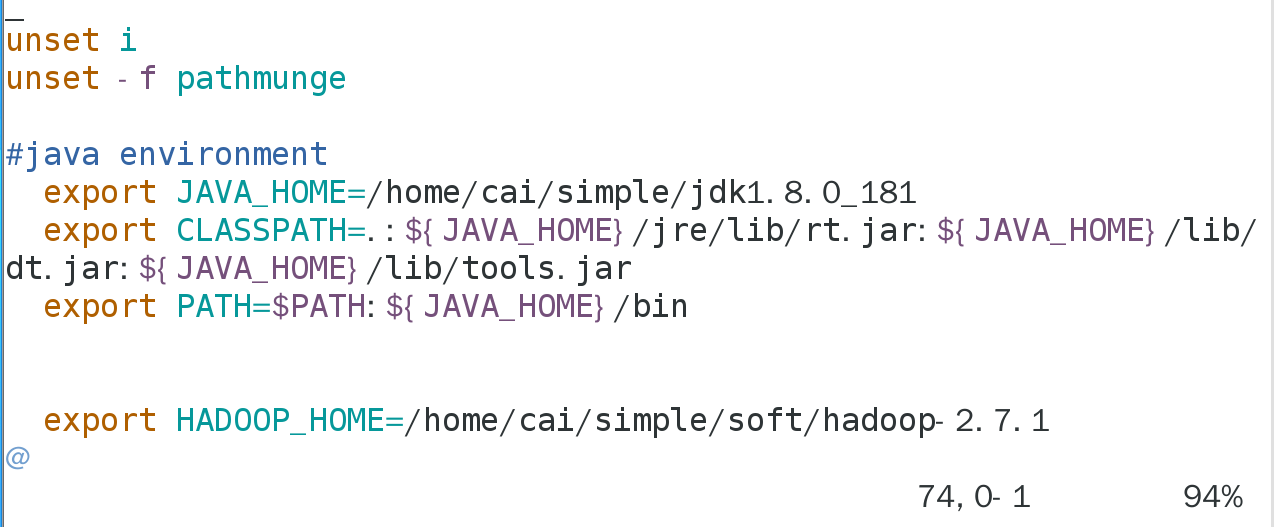

vim /etc/profile

#java environment

export JAVA_HOME=/home/cai/simple/jdk1.8.0_181

export CLASSPATH=.:${JAVA_HOME}/jre/lib/rt.jar:${JAVA_HOME}/lib/dt.jar:${JAVA_HOME}/lib/tools.jar

export PATH=$PATH:${JAVA_HOME}/bin

Reload the configuration file

After editing, run source /etc/profile to reload the file. The settings only take effect after that.

source /etc/profile

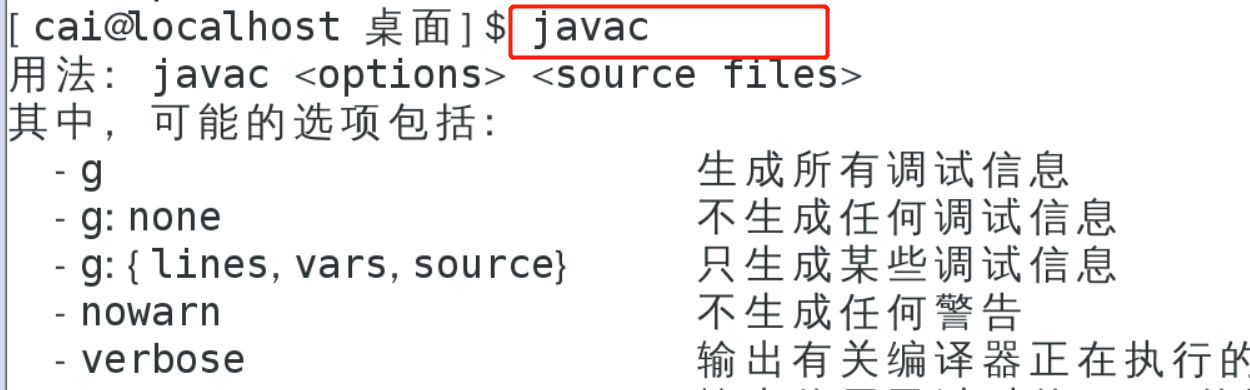

Test the configuration

Run javac from any directory. If you see "command not found", the configuration did not succeed; otherwise it worked.

javac
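The same check can be scripted. This is only a sketch: check_cmd is a hypothetical helper name, and it relies on nothing beyond the shell's built-in command -v, which looks a command up on the current PATH.

```shell
# check_cmd NAME
# Hypothetical helper: report whether NAME can be found on the current PATH.
check_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1: found at $(command -v "$1")"
  else
    echo "$1: not found on PATH"
    return 1
  fi
}

# Check the two JDK commands this article relies on.
check_cmd java  || echo "check JAVA_HOME and PATH in /etc/profile, then run: source /etc/profile"
check_cmd javac || echo "check JAVA_HOME and PATH in /etc/profile, then run: source /etc/profile"
```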

Installing and Configuring Hadoop

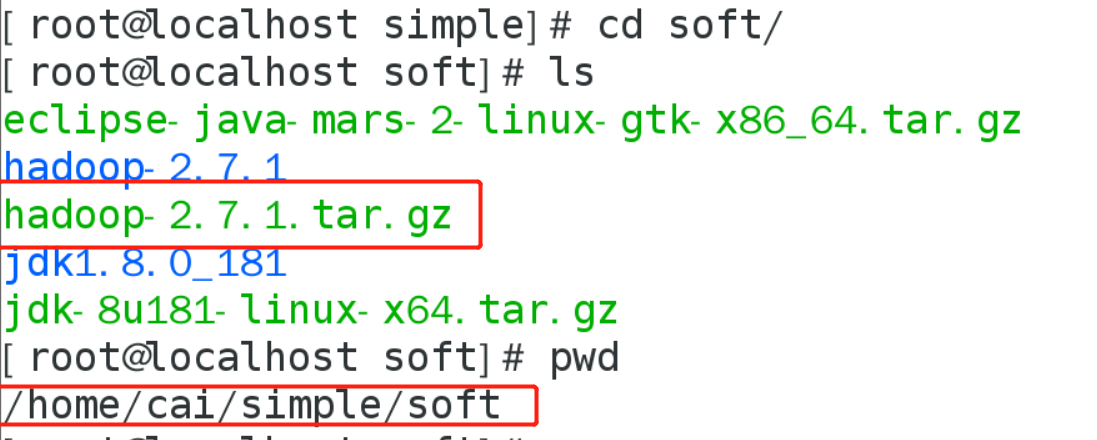

Download Hadoop

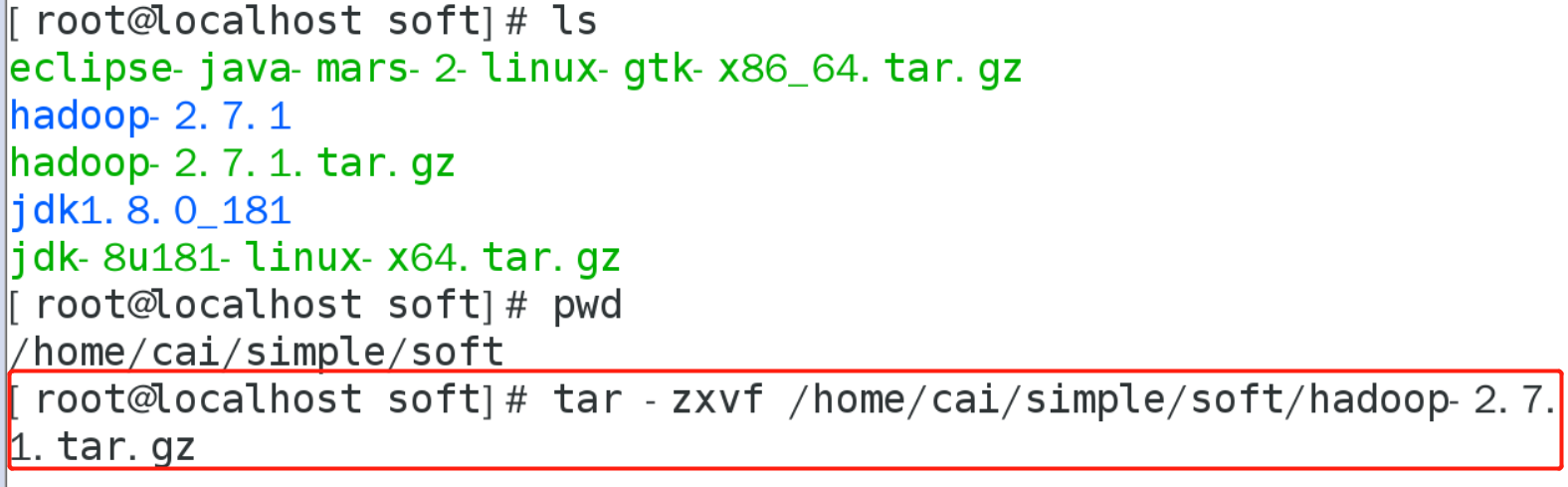

Download the file into /home/cai/simple/soft/ (or a location of your own choice). PS: use cp or mv to move files.

cd /home/cai/simple/soft/

Extract Hadoop

tar -zxvf /home/cai/simple/soft/hadoop-2.7.1.tar.gz

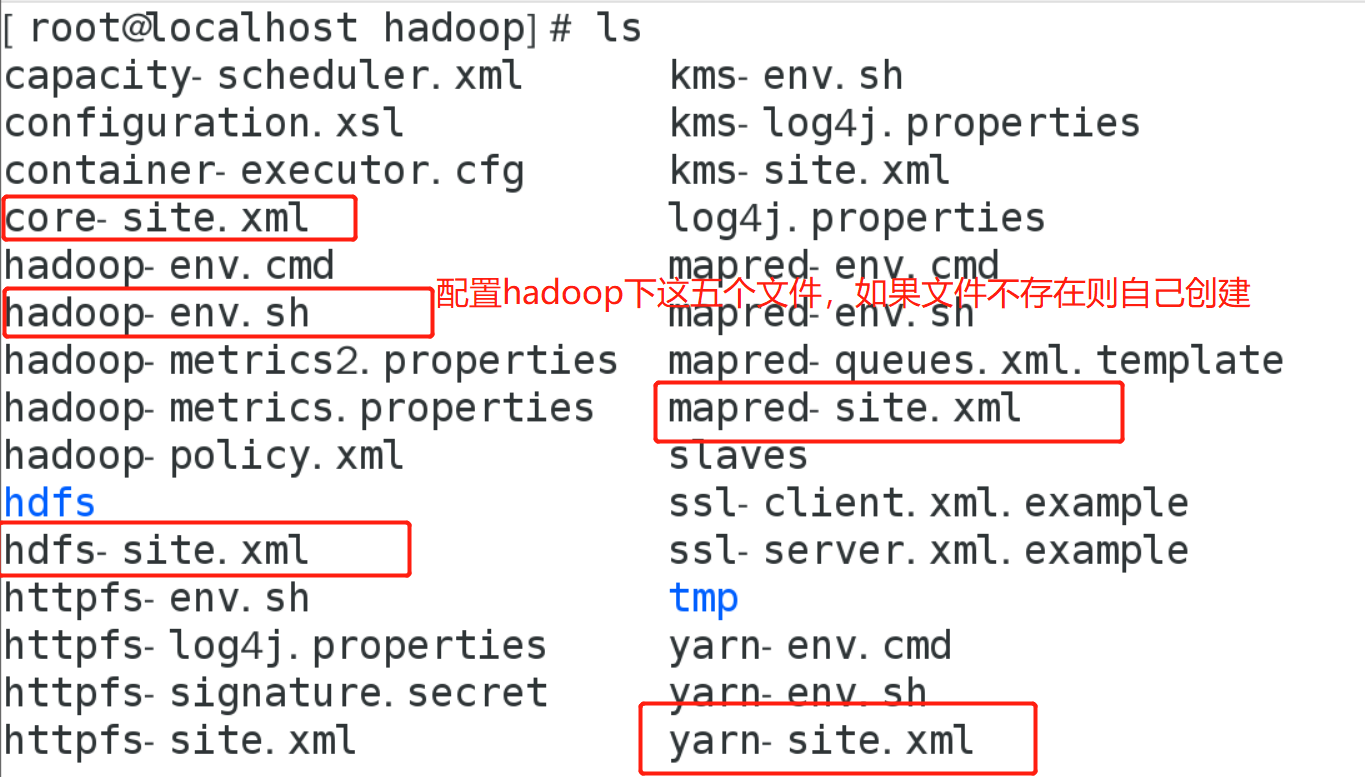

Look at Hadoop's etc directory

First check that extraction succeeded. If it did, go into the hadoop-2.7.1 folder.

List the files under /home/cai/simple/soft/hadoop-2.7.1/etc/hadoop:

cd /home/cai/simple/soft/hadoop-2.7.1/etc/hadoop

View the configuration files

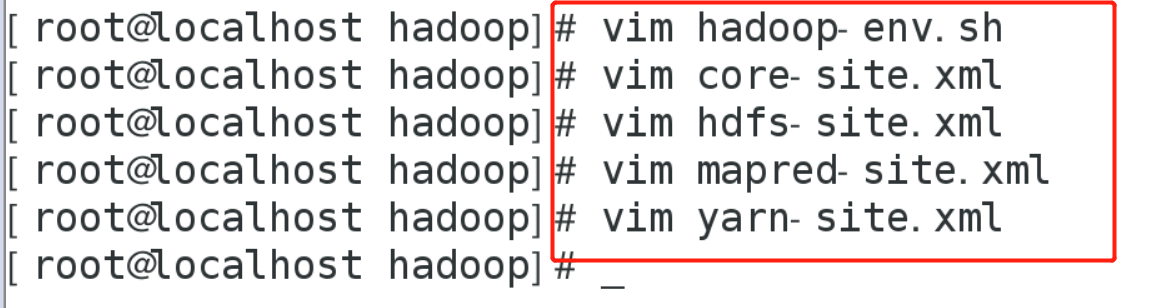

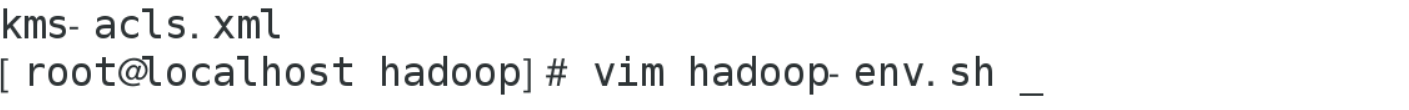

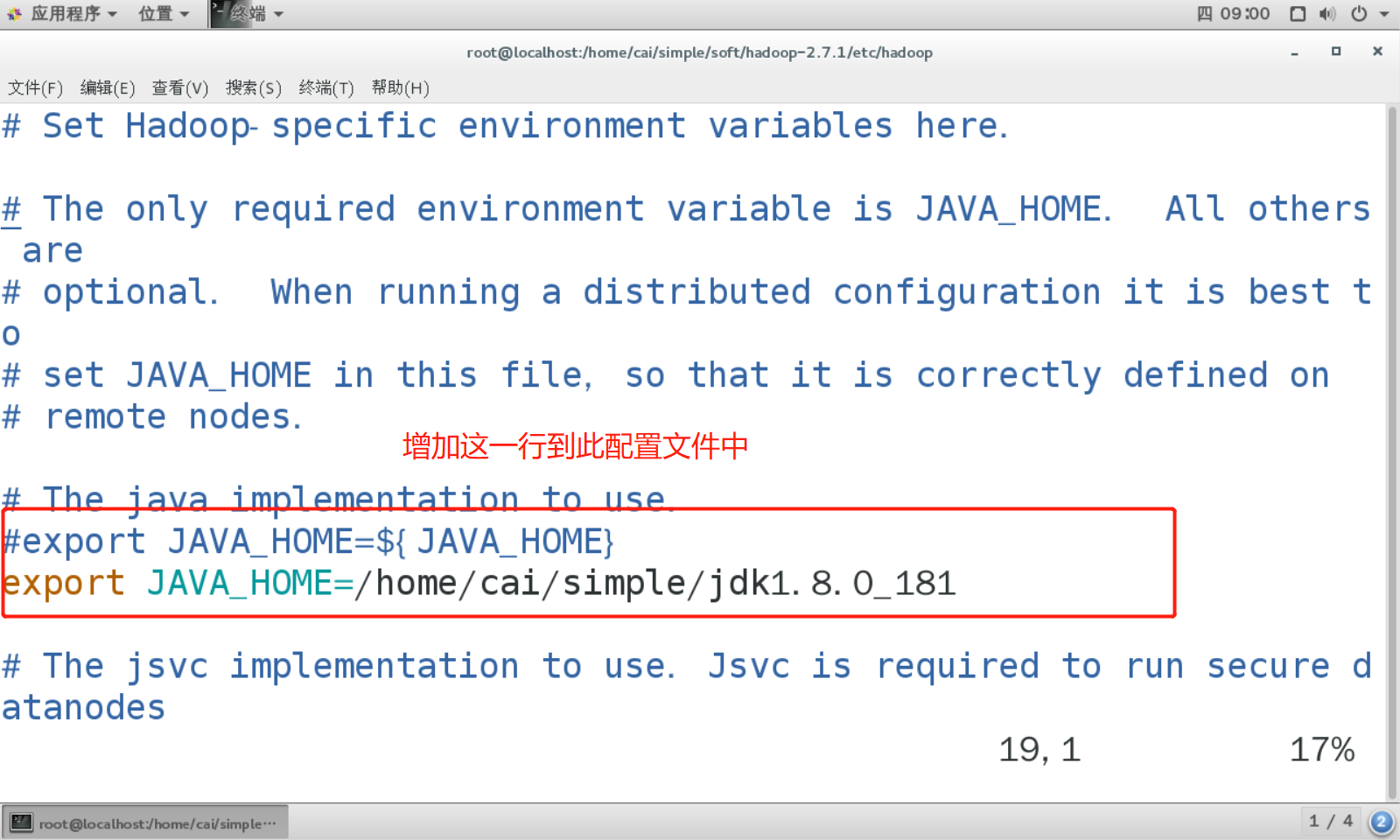

Configure hadoop-env.sh under $HADOOP_HOME/etc/hadoop

vim hadoop-env.sh

# The java implementation to use.

#export JAVA_HOME=${JAVA_HOME}

export JAVA_HOME=/home/cai/simple/jdk1.8.0_181
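A wrong path here is easy to miss until the daemons fail to start. A small sketch of a sanity check follows; check_java_home is a hypothetical helper. It assumes the active setting is written as a plain "export JAVA_HOME=/absolute/path" line, as in the listing above, and it only tests that bin/java exists and is executable under that path.

```shell
# check_java_home /path/to/hadoop-env.sh
# Hypothetical helper: read the last uncommented "export JAVA_HOME=" line
# and verify that it points at a directory containing bin/java.
check_java_home() {
  jh=$(sed -n 's/^export JAVA_HOME=//p' "$1" | tail -n 1)
  if [ -x "$jh/bin/java" ]; then
    echo "OK: JAVA_HOME=$jh"
  else
    echo "JAVA_HOME in $1 has no executable bin/java: $jh"
    return 1
  fi
}

# Example call with this article's path:
# check_java_home /home/cai/simple/soft/hadoop-2.7.1/etc/hadoop/hadoop-env.sh
```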

Configure core-site.xml under $HADOOP_HOME/etc/hadoop

vim core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- HDFS file path -->

<property>

<name>fs.default.name</name>

<value>hdfs://172.16.12.37:9000</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://172.16.12.37:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/cai/simple/soft/hadoop-2.7.1/tmp</value>

<description>A base for other temporary directories.</description>

</property>

</configuration>
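To double-check what a site file actually sets, a value can be pulled out with grep and sed. This is a rough sketch rather than a real XML parser: get_prop is a hypothetical helper, and it assumes the <name> and <value> elements each sit on their own line within two lines of each other, as in the listing above.

```shell
# get_prop FILE PROPERTY_NAME
# Hypothetical helper: print the <value> that follows <name>PROPERTY_NAME</name>.
# Plain text matching only; it does not understand XML comments or nesting.
get_prop() {
  grep -F -A2 "<name>$2</name>" "$1" \
    | sed -n 's:.*<value>\(.*\)</value>.*:\1:p' \
    | head -n 1
}

# Example call with this article's path:
# get_prop /home/cai/simple/soft/hadoop-2.7.1/etc/hadoop/core-site.xml fs.defaultFS
```

Once the Hadoop commands are on the PATH, hdfs getconf -confKey fs.defaultFS reports the value Hadoop itself resolves, which is the more reliable check.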

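Configure hdfs-site.xml under $HADOOP_HOME/etc/hadoop

vim hdfs-site.xml

Note: if you cannot find the hdfs directory, look for the name and data directories under tmp/dfs instead and change the paths accordingly. The path in question is this value: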

<value>/home/cai/simple/soft/hadoop-2.7.1/hdfs/name</value>

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/cai/simple/soft/hadoop-2.7.1/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/home/cai/simple/soft/hadoop-2.7.1/hdfs/data</value>

</property>

<!--

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/cai/simple/soft/hadoop-2.7.1/etc/hadoop

/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/home/cai/simple/soft/hadoop-2.7.1/etc/hadoop

/hdfs/data</value>

</property>

-->

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>

Configure mapred-site.xml under $HADOOP_HOME/etc/hadoop

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>172.16.12.37:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>172.16.12.37:19888</value>

</property>

</configuration>
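One thing to watch before editing this file: as far as I know, the Hadoop 2.7.x tarball ships only mapred-site.xml.template, so mapred-site.xml has to be created from it first. A minimal sketch follows; ensure_mapred_site is a hypothetical helper name, and it leaves an existing mapred-site.xml untouched.

```shell
# ensure_mapred_site CONF_DIR
# Hypothetical helper: create mapred-site.xml from the shipped template,
# but only if mapred-site.xml does not exist yet.
ensure_mapred_site() {
  if [ ! -f "$1/mapred-site.xml" ]; then
    cp "$1/mapred-site.xml.template" "$1/mapred-site.xml"
  fi
}

# Example call with this article's path:
# ensure_mapred_site /home/cai/simple/soft/hadoop-2.7.1/etc/hadoop
```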

Configure yarn-site.xml under $HADOOP_HOME/etc/hadoop

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>172.16.12.37:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>172.16.12.37:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>172.16.12.37:8035</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>172.16.12.37:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>172.16.12.37:8088</value>

</property>

</configuration>
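Because the same IP address is repeated across core-site.xml, mapred-site.xml and yarn-site.xml, it is easy to leave one of them pointing at someone else's machine. The sketch below lists every distinct IPv4-looking string in the site files so that a stray address stands out. list_config_ips is a hypothetical helper, and the pattern is a loose match rather than a strict IP validator.

```shell
# list_config_ips CONF_DIR
# Hypothetical helper: print each distinct IPv4-looking string found in
# the *-site.xml files of CONF_DIR, one per line.
list_config_ips() {
  grep -ohE '[0-9]{1,3}(\.[0-9]{1,3}){3}' "$1"/*-site.xml | sort -u
}

# Example call with this article's path:
# list_config_ips /home/cai/simple/soft/hadoop-2.7.1/etc/hadoop
```

If everything is consistent, the output is a single line holding your own virtual machine's IP address.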

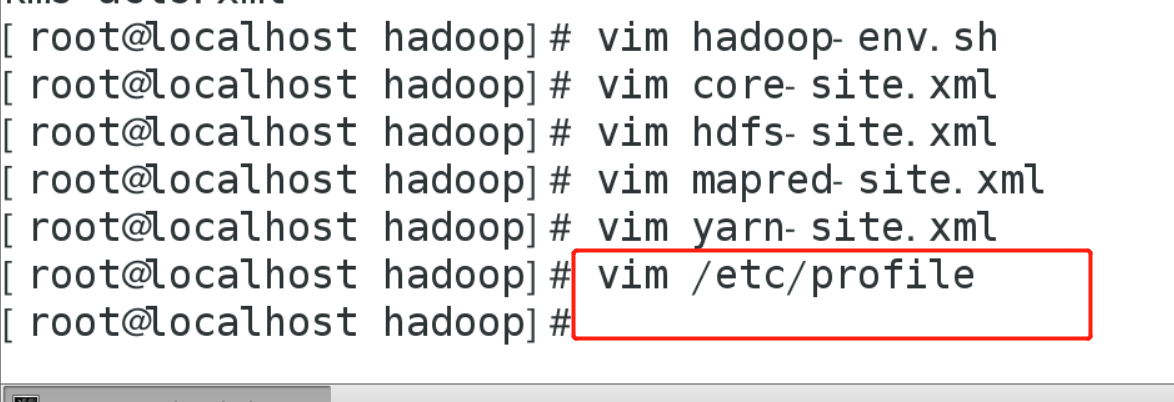

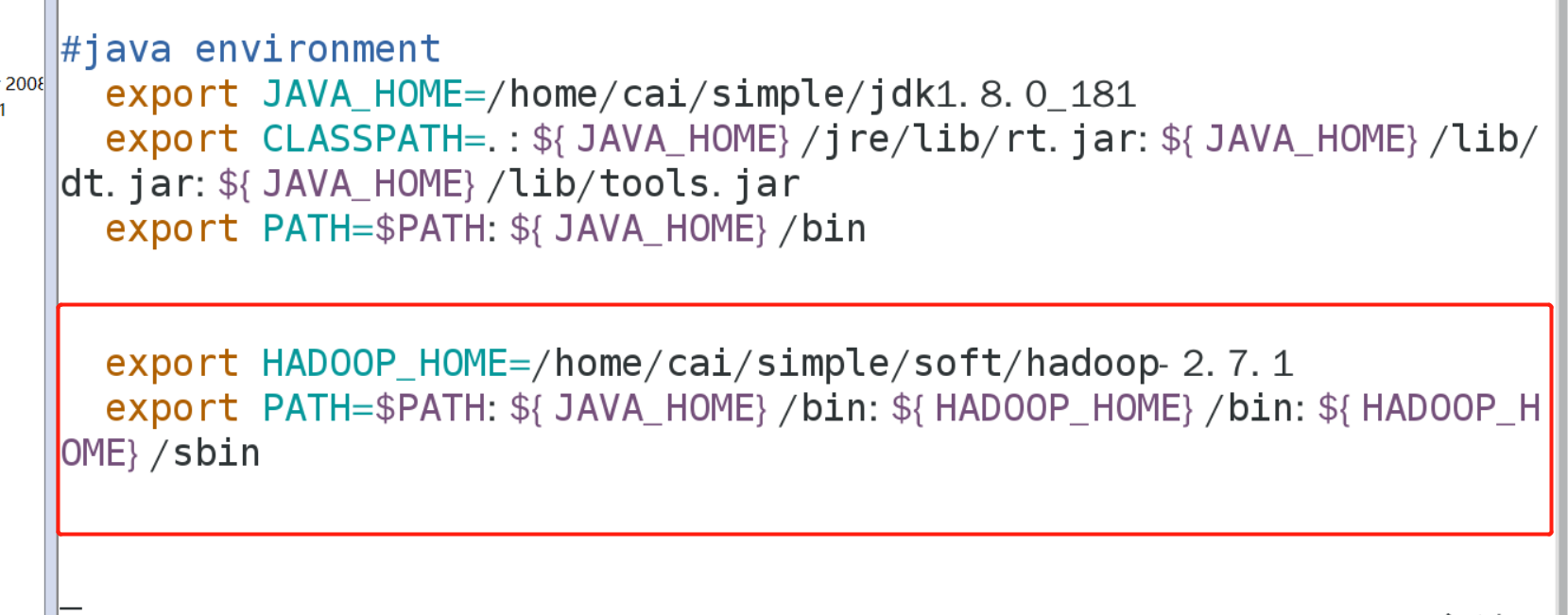

Configure /etc/profile

vim /etc/profile

# /etc/profile

# System wide environment and startup programs, for login setup

# Functions and aliases go in /etc/bashrc

# It's NOT a good idea to change this file unless you know what you

# are doing. It's much better to create a custom.sh shell script in

# /etc/profile.d/ to make custom changes to your environment, as this

# will prevent the need for merging in future updates.

pathmunge () {

case ":${PATH}:" in

*:"$1":*)

;;

*)

if [ "$2" = "after" ] ; then

PATH=$PATH:$1

else

PATH=$1:$PATH

fi

esac

}

if [ -x /usr/bin/id ]; then

if [ -z "$EUID" ]; then

# ksh workaround

EUID=`id -u`

UID=`id -ru`

fi

USER="`id -un`"

LOGNAME=$USER

MAIL="/var/spool/mail/$USER"

fi

# Path manipulation

if [ "$EUID" = "0" ]; then

pathmunge /usr/sbin

pathmunge /usr/local/sbin

else

pathmunge /usr/local/sbin after

pathmunge /usr/sbin after

fi

HOSTNAME=`/usr/bin/hostname 2>/dev/null`

HISTSIZE=1000

if [ "$HISTCONTROL" = "ignorespace" ] ; then

export HISTCONTROL=ignoreboth

else

export HISTCONTROL=ignoredups

fi

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE HISTCONTROL

# By default, we want umask to get set. This sets it for login shell

# Current threshold for system reserved uid/gids is 200

# You could check uidgid reservation validity in

# /usr/share/doc/setup-*/uidgid file

if [ $UID -gt 199 ] && [ "`id -gn`" = "`id -un`" ]; then

umask 002

else

umask 022

fi

for i in /etc/profile.d/*.sh ; do

if [ -r "$i" ]; then

if [ "${-#*i}" != "$-" ]; then

. "$i"

else

. "$i" >/dev/null

fi

fi

done

unset i

unset -f pathmunge

#java environment

export JAVA_HOME=/home/cai/simple/jdk1.8.0_181

export CLASSPATH=.:${JAVA_HOME}/jre/lib/rt.jar:${JAVA_HOME}/lib/dt.jar:${JAVA_HOME}/lib/tools.jar

export PATH=$PATH:${JAVA_HOME}/bin

export HADOOP_HOME=/home/cai/simple/soft/hadoop-2.7.1

export PATH=$PATH:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin
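The comments at the top of /etc/profile itself suggest putting custom settings in a separate script under /etc/profile.d/ instead of editing the file directly. A sketch of that alternative follows. The function name write_hadoop_env and the file name hadoop.sh are my own choices, not something Hadoop requires, and writing into /etc/profile.d/ needs root.

```shell
# write_hadoop_env TARGET_FILE JAVA_HOME_DIR HADOOP_HOME_DIR
# Hypothetical helper: write the Java/Hadoop exports into a standalone script.
write_hadoop_env() {
  cat > "$1" <<EOF
# Java and Hadoop environment
export JAVA_HOME=$2
export HADOOP_HOME=$3
export PATH=\$PATH:\$JAVA_HOME/bin:\$HADOOP_HOME/bin:\$HADOOP_HOME/sbin
EOF
}

# Example call with this article's paths (run as root):
# write_hadoop_env /etc/profile.d/hadoop.sh /home/cai/simple/jdk1.8.0_181 /home/cai/simple/soft/hadoop-2.7.1
```

/etc/profile sources every readable *.sh file in /etc/profile.d/ (see the loop near the end of the listing above), so a new login shell picks the settings up.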

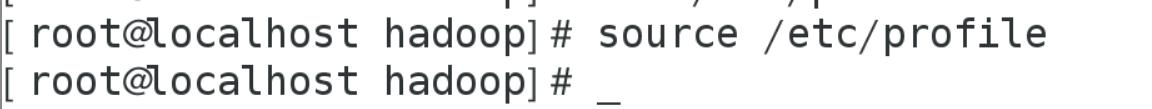

Reload the configuration file

For the changes to take effect, run source /etc/profile:

source /etc/profile

Format the NameNode

To format the NameNode, run hdfs namenode -format from any directory. The older form hadoop namenode -format also works, but Hadoop 2.x reports it as deprecated.

hdfs namenode -format

Start the Hadoop cluster

To start the Hadoop daemons, first run start-dfs.sh, which starts HDFS.

start-dfs.sh

Start the YARN cluster

start-yarn.sh

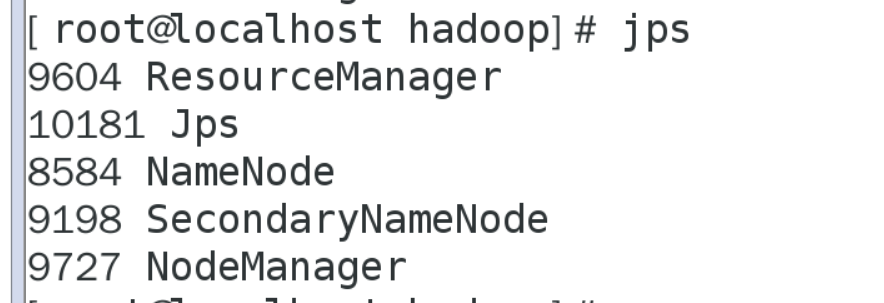

Check the running daemons with jps

jps
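On a single-node setup like this one, start-dfs.sh should bring up NameNode, DataNode and SecondaryNameNode, and start-yarn.sh should bring up ResourceManager and NodeManager. A sketch of a check that reads jps output and names whatever is missing (check_daemons is a hypothetical helper; it assumes the usual "PID Name" layout of jps output):

```shell
# check_daemons: reads `jps` output on stdin.
# Hypothetical helper: print each expected daemon that is not listed and
# return 1 if any is missing.
check_daemons() {
  names=$(awk '{print $2}')   # second column of jps output is the process name
  status=0
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    if ! printf '%s\n' "$names" | grep -qx "$d"; then
      echo "missing: $d"
      status=1
    fi
  done
  return "$status"
}

# Usage:
# jps | check_daemons
```

If a daemon is missing, the log files under the logs directory of the Hadoop installation are the first place to look.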

Testing the web UIs

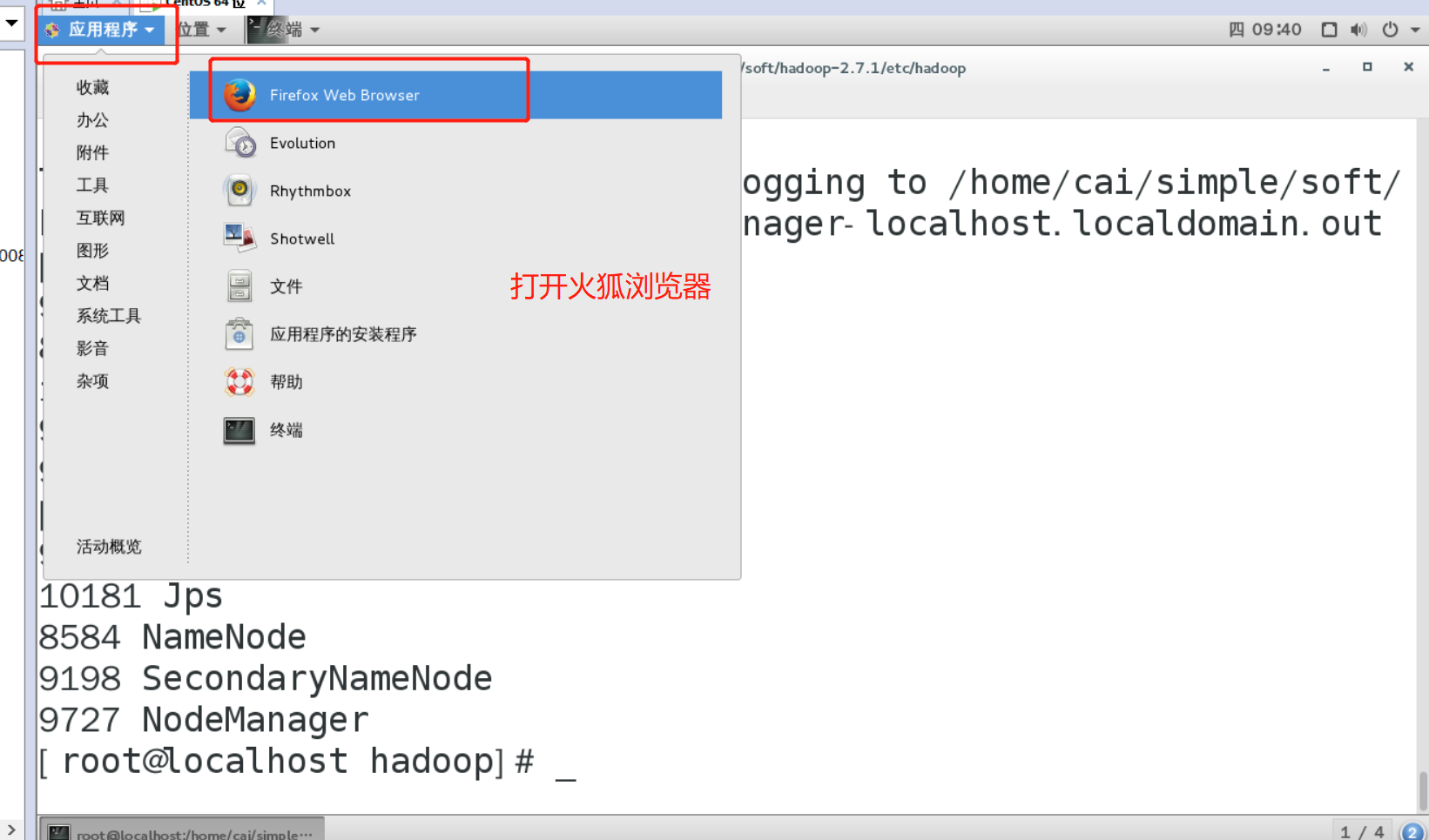

Test HDFS and YARN (Firefox is recommended). There are two ways to open the browser: start it from the command line, or double-click its icon.

firefox

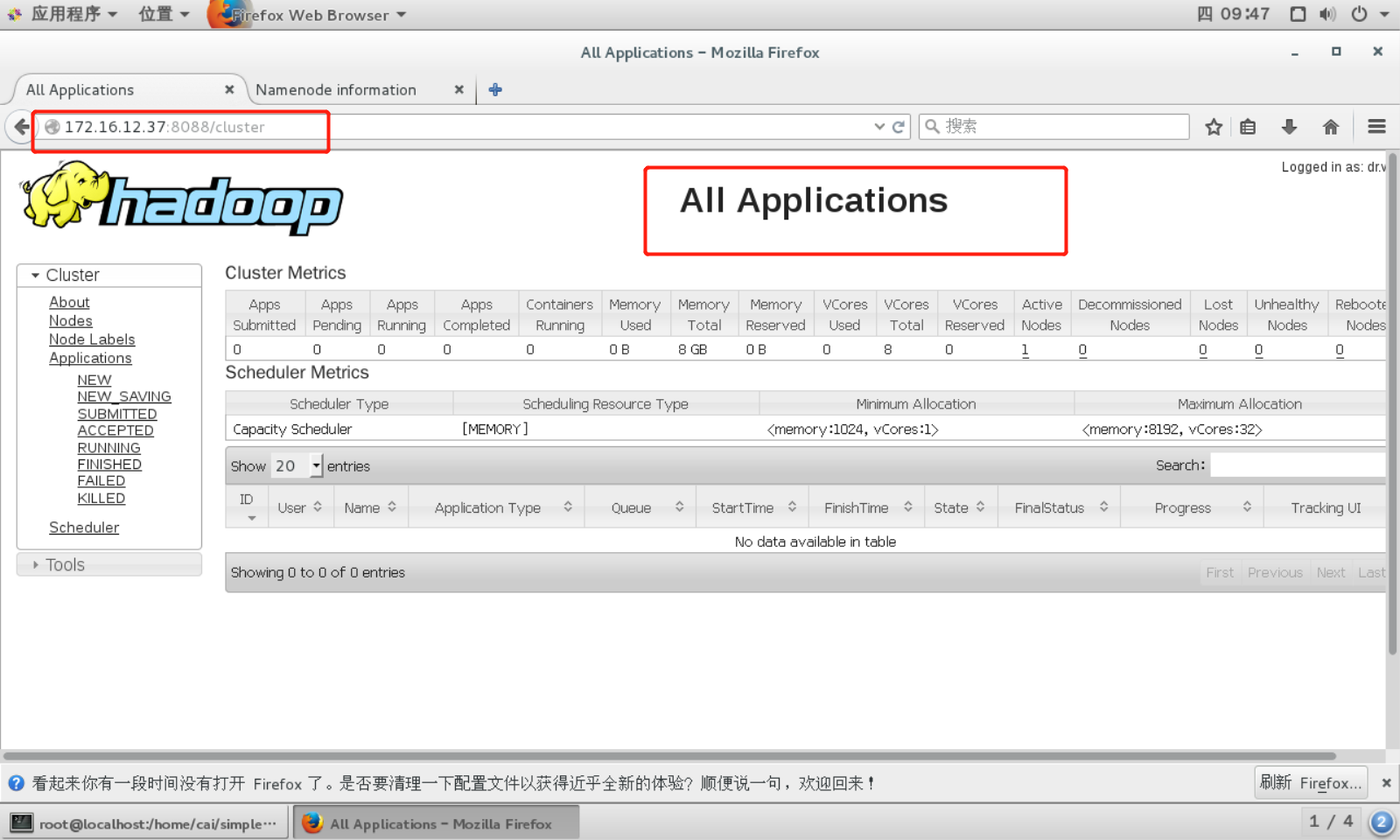

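Ports: 8088 and 50070

First, enter http://172.16.12.37:50070/ in the browser (the HDFS management UI). The IP is your own virtual machine's address and the port is fixed. Everyone's IP is different, so use your own.

Then enter http://172.16.12.37:8088/ in the browser (the MapReduce/YARN management UI). Again, the IP is your own virtual machine's address and the port is fixed.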
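If the virtual machine has no desktop, the same two pages can be probed from the command line. This sketch assumes curl is installed. check_ui is a hypothetical helper that only reports the HTTP status code and does not inspect the page content.

```shell
# check_ui HOST PORT
# Hypothetical helper: request http://HOST:PORT/ and print the HTTP status code.
# Returns 1 if the connection fails or times out after 5 seconds.
check_ui() {
  code=$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "http://$1:$2/") || return 1
  echo "http://$1:$2/ -> HTTP $code"
}

# Example calls with this article's IP (use your own):
# check_ui 172.16.12.37 50070
# check_ui 172.16.12.37 8088
```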