作业一

爬取当当网站图书数据

items.py

import scrapy

class BookItem(scrapy.Item):

title =scrapy.Field()

author = scrapy.Field()

date=scrapy.Field()

publisher=scrapy.Field()

detail=scrapy.Field()

price=scrapy.Field()MySpider.py

import scrapy

from ..items import BookItem

from bs4 import UnicodeDammit

class MySpider(scrapy.Spider):

name = "mySpider"

key = 'python'

source_url='http://search.dangdang.com/'

def start_requests(self):

url = MySpider.source_url+"?key="+MySpider.key

yield scrapy.Request(url=url,callback=self.parse)

def parse(self, response):

try:

dammit = UnicodeDammit(response.body, ["utf-8", "gbk"])

data = dammit.unicode_markup

selector=scrapy.Selector(text=data)

lis=selector.xpath("//li['@ddt-pit'][starts-with(@class,'line')]")

for li in lis:

title=li.xpath("./a[position()=1]/@title").extract_first()

price=li.xpath("./p[@class='price']/span[@class='search_now_price']/text()").extract_first()

author = li.xpath("./p[@class='search_book_author']/span[position()=1]/a/@title").extract_first()

date =li.xpath("./p[@class='search_book_author']/span[position()=last()- 1]/text()").extract_first()

publisher = li.xpath("./p[@class='search_book_author']/span[position()=last()]/a/@title ").extract_first()

detail = li.xpath("./p[@class='detail']/text()").extract_first()

item=BookItem()

item["title"]=title.strip() if title else ""

item["author"]=author.strip() if author else ""

item["date"] = date.strip()[1:] if date else ""

item["publisher"] = publisher.strip() if publisher else ""

item["price"] = price.strip() if price else ""

item["detail"] = detail.strip() if detail else ""

yield item

link = selector.xpath("//div[@class='paging']/ul[@name='Fy']/li[@class='next'] / a / @ href").extract_first()

if link:

url = response.urljoin(link)

yield scrapy.Request(url=url, callback=self.parse)

except Exception as err:

print(err)pipelines.py

from itemadapter import ItemAdapter

import pymysql

class BookPipeline:

def open_spider(self, spider):

print("opened")

try:

self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root", passwd="axx123123", db="mydb",

charset="utf8")

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

self.cursor.execute("delete from money")

self.opened = True

self.count = 0

except Exception as err:

print(err)

self.opened = False

def close_spider(self, spider):

if self.opened:

self.con.commit()

self.con.close()

self.opened = False

print("closed")

def process_item(self, item, spider):

try:

print(item["title"])

print(item["author"])

print(item["publisher"])

print(item["date"])

print(item["price"])

print(item["detail"])

print()

if self.opened:

self.cursor.execute("insert into money(Id,bTitle,bAuthor,bPublisher,bDate,bPrice,bDetail) "

"values (%s,%s,%s,%s,%s,%s,%s)",

(self.count,item["title"],item["author"],item["publisher"],item["date"],item["price"],item["detail"]))

self.count += 1

except Exception as err:

print(err)

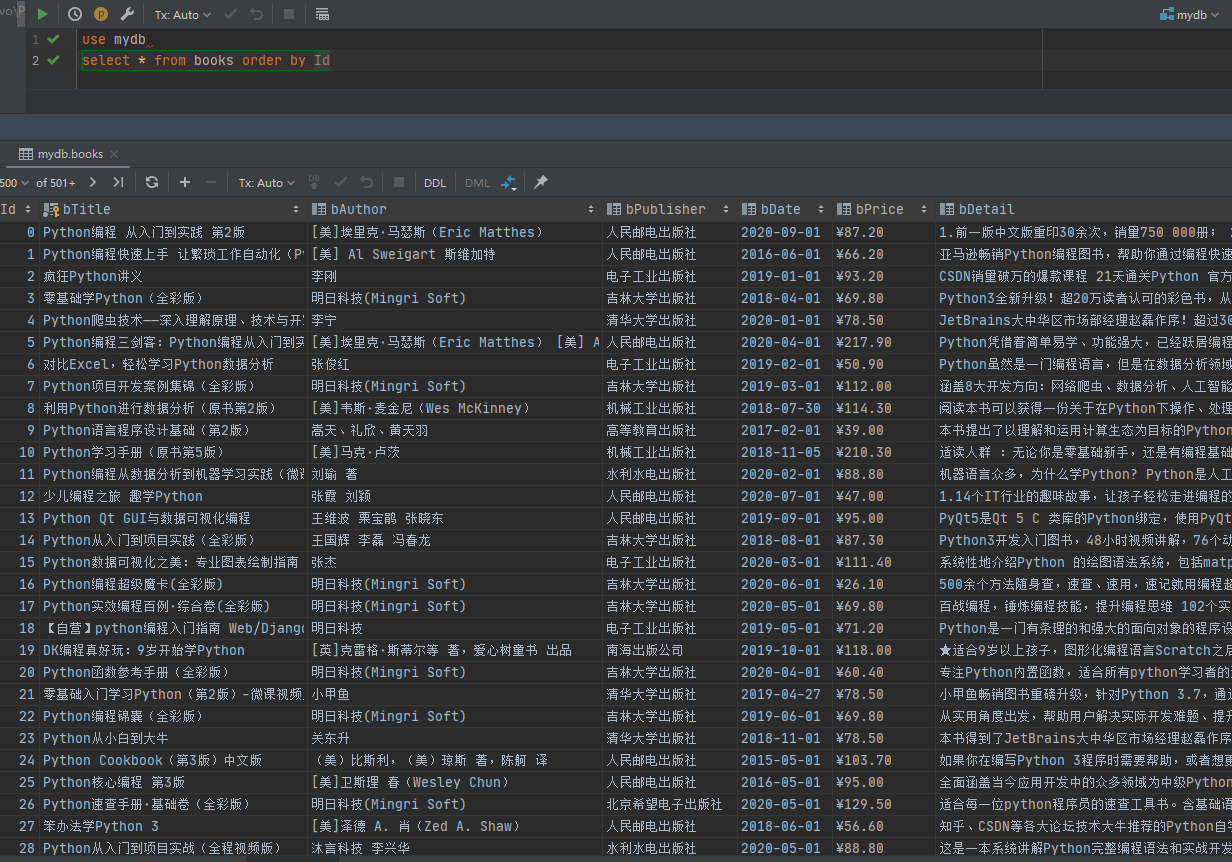

return item运行截图

心得体会

sql语言也有课程在学,所以sql的部分代码也还算不错。

可以在pycharm里面直接查找数据库,但是如果语言报错,只会提示在哪里附近错了,不会提示报错的具体类型,眼神不好使的话就很难过了

作业二

Scrapy+Xpath+MySQL数据库存储技术路线爬取股票相关信息

items.py

import scrapy

class ShareItem(scrapy.Item):

id=scrapy.Field()

shareNumber=scrapy.Field()

shareName=scrapy.Field()

newestPrice=scrapy.Field()

changeRate=scrapy.Field()

changePrice=scrapy.Field()

turnover=scrapy.Field()

turnoverPrice=scrapy.Field()

amplitude=scrapy.Field()

highest=scrapy.Field()

lowest=scrapy.Field()

today=scrapy.Field()

yesterday=scrapy.Field()

passMySpider.py

import scrapy

from selenium import webdriver

from ..items import ShareItem

class MySpider(scrapy.Spider):

name = 'share'

def start_requests(self):

url = 'http://quote.eastmoney.com/center/gridlist.html#hs_a_board'

yield scrapy.Request(url=url, callback=self.parse)

def parse(self, response):

driver = webdriver.Firefox()

try:

driver.get("http://quote.eastmoney.com/center/gridlist.html#hs_a_board")

list=driver.find_elements_by_xpath("//table[@id='table_wrapper-table'][@class='table_wrapper-table']/tbody/tr")

for li in list:

id=li.find_elements_by_xpath("./td[position()=1]")[0].text

shareNumber=li.find_elements_by_xpath("./td[position()=2]/a")[0].text

shareName=li.find_elements_by_xpath("./td[position()=3]/a")[0].text

newestPrice=li.find_elements_by_xpath("./td[position()=5]/span")[0].text

changeRate=li.find_elements_by_xpath("./td[position()=6]/span")[0].text

changePrice =li.find_elements_by_xpath("./td[position()=7]/span")[0].text

turnover =li.find_elements_by_xpath("./td[position()=8]")[0].text

turnoverPrice =li.find_elements_by_xpath("./td[position()=9]")[0].text

amplitude =li.find_elements_by_xpath("./td[position()=10]")[0].text

highest =li.find_elements_by_xpath("./td[position()=11]/span")[0].text

lowest =li.find_elements_by_xpath("./td[position()=12]/span")[0].text

today =li.find_elements_by_xpath("./td[position()=13]/span")[0].text

yesterday =li.find_elements_by_xpath("./td[position()=14]")[0].text

print("%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s"

%(id,shareNumber,shareName,newestPrice,changeRate,changePrice,

turnover,turnoverPrice,amplitude,highest,lowest,today,yesterday))

item=ShareItem()

item["id"]=id

item["shareNumber"]=shareNumber

item["shareName"]=shareName

item["newestPrice"]=newestPrice

item["changeRate"]=changeRate

item["changePrice"]=changePrice

item["turnover"]=turnover

item["turnoverPrice"]=turnoverPrice

item["amplitude"]=amplitude

item["highest"]=highest

item["lowest"]=lowest

item["today"]=today

item["yesterday"]=yesterday

yield item

except Exception as err:

print(err)pipelines.py

import pymysql

class SharePipeline:

def open_spider(self, spider):

print("opened")

try:

self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root", passwd="axx123123", db="mydb",

charset="utf8")

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

self.cursor.execute("delete from share")

self.opened = True

self.count = 0

except Exception as err:

print(err)

self.opened = False

def close_spider(self, spider):

if self.opened:

self.con.commit()

self.con.close()

self.opened = False

print("closed")

def process_item(self, item, spider):

try:

if self.opened:

self.cursor.execute(

"insert into share(Sid,Snumber,Sname,SnewestPrice,SchangeRate,SchangePrice,"

"Sturnover,SturnoverPrice,Samplitude,Shighest,Slowest,Stoday,Syesterday)"

"values (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)",

(item["id"],item["shareNumber"],item["shareName"],item["newestPrice"],item["changeRate"],item["changePrice"],

item["turnover"],item["turnoverPrice"],item["amplitude"],item["highest"],item["lowest"],item["today"],item["yesterday"]))

except Exception as err:

print(err)

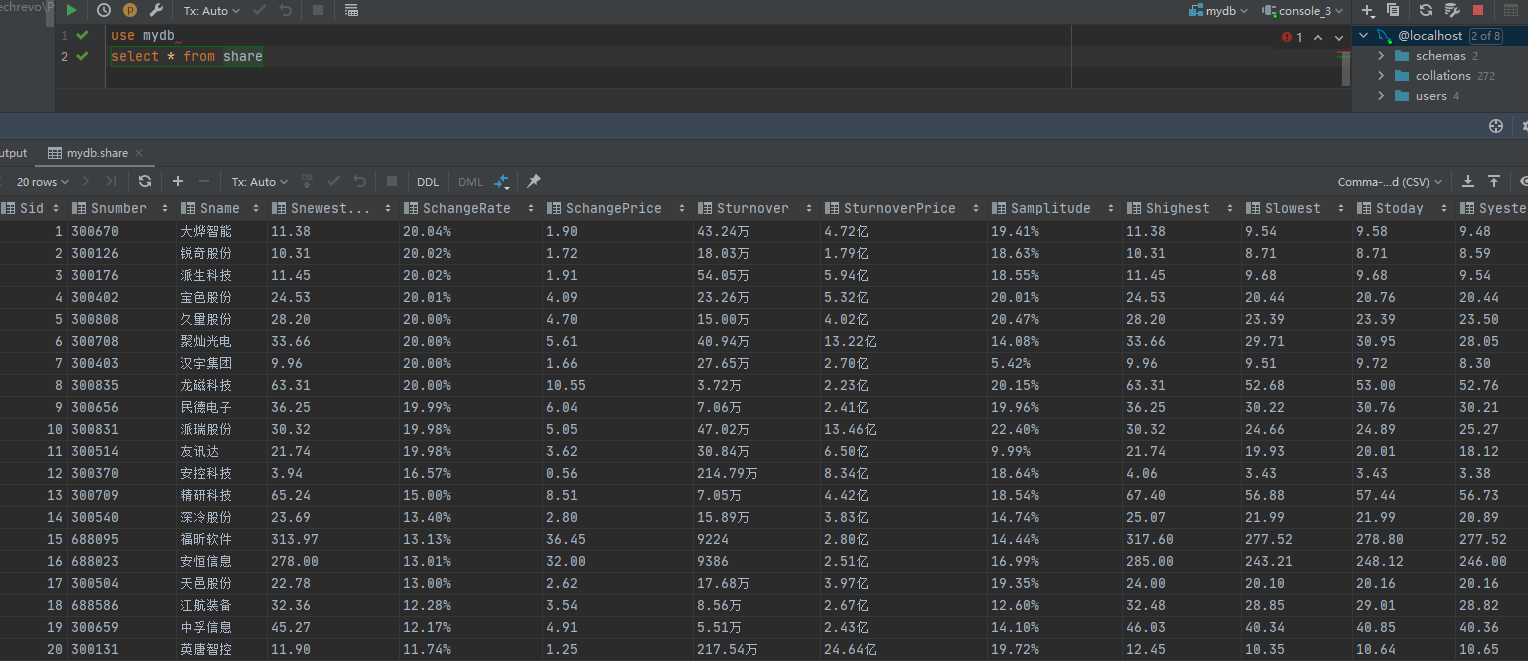

return item运行截图

心得体会

算是第一次接触selenium,比较麻烦的还得用firefox,组装的过程比较麻烦(所以数据表的创建就很笼统的全部是字符串类型),内容和上次实验一样,但是寻找数据方面上花的时间少了许多,selenium+xpath寻找到对应数据的速度比上次快,而且处理上面也省了许多事(firefox自带查找 太香了,孩子很开心 下次还用)

作业三

使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取外汇网站数据。

items.py

import scrapy

class MoneyItem(scrapy.Item):

id=scrapy.Field()

currency=scrapy.Field()

tsp=scrapy.Field()

csp=scrapy.Field()

tbp=scrapy.Field()

cbp=scrapy.Field()

time=scrapy.Field()

passMySpider.py

import scrapy

from ..items import MoneyItem

from bs4 import BeautifulSoup

from bs4 import UnicodeDammit

class MySpider(scrapy.Spider):

name = "mySpider"

source_url='http://fx.cmbchina.com/hq/'

def start_requests(self):

url = MySpider.source_url

yield scrapy.Request(url=url,callback=self.parse)

def parse(self, response):

try:

dammit = UnicodeDammit(response.body, ["utf-8", "gbk"])

data = dammit.unicode_markup

selector=scrapy.Selector(text=data)

count=1

list=selector.xpath("//table[@cellspacing='1']/tr")

idn=1

for li in list:

if count!=1:

id=idn

currency=li.xpath("./td[@class='fontbold']/text()").extract_first().strip()

tsp=li.xpath("./td[@class='numberright'][position()=1]/text()").extract_first().strip()

csp=li.xpath("./td[@class='numberright'][position()=2]/text()").extract_first().strip()

tbp=li.xpath("./td[@class='numberright'][position()=3]/text()").extract_first().strip()

cbp=li.xpath("./td[@class='numberright'][position()=4]/text()").extract_first().strip()

time=li.xpath("./td[@align='center'][position()=3]/text()").extract_first().strip()

item=MoneyItem()

item["id"] = id if id else ""

item["currency"] = currency.strip() if currency else ""

item["tsp"] = tsp if tsp else ""

item["csp"] = csp if csp else ""

item["tbp"] = tbp if tbp else ""

item["cbp"] = cbp if cbp else ""

item["time"] = time if time else ""

yield item

idn=idn+1

count=count+1

except Exception as err:

print(err)pipelines.py

from itemadapter import ItemAdapter

import pymysql

class MoneyPipeline:

def open_spider(self, spider):

print("opened")

try:

self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root", passwd="axx123123", db="mydb",

charset="utf8")

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

self.cursor.execute("delete from money")

self.opened = True

self.count = 0

except Exception as err:

print(err)

self.opened = False

def close_spider(self, spider):

if self.opened:

self.con.commit()

self.con.close()

self.opened = False

print("closed")

def process_item(self, item, spider):

try:

if self.opened:

self.cursor.execute("insert into money(Mid,Mcurrency,Mtsp,Mcsp,Mtbp,Mcbp,Mtime) "

"values (%s,%s,%s,%s,%s,%s,%s)",

(item["id"], item["currency"], item["tsp"], item["csp"], item["tbp"], item["tsp"],item["time"]))

except Exception as err:

print(err)

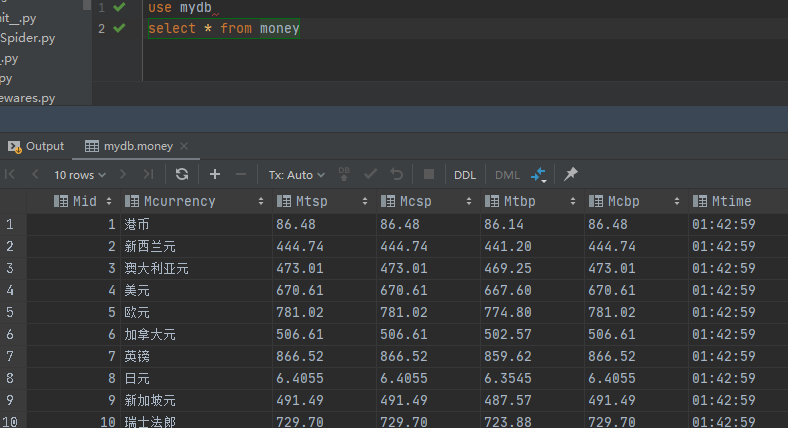

return item运行截图

心得体会

在前两个实验的基础下,加上外汇网站本身的html结构不是很复杂,多用tag[condition]的方法很快就能找到对应数据的xpath表达式