TensorBoard

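TensorBoard is TensorFlow's built-in visualization tool. It shows the structure of an entire neural network as an intuitive dataflow graph, which makes the model easier to understand and problems easier to spot.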

- Visualizing learning: https://www.tensorflow.org/guide/summaries_and_tensorboard

- Graph visualization: https://www.tensorflow.org/guide/graph_viz

- Histogram dashboard: https://www.tensorflow.org/guide/tensorboard_histograms

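Launching TensorBoard

- Run the command “tensorboard --logdir=path/to/log-directory” (or, equivalently, “python -m tensorboard.main --logdir=path/to/log-directory”).

- The logdir argument points to the directory where FileWriter serialized the event data. It is recommended to run the command from the parent directory of logdir.

- Once TensorBoard is running, open “localhost:6006” in a browser to view it.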
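The launch steps above can also be driven from a Python script. The sketch below only assumes that TensorBoard is installed; `tensorboard_argv` and `launch_tensorboard` are hypothetical helper names, and `--port` is TensorBoard's option for the port it serves on (6006 by default).

```python
import shutil
import subprocess
import sys


def tensorboard_argv(logdir, port=6006, use_module=False):
    """Build the command line that starts TensorBoard on `logdir` (hypothetical helper).

    use_module=True gives the `python -m tensorboard.main` form, which helps
    when the `tensorboard` script is not on PATH.
    """
    if use_module:
        prefix = [sys.executable, "-m", "tensorboard.main"]
    else:
        prefix = ["tensorboard"]
    return prefix + ["--logdir={}".format(logdir), "--port={}".format(port)]


def launch_tensorboard(logdir, port=6006):
    """Start TensorBoard in the background and return the Popen handle (hypothetical helper)."""
    use_module = shutil.which("tensorboard") is None
    return subprocess.Popen(tensorboard_argv(logdir, port, use_module))


if __name__ == "__main__":
    print(" ".join(tensorboard_argv("logs")))  # tensorboard --logdir=logs --port=6006
    # proc = launch_tensorboard("logs")  # then browse to http://localhost:6006
    # proc.terminate()                   # stop the server when done
```

Launching through `subprocess.Popen` keeps the training script free to continue while TensorBoard serves the logs.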

Help

- Run “tensorboard --help” to see all of TensorBoard's command-line options.

Example

Program code

```python
# coding=utf-8
from __future__ import print_function
import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide TensorFlow's C++ INFO and WARNING messages

import tensorflow as tf  # TensorFlow 1.x API
import numpy as np
import matplotlib.pyplot as plt


# ### Add a layer
def add_layer(inputs, in_size, out_size, n_layer, activation_function=None):
    """Fully connected layer; n_layer identifies the layer number in TensorBoard."""
    layer_name = 'layer{}'.format(n_layer)
    with tf.name_scope(layer_name):  # group this layer's ops under one node in the Graphs view
        with tf.name_scope('weights'):  # sub-scope nested inside the layer scope
            Weights = tf.Variable(tf.random_normal([in_size, out_size]), name='W')  # name shown in the graph
            tf.summary.histogram(layer_name + '/weights', Weights)  # histogram summary: tag name and tensor to record
        with tf.name_scope('biases'):
            biases = tf.Variable(tf.zeros([1, out_size]) + 0.1, name='b')
            tf.summary.histogram(layer_name + '/biases', biases)
        with tf.name_scope('Wx_plus_b'):
            Wx_plus_b = tf.matmul(inputs, Weights) + biases
        if activation_function is None:
            outputs = Wx_plus_b
        else:
            outputs = activation_function(Wx_plus_b)
        tf.summary.histogram(layer_name + '/outputs', outputs)
    return outputs


# ### Build the data
x_data = np.linspace(-1, 1, 300, dtype=np.float32)[:, np.newaxis]
noise = np.random.normal(0, 0.05, x_data.shape).astype(np.float32)
y_data = np.square(x_data) - 0.5 + noise

# ### Build the network
with tf.name_scope('inputs'):
    xs = tf.placeholder(tf.float32, [None, 1], name='x_input')  # displayed as x_input in the graph
    ys = tf.placeholder(tf.float32, [None, 1], name='y_input')  # displayed as y_input in the graph

h1 = add_layer(xs, 1, 10, n_layer=1, activation_function=tf.nn.relu)  # hidden layer
prediction = add_layer(h1, 10, 1, n_layer=2, activation_function=None)  # output layer

with tf.name_scope('loss'):
    loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction), axis=[1]))
    tf.summary.scalar('loss', loss)  # scalar summary; plotted in the Scalars tab

with tf.name_scope('train'):
    train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

sess = tf.Session()
merged = tf.summary.merge_all()  # merge all summary ops defined above into a single op
writer = tf.summary.FileWriter("logs/", sess.graph)  # create the event file in logs/ and write the graph to it
init = tf.global_variables_initializer()
sess.run(init)

# ### Visualize the results
fig = plt.figure()
ax = fig.add_subplot(1, 1, 1)
ax.scatter(x_data, y_data)
plt.ion()  # interactive mode so the figure updates during training
plt.show()

# ### Train
lines = None  # the currently drawn prediction curve
for i in range(1001):
    sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
    if i % 50 == 0:
        result = sess.run(loss, feed_dict={xs: x_data, ys: y_data})
        print("Steps:{} Loss:{}".format(i, result))
        rs = sess.run(merged, feed_dict={xs: x_data, ys: y_data})  # evaluate all summaries
        writer.add_summary(rs, i)  # record them at global step i
        if lines is not None:
            lines[0].remove()  # remove the previous prediction curve
        prediction_value = sess.run(prediction, feed_dict={xs: x_data})
        lines = ax.plot(x_data, prediction_value, 'r-', lw=5)
        plt.pause(0.2)

writer.close()  # flush pending events to disk
```
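The loss in the example first sums the squared error over axis 1 (the output dimensions of each sample) and then averages over the batch; in TF 1.x, `axis` and its deprecated alias `reduction_indices` name the same argument. A small NumPy mirror of that expression, using made-up values, shows that with a single output column it is just the ordinary mean squared error:

```python
import numpy as np


def tf_style_loss(y_true, y_pred):
    """NumPy mirror of tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction), axis=[1]))."""
    per_sample = np.sum(np.square(y_true - y_pred), axis=1)  # sum over output dimensions
    return np.mean(per_sample)  # average over the batch


y_true = np.array([[1.0], [2.0], [3.0]])  # made-up targets, one output column
y_pred = np.array([[1.5], [2.0], [2.0]])  # made-up predictions
print(tf_style_loss(y_true, y_pred))        # (0.25 + 0 + 1) / 3, about 0.4167
print(np.mean(np.square(y_true - y_pred)))  # same value: plain MSE for one column
```

With more than one output column the two differ: the summed form scales with the number of outputs, while plain MSE averages over them.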

程序运行结果

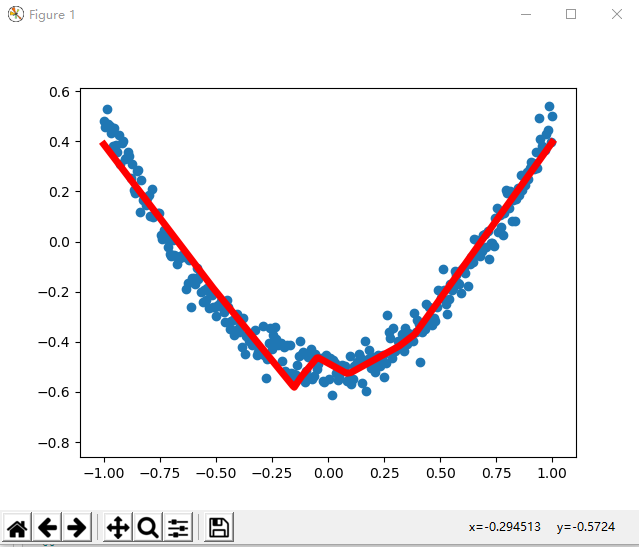

运行过程中显示的图形:

某一次运行的命令行输出:

Steps:0 Loss:0.19870562851428986

Steps:50 Loss:0.006314810831099749

Steps:100 Loss:0.0050856382586061954

Steps:150 Loss:0.0048223137855529785

Steps:200 Loss:0.004617161583155394

Steps:250 Loss:0.004429362714290619

Steps:300 Loss:0.004260621033608913

Steps:350 Loss:0.004093690309673548

Steps:400 Loss:0.003932977095246315

Steps:450 Loss:0.0038178395479917526

Steps:500 Loss:0.003722294932231307

Steps:550 Loss:0.003660505171865225

Steps:600 Loss:0.0036110866349190474

Steps:650 Loss:0.0035716891288757324

Steps:700 Loss:0.0035362064372748137

Steps:750 Loss:0.0034975067246705294

Steps:800 Loss:0.003465239657089114

Steps:850 Loss:0.003431882942095399

Steps:900 Loss:0.00339301535859704

Steps:950 Loss:0.0033665322698652744

Steps:1000 Loss:0.003349516075104475
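The training loop evaluates `loss` and then `merged` with the same feed and no training step in between, so each printed pair should correspond to a point on the loss curve in the Scalars tab. A minimal sketch (the helper name `parse_loss_log` is hypothetical) that turns printed lines like the ones above into (step, loss) pairs, for example to compare runs:

```python
import re

# Matches lines printed by the example, e.g. "Steps:50 Loss:0.0063".
LOG_LINE = re.compile(r"Steps:(\d+)\s+Loss:([0-9.eE+-]+)")


def parse_loss_log(text):
    """Return [(step, loss), ...] from lines of the form 'Steps:<i> Loss:<value>'."""
    return [(int(m.group(1)), float(m.group(2))) for m in LOG_LINE.finditer(text)]


sample = """Steps:0 Loss:0.19870562851428986
Steps:50 Loss:0.006314810831099749
Steps:1000 Loss:0.003349516075104475"""

series = parse_loss_log(sample)
for step, value in series:
    print(step, value)
```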

The generated TensorBoard event file:

```
(mlcc) D:\Anliven\Anliven-Code\PycharmProjects\TempTest>dir logs
 Volume in drive D is Files
 Volume Serial Number is ACF9-2E0E

 Directory of D:\Anliven\Anliven-Code\PycharmProjects\TempTest\logs

2019/02/24  23:41    <DIR>          .
2019/02/24  23:41    <DIR>          ..
2019/02/24  23:41           137,221 events.out.tfevents.1551022894.DESKTOP-68OFQFP
               1 File(s)        137,221 bytes
               2 Dir(s)  219,401,887,744 bytes free

(mlcc) D:\Anliven\Anliven-Code\PycharmProjects\TempTest>
```
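In TF 1.x, event files are named `events.out.tfevents.<Unix timestamp>.<hostname>`, so the listing above shows a file created at Unix time 1551022894 on host DESKTOP-68OFQFP. The sketch below uses hypothetical helpers and assumes that naming pattern; it decodes the file name and collects every event file under a logdir. Unix time 1551022894 is 2019-02-24 15:41:34 UTC, which agrees with the 23:41 in the listing if the machine was on UTC+8.

```python
import glob
import os
from datetime import datetime, timezone


def parse_event_filename(path):
    """Split 'events.out.tfevents.<unix_time>.<hostname>' into (datetime_utc, hostname).

    Assumes the TF 1.x naming pattern; anything after the first dot following
    the timestamp is returned as the hostname part.
    """
    name = os.path.basename(path)
    prefix = "events.out.tfevents."
    if not name.startswith(prefix):
        raise ValueError("not an event file: " + name)
    timestamp, _, host = name[len(prefix):].partition(".")
    return datetime.fromtimestamp(int(timestamp), tz=timezone.utc), host


def find_event_files(logdir):
    """Recursively list event files under logdir; TensorBoard shows each containing directory as a run."""
    pattern = os.path.join(logdir, "**", "events.out.tfevents.*")
    return sorted(glob.glob(pattern, recursive=True))


when, host = parse_event_filename("logs/events.out.tfevents.1551022894.DESKTOP-68OFQFP")
print(when.isoformat(), host)  # 2019-02-24T15:41:34+00:00 DESKTOP-68OFQFP
```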

Launching and viewing TensorBoard

Run the launch command below, then open “http://localhost:6006/” in a browser.

```
(mlcc) D:\Anliven\Anliven-Code\PycharmProjects\TempTest>tensorboard --logdir=logs
TensorBoard 1.12.0 at http://DESKTOP-68OFQFP:6006 (Press CTRL+C to quit)
```

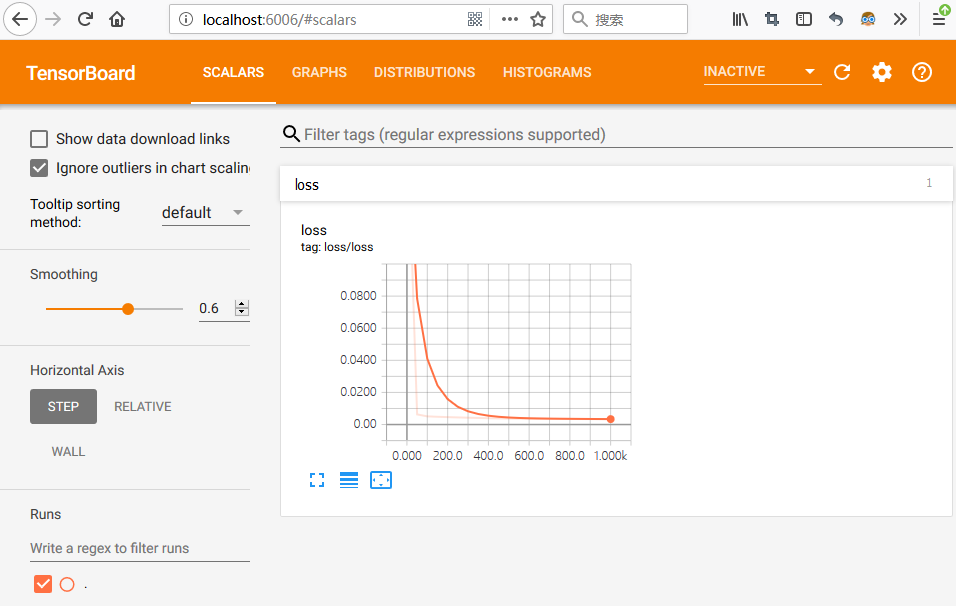

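The Scalars tab

The Graphs tab

- Use the mouse wheel to zoom and pan the graph.

- Double-click a node marked “+” to expand it and see more detail.

- A selected node can be removed from the main graph and displayed on its own.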

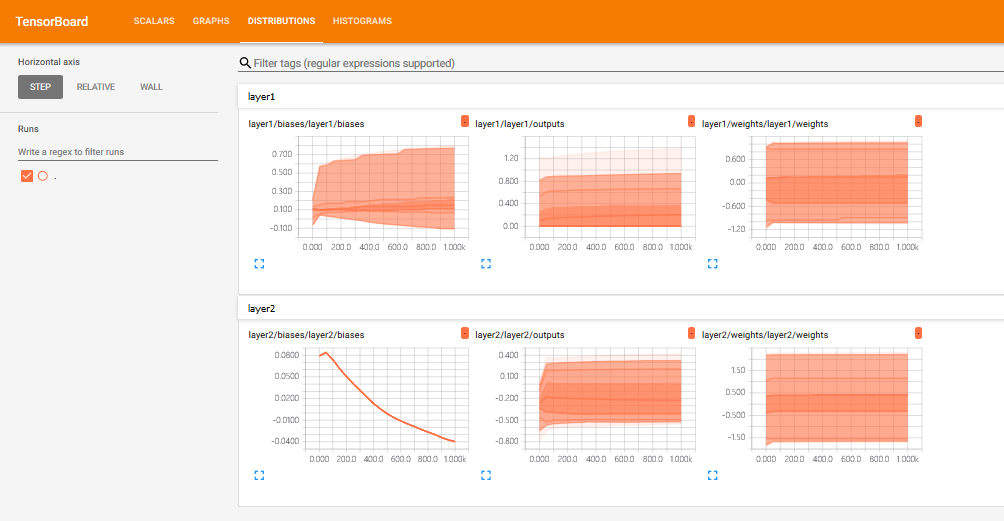
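The collapsible boxes in the Graphs tab come from the "/"-separated op names that `tf.name_scope` produces (for example `layer1/weights/W`). The following is a conceptual sketch only, not TensorBoard's actual layout code: it nests a few illustrative op names from the example into a tree and marks expandable scopes with “+”, much like the nodes you double-click in the graph.

```python
def build_scope_tree(op_names):
    """Nest '/'-separated op names into dicts, one level per name scope.

    Conceptual sketch: TensorBoard's real graph view also handles edges,
    series grouping and uniquified names such as 'layer1_1'.
    """
    tree = {}
    for name in op_names:
        node = tree
        for part in name.split("/"):
            node = node.setdefault(part, {})
    return tree


def render(tree, indent=0):
    """Yield one line per node; '+' marks a scope that can be expanded."""
    for key in sorted(tree):
        marker = "+ " if tree[key] else "  "
        yield " " * indent + marker + key
        yield from render(tree[key], indent + 4)


# Illustrative op names, modeled on the scopes defined in the example.
ops = [
    "inputs/x_input", "inputs/y_input",
    "layer1/weights/W", "layer1/biases/b", "layer1/Wx_plus_b/MatMul",
    "loss/Mean", "train/GradientDescent",
]
print("\n".join(render(build_scope_tree(ops))))
```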

The Distributions tab

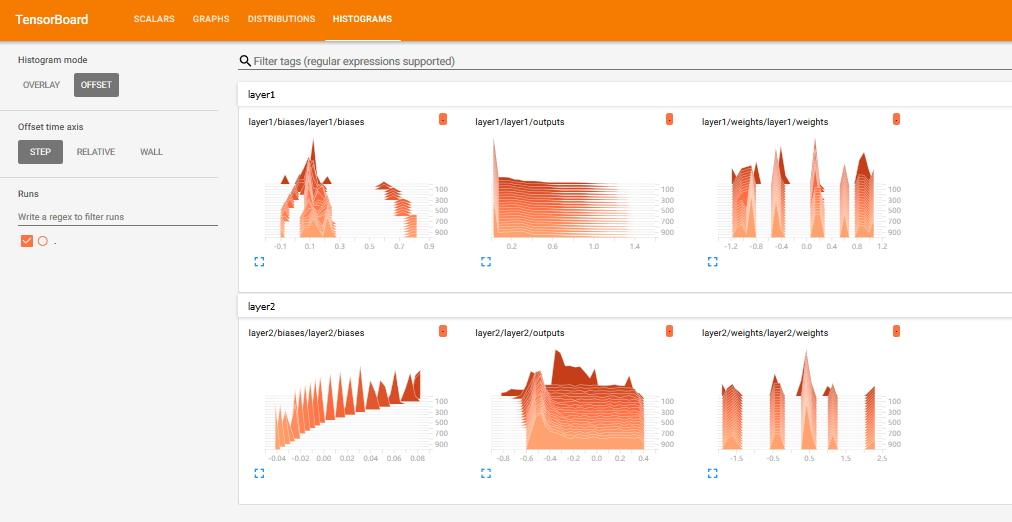
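According to the histogram dashboard guide linked at the top, each line in the Distributions chart tracks one percentile over time; reading from bottom to top they are the minimum, 7%, 16%, 31%, 50%, 69%, 84%, 93% and the maximum (roughly the ±0.5σ, ±1σ, ±1.5σ points of a normal distribution). A minimal sketch, assuming raw values are available: TensorBoard itself derives these lines from the stored histogram buckets, so `np.percentile` on raw values only approximates them, and `distribution_bands` is a hypothetical name.

```python
import numpy as np

# Percentiles drawn by the Distributions tab, from the bottom line to the top line.
DIST_PERCENTILES = [0, 7, 16, 31, 50, 69, 84, 93, 100]


def distribution_bands(values):
    """Approximate one time step of the Distributions chart for a tensor's values."""
    flat = np.asarray(values, dtype=np.float64).ravel()
    return dict(zip(DIST_PERCENTILES, np.percentile(flat, DIST_PERCENTILES)))


rng = np.random.RandomState(0)
weights = rng.normal(size=(1, 10))  # same shape as layer1's W in the example
for p, v in distribution_bands(weights).items():
    print("{:>3}%: {:+.3f}".format(p, v))
```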

The Histograms tab
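The Histograms tab draws one histogram per recorded step for each `tf.summary.histogram` tag. In TF 1.x the summary is stored as a HistogramProto holding min, max, num, sum, sum_squares and bucket limits with bucket counts. The sketch below is a rough stand-in for one such record: `histogram_summary` is a hypothetical name, and it uses NumPy's uniform bins, whereas TensorFlow uses its own exponentially spaced bucket limits.

```python
import numpy as np


def histogram_summary(values, bins=30):
    """Collect HistogramProto-like statistics for one step (approximation, uniform bins)."""
    flat = np.asarray(values, dtype=np.float64).ravel()
    counts, edges = np.histogram(flat, bins=bins)
    return {
        "min": flat.min(),
        "max": flat.max(),
        "num": flat.size,
        "sum": flat.sum(),
        "sum_squares": np.square(flat).sum(),
        "bucket_limit": edges[1:],  # right edge of each bucket
        "bucket": counts,           # number of values falling in each bucket
    }


# One step of the 'layer1/biases' histogram at initialization: ten biases equal to 0.1.
biases = np.zeros((1, 10)) + 0.1
h = histogram_summary(biases, bins=5)
print(h["num"], h["min"], h["max"], h["bucket"])
```

At initialization all ten biases sit in a single bucket; as training proceeds, the per-step histograms for layer1/biases spread out.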