神经网络通过torch.nn包来重建。

神经网络基于自动梯度(autograd)来定义一些模型。一个nn.Module包括层和一个方法forward(input),会返回输出(output)。

一个典型的神经网络训练过程包括以下几个:

1.定义一个包含可训练参数的神经网络

2.迭代整个输入

3.通过神经网络处理输入

4.计算损失(损失)

5.反向传播梯度到神经网络的参数

6.更新网络的参数,典型的用一个简单的更新方法:权重 = 权重 - 学习率 *梯度

定义神经网络:

1 class Net(nn.Module): 2 3 def __init__(self): 4 super(Net, self).__init__() 5 # 1 input image channel, 6 output channels, 5*5 square conwolution 6 # kernel 7 self.conv1 = nn.Conv2d(1, 6, 5) # 定义一个输入深度为1,输出为6,卷积核大小为 5*5 的 conv1 变量 8 self.conv2 = nn.Conv2d(6, 16, 5) # 输入通道数为6 输出通道数为16 9 # an affine operation: y = Wx + b 10 self.fc1 = nn.Linear(16 * 5 * 5, 120) 11 self.fc2 = nn.Linear(120, 84) 12 self.fc3 = nn.Linear(84, 10) # 最终属于10类中的一个 13 14 def forward(self, x): 15 # 定义forward()函数:可以在此函数中使用任何Tensor操作 16 # backward()函数被autograd自动定义 17 # Max pooling over a (2,2) window 18 # 输入x -> conv1 -> relu -> 2*2窗口的最大池化 19 x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2)) 20 # If the size is a square you can only specify a single number 21 x = F.max_pool2d(F.relu(self.conv2(x)), 2) 22 # view 函数将张量x变形成一维向量形式,总特征数不变,为全连接层做准备 23 x = x.view(-1, self.num_flat_features(x)) 24 x = F.relu(self.fc1(x)) 25 x = F.relu(self.fc2(x)) 26 x = self.fc3(x) 27 return x 28 29 def num_flat_features(self, x): 30 size = x.size()[1:] # all dimensions except the batch dimension 31 num_features = 1 32 for s in size: 33 num_features *= s 34 return num_features

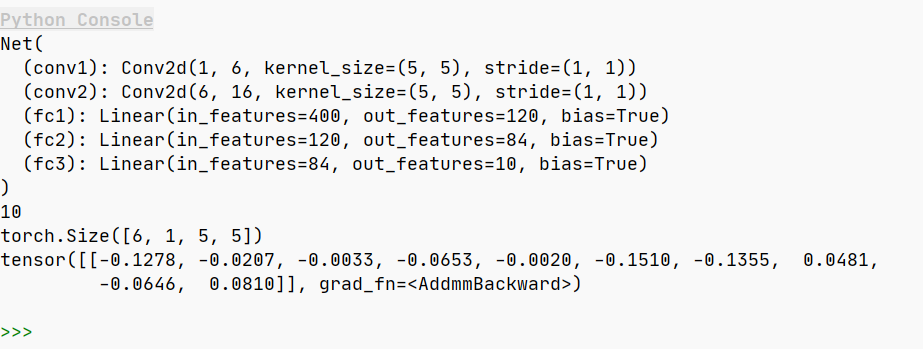

输出如下:

注意:torch.nn 仅支持小批量样本输入,而不支持单个样本。例如,nn.Conv2d 接受一个 4D 张量 (nSamples x nChannels x Height x Width)。如果仅有一个样本输入,只需使用input.unsqueeze(0) 添加伪造的批次尺寸。

- 已经学到的:

torch.Tensor- A multi-dimensional array with support for autograd operations likebackward(). Also holds the gradient w.r.t. the tensor. 张量对象,支持autograd各种操作,记录梯度。nn.Module- Neural network module. Convenient way of encapsulating parameters, with helpers for moving them to GPU, exporting, loading, etc. 方便封装参数,可移植。nn.Parameter- A kind of Tensor, that is automatically registered as a parameter when assigned as an attribute to aModule. 一种张量,作为Module的属性时,自动登记参数。autograd.Function- Implements forward and backward definitions of an autograd operation. EveryTensoroperation creates at least a singleFunctionnode that connects to functions that created aTensorand encodes its history. 实现autograd 的 forward 和 backward函数。

损失函数:

以(output, target) 作为输入,计算一个值来评估output距离target有多远。例如:nn.MSELoss用于计算均方误差。

1 if __name__ == '__main__': 2 net = Net() 3 print(net) 4 # 模型中可学习的参数通过net.parameters()返回 5 params = list(net.parameters()) 6 print(len(params)) 7 print(params[0].size()) # conv1's.weight 8 input = torch.randn(1, 1, 32, 32) # try a random 32*32 input 9 # It means expected input size of this net is 32*32.In order to use this net on MNIST dataset,resize the images 10 # from the dataset to 32*32. 11 out = net(input) 12 print(out) 13 14 # # Zero the gradient buffers of all parameters and backprops with random gradients:利用随机梯度反向传播 15 # net.zero_grad() 16 # out.backward(torch.randn(1, 10)) 17 18 target = torch.randn(10) # a dummy target 19 target = target.view(1, -1) # the same shape as output 20 criterion = nn.MSELoss() 21 22 loss = criterion(out, target) 23 print(loss) 24 25 print(loss.grad_fn) # MSELoss 26 print(loss.grad_fn.next_functions[0][0]) # Linear 27 print(loss.grad_fn.next_functions[0][0].next_functions[0][0]) # ReLu 28 # input -> conv2d -> relu -> maxpool2d -> conv2d -> relu -> maxpool2d 29 # -> view -> linear -> relu -> linear -> relu -> linear 30 # -> MSELoss 31 # -> loss 32 33 # 使用损失函数进行反向传播 34 # 只需要使用loss.backward(),但注意要清空现存的梯度,否则梯度会进行累加。 35 # 调用loss.backward(), 查看con1的偏置项在反向传播之前和之后的变化 36 net.zero_grad() 37 print('before backward') 38 print(net.conv1.bias.grad) # 更新后由全0向量变为None 39 loss.backward() 40 print('after backward') 41 print(net.conv1.bias.grad) 42 43 # 更新神经网络参数(weight) rule:随机梯度下降 44 learning_rate = 0.01 45 for f in net.parameters(): 46 f.data.sub_(f.grad.data * learning_rate) # weight = weight - learning_rate * gradient 47 48 # more update rule:torch.optim 例如:SGD,Nesterov-SGD,Adam,RMSProp... 49 import torch.optim as optim 50 51 # create optimizer 52 optimizer = optim.SGD(net.parameters(), lr=0.01) 53 54 # in training loop: 55 optimizer.zero_grad() # zero the gradient buffers 56 output = net(input) 57 loss = criterion(output, target) 58 loss.backward() 59 optimizer.step() # update