大纲>>

- time &datetime模块

- random模块

- OS模块

- sys模块

- shelve模块

- shutil模块

- xml模块

- configparser模块

- Hashlib、Hmac模块

- zipfile&tarfile模块

- PyYAML模块

- re正则表达式

time & datetime模块

# !/usr/bin/env python

import time, datetime

"""

常用标准库:

time

1、时间戳:时间戳表示的是从1970年1月1日00:00:00开始按秒计算的偏移量;

2、格式化的时间字符串

3、元组(struct_time):struct_time元组共有9个元素

格式:

%a 本地(locale)简化星期名称

%A 本地完整星期名称

%b 本地简化月份名称

%B 本地完整月份名称

%c 本地相应的日期和时间表示

%d 一个月中的第几天(01 - 31)

%H 一天中的第几个小时(24小时制,00 - 23)

%I 第几个小时(12小时制,01 - 12)

%j 一年中的第几天(001 - 366)

%m 月份(01 - 12)

%M 分钟数(00 - 59)

%p 本地am或者pm的相应符 一

%S 秒(01 - 61) 二

%U 一年中的星期数。(00 - 53星期天是一个星期的开始。)第一个星期天之前的所有天数都放在第0周。 三

%w 一个星期中的第几天(0 - 6,0是星期天) 三

%W 和%U基本相同,不同的是%W以星期一为一个星期的开始。

%x 本地相应日期

%X 本地相应时间

%y 去掉世纪的年份(00 - 99)

%Y 完整的年份

%Z 时区的名字(如果不存在为空字符)

%% ‘%’字符

"""

# print(help(time))

# print(help(time.ctime)) # 查看具体命令用法

# 当前时间 时间戳

print(time.time())

# cpu 时间

print(time.clock())

# 延迟多少秒

# print(time.sleep(1))

# 返回元组格式的时间 UTC time.gmtime(x) x为时间戳

print(time.gmtime())

# 返回元组格式的时间 UTC+8 这是我们常用的时间 time.localtime(x) x为时间戳

print(time.localtime())

x = time.localtime()

print("x:", x)

# 将元组格式的时间格式化为str格式的自定义格式时间 time.strftime(str_format, x) str_format:格式 x元组时间

print(time.strftime("%Y-%m-%d %H:%M:%S", x))

# 秒格式化为字符串形式 格式为:Tue Jun 16 11:53:31 2009

print(time.ctime(1245124411))

# 获取元组时间中的具体时间 年/月/日......

print(x.tm_year, x.tm_mon, x.tm_mday, x.tm_hour, x.tm_min, x.tm_sec)

# 将元组格式的时间转换为时间戳

print(time.mktime(x))

# 将时间戳转为字符串格式

print(time.gmtime(time.time()-86640)) # 将utc时间戳转换成struct_time格式

print(time.strftime("%Y-%m-%d %H:%M:%S", time.gmtime())) # 将utc struct_time格式转成指定的字符串格式

"""

datetime模块:

"""

print("时间加减datetime模块".center(50, "~"))

# 返回 2018-01-20 23:20:49.418354

print(datetime.datetime.now())

# 时间戳直接转成日期格式 2018-01-20

print(datetime.date.fromtimestamp(time.time()))

# 当前时间+3天

print(datetime.datetime.now() + datetime.timedelta(3))

# 当前时间-3天

print(datetime.datetime.now() + datetime.timedelta(-3))

# 当前时间+3小时

print(datetime.datetime.now() + datetime.timedelta(hours=3))

# 当前时间+30分

print(datetime.datetime.now() + datetime.timedelta(minutes=30))

c_time = datetime.datetime.now()

# 时间替换

print(c_time.replace(minute=54, hour=5))

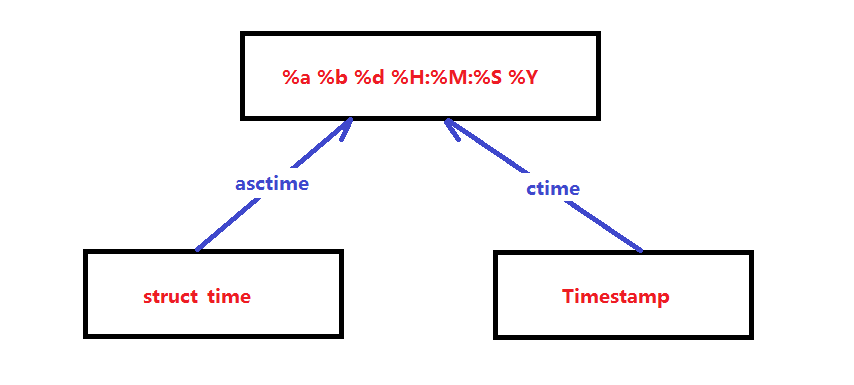

时间关系转换图:

random模块

# Author:Allister.Liu

# !/usr/bin/env python

import random

"""

random模块:

"""

# 用于生成一个0到1的随机符点数: 0 <= n < 1.0

print(random.random())

# random.randint(a, b),用于生成一个指定范围内的整数。其中参数a是下限,参数b是上限,生成的随机数n: a <= n <= b

print(random.randint(1, 10))

# random.randrange([start], stop[, step]),

# 从指定范围内,按指定基数递增的集合中 获取一个随机数。如:random.randrange(10, 100, 2),

# 结果相当于从[10, 12, 14, 16, ... 96, 98]序列中获取一个随机数。

# random.randrange(10, 100, 2)在结果上与 random.choice(range(10, 100, 2) 等效。

print(random.randrange(1, 10))

print(random.choice(range(10, 100, 2)))

# 从序列中获取一个随机元素。 random.choice(sequence) sequence在python不是一种特定的类型,而是泛指一系列的类型。 list, tuple, 字符串都属于sequence

print(random.choice("abcdef"))

print(random.choice("学习Python的小伙伴")) # 伙

print(random.choice(["JGood", "is", "a", "handsome", "boy"])) # boy-- List

print(random.choice(("Tuple","List","Dict"))) # Tuple

# random.sample(sequence, k),从指定序列中随机获取指定长度的片断。sample函数不会修改原有序列。

print(random.sample([1, 2, 3, 4, 5, 6, 7, 8, 9], 5)) # [2, 1, 9, 5, 7]

# 随机整数:

print(random.randint(0, 99)) # 70

# 随机选取0到100间的偶数:

print(random.randrange(0, 101, 2)) # 4

# 随机浮点数:

print(random.random()) # 0.2746445568079129

print(random.uniform(1, 10)) # 9.887001463194844

# 随机字符:

print(random.choice('abcdefg&#%^*f')) # e

# 多个字符中选取特定数量的字符:

print(random.sample('abcdefghij123', 3)) # ['3', 'j', 'i']

# 随机选取字符串:

print(random.choice(['apple', 'pear', 'peach', 'orange', 'lemon'])) # peach

# 洗牌#

items = [1, 2, 3, 4, 5, 6, 7, 8, 9]

print(items) # [1, 2, 3, 4, 5, 6, 7, 8, 9]

random.shuffle(items)

print(items) # [8, 3, 6, 1, 4, 9, 5, 7, 2]

"""

生成6为验证码:由数字, 大写字母, 小写字母组成的6位随机验证码

"""

def produce_check_code(scope = 6):

check_code = ""

for i in range(scope):

tmp = random.randint(0, 10)

if tmp < 6:

tmp = random.randint(0, 9)

elif tmp > 8:

tmp = chr(random.randint(65, 90))

else:

tmp = chr(random.randint(97, 122))

check_code += str(tmp)

return check_code

print(produce_check_code(8))

0.21786963196954112 3 2 34 b 的 JGood List [7, 2, 6, 4, 8] 12 14 0.5355914470942843 3.3065568721321013 % ['2', 'g', 'f'] pear [1, 2, 3, 4, 5, 6, 7, 8, 9] [6, 7, 5, 9, 1, 2, 3, 4, 8] D626EbYt

OS模块

提供对操作系统进行调用的接口:

# Author:Allister.Liu

# !/usr/bin/env python

import os

"""

OS模块:

"""

path = "E:/logo/ic2c/logo.png"

# 获取当前工作目录,即当前python脚本工作的目录路径 === linux: pwd

print(os.getcwd())

# 改变当前脚本工作目录;相当于shell下cd

# os.chdir("dirname")

# 返回当前目录: ('.')

print(os.curdir)

# 获取当前目录的父目录字符串名:('..')

print(os.pardir)

# 可生成多层递归目录

# os.makedirs('dirname1/dirname2')

# 若目录为空,则删除,并递归到上一级目录,如若也为空,则删除,依此类推

# os.removedirs('dirname1')

# 生成单级目录;相当于shell中mkdir dirname

# os.mkdir('dirname')

# 删除单级空目录,若目录不为空则无法删除,报错;相当于shell中rmdir dirname

# os.rmdir('dirname')

# 列出指定目录下的所有文件和子目录,包括隐藏文件,并以列表方式打印

print(os.listdir('E:/logo'))

# 删除一个文件

# os.remove()

# 重命名文件/目录

# os.rename("oldname","newname")

# 获取文件/目录信息

# os.stat('path/filename')

# 输出操作系统特定的路径分隔符,win下为"\",Linux下为"/"

os.sep

# 输出当前平台使用的行终止符,win下为"

",Linux下为"

"

os.linesep

# 输出用于分割文件路径的字符串 eg:环境变量path的分隔符

os.pathsep

# 输出字符串指示当前使用平台。win->'nt'; Linux->'posix'

os.name

# 运行shell命令,直接显示

os.system("dir")

# 获取系统环境变量

print(os.environ)

# 返回path规范化的绝对路径

print(os.path.abspath(path))

# 将path分割成目录和文件名二元组返回

print(os.path.split(path))

# 返回path的目录。其实就是os.path.split(path)的第一个元素

print(os.path.dirname(path))

# 返回path最后的文件名。如何path以/或结尾,那么就会返回空值。即os.path.split(path)的第二个元素

print(os.path.basename(path))

# 如果path存在,返回True;如果path不存在,返回False

print(os.path.exists(path))

# 如果path是绝对路径,返回True

print(os.path.isabs(path))

# 如果path是一个存在的文件,返回True。否则返回False

print(os.path.isfile(path))

# 如果path是一个存在的目录,则返回True。否则返回False

print(os.path.isdir(path))

# 将多个路径组合后返回,第一个绝对路径之前的参数将被忽略

# os.path.join(path1[, path2[, ...]])

# 返回path所指向的文件或者目录的最后存取时间

print(os.path.getatime(path))

# 返回path所指向的文件或者目录的最后修改时间

print(os.path.getmtime(path))

sys模块

# Author:Allister.Liu

# !/usr/bin/env python

import sys

print(help(sys))

# 命令行参数List,第一个元素是程序本身路径

sys.argv

# 退出程序,正常退出时exit(0)

# sys.exit(0)

# 获取Python解释程序的版本信息

print(sys.version)

# 最大的Int值

print(sys.maxsize)

# 返回模块的搜索路径,初始化时使用PYTHONPATH环境变量的值

print(sys.path)

# 返回操作系统平台名称

print(sys.platform)

# 不换行输出 进度条

sys.stdout.write('please:')

val = sys.stdin.readline()[:-1]

print(val)

shelve模块

# Author:Allister.Liu

# !/usr/bin/env python

import shelve

import os, datetime

"""

shelve模块:shelve模块是一个简单的k,v将内存数据通过文件持久化的模块,可以持久化任何pickle可支持的python数据格式

"""

file_path = "datas"

# 文件夹不存在则创建

if not os.path.exists(file_path):

os.mkdir(file_path)

# 打开一个文件

d = shelve.open(file_path + "/shelve_file.data")

class Test(object):

def __init__(self, n):

self.n = n

t1 = Test(123)

t2 = Test(123334)

names = ["Allister", "Linde", "Heddy", "Daty"]

# 持久化列表 k为names

d["names"] = names

# 持久化类

d["t1"] = t1

d["t2"] = t2

d["date"] = datetime.datetime.now()

"""

获取文件内容

"""

# 根据key获取value

print(d.get("names"))

print(d.get("t1"))

print(d.get("date"))

print(d.items())

shutil模块

# Author:Allister.Liu

# !/usr/bin/env python

import shutil

"""

shutil模块:

shutil.copyfileobj(fsrc, fdst[, length]):将文件内容拷贝到另一个文件中,可以部分内容;

shutil.copyfile(src, dst):拷贝文件;

shutil.copymode(src, dst):仅拷贝权限。内容、组、用户均不变;

shutil.copystat(src, dst):拷贝状态的信息,包括:mode bits, atime, mtime, flags;

shutil.copy(src, dst):拷贝文件和权限;

shutil.copy2(src, dst):拷贝文件和状态信息&权限等;

shutil.rmtree(path[, ignore_errors[, onerror]]):递归的去删除文件;

shutil.move(src, dst):递归的去移动文件;

shutil.copytree(src, dst, symlinks=False, ignore=None):递归的去拷贝文件,目录;

shutil.move(src, dst):递归的去移动文件

shutil.make_archive(base_name, format,...):创建压缩包并返回文件路径,例如:zip、tar;

base_name: 压缩包的文件名,也可以是压缩包的路径。只是文件名时,则保存至当前目录,否则保存至指定路径, 如:ic2c =>保存至当前路径;

如:/Users/Allister/ic2c =>保存至/Users/Allister/;

format: 压缩包种类,“zip”, “tar”, “bztar”,“gztar”;

root_dir: 要压缩的文件夹路径(默认当前目录);

owner: 用户,默认当前用户;

group: 组,默认当前组;

logger: 用于记录日志,通常是logging.Logger对象;

"""

"""

复制“笔记.data”至文件“笔记1.data”

"""

with open("笔记.data", "r", encoding= "utf-8") as f1:

with open("笔记1.data", "w", encoding="utf-8") as f2:

shutil.copyfileobj(f1, f2)

# 无需打开文件,copyfile自动打开文件并复制

# shutil.copyfile("笔记.data", "笔记2.data")

# 递归copy文件夹下的所有文件,

# shutil.copytree("../day4", "../day5/copys")

# 将以上递归copy的目录删除

# shutil.rmtree("copys")

# 压缩文件并返回路径

# print(shutil.make_archive("H:/wx/432", "zip" ,root_dir="H:/PycharmProjects/python_tutorial/"))

xml模块

1 <data> 2 <country name="Liechtenstein"> 3 <rank updated="yes">2</rank> 4 <year updated="yes">2009</year> 5 <gdppc>141100</gdppc> 6 <neighbor direction="E" name="Austria" /> 7 <neighbor direction="W" name="Switzerland" /> 8 </country> 9 <country name="Singapore"> 10 <rank updated="yes">5</rank> 11 <year updated="yes">2012</year> 12 <gdppc>59900</gdppc> 13 <neighbor direction="N" name="Malaysia" /> 14 </country> 15 <country name="Panama"> 16 <rank updated="yes">69</rank> 17 <year updated="yes">2012</year> 18 <gdppc>13600</gdppc> 19 <neighbor direction="W" name="Costa Rica" /> 20 <neighbor direction="E" name="Colombia" /> 21 </country> 22 </data>

# Author:Allister.Liu

# !/usr/bin/env python

import xml.etree.ElementTree as ET

"""

xml处理模块:xml是实现不同语言或程序之间进行数据交换的协议,跟json差不多,但json使用起来更简单,不过,古时候,在json还没诞生的黑暗年代,大家只能选择用xml呀,

至今很多传统公司如金融行业的很多系统的接口还主要是xml。

"""

# xml协议在各个语言里的都 是支持的,在python中可以用以下模块操作xml

tree = ET.parse("datas/xml_test.xml")

root = tree.getroot()

print("父节点:", root.tag)

# print("遍历xml文档".center(50, "~"))

# # 遍历xml文档

# for child in root:

# print(child.tag, child.attrib)

# for i in child:

# print(i.tag, i.text)

#

# print("year节点".center(50, "~"))

# # 只遍历year节点

# for node in root.iter('year'):

# print(node.tag, node.text)

"""

修改和删除xml文档内容

"""

# 修改

for node in root.iter('year'):

new_year = int(node.text) + 1

node.text = str(new_year)

node.set("updated", "yes")

tree.write("datas/xmltest.xml")

# 删除node

for country in root.findall('country'):

rank = int(country.find('rank').text)

if rank > 50:

root.remove(country)

tree.write('datas/output.xml')

"""

创建xml文档

"""

new_xml = ET.Element("namelist")

name = ET.SubElement(new_xml, "name", attrib={"enrolled": "yes"})

age = ET.SubElement(name, "age", attrib={"checked": "no"})

sex = ET.SubElement(name, "sex")

sex.text = '33'

name2 = ET.SubElement(new_xml, "name", attrib={"enrolled": "no"})

age = ET.SubElement(name2, "age")

age.text = '19'

et = ET.ElementTree(new_xml) # 生成文档对象

et.write("datas/test.xml", encoding="utf-8", xml_declaration=True)

ET.dump(new_xml) # 打印生成的格式

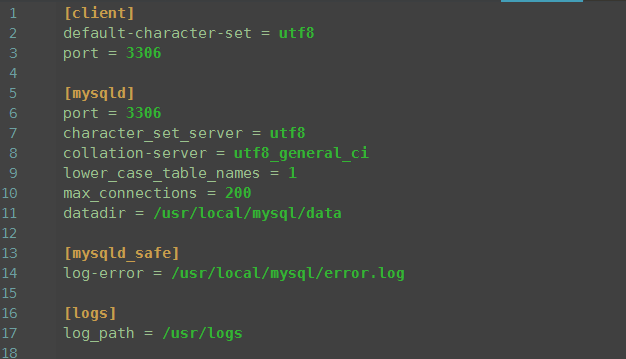

configparser模块

- 文件的生成:

# Author:Allister.Liu

# !/usr/bin/env python

import configparser

"""

mysql的配置文件:

"""

config = configparser.ConfigParser()

# 第一种赋值

config["client"] = {'port': '3306',

'default-character-set': 'utf8'}

# 第二种赋值

config['mysqld'] = {}

config['mysqld']['port'] = '3306'

config['mysqld']['character_set_server'] = 'utf8'

config['mysqld']['collation-server'] = 'utf8_general_ci'

config['mysqld']['lower_case_table_names'] = '1'

config['mysqld']['max_connections'] = '200'

# 第三种赋值

config['mysqld_safe'] = {}

topsecret = config['mysqld_safe']

topsecret['log-error'] = '/usr/local/mysql/error.log'

config['mysqld']['datadir'] = '/usr/local/mysql/data'

with open('datas/my.ini', 'w') as configfile:

config.write(configfile)

- 文件的读取:

# Author:Allister.Liu

# !/usr/bin/env python

import configparser

"""

configparser的读取:

"""

config = configparser.ConfigParser()

# 打开文件,返回文件路径

config.read('datas/my.ini')

# 读取文件中的父节点

print(config.sections()) # ['client', 'mysqld', 'mysqld_safe', 'logs']

# 判断节点是否存在文件中

print("mysqld" in config) # True

# 获取节点下某个值

print(config["mysqld"]["port"]) # 3306

print(config["mysqld_safe"]["log-error"]) # /usr/local/mysql/error.log

topsecret = config["mysqld_safe"]

print(topsecret["log-error"]) # /usr/local/mysql/error.log

print("遍历配置文件".center(50, "~"))

for key in config["mysqld"]:

print(key)

# 返回元组格式的属性

# [('port', '3306'), ('character_set_server', 'utf8'), ('collation-server', 'utf8_general_ci'), ('lower_case_table_names', '1'), ('max_connections', '200'), ('datadir', '/usr/local/mysql/data')]

print(config.items("mysqld"))

print(" 改写 ".center(50, "#"))

# 删除mysqld后重新写入

# sec = config.remove_section('mysqld') # 要删除的key

# config.write(open('datas/my.ini', "w"))

# # 判断一个节点是否存在

# sec = config.has_section('mysqld')

# print(sec)

# # 添加一个节点,如果存在会报错

# sec = config.add_section('logs')

# config.write(open('datas/my.ini', "w"))

# 新增logs节点下的log_path

config.set('logs', 'log_path', "/usr/logs")

config.write(open('datas/my.ini', "w"))

Hashlib、Hmac模块

# Author:Allister.Liu

# !/usr/bin/env python

import hashlib

"""

hashlib模块:用于加密相关的操作,3.x里代替了md5模块和sha模块,主要提供 SHA1, SHA224, SHA256, SHA384, SHA512 ,MD5 算法。

"""

m1 = hashlib.md5()

m1.update("asdfghjkl".encode("utf-8"))

# 2进制

print(m1.digest())

# 16进制

print(m1.hexdigest())

# ######## md5 ########

print(" md5 ".center(50, "#"))

hash = hashlib.md5()

hash.update('admin'.encode("utf-8"))

print(hash.hexdigest())

# ######## sha1 ########

print(" sha1 ".center(50, "#"))

hash = hashlib.sha1()

hash.update('admin'.encode("utf-8"))

print(hash.hexdigest())

# ######## sha256 ########

print(" sha256 ".center(50, "#"))

hash = hashlib.sha256()

hash.update('admin'.encode("utf-8"))

print(hash.hexdigest())

# ######## sha384 ########

print(" sha384 ".center(50, "#"))

hash = hashlib.sha384()

hash.update('admin'.encode("utf-8"))

print(hash.hexdigest())

# ######## sha512 ########

print(" sha512 ".center(50, "#"))

hash = hashlib.sha512()

hash.update('admin'.encode("utf-8"))

print(hash.hexdigest())

"""

python 还有一个 hmac 模块,它内部对我们创建 key 和 内容 再进行处理然后再加密

散列消息鉴别码,简称HMAC,是一种基于消息鉴别码MAC(Message Authentication Code)的鉴别机制。使用HMAC时,消息通讯的双方,通过验证消息中加入的鉴别密钥K来鉴别消息的真伪;

一般用于网络通信中消息加密,前提是双方先要约定好key,就像接头暗号一样,然后消息发送把用key把消息加密,接收方用key + 消息明文再加密,拿加密后的值 跟 发送者的相对比是否相等,这样就能验证消息的真实性,及发送者的合法性了。

"""

import hmac

h = hmac.new('中华好儿女'.encode("utf-8"), '美丽的山河'.encode("utf-8"))

print(h.hexdigest())

zipfile&tarfile模块

# Author:Allister.Liu

# !/usr/bin/env python

"""

zip解压缩

"""

import zipfile

# 压缩

z = zipfile.ZipFile('Allister.zip', 'w')

z.write('笔记.data')

z.write('sys_test.py')

z.close()

# 解压

z = zipfile.ZipFile('Allister.zip', 'r')

z.extractall()

z.close()

"""

tar解压缩

"""

import tarfile

# 压缩

tar = tarfile.open('your.tar', 'w')

tar.add('/home/dsa.tools/mysql.zip', arcname='mysql.zip')

tar.add('/Users/wupeiqi/PycharmProjects/cmdb.zip', arcname='cmdb.zip')

tar.close()

# 解压

tar = tarfile.open('your.tar', 'r')

tar.extractall() # 可设置解压地址

tar.close()

a、zipfile