Monitoring Redis with Zabbix

Monitoring Redis with OpenFalcon

Setting up CacheCloud

Environment: CentOS 7.4, MySQL 5.7, Redis 4.0

# yum -y install git

# cd /usr/local/

# git clone https://github.com/sohutv/cachecloud.git

Uninstall OpenJDK

# rpm -qa | grep jdk

# rpm -e --nodeps ...

# yum -y remove ...

Install the Java environment

# tar xvf jdk-8u65-linux-x64.tar.gz -C /usr/local

Configure the environment variables (add these lines to /etc/profile)

export JAVA_HOME=/usr/local/jdk1.8.0_65

export JAVA_BIN=/usr/local/jdk1.8.0_65/bin

export PATH=$PATH:$JAVA_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

# source /etc/profile

# java -version
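Because the PATH line above appends $JAVA_HOME/bin after the existing entries, any other java that sits earlier on PATH would still be picked up first. The following is a minimal sketch of a check for that, assuming GNU readlink is available; the function name java_on_path_is_java_home is hypothetical and not part of the original setup.

```shell
# Hypothetical helper (not from the original notes): succeeds only when the
# first `java` found on PATH resolves to the same file as $JAVA_HOME/bin/java.
# Assumes GNU `readlink -f`, which CentOS provides.
java_on_path_is_java_home() (
    # The body runs in a subshell, so `exit` only ends this function.
    [ -n "$JAVA_HOME" ] || exit 1
    on_path=$(command -v java) || exit 1
    [ "$(readlink -f "$on_path")" = "$(readlink -f "$JAVA_HOME/bin/java")" ]
)

# Usage:
#   if java_on_path_is_java_home; then
#       echo "java comes from JAVA_HOME"
#   else
#       echo "another java is first on PATH"
#   fi
```

If the check fails, `command -v java` shows which binary is actually being used.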

Installing MySQL 5.7 is omitted here.

Initialize the CacheCloud data. MySQL is configured (in my.cnf) with these settings:

innodb_page_size = 16384

sql_mode = ""

mysql> create database cachecloud charset utf8;

mysql> use cachecloud;

mysql> source /usr/local/cachecloud/script/cachecloud.sql;

Create the database user that CacheCloud connects as

mysql> grant all on cachecloud.* to 'admin'@'%' identified by 'admin';

mysql> flush privileges;

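CacheCloud uses Maven as its build tool, so install Maven first. Note that installing maven also installs OpenJDK.

# yum -y install maven

CacheCloud ships two sets of configuration, local.properties and online.properties, to keep the test and production environments separate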

# vim /usr/local/cachecloud/cachecloud-open-web/src/main/swap/local.properties

cachecloud.db.url = jdbc:mysql://192.168.1.101:3306/cachecloud

cachecloud.db.user = admin

cachecloud.db.password = admin

cachecloud.maxPoolSize = 20

isClustered = true

isDebug = true

spring-file = classpath:spring/spring-local.xml

log_base = /opt/cachecloud-web/logs

web.port = 9999

log.level = INFO

# vim /usr/local/cachecloud/cachecloud-open-web/src/main/swap/online.properties

cachecloud.db.url = jdbc:mysql://192.168.1.101:3306/cachecloud

cachecloud.db.user = admin

cachecloud.db.password = admin

cachecloud.maxPoolSize = 20

isClustered = true

isDebug = false

spring-file=classpath:spring/spring-online.xml

log_base=/opt/cachecloud-web/logs

web.port=8585

log.level=WARN
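The two files above mix `key = value` and `key=value` spacing, so a quick way to double-check what each profile will actually use is to read the keys back. A minimal sketch, assuming plain single-line properties; the helper name prop_get is hypothetical and is not part of CacheCloud.

```shell
# Hypothetical helper (not part of CacheCloud): print the value of KEY from a
# .properties file, tolerating spaces around "=" and skipping "#" comments.
# Usage: prop_get FILE KEY
prop_get() {
    awk -v key="$2" '
        /^[ \t]*#/ { next }                    # comment line
        index($0, "=") == 0 { next }           # not a key=value line
        {
            k = substr($0, 1, index($0, "=") - 1)
            v = substr($0, index($0, "=") + 1)
            gsub(/^[ \t\r]+|[ \t\r]+$/, "", k) # trim key
            gsub(/^[ \t\r]+|[ \t\r]+$/, "", v) # trim value
            if (k == key) { print v; exit }
        }' "$1"
}

# Usage (paths as used in this article):
#   cd /usr/local/cachecloud/cachecloud-open-web/src/main/swap
#   prop_get local.properties web.port
#   prop_get online.properties web.port
#   prop_get online.properties cachecloud.db.url
```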

Create a snapshot

Run in the cachecloud root directory

# mvn clean compile install -Plocal

Run in the cachecloud-open-web module

# mvn spring-boot:run

Production deployment

Run in the cachecloud root directory

# mvn clean compile install -Ponline

Run the deploy.sh script:

# cd /usr/local/cachecloud/script/

# bash deploy.sh /usr/local/

Installing maven also installs OpenJDK, which conflicts with the Oracle JDK installed earlier, so OpenJDK can be removed once CacheCloud has been built

# yum -y remove java-1.8.0-openjdk*

Start CacheCloud

# bash /opt/cachecloud-web/start.sh

Run on the client machine

# sh /usr/local/cachecloud/script/cachecloud-init.sh

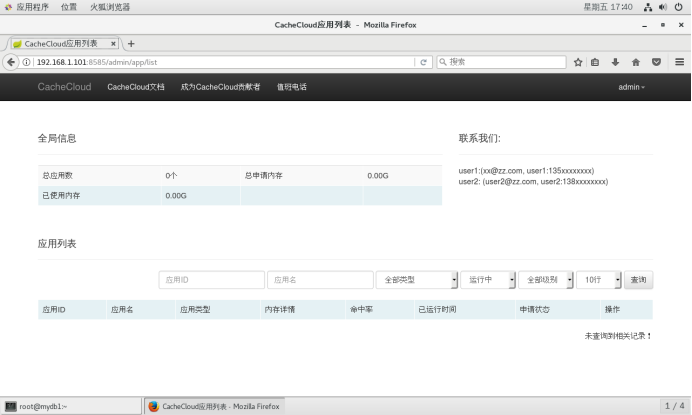

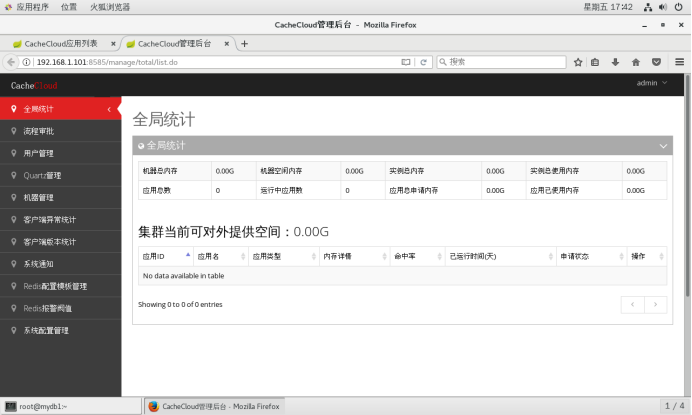

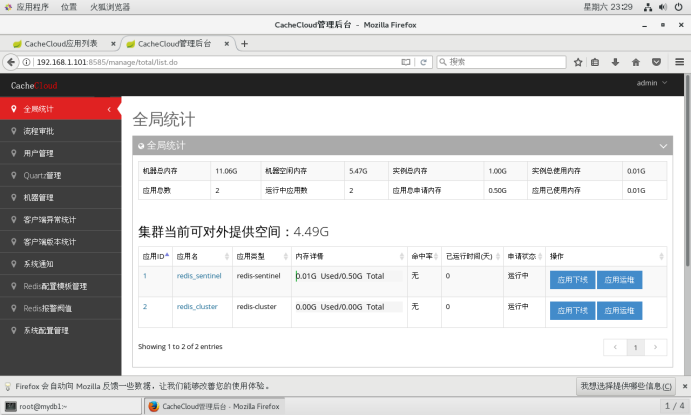

Open the management platform at http://192.168.1.101:8585/

Go to the admin console

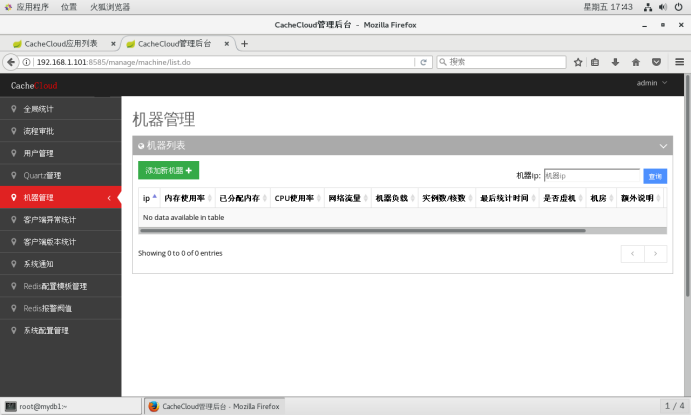

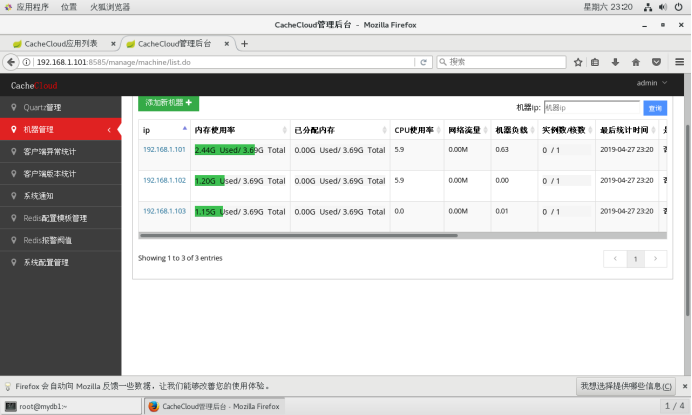

Machine management

Application requests, approval workflow

Redis-Sentinel, Redis-Cluster

redis-cli --stat

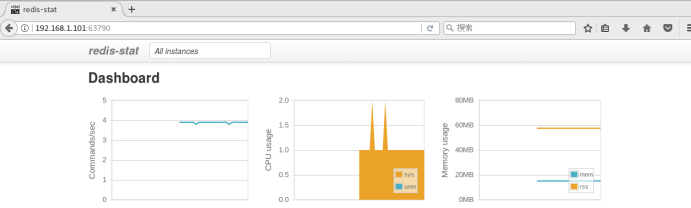

# gem install redis-stat

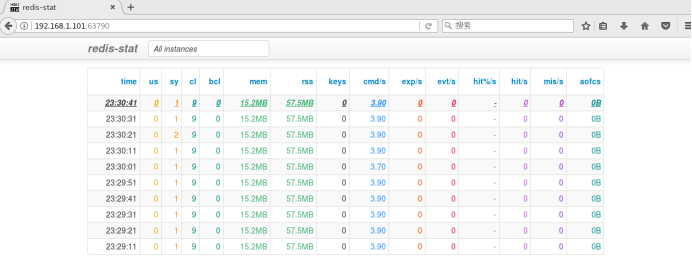

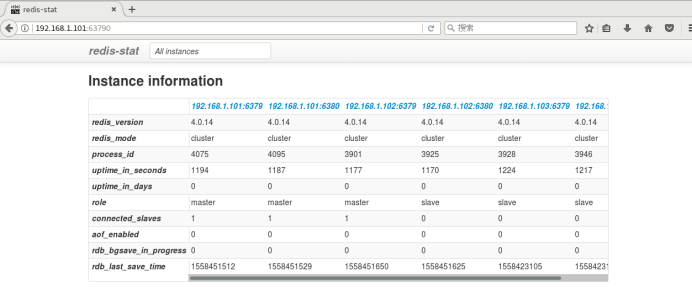

# redis-stat 192.168.1.101:6379 192.168.1.101:6380 192.168.1.102:6379 192.168.1.102:6380 192.168.1.103:6379 192.168.1.103:6380 --server=63790 10 --daemon

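--server=63790 sets the web UI port to 63790, 10 samples every 10 seconds, and --daemon runs it in the background; then open 192.168.1.101:63790

slow log, info, benchmark, monitor

Monitoring metrics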

1. Redis server process

ps aux | grep -E "redis-server.*$port" | grep -v grep

2. Connected clients

grep "connected_clients:" ${tmpFile} | awk -F ":" '{print $2}'

3. Blocked clients

grep "blocked_clients:" ${tmpFile} | awk -F ":" '{print $2}'

4. Memory used by Redis, converted from bytes to MB

grep "used_memory:" ${tmpFile} | awk -F ":" '{print $2}' | awk '{printf "%.2f",$1/1024/1024}'

5. Peak memory, converted from bytes to MB

grep "used_memory_peak:" ${tmpFile} | awk -F ":" '{print $2}' | awk '{printf "%.2f",$1/1024/1024}'

6. Master/slave role

grep "role:" ${tmpFile} | awk -F ":" '{print $2}' # master or slave

7. master_link_status

grep "master_link_status:" ${tmpFile} | awk -F ":" '{print $2}' # up or down

down: the master is no longer reachable; the slave is still running normally and still holds its AOF and RDB files

8. Total commands processed and QPS

grep "total_commands_processed:" ${tmpFile} | awk -F ":" '{print $2}'

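QPS is derived from two samples of total_commands_processed divided by the time between them. In the first minute, save the total_commands_processed value and the time it was collected to last.cache. In the second minute, collect the value and the time again, subtract the previous sample from each to get two differences, and divide the command difference by the time difference.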
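The QPS logic described above can be sketched as follows. This is only an illustration under assumptions: the function names calc_qps and sample_qps are hypothetical, the cache file is assumed to hold one line of the form "total timestamp", and a negative difference (for example after a Redis restart resets the counter) is reported as 0.00.

```shell
# Hypothetical helpers (names are not from the original script).

# calc_qps PREV_TOTAL PREV_TS CUR_TOTAL CUR_TS
# Prints (CUR_TOTAL - PREV_TOTAL) / (CUR_TS - PREV_TS) with two decimals.
calc_qps() {
    awk -v pc="$1" -v pt="$2" -v cc="$3" -v ct="$4" 'BEGIN {
        dc = cc - pc                       # commands executed between samples
        dt = ct - pt                       # seconds between samples
        if (dt <= 0 || dc < 0) { print "0.00"; exit }  # clock jump or counter reset
        printf "%.2f\n", dc / dt
    }'
}

# sample_qps TOTAL CACHE_FILE [NOW]
# Prints QPS against the previous sample stored in CACHE_FILE (nothing on the
# first run), then overwrites CACHE_FILE with the current sample.
sample_qps() (
    total=$1
    cache=$2
    now=${3:-$(date +%s)}
    if [ -s "$cache" ]; then
        read -r prev_total prev_ts < "$cache"
        calc_qps "$prev_total" "$prev_ts" "$total" "$now"
    fi
    printf '%s %s\n' "$total" "$now" > "$cache"
)

# Usage, with ${tmpFile} holding saved INFO output as in the snippets above:
#   total=$(grep "total_commands_processed:" ${tmpFile} | awk -F ":" '{print $2}' | tr -d '\r')
#   sample_qps "$total" last.cache
```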

9. Key count (keys): the number of keys held by the Redis instance
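The snippets above read from ${tmpFile} without showing how it is produced, and no command is given for the key count. A minimal sketch of both, assuming ${tmpFile} holds the output of `redis-cli info` and that the Keyspace section contains lines such as `db0:keys=12,expires=0,avg_ttl=0`. The helper names info_field and total_keys are hypothetical. The carriage returns are stripped because INFO lines are CRLF-terminated, which otherwise leaves a trailing `\r` on string values such as the role.

```shell
# Hypothetical helpers (names are not from the original script).

# info_field FILE NAME
# Prints the value of an exact field name from saved INFO output.
info_field() {
    tr -d '\r' < "$1" | awk -F ':' -v name="$2" '$1 == name { print $2; exit }'
}

# total_keys FILE
# Sums keys=N over every dbN line of the Keyspace section; prints 0 if none.
total_keys() {
    tr -d '\r' < "$1" |
        awk -F '[:=,]' '/^db[0-9]+:keys=/ { sum += $3 } END { print sum + 0 }'
}

# Usage (host and port taken from the examples in this article):
#   tmpFile=$(mktemp)
#   redis-cli -h 192.168.1.101 -p 6379 info > "$tmpFile"
#   info_field "$tmpFile" connected_clients
#   info_field "$tmpFile" role
#   total_keys "$tmpFile"
#   rm -f "$tmpFile"
```

Matching the field name exactly with `$1 == name` also avoids accidentally picking up a longer field that merely contains the same text.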