Original post; when reposting, please credit the source: https://www.cnblogs.com/agilestyle/p/15136054.html

Kafka Setup

    wget https://downloads.apache.org/kafka/2.8.0/kafka_2.12-2.8.0.tgz
    # make sure the target directory exists before extracting into it
    mkdir -p ~/app
    tar zxvf kafka_2.12-2.8.0.tgz -C ~/app
    cd ~/app/kafka_2.12-2.8.0

    # start the ZooKeeper service (runs in the foreground; keep this terminal open)
    bin/zookeeper-server-start.sh config/zookeeper.properties

    # start the Kafka broker service (in a second terminal)
    bin/kafka-server-start.sh config/server.properties

    # create the topic
    bin/kafka-topics.sh --create --topic flink_topic --bootstrap-server localhost:9092

    # describe the topic
    bin/kafka-topics.sh --describe --topic flink_topic --bootstrap-server localhost:9092

    # produce messages
    bin/kafka-console-producer.sh --topic flink_topic --bootstrap-server localhost:9092

    # consume messages
    bin/kafka-console-consumer.sh --topic flink_topic --from-beginning --bootstrap-server localhost:9092

Maven Dependency

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>org.fool</groupId>
        <artifactId>flink</artifactId>
        <version>1.0-SNAPSHOT</version>

        <properties>
            <maven.compiler.source>8</maven.compiler.source>
            <maven.compiler.target>8</maven.compiler.target>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-java</artifactId>
                <version>1.12.5</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-streaming-java_2.12</artifactId>
                <version>1.12.5</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-clients_2.12</artifactId>
                <version>1.12.5</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-connector-kafka_2.12</artifactId>
                <version>1.12.5</version>
            </dependency>
            <dependency>
                <groupId>org.projectlombok</groupId>
                <artifactId>lombok</artifactId>
                <version>1.18.20</version>
            </dependency>
        </dependencies>
    </project>

SRC

SourceKafkaTest.java

    package org.fool.flink.source;

    import org.apache.flink.api.common.serialization.SimpleStringSchema;
    import org.apache.flink.streaming.api.datastream.DataStream;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

    import java.util.Properties;

    public class SourceKafkaTest {
        public static void main(String[] args) throws Exception {
            StreamExecutionEnvironment environment = StreamExecutionEnvironment.getExecutionEnvironment();

            // Kafka consumer configuration
            Properties properties = new Properties();
            properties.setProperty("bootstrap.servers", "localhost:9092");
            properties.setProperty("group.id", "consumer-group");
            // Records are decoded by the DeserializationSchema passed to FlinkKafkaConsumer
            // below (SimpleStringSchema), not by these Kafka deserializer settings.
            properties.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            properties.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            // With no committed offsets for the group, start at the latest records, so only
            // messages produced after the job starts are printed.
            properties.setProperty("auto.offset.reset", "latest");

            // Read flink_topic as a stream of UTF-8 strings
            DataStream<String> dataStreamSource = environment.addSource(
                    new FlinkKafkaConsumer<>("flink_topic", new SimpleStringSchema(), properties));

            // Print each record to stdout
            dataStreamSource.print();

            environment.execute("source kafka job");
        }
    }
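
The consumer settings in SourceKafkaTest.java could be pulled into a small helper so that the broker address and group id are passed in rather than hard-coded. The following is a minimal sketch using only `java.util.Properties`; the class name `KafkaConsumerProps` and the method `build` are hypothetical names chosen for illustration, not part of Flink or of the original code. The two deserializer entries are left out on the assumption that `SimpleStringSchema` does the decoding.

```java
import java.util.Properties;

// Hypothetical helper (not part of Flink or the original post): builds the
// Kafka consumer Properties used by the job from two parameters.
final class KafkaConsumerProps {
    private KafkaConsumerProps() {
    }

    static Properties build(String bootstrapServers, String groupId) {
        if (bootstrapServers == null || bootstrapServers.trim().isEmpty()) {
            throw new IllegalArgumentException("bootstrapServers must not be empty");
        }
        if (groupId == null || groupId.trim().isEmpty()) {
            throw new IllegalArgumentException("groupId must not be empty");
        }
        Properties properties = new Properties();
        properties.setProperty("bootstrap.servers", bootstrapServers);
        properties.setProperty("group.id", groupId);
        // Same offset policy as the original job: start at the latest records
        // when the group has no committed offsets.
        properties.setProperty("auto.offset.reset", "latest");
        return properties;
    }

    public static void main(String[] args) {
        Properties properties = build("localhost:9092", "consumer-group");
        System.out.println(properties.getProperty("bootstrap.servers"));
        System.out.println(properties.getProperty("group.id"));
    }
}
```

The returned object could then be passed as the third argument of `new FlinkKafkaConsumer<>("flink_topic", new SimpleStringSchema(), properties)`.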

Run

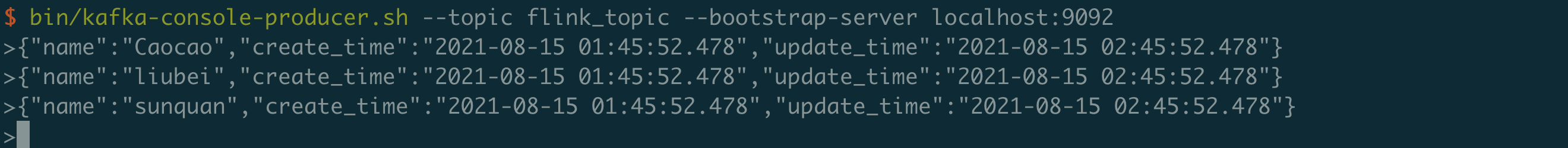
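
Running the job assumes the broker from the Kafka Setup section is already listening on localhost:9092. As a rough pre-flight check, a plain TCP connection attempt can tell whether anything is accepting connections on that port. This is only a sketch: the class `BrokerPortCheck` and its method `isReachable` are hypothetical names, and a successful connection shows that the port is open, not that Kafka itself is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper (not part of Kafka or Flink): checks whether a TCP
// connection to host:port can be opened within the given timeout.
final class BrokerPortCheck {
    private BrokerPortCheck() {
    }

    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host could not be resolved
            return false;
        }
    }

    public static void main(String[] args) {
        boolean up = isReachable("localhost", 9092, 1000);
        System.out.println(up
                ? "Port 9092 is accepting connections"
                : "Nothing is listening on localhost:9092; start the broker first");
    }
}
```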

Kafka Producer

Console Output
