Preface

Today I'm gonna implement a consul in my environment to discover service of MySQL database and check whether the master-slave is working normally.

Introduce

Consul is a tool like zookeeper which can discover services we've registered on it.It's just a simple binary command which can run with simple configuration file(json format).

official website:

Framework

| Hostname | IP | Port | OS Version | MySQL Version | MySQL Role | Consul Version | Consul Role |

| zlm1 | 192.168.56.100 | 3306 | CentOS 7.0 | 5.7.21 | master | Consul v1.2.2 | server |

| zlm2 | 192.168.56.101 | 3306 | CentOS 7.0 | 5.7.21 | slave | Consul v1.2.2 | client |

Procedure

Download and install consul.

1 [root@zlm1 10:12:06 /vagrant] 2 #wget https://releases.hashicorp.com/consul/1.2.2/consul_1.2.2_linux_amd64.zip 3 --2018-08-16 10:02:13-- https://releases.hashicorp.com/consul/1.2.2/consul_1.2.2_linux_amd64.zip 4 Resolving releases.hashicorp.com (releases.hashicorp.com)... 151.101.229.183, 2a04:4e42:36::439 5 Connecting to releases.hashicorp.com (releases.hashicorp.com)|151.101.229.183|:443... connected. 6 HTTP request sent, awaiting response... 200 OK 7 Length: 18371676 (18M) [application/zip] 8 Saving to: ‘consul_1.2.2_linux_amd64.zip’ 9 10 100%[===========================================================================================================>] 18,371,676 38.8KB/s in 6m 12s 11 12 2018-08-16 10:08:29 (48.3 KB/s) - ‘consul_1.2.2_linux_amd64.zip’ saved [18371676/18371676] 13 14 [root@zlm1 10:14:12 /vagrant/consul_1.2.2_linux_amd64] 15 #ls -l 16 total 82431 17 -rwxrwxrwx 1 vagrant vagrant 84408501 Jul 30 18:04 consul //There's only a binary command in the unziped directory of zip file. 18 19 [root@zlm1 10:26:18 /vagrant/consul_1.2.2_linux_amd64] 20 #mkdir /etc/consul.d 21 22 [root@zlm1 10:26:36 /vagrant/consul_1.2.2_linux_amd64] 23 #mkdir /data/consul 24 25 [root@zlm1 10:27:44 /vagrant/consul_1.2.2_linux_amd64] 26 #cp consul ~ 27 28 [root@zlm1 10:29:58 ~] 29 #cd /usr/local/bin 30 31 [root@zlm1 10:30:02 /usr/local/bin] 32 #ls -l|grep consul 33 lrwxrwxrwx 1 root root 12 Aug 16 10:29 consul -> /root/consul 34 35 [root@zlm1 10:32:08 /usr/local/bin] 36 #consul --help 37 Usage: consul [--version] [--help] <command> [<args>] 38 39 Available commands are: 40 agent Runs a Consul agent 41 catalog Interact with the catalog 42 connect Interact with Consul Connect 43 event Fire a new event 44 exec Executes a command on Consul nodes 45 force-leave Forces a member of the cluster to enter the "left" state 46 info Provides debugging information for operators. 47 intention Interact with Connect service intentions 48 join Tell Consul agent to join cluster 49 keygen Generates a new encryption key 50 keyring Manages gossip layer encryption keys 51 kv Interact with the key-value store 52 leave Gracefully leaves the Consul cluster and shuts down 53 lock Execute a command holding a lock 54 maint Controls node or service maintenance mode 55 members Lists the members of a Consul cluster 56 monitor Stream logs from a Consul agent 57 operator Provides cluster-level tools for Consul operators 58 reload Triggers the agent to reload configuration files 59 rtt Estimates network round trip time between nodes 60 snapshot Saves, restores and inspects snapshots of Consul server state 61 validate Validate config files/directories 62 version Prints the Consul version 63 watch Watch for changes in Consul

Start agent of consul by "dev" mode.

1 [root@zlm1 10:40:43 /usr/local/bin] 2 #consul agent -dev 3 ==> Starting Consul agent... 4 ==> Consul agent running! 5 Version: 'v1.2.2' 6 Node ID: '7c839914-8a47-ab36-8920-1a9da54fc6c3' 7 Node name: 'zlm1' 8 Datacenter: 'dc1' (Segment: '<all>') 9 Server: true (Bootstrap: false) 10 Client Addr: [127.0.0.1] (HTTP: 8500, HTTPS: -1, DNS: 8600) 11 Cluster Addr: 127.0.0.1 (LAN: 8301, WAN: 8302) 12 Encrypt: Gossip: false, TLS-Outgoing: false, TLS-Incoming: false 13 14 ==> Log data will now stream in as it occurs: 15 16 2018/08/16 10:47:41 [DEBUG] agent: Using random ID "7c839914-8a47-ab36-8920-1a9da54fc6c3" as node ID 17 2018/08/16 10:47:41 [INFO] raft: Initial configuration (index=1): [{Suffrage:Voter ID:7c839914-8a47-ab36-8920-1a9da54fc6c3 Address:127.0.0.1:8300}] 18 2018/08/16 10:47:41 [INFO] serf: EventMemberJoin: zlm1.dc1 127.0.0.1 19 2018/08/16 10:47:41 [INFO] serf: EventMemberJoin: zlm1 127.0.0.1 20 2018/08/16 10:47:41 [INFO] raft: Node at 127.0.0.1:8300 [Follower] entering Follower state (Leader: "") 21 2018/08/16 10:47:41 [INFO] consul: Adding LAN server zlm1 (Addr: tcp/127.0.0.1:8300) (DC: dc1) 22 2018/08/16 10:47:41 [INFO] consul: Handled member-join event for server "zlm1.dc1" in area "wan" 23 2018/08/16 10:47:41 [DEBUG] agent/proxy: managed Connect proxy manager started 24 2018/08/16 10:47:41 [WARN] agent/proxy: running as root, will not start managed proxies 25 2018/08/16 10:47:41 [INFO] agent: Started DNS server 127.0.0.1:8600 (tcp) 26 2018/08/16 10:47:41 [INFO] agent: Started DNS server 127.0.0.1:8600 (udp) 27 2018/08/16 10:47:41 [INFO] agent: Started HTTP server on 127.0.0.1:8500 (tcp) 28 2018/08/16 10:47:41 [INFO] agent: started state syncer 29 2018/08/16 10:47:41 [WARN] raft: Heartbeat timeout from "" reached, starting election 30 2018/08/16 10:47:41 [INFO] raft: Node at 127.0.0.1:8300 [Candidate] entering Candidate state in term 2 31 2018/08/16 10:47:41 [DEBUG] raft: Votes needed: 1 32 2018/08/16 10:47:41 [DEBUG] raft: Vote granted from 7c839914-8a47-ab36-8920-1a9da54fc6c3 in term 2. Tally: 1 33 2018/08/16 10:47:41 [INFO] raft: Election won. Tally: 1 34 2018/08/16 10:47:41 [INFO] raft: Node at 127.0.0.1:8300 [Leader] entering Leader state 35 2018/08/16 10:47:41 [INFO] consul: cluster leadership acquired 36 2018/08/16 10:47:41 [INFO] consul: New leader elected: zlm1 37 2018/08/16 10:47:41 [INFO] connect: initialized CA with provider "consul" 38 2018/08/16 10:47:41 [DEBUG] consul: Skipping self join check for "zlm1" since the cluster is too small 39 2018/08/16 10:47:41 [INFO] consul: member 'zlm1' joined, marking health alive 40 2018/08/16 10:47:41 [DEBUG] agent: Skipping remote check "serfHealth" since it is managed automatically 41 2018/08/16 10:47:41 [INFO] agent: Synced node info 42 2018/08/16 10:47:44 [DEBUG] agent: Skipping remote check "serfHealth" since it is managed automatically 43 2018/08/16 10:47:44 [DEBUG] agent: Node info in sync 44 2018/08/16 10:47:44 [DEBUG] agent: Node info in sync 45 2018/08/16 10:48:41 [DEBUG] consul: Skipping self join check for "zlm1" since the cluster is too small 46 2018/08/16 10:49:13 [DEBUG] agent: Skipping remote check "serfHealth" since it is managed automatically 47 2018/08/16 10:49:13 [DEBUG] agent: Node info in sync 48 2018/08/16 10:49:41 [DEBUG] manager: Rebalanced 1 servers, next active server is zlm1.dc1 (Addr: tcp/127.0.0.1:8300) (DC: dc1) 49 2018/08/16 10:49:41 [DEBUG] consul: Skipping self join check for "zlm1" since the cluster is too small 50 2018/08/16 10:50:41 [DEBUG] consul: Skipping self join check for "zlm1" since the cluster is too small 51 2018/08/16 10:51:06 [DEBUG] agent: Skipping remote check "serfHealth" since it is managed automatically 52 2018/08/16 10:51:06 [DEBUG] agent: Node info in sync 53 54 //Now the consul cluser has only one node "zlm1". 55 56 [root@zlm1 11:11:17 ~] 57 #consul members 58 Node Address Status Type Build Protocol DC Segment 59 zlm1 127.0.0.1:8301 alive server 1.2.2 2 dc1 <all> 60 61 //Type "Ctrl+C" can exit consul gracefully. 62 ^C 2018/08/16 11:24:50 [INFO] agent: Caught signal: interrupt 63 2018/08/16 11:24:50 [INFO] agent: Graceful shutdown disabled. Exiting 64 2018/08/16 11:24:50 [INFO] agent: Requesting shutdown 65 2018/08/16 11:24:50 [WARN] agent: dev mode disabled persistence, killing all proxies since we can't recover them 66 2018/08/16 11:24:50 [DEBUG] agent/proxy: Stopping managed Connect proxy manager 67 2018/08/16 11:24:50 [INFO] consul: shutting down server 68 2018/08/16 11:24:50 [WARN] serf: Shutdown without a Leave 69 2018/08/16 11:24:50 [WARN] serf: Shutdown without a Leave 70 2018/08/16 11:24:50 [INFO] manager: shutting down 71 2018/08/16 11:24:50 [INFO] agent: consul server down 72 2018/08/16 11:24:50 [INFO] agent: shutdown complete 73 2018/08/16 11:24:50 [INFO] agent: Stopping DNS server 127.0.0.1:8600 (tcp) 74 2018/08/16 11:24:50 [INFO] agent: Stopping DNS server 127.0.0.1:8600 (udp) 75 2018/08/16 11:24:50 [INFO] agent: Stopping HTTP server 127.0.0.1:8500 (tcp) 76 2018/08/16 11:24:50 [INFO] agent: Waiting for endpoints to shut down 77 2018/08/16 11:24:50 [INFO] agent: Endpoints down 78 2018/08/16 11:24:50 [INFO] agent: Exit code: 1 79 80 [root@zlm1 11:24:50 /usr/local/bin] 81 #ps aux|grep consul 82 root 4245 0.0 0.0 112640 960 pts/0 R+ 11:24 0:00 grep --color=auto consul 83 84 [root@zlm1 11:24:59 /usr/local/bin] 85 #consul members 86 Error retrieving members: Get http://127.0.0.1:8500/v1/agent/members?segment=_all: dial tcp 127.0.0.1:8500: connect: connection refused

Prepare the server configuration file and start it again.

1 [root@zlm1 11:38:35 /etc/consul.d] 2 #cat server.json 3 { 4 "data_dir":"/data/consul", 5 "datacenter":"dc1", 6 "log_level":"INFO", 7 "server":true, 8 "bootstrap_expect":1, 9 "bind_addr":"192.168.56.100", 10 "client_addr":"192.168.56.100", 11 "ports":{ 12 }, 13 "ui":true, 14 "retry_join":[], 15 "retry_interval":"3s", 16 "raft_protocol":3, 17 "rejoin_after_leave":true 18 } 19 20 [root@zlm1 11:38:39 /etc/consul.d] 21 #consul agent --config-dir=/etc/consul.d/ > /data/consul/consul.log 2>&1 & 22 [1] 4320 23 24 [root@zlm1 11:39:02 /etc/consul.d] 25 #ps aux|grep consul 26 root 4320 1.3 2.0 54388 21252 pts/0 Sl 11:39 0:00 consul agent --config-dir=/etc/consul.d/ 27 root 4333 0.0 0.0 112640 960 pts/0 R+ 11:39 0:00 grep --color=auto consul 28 29 [root@zlm1 11:39:08 /etc/consul.d] 30 #tail -f /data/consul/consul.log 31 2018/08/16 11:39:02 [INFO] agent: Started HTTP server on 192.168.56.100:8500 (tcp) 32 2018/08/16 11:39:02 [INFO] agent: started state syncer 33 2018/08/16 11:39:07 [WARN] raft: Heartbeat timeout from "" reached, starting election 34 2018/08/16 11:39:07 [INFO] raft: Node at 192.168.56.100:8300 [Candidate] entering Candidate state in term 2 35 2018/08/16 11:39:07 [INFO] raft: Election won. Tally: 1 36 2018/08/16 11:39:07 [INFO] raft: Node at 192.168.56.100:8300 [Leader] entering Leader state 37 2018/08/16 11:39:07 [INFO] consul: cluster leadership acquired 38 2018/08/16 11:39:07 [INFO] consul: New leader elected: zlm1 39 2018/08/16 11:39:07 [INFO] consul: member 'zlm1' joined, marking health alive 40 2018/08/16 11:39:07 [INFO] agent: Synced node info 41 42 [root@zlm1 11:51:38 ~] 43 #consul members 44 Error retrieving members: Get http://127.0.0.1:8500/v1/agent/members?segment=_all: dial tcp 127.0.0.1:8500: connect: connection refused 45 46 [root@zlm1 11:51:44 ~] 47 #consul members --http-addr=192.168.56.100:8500 48 Node Address Status Type Build Protocol DC Segment 49 zlm1 192.168.56.100:8301 alive server 1.2.2 2 dc1 <all>

Add a client on zlm2.

1 [root@zlm1 13:33:57 /etc/consul.d] 2 #scp /root/consul zlm2:~ 3 consul 100% 80MB 40.3MB/s 00:02 4 5 [root@zlm2 13:35:33 ~] 6 #mkdir /etc/consul.d 7 8 [root@zlm2 13:36:37 ~] 9 #mkdir /data/consul 10 11 [root@zlm2 13:37:11 ~] 12 #cd /etc/consul.d/ 13 14 [root@zlm2 13:37:46 /etc/consul.d] 15 #ls -l 16 total 4 17 -rw-r--r-- 1 root root 241 Aug 16 13:35 client.json 18 19 [root@zlm2 13:37:47 /etc/consul.d] 20 #cat client.json 21 { 22 "data_dir": "/data/consul", 23 "enable_script_checks": true, 24 "bind_addr": "192.168.56.101", 25 "retry_join": ["192.168.56.100"], 26 "retry_interval": "30s", 27 "rejoin_after_leave": true, 28 "start_join": ["192.168.56.100"] 29 } 30 31 [root@zlm2 13:43:42 /etc/consul.d] 32 #consul agent -client 192.168.56.101 -bind 192.168.56.101 --config-dir=/etc/consul.d 33 ==> Starting Consul agent... 34 ==> Joining cluster... 35 Join completed. Synced with 1 initial agents 36 ==> Consul agent running! 37 Version: 'v1.2.2' 38 Node ID: 'a69eae21-4e31-7edf-1f1a-3ec285a8fb3b' 39 Node name: 'zlm2' 40 Datacenter: 'dc1' (Segment: '') 41 Server: false (Bootstrap: false) 42 Client Addr: [192.168.56.101] (HTTP: 8500, HTTPS: -1, DNS: 8600) 43 Cluster Addr: 192.168.56.101 (LAN: 8301, WAN: 8302) 44 Encrypt: Gossip: false, TLS-Outgoing: false, TLS-Incoming: false 45 46 ==> Log data will now stream in as it occurs: 47 48 2018/08/16 13:43:48 [INFO] serf: EventMemberJoin: zlm2 192.168.56.101 49 2018/08/16 13:43:48 [INFO] agent: Started DNS server 192.168.56.101:8600 (udp) 50 2018/08/16 13:43:48 [WARN] agent/proxy: running as root, will not start managed proxies 51 2018/08/16 13:43:48 [INFO] agent: Started DNS server 192.168.56.101:8600 (tcp) 52 2018/08/16 13:43:48 [INFO] agent: Started HTTP server on 192.168.56.101:8500 (tcp) 53 2018/08/16 13:43:48 [INFO] agent: (LAN) joining: [192.168.56.100] 54 2018/08/16 13:43:48 [INFO] agent: Retry join LAN is supported for: aliyun aws azure digitalocean gce os packet scaleway softlayer triton vsphere 55 2018/08/16 13:43:48 [INFO] agent: Joining LAN cluster... 56 2018/08/16 13:43:48 [INFO] agent: (LAN) joining: [192.168.56.100] 57 2018/08/16 13:43:48 [INFO] serf: EventMemberJoin: zlm1 192.168.56.100 58 2018/08/16 13:43:48 [INFO] agent: (LAN) joined: 1 Err: <nil> 59 2018/08/16 13:43:48 [INFO] agent: started state syncer 60 2018/08/16 13:43:48 [INFO] consul: adding server zlm1 (Addr: tcp/192.168.56.100:8300) (DC: dc1) 61 2018/08/16 13:43:48 [INFO] agent: (LAN) joined: 1 Err: <nil> 62 2018/08/16 13:43:48 [INFO] agent: Join LAN completed. Synced with 1 initial agents 63 2018/08/16 13:43:48 [INFO] agent: Synced node info 64 65 [root@zlm2 13:45:36 /etc/consul.d] 66 #consul members --http-addr=192.168.56.100:8500 67 Node Address Status Type Build Protocol DC Segment 68 zlm1 192.168.56.100:8301 alive server 1.2.2 2 dc1 <all> 69 zlm2 192.168.56.101:8301 alive client 1.2.2 2 dc1 <default>

Add two services on node zlm2.

1 [root@zlm2 13:58:37 /etc/consul.d] 2 #cat service_master_check.json 3 { 4 "service": 5 { 6 "name": "w_db3306", 7 "tags": [ 8 "Master Satus Check" 9 ], 10 "address": "192.168.56.100", 11 "port": 3306, 12 "check": 13 { 14 "args": [ 15 "/data/consul/script/CheckMaster.py", 16 "3306" 17 ], 18 "interval": "15s" 19 } 20 } 21 } 22 23 [root@zlm2 13:59:42 /etc/consul.d] 24 #cat service_slave_check.json 25 { 26 "service": 27 { 28 "name": "r_db3306", 29 "tags": [ 30 "Slave Satus Check" 31 ], 32 "address": "192.168.56.101", 33 "port": 3306, 34 "check": 35 { 36 "args": [ 37 "/data/consul/script/CheckSlave.py", 38 "3306" 39 ], 40 "interval": "15s" 41 } 42 } 43 } 44 45 [root@zlm2 14:02:53 /etc/consul.d] 46 #ls -l 47 total 12 48 -rw-r--r-- 1 root root 241 Aug 16 13:35 client.json 49 -rw-r--r-- 1 root root 593 Aug 16 13:56 service_master_check.json 50 -rw-r--r-- 1 root root 590 Aug 16 13:57 servi_slave_check.json

Check CheckMaster.py script.

1 [root@zlm2 14:05:13 /data/consul/script] 2 #cat CheckMaster.py 3 #!/usr/bin/python 4 import sys 5 import os 6 import pymysql 7 8 port = int(sys.argv[1]) 9 var={} 10 conn = pymysql.connect(host='192.168.56.100',port=port,user='zlm',passwd='zlmzlm') 11 #cur = conn.cursor(pymysql.cursor.DictCursor) 12 cur = conn.cursor() 13 14 cur.execute("show global variables like "%read_only%"") 15 rows = cur.fetchall() 16 17 for r in rows: 18 var[r[0]]=r[1] 19 if var['read_only']=='OFF' and var['super_read_only']=='OFF': 20 print "MySQL %d master instance." % port 21 else: 22 print "This is read only instance." 23 sys.exit(2) 24 25 sys.exit(0) 26 cur.close() 27 conn.close()

Check CheckSlave.py script.

1 [root@zlm2 14:07:11 /data/consul/script] 2 #cat CheckSlave.py 3 #!/usr/bin/python 4 import sys 5 import os 6 import pymysql 7 8 port = int(sys.argv[1]) 9 var={} 10 conn = pymysql.connect(host='192.168.56.101',port=port,user='zlm',passwd='zlmzlm') 11 cur = conn.cursor 12 cur.execute("show global variables like "%read_only%"") 13 rows = cur.fetchall() 14 15 for r in rows: 16 var[r[0]]=r[1] 17 if var['read_only']=='OFF' and var['super_read_only']=='OFF': 18 print "MySQL %d master instance." % port 19 sys.exit(2) 20 else: 21 print "MySQL %d is read only instance." % port 22 23 cur = conn.cursor(pymysql.cursors.DictCursor) 24 cur.execute("show slave status") 25 slave_status = cur.fetchone() 26 27 if len(slave_status)<30: 28 print "Slave replication setup error."; 29 sys.exit(2) 30 31 if slave_status['Slave_IO_Running'] !='Yes' or slave_status['Slave_SQL_Running'] !='Yes': 32 print "Replication error: replication from host=%s, port=%s, io_thread=%s, sql_thread=%s, error info %s %s" % (slave_status['Master_Host'],slave_status['Master_Port'],slave_status['Slave_IO_Running'],slave_status['Slave_SQL_Running'],slave_status['Last_IO_Error'],slave_status['Last_SQL_Error']) 33 sys.exit(1) 34 print slave_status 35 36 sys.exit(0) 37 cur.close() 38 conn.close()

Restartt consul(or reload).

1 [root@zlm2 14:12:37 /etc/consul.d] 2 #consul members --http-addr=192.168.56.100:8500 3 Node Address Status Type Build Protocol DC Segment 4 zlm1 192.168.56.100:8301 alive server 1.2.2 2 dc1 <all> 5 zlm2 192.168.56.101:8301 alive client 1.2.2 2 dc1 <default> 6 7 [root@zlm2 14:13:27 /etc/consul.d] 8 #consul leave --http-addr=192.168.56.101:8500 9 Graceful leave complete 10 11 [root@zlm2 14:13:41 /etc/consul.d] 12 #consul members --http-addr=192.168.56.100:8500 13 Node Address Status Type Build Protocol DC Segment 14 zlm1 192.168.56.100:8301 alive server 1.2.2 2 dc1 <all> 15 zlm2 192.168.56.101:8301 left client 1.2.2 2 dc1 <default> 16 17 [root@zlm2 14:18:44 /etc/consul.d] 18 #consul agent --config-dir=/etc/consul.d/ -client 192.168.56.101 > /data/consul/consul.log 2>&1 & 19 [1] 5013 20 21 [root@zlm2 14:19:59 /etc/consul.d] 22 #!ps 23 ps aux|grep consul 24 root 5013 2.3 2.0 56440 21132 pts/2 Sl 14:19 0:00 consul agent --config-dir=/etc/consul.d/ -client 192.168.56.101 25 root 5026 0.0 0.0 112640 960 pts/2 R+ 14:19 0:00 grep --color=auto consul 26 27 [root@zlm2 14:20:49 /etc/consul.d] 28 #consul reload -http-addr=192.168.56.101:8500 29 Configuration reload triggered

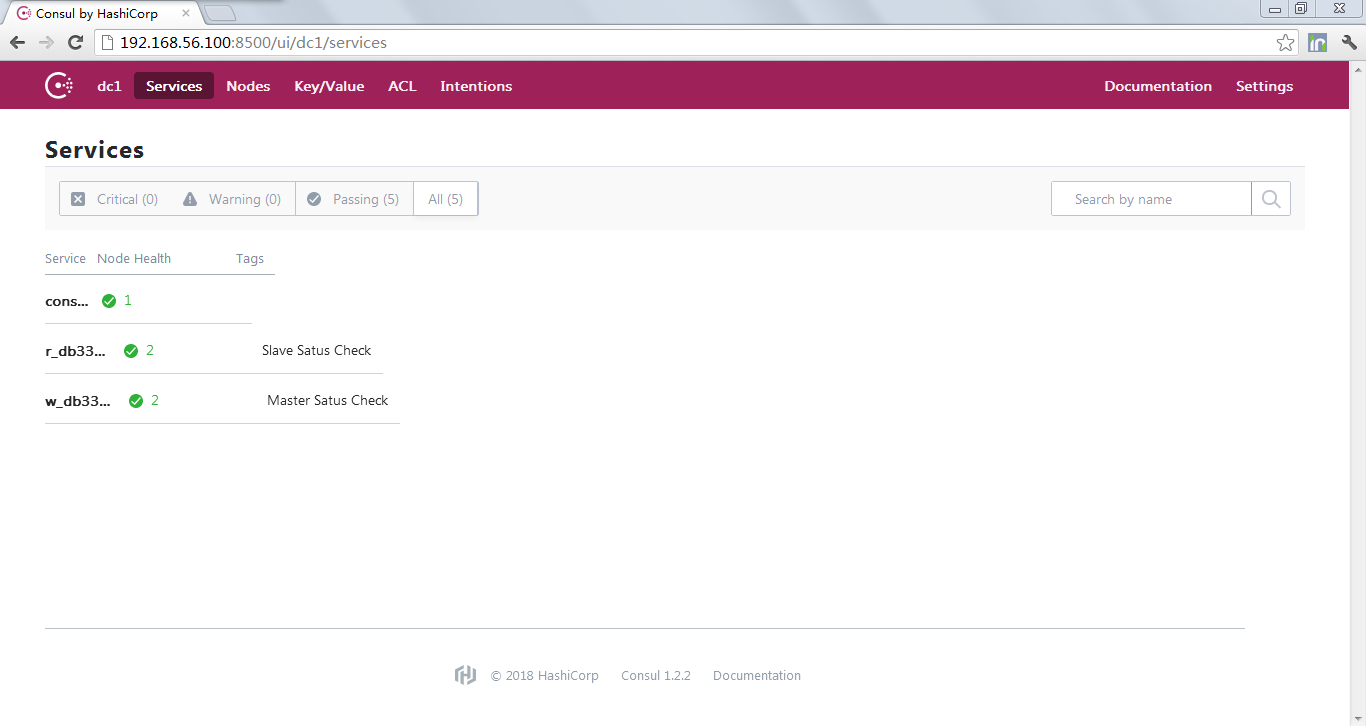

Well,now we can login the dashbord(GUI) of consul to check MySQL working status.