Projects often contain operations that take a long time (in production, longer than nginx's timeout), or tasks that must run at regular intervals.

In these cases, Celery is a good choice.

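Celery is an asynchronous task queue, i.e. a job queue based on distributed message passing.

Celery communicates through messages and uses a broker to mediate between clients and workers.

When a task is started, the client sends a message to the queue, and the broker delivers it to the corresponding worker.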
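To make the client, broker and worker roles concrete, here is a toy single-process model in plain Python. It is only an analogy, not how Celery is implemented: `queue.Queue` stands in for the broker (Redis), a thread stands in for the worker process, and the names `client_send`, `worker_loop` and `results` are made up for this illustration.

```python
import queue
import threading

# Toy stand-in for the broker: a thread-safe FIFO queue.
# (Real Celery uses Redis or another broker plus separate worker processes.)
broker = queue.Queue()
results = {}  # toy stand-in for a result backend: task_id -> return value


def client_send(task_id, func, *args):
    """Client side: put a message describing the task on the queue."""
    broker.put({"id": task_id, "func": func, "args": args})


def worker_loop():
    """Worker side: take messages off the queue and execute them in order."""
    while True:
        message = broker.get()
        if message is None:  # sentinel message: stop the worker
            break
        results[message["id"]] = message["func"](*message["args"])


worker = threading.Thread(target=worker_loop)
worker.start()
client_send("t1", str.upper, "heel")
client_send("t2", sum, [1, 2, 3])
broker.put(None)  # tell the worker to exit after the queued tasks
worker.join()
print(results)  # {'t1': 'HEEL', 't2': 6}
```

The client never runs the work itself; it only enqueues a description of it, which is the same decoupling that lets a Django view return before a long job finishes.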

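Redis is used as the message broker. Note that the settings below also point CELERY_RESULT_BACKEND at Redis, so task states and results are stored in Redis (they are read back with redis-cli in section 3); the Django database holds django-celery's tables, such as the periodic tasks defined in the admin in section 4.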

1. Installation

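Redis:

Windows:

Linux:

yum install redis (on CentOS/RHEL the package is named redis; redis-server is the Debian/Ubuntu package name, installed with apt-get install redis-server)

PS: This has to be run in cmd; it does not work in PowerCmd, oddly enough.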

pip install celery

pip install celery-with-redis

pip install django-celery

2. Django code (whthas_home is the project, portal is the app)

Modify whthas_home/__init__.py:

```python
from __future__ import absolute_import

# Load the Celery app when Django starts
from .celery import app as celery_app
```

Modify whthas_home/settings.py:

```python
# Celery settings
import djcelery
djcelery.setup_loader()

BROKER_URL = 'redis://127.0.0.1:6379/0'
CELERY_RESULT_BACKEND = 'redis://127.0.0.1:6379/0'
# If Redis requires a password (the same form works for BROKER_URL):
# CELERY_RESULT_BACKEND = 'redis://:password@127.0.0.1:6379/0'
CELERY_ACCEPT_CONTENT = ['json']
CELERY_TASK_SERIALIZER = 'json'
CELERY_RESULT_SERIALIZER = 'json'

# ... (other settings omitted)

INSTALLED_APPS = [
    'django.contrib.auth',
    'django.contrib.contenttypes',
    'django.contrib.sessions',
    'django.contrib.messages',
    'django.contrib.staticfiles',
    'suit',
    'django.contrib.admin',
    'DjangoUeditor',
    'portal',
    'djcelery',
]
```
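If the Redis password contains characters such as `@`, `:` or `/`, pasting it into the URL verbatim can break URL parsing. One option is to percent-encode it when building the URL in settings.py. The helper `build_redis_url` below is hypothetical, not part of Celery, and this sketch assumes your Celery/kombu version percent-decodes the password when it parses the URL (check this for your version).

```python
from urllib.parse import quote


def build_redis_url(host="127.0.0.1", port=6379, db=0, password=None):
    """Hypothetical helper: build a redis:// URL like BROKER_URL above.

    The password is percent-encoded so that '@', ':' or '/' inside it
    are not mistaken for URL delimiters.
    """
    auth = ""
    if password:
        auth = ":" + quote(password, safe="") + "@"
    return "redis://{}{}:{}/{}".format(auth, host, port, db)


BROKER_URL = build_redis_url()
CELERY_RESULT_BACKEND = build_redis_url(password="p@ss:word")
print(BROKER_URL)             # redis://127.0.0.1:6379/0
print(CELERY_RESULT_BACKEND)  # redis://:p%40ss%3Aword@127.0.0.1:6379/0
```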

Add the file whthas_home/celery.py:

```python
from __future__ import absolute_import

import os

from celery import Celery
from django.conf import settings

# Tell Celery which Django settings module to use
os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'whthas_home.settings')

app = Celery('portal')

# Read the BROKER_* / CELERY_* options from Django's settings.py
app.config_from_object('django.conf:settings')
# Look for a tasks.py module in every installed app
app.autodiscover_tasks(lambda: settings.INSTALLED_APPS)


@app.task(bind=True)
def debug_task(self):
    print('Request: {0!r}'.format(self.request))
```

Add the file portal/tasks.py:

```python
from time import sleep

from celery import shared_task


@shared_task(bind=True)
def Task_A(self, message):
    # Report custom progress; the meta dict is stored in the result backend
    self.update_state(state='PROGRESS', meta={'progress': 0})
    sleep(10)
    self.update_state(state='PROGRESS', meta={'progress': 30})
    sleep(10)
    return message


def get_task_status(task_id):
    task = Task_A.AsyncResult(task_id)

    status = task.state
    progress = 0

    if status == 'SUCCESS':
        progress = 100
    elif status == 'FAILURE':
        progress = 0
    elif status == 'PROGRESS':
        # For the custom state, task.info is the meta dict from update_state()
        progress = task.info['progress']

    return {'status': status, 'progress': progress}
```

3. Testing

Start the worker (Redis, the broker, must already be running): python manage.py celeryd -l info

Open a shell with python manage.py shell and check that everything works:

```
>>> from portal.tasks import *
>>> t = Task_A.delay("heel")
>>> get_task_status(t.id)
{'status': u'PROGRESS', 'progress': 0}
>>> get_task_status(t.id)
{'status': u'PROGRESS', 'progress': 0}
>>> get_task_status(t.id)
{'status': u'PROGRESS', 'progress': 30}
>>> get_task_status(t.id)
{'status': u'PROGRESS', 'progress': 30}
>>> get_task_status(t.id)
{'status': u'SUCCESS', 'progress': 100}
>>>
```
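Instead of calling get_task_status() by hand until it reports SUCCESS, a small polling loop can do the waiting. This is a sketch: `wait_for_task` is a hypothetical helper, and it takes the status function as a parameter so it can be tried without a running worker. In the Django shell you would pass the real one, e.g. `wait_for_task(get_task_status, t.id)`.

```python
import time

# Celery states after which the task will not change any more
FINISHED_STATES = ("SUCCESS", "FAILURE", "REVOKED")


def wait_for_task(get_status, task_id, interval=1.0, timeout=60.0):
    """Poll get_status(task_id) until the task finishes or timeout expires.

    get_status must return a dict with 'status' and 'progress' keys,
    like get_task_status() in portal/tasks.py. Returns the final dict.
    """
    deadline = time.monotonic() + timeout
    while True:
        info = get_status(task_id)
        print("{status}: {progress}%".format(**info))
        if info["status"] in FINISHED_STATES:
            return info
        if time.monotonic() >= deadline:
            raise TimeoutError("task {} is still {}".format(task_id, info["status"]))
        time.sleep(interval)


# Demo with a fake status source that mimics the shell session above
fake_states = iter([
    {"status": "PROGRESS", "progress": 0},
    {"status": "PROGRESS", "progress": 30},
    {"status": "SUCCESS", "progress": 100},
])
final = wait_for_task(lambda _id: next(fake_states), "demo-id", interval=0.01)
# prints: PROGRESS: 0%  /  PROGRESS: 30%  /  SUCCESS: 100%
```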

Meanwhile, the worker console shows:

```
[2017-04-21 16:38:47,023: INFO/MainProcess] Received task: portal.tasks.Task_A[da948495-c64b-4ff9-882b-876721cd5017]
[2017-04-21 16:39:07,035: INFO/MainProcess] Task portal.tasks.Task_A[da948495-c64b-4ff9-882b-876721cd5017] succeeded in 20.0099999905s: heel
```

This shows that the code works.

The stored task state can be inspected with redis-cli:

```
127.0.0.1:6379> get "celery-task-meta-da948495-c64b-4ff9-882b-876721cd5017"
"{"status": "SUCCESS", "traceback": null, "result": "heel", "children": []}"
```

To list all keys in Redis: keys *

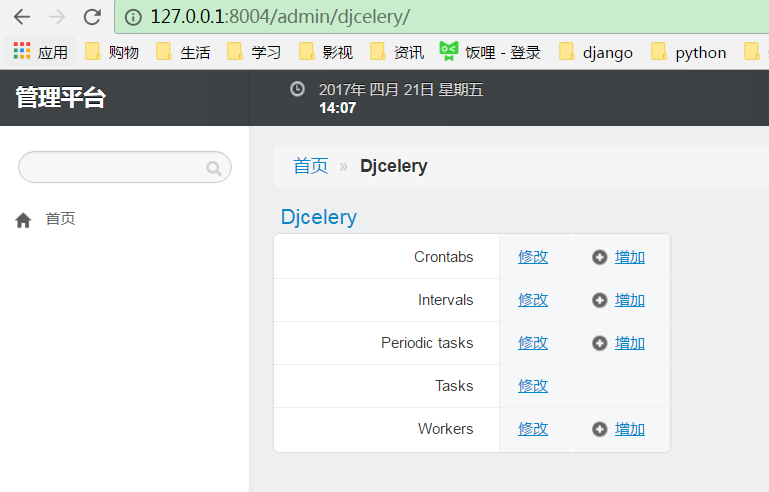
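The same lookup can be done from Python. This sketch relies only on the `celery-task-meta-<task id>` key format visible in the redis-cli output above; `read_task_meta` is a hypothetical helper. It accepts any object with a `get(key)` method: in practice a `redis.Redis(host="127.0.0.1", port=6379, db=0)` client from the redis-py package (an assumption, not installed by the steps above), and in the demo a plain dict.

```python
import json

# Key prefix as seen in the redis-cli output above
META_PREFIX = "celery-task-meta-"


def read_task_meta(client, task_id):
    """Fetch and decode a task's metadata from the Redis result backend.

    Returns None if the key does not exist, e.g. the task has not
    reported any state yet or its result has already expired.
    """
    raw = client.get(META_PREFIX + task_id)
    if raw is None:
        return None
    if isinstance(raw, bytes):  # redis-py returns bytes by default
        raw = raw.decode("utf-8")
    return json.loads(raw)


# Demo: a dict already has get(key), so it can stand in for the client
fake_client = {
    "celery-task-meta-da948495-c64b-4ff9-882b-876721cd5017":
        b'{"status": "SUCCESS", "traceback": null, "result": "heel", "children": []}',
}
meta = read_task_meta(fake_client, "da948495-c64b-4ff9-882b-876721cd5017")
print(meta["status"], meta["result"])  # SUCCESS heel
```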

4. Defining tasks in the Django admin

Open the admin:

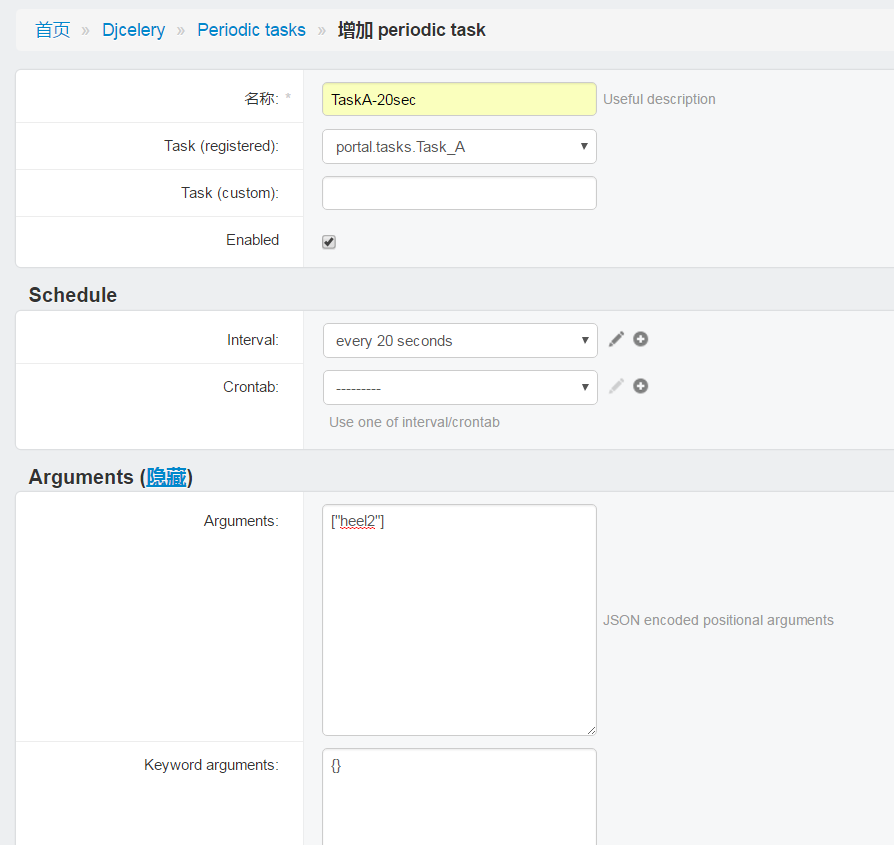

Set up the task:

Because this is a periodic task, the beat scheduler must also be running: python manage.py celery beat

The worker console then shows:

```
[2017-04-21 16:56:33,216: INFO/MainProcess] Received task: portal.tasks.Task_A[d8a26977-8413-4bf0-b518-b53052af4cee]
[2017-04-21 16:56:53,211: INFO/MainProcess] Received task: portal.tasks.Task_A[00f1cab7-eb56-4cc3-9979-a8a68aaaf2de]
[2017-04-21 16:56:53,220: INFO/MainProcess] Task portal.tasks.Task_A[d8a26977-8413-4bf0-b518-b53052af4cee] succeeded in 20.003000021s: heel2
[2017-04-21 16:57:13,211: INFO/MainProcess] Received task: portal.tasks.Task_A[aa652612-8525-4110-94ea-9010085ec20b]
[2017-04-21 16:57:13,223: INFO/MainProcess] Task portal.tasks.Task_A[00f1cab7-eb56-4cc3-9979-a8a68aaaf2de] succeeded in 20.0080001354s: heel2
[2017-04-21 16:57:33,213: INFO/MainProcess] Received task: portal.tasks.Task_A[9876890e-6a71-4501-bdae-775492ebae88]
[2017-04-21 16:57:33,219: INFO/MainProcess] Task portal.tasks.Task_A[aa652612-8525-4110-94ea-9010085ec20b] succeeded in 20.0050001144s: heel2
[2017-04-21 16:57:53,211: INFO/MainProcess] Received task: portal.tasks.Task_A[c12fcffc-3910-4a22-93b3-0df740910728]
[2017-04-21 16:57:53,221: INFO/MainProcess] Task portal.tasks.Task_A[9876890e-6a71-4501-bdae-775492ebae88] succeeded in 20.0069999695s: heel2
[2017-04-21 16:58:13,211: INFO/MainProcess] Received task: portal.tasks.Task_A[ccfad575-c0b4-48f5-9385-85ff5dac76fc]
[2017-04-21 16:58:13,217: INFO/MainProcess] Task portal.tasks.Task_A[c12fcffc-3910-4a22-93b3-0df740910728] succeeded in 20.003000021s: heel2
[2017-04-21 16:58:33,211: INFO/MainProcess] Received task: portal.tasks.Task_A[0d6f77a6-a29c-4ead-9428-e3e758c754e1]
[2017-04-21 16:58:33,221: INFO/MainProcess] Task portal.tasks.Task_A[ccfad575-c0b4-48f5-9385-85ff5dac76fc] succeeded in 20.007999897s: heel2
[2017-04-21 16:58:53,211: INFO/MainProcess] Received task: portal.tasks.Task_A[34b2b3a0-771c-4a28-94a1-92e25763fae1]
[2017-04-21 16:58:53,217: INFO/MainProcess] Task portal.tasks.Task_A[0d6f77a6-a29c-4ead-9428-e3e758c754e1] succeeded in 20.0039999485s: heel2
[2017-04-21 16:59:13,211: INFO/MainProcess] Received task: portal.tasks.Task_A[d4be920f-376a-46a2-9edd-095234d29ef2]
[2017-04-21 16:59:13,217: INFO/MainProcess] Task portal.tasks.Task_A[34b2b3a0-771c-4a28-94a1-92e25763fae1] succeeded in 20.0039999485s: heel2
```

A new Task_A arrives every 20 seconds, so the periodic task was set up successfully.
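As an alternative to clicking the schedule together in the admin, Celery 3.x also accepts a static schedule in settings.py through the `CELERYBEAT_SCHEDULE` setting. The sketch below is an assumption-based equivalent: the 20-second interval and the `"heel2"` argument are read off the log above, and the entry name is arbitrary. Whether static entries coexist with admin-defined ones depends on which scheduler beat is running with, so check that for your django-celery setup.

```python
from datetime import timedelta

# Static periodic schedule (Celery 3.x setting name), placed in settings.py.
CELERYBEAT_SCHEDULE = {
    "task-a-every-20s": {                  # arbitrary, hypothetical entry name
        "task": "portal.tasks.Task_A",     # task name as shown in the worker log
        "schedule": timedelta(seconds=20),  # run every 20 seconds
        "args": ("heel2",),                # positional args passed to Task_A
    },
}
```

Since Task_A itself takes about 20 seconds, each new run is received right as the previous one finishes, which matches the log; a shorter interval would queue runs faster than a single worker process can finish them.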


Part 2:

For a long-running task, Celery can also return a task ID immediately; the page then uses AJAX with that ID to fetch the result later.
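A minimal sketch of the two JSON responses this pattern needs: one returned right after `.delay()` with only the task ID, and one returned to each AJAX poll. The function names, URL and JSON field names are hypothetical; the functions are kept framework-free so they can be tried without Django, and the commented lines show one possible way to wire them into Django views using `Task_A` and `get_task_status` from portal/tasks.py.

```python
import json
from types import SimpleNamespace


def submit_response(async_result):
    """Body returned right after Task_A.delay(...): only the task id.

    async_result is what .delay() returns (an AsyncResult); only .id is used.
    """
    return json.dumps({"task_id": async_result.id})


def status_response(task_id, get_status):
    """Body returned to each AJAX poll, built from get_task_status()."""
    info = get_status(task_id)
    return json.dumps({"task_id": task_id,
                       "status": info["status"],
                       "progress": info["progress"]})

# Hypothetical Django wiring (views and URLs are illustrative only):
#   def start(request):
#       t = Task_A.delay(request.GET.get("msg", ""))
#       return HttpResponse(submit_response(t), content_type="application/json")
#   def status(request, task_id):
#       return HttpResponse(status_response(task_id, get_task_status),
#                           content_type="application/json")
# The page keeps requesting the status URL until "status" is SUCCESS or FAILURE.

# Demo with stand-ins for the AsyncResult and the status function
started = submit_response(SimpleNamespace(id="abc"))
polled = status_response("abc", lambda _id: {"status": "PROGRESS", "progress": 30})
print(started)  # {"task_id": "abc"}
```

Returning only the ID keeps the first request well under nginx's timeout, and the browser decides how often to poll.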