Making a request with urllib

    from urllib.request import urlopen

    url = "****"
    response = urlopen(url)
    content = response.read()            # raw bytes
    content = content.decode('utf-8')    # decode the bytes to str
    print(content)
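The snippet above hard-codes `utf-8`, which garbles pages served in another encoding such as GBK. Below is a minimal sketch of one alternative, assuming the server declares the encoding in its `Content-Type` header. `decode_body` is a hypothetical helper introduced here for illustration, and the GBK bytes stand in for what `response.read()` would return.

```python
from email.message import Message


def decode_body(raw: bytes, content_type: str, default: str = "utf-8") -> str:
    """Decode a response body using the charset named in a Content-Type value."""
    msg = Message()
    msg["Content-Type"] = content_type
    # get_content_charset() returns None when no charset parameter is present,
    # in which case we fall back to the default encoding.
    charset = msg.get_content_charset() or default
    return raw.decode(charset, errors="replace")


# A GBK-encoded body, standing in for the bytes returned by response.read().
page = decode_body("新闻".encode("gbk"), "text/html; charset=GBK")
```

With a real response from `urlopen`, `response.headers.get_content_charset()` returns the same charset value directly, so the header does not have to be parsed by hand.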

Making a request with requests

    import requests

    url = "***"
    headers = {
        'Accept': '*/*',
        'Accept-Language': 'en-US,en;q=0.8',
        'Cache-Control': 'max-age=0',
        'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/48.0.2564.116 Safari/537.36',
        'Connection': 'keep-alive',
        'Referer': 'http://www.baidu.com/'
    }
    # Send browser-like headers so the request looks like it comes from a browser
    res = requests.get(url, headers=headers)
    print(res.status_code)   # HTTP status code
    print(res.text)          # response body decoded as text
    print(res.content)       # response body as raw bytes; works for images, video, or text
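Because `res.content` is raw bytes, an image or video is normally written to disk in binary mode rather than printed. A minimal sketch of that step follows; `save_binary` and the file names are hypothetical, and the short byte string stands in for `res.content` so the example runs without a network connection.

```python
import tempfile
from pathlib import Path


def save_binary(data: bytes, path: str) -> int:
    """Write raw bytes (for example res.content) to path; return bytes written."""
    target = Path(path)
    # Create missing parent directories so the write does not fail.
    target.parent.mkdir(parents=True, exist_ok=True)
    return target.write_bytes(data)


with tempfile.TemporaryDirectory() as tmp:
    # The six bytes below are placeholder data, not a valid image.
    written = save_binary(b"\x89PNG\r\n", f"{tmp}/images/sample.png")
    print(written)  # 6
```

In a real script the call would look like `save_binary(res.content, "picture.jpg")`, with the path chosen to suit the project.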

Parsing library: BeautifulSoup

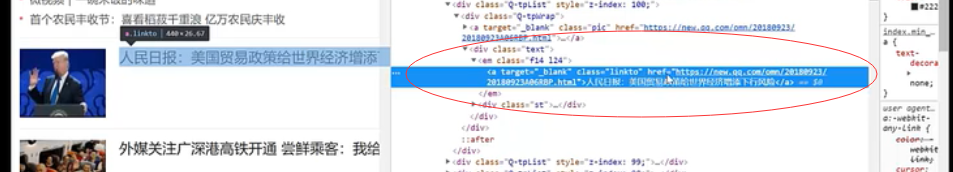

    import requests
    from bs4 import BeautifulSoup

    url = "http://news.qq.com/"
    headers = {
        'Accept': '*/*',
        'Accept-Language': 'en-US,en;q=0.8',
        'Cache-Control': 'max-age=0',
        'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/48.0.2564.116 Safari/537.36',
        'Connection': 'keep-alive',
        'Referer': 'http://www.baidu.com/'
    }
    # Send browser-like headers so the request looks like it comes from a browser
    res = requests.get(url, headers=headers)
    soup = BeautifulSoup(res.text, 'lxml')
    # find_all returns the matching <em> tags; each one wraps a single <a> link
    em = soup.find_all('em', attrs={'class': 'f14 l24'})
    for i in em:
        title = i.a.get_text()
        link = i.a['href']
        print({
            'title': title,
            'link': link
        })

Parsing library: lxml with XPath expressions

    import requests
    from lxml import etree

    url = "http://news.qq.com/"
    headers = {
        'Accept': '*/*',
        'Accept-Language': 'en-US,en;q=0.8',
        'Cache-Control': 'max-age=0',
        'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/48.0.2564.116 Safari/537.36',
        'Connection': 'keep-alive',
        'Referer': 'http://www.baidu.com/'
    }
    # Send browser-like headers so the request looks like it comes from a browser
    html = requests.get(url, headers=headers)
    con = etree.HTML(html.text)
    # Each XPath query returns a list: link texts first, then href attributes
    title = con.xpath('//em[@class="f14 l24"]/a/text()')
    link = con.xpath('//em[@class="f14 l24"]/a/@href')
    for i in zip(title, link):
        print({
            'title': i[0],
            'link': i[1]
        })
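Collecting titles and links in two separate XPath queries and pairing them with `zip` only works when every link has text. If one `<a>` is empty, the two lists have different lengths and later titles are paired with the wrong links. The sketch below shows one way around this by iterating over the `<a>` elements themselves. The markup in `SAMPLE_HTML` and the helper name `extract_links` are invented for illustration and are not taken from the real page.

```python
from lxml import etree

# Hypothetical markup; the second link deliberately has no text.
SAMPLE_HTML = """
<div>
  <em class="f14 l24"><a href="http://example.com/a">First headline</a></em>
  <em class="f14 l24"><a href="http://example.com/b"></a></em>
  <em class="f14 l24"><a href="http://example.com/c">Third headline</a></em>
</div>
"""


def extract_links(html_text):
    """Return one {'title', 'link'} dict per <a>, keeping each pair together."""
    tree = etree.HTML(html_text)
    items = []
    for a in tree.xpath('//em[@class="f14 l24"]/a'):
        # string(.) yields the element's text content, or '' when it is empty.
        title = a.xpath("string(.)").strip()
        items.append({"title": title, "link": a.get("href")})
    return items


for item in extract_links(SAMPLE_HTML):
    print(item)
```

Here the empty link still produces an entry with an empty title, so the third headline stays attached to its own URL.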

The select method

    import requests
    from bs4 import BeautifulSoup

    url = "http://news.qq.com/"
    headers = {
        'Accept': '*/*',
        'Accept-Language': 'en-US,en;q=0.8',
        'Cache-Control': 'max-age=0',
        'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/48.0.2564.116 Safari/537.36',
        'Connection': 'keep-alive',
        'Referer': 'http://www.baidu.com/'
    }
    # Send browser-like headers so the request looks like it comes from a browser
    res = requests.get(url, headers=headers)
    soup = BeautifulSoup(res.text, 'lxml')
    # The CSS selector ends in "a", so select returns the <a> tags directly
    em = soup.select('em[class="f14 l24"] a')
    for i in em:
        title = i.get_text()
        link = i['href']
        print({
            'title': title,
            'link': link
        })
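The difference between `find_all` and `select` is easy to miss: `find_all('em', ...)` returns the `<em>` wrappers, so the link is reached through `.a`, whereas a selector ending in ` a` returns the `<a>` tags themselves. The sketch below runs both on a small invented document. `SAMPLE_HTML` and the two helper functions are hypothetical, and `html.parser` is used in place of `lxml` only so the example has one dependency fewer.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for the downloaded page.
SAMPLE_HTML = """
<em class="f14 l24"><a href="http://example.com/a">First headline</a></em>
<em class="f14 l24"><a href="http://example.com/b">Second headline</a></em>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")


def links_via_find_all(soup):
    """find_all yields <em> tags; step into .a to reach the link."""
    return [
        {"title": em.a.get_text(), "link": em.a["href"]}
        for em in soup.find_all("em", attrs={"class": "f14 l24"})
    ]


def links_via_select(soup):
    """select with a descendant selector yields the <a> tags directly."""
    return [
        {"title": a.get_text(), "link": a["href"]}
        for a in soup.select('em[class="f14 l24"] a')
    ]


print(links_via_find_all(soup) == links_via_select(soup))
```

Both helpers return the same list of dictionaries, so the choice between them is mostly a matter of whether a CSS selector or keyword filters read better for the page at hand.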