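I. Keepalived Case Analysis

· In enterprise applications, running a service on a single server creates a single point of failure

· Once that single point fails, the service is interrupted, which can cause serious damage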

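II. Introduction to Keepalived

1. A health-check tool designed specifically for LVS and HA

· Supports automatic failover (Failover)

· Supports node health checking (Health Checking)

· Official website: http://www.Keepalived.org/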

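III. How Keepalived Works

1. Keepalived uses the VRRP hot-standby protocol to provide multi-node hot standby for Linux servers

2. VRRP (Virtual Router Redundancy Protocol) is a backup solution originally designed for routers

· Several routers form a hot-standby group and serve clients through a shared virtual IP address

· Within each hot-standby group only one master router serves traffic at any time; the other routers stay in a redundant standby state

· If the currently active router fails, the remaining routers take over the virtual IP address automatically according to their configured priorities and continue to provide service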
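
The priority-based takeover described above can be sketched in a few lines of shell. This is a simplified model, not the VRRP implementation: the helper name `elect_master` and the "NAME PRIORITY STATUS" input format are assumptions made for illustration, and real VRRP also handles advertisements, timers and tie-breaking. The node names and priorities are the ones used in the case later in this article.

```shell
# elect_master: reads "NAME PRIORITY STATUS" lines on stdin and prints the
# name of the live node (STATUS "up") that has the highest priority.
# Hypothetical helper for illustration only.
elect_master() {
    awk '$3 == "up" && ($2 + 0 > best) { best = $2 + 0; name = $1 }
         END { if (name != "") print name }'
}

# Both directors alive: the node with priority 110 wins.
printf '%s\n' 'lvs-zhu 110 up' 'lvs-bei 105 up' | elect_master

# Master down: the remaining live node takes over.
printf '%s\n' 'lvs-zhu 110 down' 'lvs-bei 105 up' | elect_master
```

The first call prints `lvs-zhu` and the second prints `lvs-bei`, which mirrors the failover behaviour tested in steps 11 and 12.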

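IV. Keepalived Case Walkthrough

1. Failover in a two-node hot-standby setup is implemented by floating the virtual IP address from one node to the other, and suits all kinds of application servers

2. Implementing two-node hot standby for a Web service

· Floating address: 192.168.10.72

· Master and backup servers: 192.168.10.73, 192.168.10.74

· Application service provided: Web

3. Environment (built on LVS-DR)

4. Configure the master director (192.168.100.10)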

Load the ip_vs module

[root@lvs-zhu ~]# modprobe ip_vs

[root@lvs-zhu ~]# cat /proc/net/ip_vs

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@lvs-zhu ~]# rpm -ivh /mnt/Packages/ipvsadm-1.27-7.el7.x86_64.rpm

[root@lvs-zhu ~]# yum -y install gcc gcc-c++ make popt-devel openssl-devel kernel-devel

[root@lvs-zhu ~]# tar zxf keepalived-2.0.13.tar.gz

[root@lvs-zhu ~]# cd keepalived-2.0.13/

[root@lvs-zhu keepalived-2.0.13]# ./configure --prefix=/

[root@lvs-zhu keepalived-2.0.13]# make && make install

[root@lvs-zhu keepalived-2.0.13]# cp keepalived/etc/init.d/keepalived /etc/init.d/

[root@lvs-zhu keepalived-2.0.13]# systemctl enable keepalived.service

[root@lvs-zhu keepalived-2.0.13]# vi /etc/keepalived/keepalived.conf

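! Configuration File for keepalived

global_defs {

router_id LVS_01 # name of this server

}

vrrp_instance VI_1 { # define a VRRP hot-standby instance

state MASTER # hot-standby state: MASTER for the master server, BACKUP for the backup server

interface ens33 # physical interface that carries the VIP

virtual_router_id 51 # virtual router ID; must be identical within a hot-standby group

priority 110 # priority; a larger value means a higher priority

advert_int 1 # advertisement interval in seconds (heartbeat frequency)

authentication { # authentication settings; must be identical within a hot-standby group

auth_type PASS # authentication type

auth_pass 6666 # password string

}

virtual_ipaddress { # floating address (VIP); more than one may be listed

192.168.100.100

}

}

virtual_server 192.168.100.100 80 { # virtual server address (VIP) and port

delay_loop 6 # interval between health checks (seconds)

lb_algo rr # round-robin (rr) scheduling algorithm

lb_kind DR # direct routing (DR) cluster mode

persistence_timeout 6 # connection persistence time (seconds)

protocol TCP # the application service uses TCP

real_server 192.168.100.20 80 { # address and port of the first web server node

weight 1 # node weight

TCP_CHECK { # health check method

connect_port 80 # port to check

connect_timeout 3 # connection timeout (seconds)

nb_get_retry 3 # number of retries

delay_before_retry 3 # delay between retries (seconds)

}

}

real_server 192.168.100.30 80 { # address and port of the second web server node

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}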
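
To make the TCP_CHECK parameters more concrete, the following is a minimal sketch of the same idea in shell: try to open a TCP connection, give up after a timeout, and retry a few times with a delay. It is not how Keepalived performs the check internally. The function name `tcp_check` and its argument order are assumptions made for illustration, and the sketch assumes that `bash` and the coreutils `timeout` command are installed. The defaults mirror the connect_timeout, nb_get_retry and delay_before_retry values in the configuration above.

```shell
# tcp_check HOST PORT [TIMEOUT] [RETRIES] [DELAY]
# Returns 0 as soon as a TCP connection to HOST:PORT succeeds, and 1 after
# one initial attempt plus RETRIES retries have all failed.
# Hypothetical helper for illustration only.
tcp_check() {
    tc_host=$1
    tc_port=$2
    tc_timeout=${3:-3}   # like connect_timeout
    tc_retries=${4:-3}   # like nb_get_retry
    tc_delay=${5:-3}     # like delay_before_retry
    tc_attempt=0
    while [ "$tc_attempt" -le "$tc_retries" ]; do
        # bash opens a TCP connection when redirecting to /dev/tcp/HOST/PORT;
        # timeout bounds how long the attempt may take.
        if timeout "$tc_timeout" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ \
            "$tc_host" "$tc_port" 2>/dev/null; then
            return 0
        fi
        tc_attempt=$((tc_attempt + 1))
        if [ "$tc_attempt" -le "$tc_retries" ]; then
            sleep "$tc_delay"
        fi
    done
    return 1
}

# Example (run from a director once the web servers are up):
#   tcp_check 192.168.100.20 80 && echo "web1 is healthy"
```

When a check like this fails, Keepalived removes the real server from the IPVS table, which is the behaviour shown in step 13.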

[root@lvs-zhu keepalived-2.0.13]# systemctl start keepalived.service

[root@lvs-zhu keepalived-2.0.13]# tail -f /var/log/messages

[root@lvs-zhu keepalived-2.0.13]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.100.100:80 rr persistent 6

-> 192.168.100.20:80 Route 1 0 0

-> 192.168.100.30:80 Route 1 0 0

[root@lvs-zhu keepalived-2.0.13]# ip addr show dev ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:9a:cd:27 brd ff:ff:ff:ff:ff:ff

inet 192.168.100.10/24 brd 192.168.100.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.100.100/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::26b5:ebd3:a0d2:db12/64 scope link

valid_lft forever preferred_lft forever
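
The presence of the VIP on a director can also be checked from a script rather than by eye. The sketch below parses `ip addr` output for a given address. The helper name `holds_vip` is an assumption made for illustration, and the sample text is taken from the output above so that the block runs without root privileges or a real ens33 interface.

```shell
# holds_vip VIP: reads `ip addr` output on stdin and succeeds (exit 0) if
# one of the "inet" lines carries the given address.
# Hypothetical helper for illustration only.
holds_vip() {
    awk -v vip="$1" '
        $1 == "inet" { split($2, parts, "/"); if (parts[1] == vip) found = 1 }
        END { exit !found }
    '
}

# Sample taken from the master director output above.
sample='inet 192.168.100.10/24 brd 192.168.100.255 scope global ens33
inet 192.168.100.100/32 scope global ens33'

if printf '%s\n' "$sample" | holds_vip 192.168.100.100; then
    echo "VIP present"
else
    echo "VIP absent"
fi
```

On a live director the same check would be `ip addr show dev ens33 | holds_vip 192.168.100.100`.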

5. Configure the backup director (192.168.100.40)

[root@lvs-bei ~]# modprobe ip_vs

[root@lvs-bei ~]# cat /proc/net/ip_vs

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@lvs-bei ~]# rpm -ivh /mnt/Packages/ipvsadm-1.27-7.el7.x86_64.rpm

[root@lvs-bei ~]# yum -y install gcc gcc-c++ make popt-devel openssl-devel kernel-devel

[root@lvs-bei ~]# tar zxf keepalived-2.0.13.tar.gz

[root@lvs-bei ~]# cd keepalived-2.0.13/

[root@lvs-bei keepalived-2.0.13]# ./configure --prefix=/

[root@lvs-bei keepalived-2.0.13]# make && make install

[root@lvs-bei keepalived-2.0.13]# cp keepalived/etc/init.d/keepalived /etc/init.d/

[root@lvs-bei keepalived-2.0.13]# systemctl enable keepalived.service

[root@lvs-bei keepalived-2.0.13]# vi /etc/keepalived/keepalived.conf

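! Configuration File for keepalived

global_defs {

router_id LVS_02 # name of this server

}

vrrp_instance VI_1 { # define a VRRP hot-standby instance

state BACKUP # hot-standby state: MASTER for the master server, BACKUP for the backup server

interface ens33 # physical interface that carries the VIP

virtual_router_id 51 # virtual router ID; must be identical within a hot-standby group

priority 105 # priority; a larger value means a higher priority

advert_int 1 # advertisement interval in seconds (heartbeat frequency)

authentication { # authentication settings; must be identical within a hot-standby group

auth_type PASS # authentication type

auth_pass 6666 # password string

}

virtual_ipaddress { # floating address (VIP); more than one may be listed

192.168.100.100

}

}

virtual_server 192.168.100.100 80 { # virtual server address (VIP) and port

delay_loop 6 # interval between health checks (seconds)

lb_algo rr # round-robin (rr) scheduling algorithm

lb_kind DR # direct routing (DR) cluster mode

persistence_timeout 6 # connection persistence time (seconds)

protocol TCP # the application service uses TCP

real_server 192.168.100.20 80 { # address and port of the first web server node

weight 1 # node weight

TCP_CHECK { # health check method

connect_port 80 # port to check

connect_timeout 3 # connection timeout (seconds)

nb_get_retry 3 # number of retries

delay_before_retry 3 # delay between retries (seconds)

}

}

real_server 192.168.100.30 80 { # address and port of the second web server node

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}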

[root@lvs-bei keepalived-2.0.13]# systemctl start keepalived.service

[root@lvs-bei keepalived-2.0.13]# tail -f /var/log/messages

[root@lvs-bei keepalived-2.0.13]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.100.100:80 rr

-> 192.168.100.20:80 Route 1 0 0

-> 192.168.100.30:80 Route 1 0 0

[root@lvs-bei keepalived-2.0.13]# ip addr show dev ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:dc:10:18 brd ff:ff:ff:ff:ff:ff

inet 192.168.100.40/24 brd 192.168.100.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::c1f0:d588:3477:d684/64 scope link

valid_lft forever preferred_lft forever

6. Configure the NFS server (192.168.100.50)

[root@nfs-server ~]# yum -y install rpcbind nfs-utils

[root@nfs-server ~]# mkdir -p /opt/web1

[root@nfs-server ~]# mkdir -p /opt/web2

[root@nfs-server ~]# echo '<h1>this is web1!</h1>' > /opt/web1/index.html

[root@nfs-server ~]# echo '<h1>this is web2!</h1>' > /opt/web2/index.html

[root@nfs-server ~]# vi /etc/exports

/opt/web1 192.168.100.20(ro)

/opt/web2 192.168.100.30(ro)

[root@nfs-server ~]# systemctl start rpcbind

[root@nfs-server ~]# systemctl start nfs

[root@nfs-server ~]# systemctl enable nfs

[root@nfs-server ~]# systemctl enable rpcbind

[root@nfs-server ~]# showmount -e

Export list for nfs-server:

/opt/web2 192.168.100.30

/opt/web1 192.168.100.20

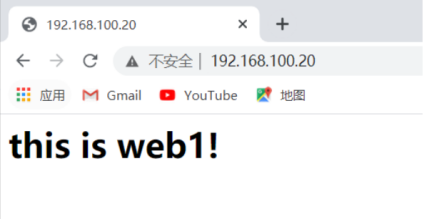

7. Configure the Web1 server (192.168.100.20)

[root@web1 ~]# yum -y install httpd

[root@web1 ~]# mount 192.168.100.50:/opt/web1 /var/www/html

[root@web1 ~]# showmount -e 192.168.100.50

Export list for 192.168.100.50:

/opt/web2 192.168.100.30

/opt/web1 192.168.100.20

[root@web1 ~]# systemctl start httpd

[root@web1 ~]# curl http://localhost

<h1>this is web1!</h1>

[root@web1 ~]# vi web1.sh

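#!/bin/bash

# web1: bind the VIP to the loopback interface and suppress ARP for it (LVS-DR real server)

ifconfig lo:0 192.168.100.100 broadcast 192.168.100.100 netmask 255.255.255.255 up

route add -host 192.168.100.100 dev lo:0

# arp_ignore=1: reply only to ARP requests for addresses configured on the receiving interface

echo "1" > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo "1" > /proc/sys/net/ipv4/conf/all/arp_ignore

# arp_announce=2: always use the best local address as the source of ARP announcements

echo "2" > /proc/sys/net/ipv4/conf/lo/arp_announce

echo "2" > /proc/sys/net/ipv4/conf/all/arp_announce

sysctl -p &> /dev/null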

[root@web1 ~]# sh web1.sh

[root@web1 ~]# ifconfig

......

lo:0: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 192.168.100.100 netmask 255.255.255.255

loop txqueuelen 1 (Local Loopback)

......

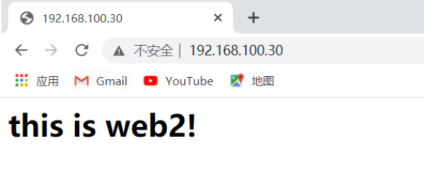
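
The values that the script writes under /proc/sys are lost on reboot, and `sysctl -p` only reloads what is stored in /etc/sysctl.conf. One possible way to make the ARP settings persistent is to generate the matching sysctl entries and append them to that file. The helper name `dr_sysctl_conf` is an assumption made for illustration; the keys and values are the ones the script sets.

```shell
# dr_sysctl_conf: prints sysctl.conf entries equivalent to the four echo
# commands in the real-server script (arp_ignore=1, arp_announce=2 for
# both the "lo" and "all" scopes).
# Hypothetical helper for illustration only.
dr_sysctl_conf() {
    for scope in lo all; do
        printf 'net.ipv4.conf.%s.arp_ignore = 1\n' "$scope"
        printf 'net.ipv4.conf.%s.arp_announce = 2\n' "$scope"
    done
}

# Print the fragment for review before writing it anywhere.
dr_sysctl_conf
```

After reviewing the output, it could be applied as root with `dr_sysctl_conf >> /etc/sysctl.conf` followed by `sysctl -p`. The loopback VIP itself would still have to be configured again at boot.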

8. Configure the web2 server (192.168.100.30)

[root@web2 ~]# yum -y install httpd

[root@web2 ~]# mount 192.168.100.50:/opt/web2 /var/www/html

[root@web2 ~]# showmount -e 192.168.100.50

Export list for 192.168.100.50:

/opt/web2 192.168.100.30

/opt/web1 192.168.100.20

[root@web2 ~]# systemctl start httpd

[root@web2 ~]# curl http://localhost

<h1>this is web2!</h1>

[root@web2 ~]# vi web2.sh

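#!/bin/bash

# web2: bind the VIP to the loopback interface and suppress ARP for it (LVS-DR real server)

ifconfig lo:0 192.168.100.100 broadcast 192.168.100.100 netmask 255.255.255.255 up

route add -host 192.168.100.100 dev lo:0

# arp_ignore=1: reply only to ARP requests for addresses configured on the receiving interface

echo "1" > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo "1" > /proc/sys/net/ipv4/conf/all/arp_ignore

# arp_announce=2: always use the best local address as the source of ARP announcements

echo "2" > /proc/sys/net/ipv4/conf/lo/arp_announce

echo "2" > /proc/sys/net/ipv4/conf/all/arp_announce

sysctl -p &> /dev/null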

[root@web2 ~]# sh web2.sh

[root@web2 ~]# ifconfig

......

lo:0: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 192.168.100.100 netmask 255.255.255.255

loop txqueuelen 1 (Local Loopback)

......

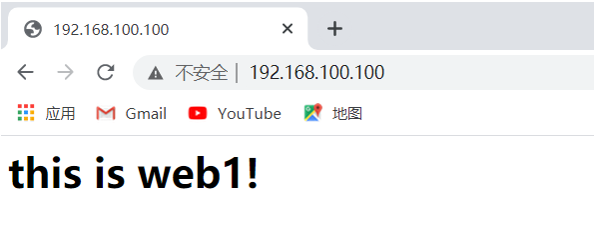

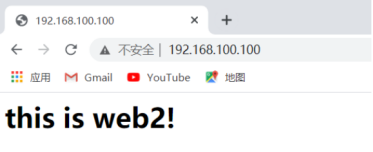

9. Test access to web1 and web2 by their own IP addresses

10. Test the virtual IP address and check the status

[root@lvs-zhu keepalived-2.0.13]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.100.100:80 rr

-> 192.168.100.20:80 Route 1 1 1

-> 192.168.100.30:80 Route 1 1 0
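
The output above shows connections spread over both real servers, which is what the rr scheduler is expected to do. As a simplified model (not the IPVS implementation), round-robin selection can be sketched as "request number modulo the number of servers". The helper name `rr_pick` is an assumption made for illustration.

```shell
# rr_pick INDEX SERVER...: prints the server that a plain round-robin
# scheduler would choose for the request with the given zero-based index.
# Hypothetical helper for illustration only.
rr_pick() {
    rr_idx=$1
    shift
    [ "$#" -gt 0 ] || return 1      # no servers to choose from
    shift $(( rr_idx % $# ))        # skip to the selected server
    printf '%s\n' "$1"
}

# Four consecutive requests alternate between the two web servers.
for i in 0 1 2 3; do
    rr_pick "$i" 192.168.100.20:80 192.168.100.30:80
done
```

The loop prints 192.168.100.20:80 and 192.168.100.30:80 alternately. On the real cluster, persistence_timeout 6 keeps a client on the same real server for a short period, so repeated requests from one client may not alternate immediately.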

11. Simulate a master director failure and verify the result

[root@lvs-zhu keepalived-2.0.13]# systemctl stop keepalived.service

[root@lvs-bei keepalived-2.0.13]# tail -f /var/log/messages

[root@lvs-bei keepalived-2.0.13]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.100.100:80 rr

-> 192.168.100.20:80 Route 1 0 0

-> 192.168.100.30:80 Route 1 0 0

[root@lvs-bei keepalived-2.0.13]# ip addr show dev ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:dc:10:18 brd ff:ff:ff:ff:ff:ff

inet 192.168.100.40/24 brd 192.168.100.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.100.100/32 scope global ens33

valid_lft forever preferred_lft forever # the virtual address has floated to the backup director

inet6 fe80::c1f0:d588:3477:d684/64 scope link

valid_lft forever preferred_lft forever

Check the connection status on the backup director

[root@lvs-bei keepalived-2.0.13]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.100.100:80 rr

-> 192.168.100.20:80 Route 1 0 1

-> 192.168.100.30:80 Route 1 2 0

12. Start the master director again and check the status

[root@lvs-zhu keepalived-2.0.13]# systemctl start keepalived.service

[root@lvs-zhu keepalived-2.0.13]# tail -f /var/log/messages

[root@lvs-zhu keepalived-2.0.13]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.100.100:80 rr

-> 192.168.100.20:80 Route 1 0 0

-> 192.168.100.30:80 Route 1 0 0

[root@lvs-zhu keepalived-2.0.13]# ip addr show dev ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:9a:cd:27 brd ff:ff:ff:ff:ff:ff

inet 192.168.100.10/24 brd 192.168.100.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.100.100/32 scope global ens33

valid_lft forever preferred_lft forever # the virtual address is back on the master director

inet6 fe80::26b5:ebd3:a0d2:db12/64 scope link

valid_lft forever preferred_lft forever

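13. Simulate a web server failure and check the status

① Stop the httpd service on web1

[root@web1 ~]# systemctl stop httpd

② Test the web page; only the page from the web2 server is returned

③ Check the real-server status on the director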

[root@lvs-zhu keepalived-2.0.13]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.100.100:80 rr

-> 192.168.100.30:80 Route 1 1 2

④ After the httpd service on web1 is started again, round-robin scheduling across both servers resumes

[root@lvs-zhu keepalived-2.0.13]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.100.100:80 rr

-> 192.168.100.20:80 Route 1 2 0

-> 192.168.100.30:80 Route 1 3 1
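
To compare the real-server list before and after a failure without reading the whole table, the `ipvsadm -Ln` output can be reduced to just the backend addresses. The sketch below assumes the column layout shown in this article, and the helper name `real_servers` is an assumption made for illustration. The sample is the output captured while web1 was stopped.

```shell
# real_servers: reads `ipvsadm -Ln` output on stdin and prints the
# address:port of every real server, skipping the column header line.
# Hypothetical helper for illustration only.
real_servers() {
    awk '$1 == "->" && $2 != "RemoteAddress:Port" { print $2 }'
}

# Sample captured while httpd on web1 was stopped (step 13, item ③).
sample='IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
-> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 192.168.100.100:80 rr
-> 192.168.100.30:80 Route 1 1 2'

printf '%s\n' "$sample" | real_servers
```

This prints only 192.168.100.30:80, confirming that web1 was removed from the pool. On a live director the equivalent command would be `ipvsadm -Ln | real_servers`.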