Paper link: https://arxiv.org/pdf/1504.08083.pdf

Code download: https://github.com/rbgirshick/fast-rcnn

- Abstract

Compared to previous work, Fast R-CNN employs several innovations to improve training and testing speed while also increasing detection accuracy. # Compared with earlier work, Fast R-CNN introduces several innovations that speed up training and testing while also raising detection accuracy.

- Introduction

Due to this complexity, current approaches (e.g., [9, 11, 19, 25]) train models in multi-stage pipelines that are slow and inelegant. # Because of this complexity, current approaches train models in multi-stage pipelines that are slow and clumsy.

First, numerous candidate object locations (often called “proposals”) must be processed. Second, these candidates provide only rough localization that must be refined to achieve precise localization. # First, a large number of candidate object locations must be processed. Second, these candidates give only rough locations, which must be refined to reach precise localization.

1.1 R-CNN and SPP-Net

R-CNN, however, has notable drawbacks: 1) Training is a multi-stage pipeline. 2) Training is expensive in space and time. 3) Object detection is slow. # R-CNN, however, has clear drawbacks: 1) training runs in multiple stages; 2) training is costly in time and space; 3) object detection is slow.

R-CNN is slow because it performs a ConvNet forward pass for each object proposal, without sharing computation. Spatial pyramid pooling networks (SPPnets) [11] were proposed to speed up R-CNN by sharing computation. SPPnet accelerates R-CNN by 10 to 100× at test time. Training time is also reduced by 3× due to faster proposal feature extraction. # R-CNN is slow because it runs a ConvNet forward pass for every proposal without sharing computation (this is shared computation across proposals, not weight sharing). SPPnet speeds up R-CNN by sharing that computation: it is 10 to 100× faster at test time, and faster proposal feature extraction cuts training time by 3×.

SPPnet also has notable drawbacks. Like R-CNN, training is a multi-stage pipeline that involves extracting features, fine-tuning a network with log loss, training SVMs, and finally fitting bounding-box regressors. Features are also written to disk. But unlike R-CNN, the fine-tuning algorithm proposed in [11] cannot update the convolutional layers that precede the spatial pyramid pooling. # SPPnet also has clear drawbacks. As with R-CNN, training is a multi-stage pipeline: feature extraction, fine-tuning with log loss, SVM training, and finally bounding-box regression. Features are written to disk. Unlike R-CNN, however, the fine-tuning in [11] cannot update the convolutional layers that precede the spatial pyramid pooling layer.

1.2 Contributions

We propose a new training algorithm that fixes the disadvantages of R-CNN and SPPnet, while improving on their speed and accuracy. The Fast R-CNN method has several advantages: 1. Higher detection quality (mAP) than R-CNN, SPPnet 2. Training is single-stage, using a multi-task loss 3. Training can update all network layers 4. No disk storage is required for feature caching

# To fix the drawbacks of R-CNN and SPPnet described above, we propose a new training algorithm that also improves on their speed and accuracy.

# Fast R-CNN has the following advantages: 1) higher detection quality (mAP) than R-CNN and SPPnet; 2) single-stage training, made possible by a multi-task loss; 3) training can update all network layers; 4) no disk storage is needed for feature caching.

- Fast R-CNN architecture and training

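The network first processes the whole image with several convolutional (conv) and max pooling layers to produce a conv feature map. Then, for each object proposal a region of interest (RoI) pooling layer extracts a fixed-length feature vector from the feature map. # The network first runs the whole image through several convolutional and max pooling layers to produce a conv feature map. Then, for each object proposal, the RoI pooling layer extracts a fixed-length feature vector from that feature map.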

2.1 The RoI pooling layer

RoI max pooling works by dividing the h × w RoI window into an H × W grid of sub-windows of approximate size h/H × w/W and then max-pooling the values in each sub-window into the corresponding output grid cell. # RoI max pooling divides the h × w RoI window into an H × W grid of sub-windows, each of approximate size h/H × w/W, and then max-pools the values in each sub-window into the corresponding output grid cell.
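The pooling rule above can be sketched in a few lines of plain Python. This is only an illustration, not the layer from the released code: the function name `roi_max_pool`, the `(top, left, h, w)` RoI layout, and the floor/ceil rounding of sub-window borders are assumptions made for the example.

```python
import math


def roi_max_pool(feature, roi, out_h, out_w):
    """Max-pool one RoI of a 2-D feature map into an out_h x out_w grid.

    feature: list of rows (lists of numbers).
    roi: (top, left, h, w) in feature-map cells (assumed layout).
    """
    top0, left0, h, w = roi
    pooled = []
    for i in range(out_h):
        # Sub-window i spans roughly h / out_h rows; floor/ceil keeps it non-empty.
        r_start = top0 + math.floor(i * h / out_h)
        r_end = top0 + math.ceil((i + 1) * h / out_h)
        row = []
        for j in range(out_w):
            c_start = left0 + math.floor(j * w / out_w)
            c_end = left0 + math.ceil((j + 1) * w / out_w)
            # Max over every cell of the sub-window goes to output cell (i, j).
            row.append(max(feature[r][c]
                           for r in range(r_start, r_end)
                           for c in range(c_start, c_end)))
        pooled.append(row)
    return pooled


feature_map = [[4 * r + c for c in range(4)] for r in range(4)]
print(roi_max_pool(feature_map, (0, 0, 4, 4), 2, 2))  # [[5, 7], [13, 15]]
```

Whatever the RoI size, the output is always out_h × out_w, which is what lets RoIs of different shapes feed the same fully connected layers.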

2.2 Initializing from pretrained networks

When a pre-trained network initializes a Fast R-CNN network, it undergoes three transformations.

# When a pre-trained network is used to initialize a Fast R-CNN network, it undergoes three transformations.

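First, the last max pooling layer is replaced by a RoI pooling layer that is configured by setting H and W to be compatible with the net’s first fully connected layer (e.g., H = W = 7 for VGG16).

# 1) The last max pooling layer is replaced by an RoI pooling layer, with H and W chosen to be compatible with the first fully connected layer (e.g., H = W = 7 for VGG16).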

Second, the network’s last fully connected layer and softmax (which were trained for 1000-way ImageNet classification) are replaced with the two sibling layers described earlier (a fully connected layer and softmax over K + 1 categories and category-specific bounding-box regressors).

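# 2) The network's last fully connected layer and softmax (trained for 1000-way ImageNet classification) are replaced by the two sibling layers described earlier: a fully connected layer with softmax over K + 1 categories, and category-specific bounding-box regressors.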

Third, the network is modified to take two data inputs: a list of images and a list of RoIs in those images.

# 3) The network is modified to take two data inputs: a list of images and a list of RoIs in those images.

2.3 Finetuning for detection

First, let’s elucidate why SPPnet is unable to update weights below the spatial pyramid pooling layer.

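# First, let us explain why SPPnet cannot update the weights below the spatial pyramid pooling layer, that is, in the layers that come before it.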

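The root cause is that back-propagation through the SPP layer is highly inefficient when each training sample (i.e. RoI) comes from a different image, which is exactly how R-CNN and SPPnet networks are trained.

# The root cause is that back-propagation through the SPP layer is highly inefficient (not impossible) when each training sample (RoI) comes from a different image, which is exactly how R-CNN and SPPnet are trained.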

One concern over this strategy is it may cause slow training convergence because RoIs from the same image are correlated. This concern does not appear to be a practical issue and we achieve good results with N = 2 and R = 128 using fewer SGD iterations than R-CNN.

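# One concern about this strategy (hierarchical sampling: each mini-batch first samples N images, then R/N RoIs from each image) is that RoIs from the same image are correlated, which might slow training convergence. In practice this does not appear to be an issue: with N = 2 and R = 128, good results are obtained using fewer SGD iterations than R-CNN.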

In addition to hierarchical sampling, Fast R-CNN uses a streamlined training process with one fine-tuning stage that jointly optimizes a softmax classifier and bounding-box regressors, rather than training a softmax classifier, SVMs, and regressors in three separate stages [9, 11]. # Besides hierarchical sampling, Fast R-CNN uses a streamlined training process with a single fine-tuning stage that jointly optimizes a softmax classifier and bounding-box regressors, instead of training a softmax classifier, SVMs, and regressors in three separate stages.

1) Multi-task loss

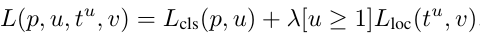

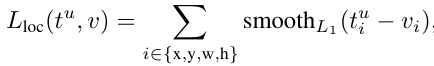

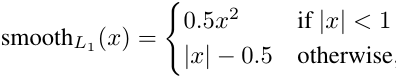

Each training RoI is labeled with a ground-truth class u and a ground-truth bounding-box regression target v. We use a multi-task loss L on each labeled RoI to jointly train for classification and bounding-box regression:

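# Each training RoI is labeled with a ground-truth class u and a ground-truth bounding-box regression target v. A multi-task loss L on each labeled RoI jointly trains classification and bounding-box regression.

# Background RoIs (u = 0) have no ground-truth box, so their localization loss is ignored; only foreground RoIs contribute a bounding-box regression loss.

# The smooth L1 loss used by Fast R-CNN is also more robust to outliers than the L2 loss used in R-CNN and SPPnet.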
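As a concrete reading of the notes above, here is a minimal sketch of the per-RoI loss as defined in the paper: a log loss on the true class plus, for foreground RoIs only, a smooth L1 loss summed over the four box coordinates. The function names and the balancing weight `lam` (set to 1 here) are assumptions for illustration; this is not code from the released implementation.

```python
import math


def smooth_l1(x):
    """Quadratic near zero, linear elsewhere, so large errors are not squared."""
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5


def multitask_loss(p, u, t_u, v, lam=1.0):
    """Loss for one RoI.

    p:   class probabilities over K + 1 classes (index 0 is background).
    u:   ground-truth class index.
    t_u: predicted box offsets (x, y, w, h) for class u.
    v:   ground-truth regression target (x, y, w, h).
    lam: weight between the two tasks (assumed value).
    """
    l_cls = -math.log(p[u])
    if u == 0:
        # Background has no ground-truth box: the localization term is dropped.
        return l_cls
    l_loc = sum(smooth_l1(t - g) for t, g in zip(t_u, v))
    return l_cls + lam * l_loc


print(multitask_loss([0.5, 0.5], 1, (0.0, 0.0, 0.0, 0.0), (0.5, 0.0, 0.0, 2.0)))
```

In the example, the coordinate error of 0.5 falls in the quadratic region (cost 0.125) while the error of 2.0 falls in the linear region (cost 1.5), which is where the robustness to outliers comes from.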

2) Mini-batch sampling

During fine-tuning, each SGD mini-batch is constructed from N = 2 images, chosen uniformly at random (as is common practice, we actually iterate over permutations of the dataset). We use mini-batches of size R = 128, sampling 64 RoIs from each image. # During fine-tuning, each SGD mini-batch is built from N = 2 images chosen uniformly at random (in practice, the training loop iterates over permutations of the dataset). Mini-batches have size R = 128, with 64 RoIs sampled from each image.

As in [9], we take 25% of the RoIs from object proposals that have intersection over union (IoU) overlap with a ground-truth bounding box of at least 0.5. These RoIs comprise the examples labeled with a foreground object class, i.e. u ≥ 1.

# As in [9], 25% of the RoIs are taken from object proposals whose intersection over union (IoU) with a ground-truth bounding box is at least 0.5. These RoIs are the foreground examples, labeled u ≥ 1.

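The remaining RoIs are sampled from object proposals that have a maximum IoU with ground truth in the interval [0.1, 0.5), following [11]. These are the background examples and are labeled with u = 0. The lower threshold of 0.1 appears to act as a heuristic for hard example mining [8].

# Following [11], the remaining RoIs are sampled from proposals whose maximum IoU with ground truth lies in [0.1, 0.5). These are the background examples, labeled u = 0. The lower threshold of 0.1 appears to act as a heuristic for hard example mining.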

During training, images are horizontally flipped with probability 0.5. No other data augmentation is used.

# During training, images are flipped horizontally with probability 0.5; no other data augmentation is used.
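The sampling rule for one image can be sketched as follows. The thresholds (IoU ≥ 0.5 for foreground, [0.1, 0.5) for background) and the 25% foreground share of 64 RoIs come from the text; the function names, the `(x1, y1, x2, y2)` box layout, and the choice to fill the rest of the quota with background when foreground proposals run short are assumptions for illustration.

```python
import random


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def sample_rois(proposals, gt_boxes, rois_per_image=64, fg_fraction=0.25, rng=random):
    """Split proposals into foreground/background by max IoU, then sample."""
    fg, bg = [], []
    for box in proposals:
        best = max((iou(box, g) for g in gt_boxes), default=0.0)
        if best >= 0.5:
            fg.append(box)        # foreground, u >= 1
        elif best >= 0.1:
            bg.append(box)        # background, u = 0
        # Proposals with max IoU below 0.1 are never sampled.
    n_fg = min(int(rois_per_image * fg_fraction), len(fg))
    n_bg = min(rois_per_image - n_fg, len(bg))  # assumed fill-up policy
    return rng.sample(fg, n_fg), rng.sample(bg, n_bg)
```

With the default arguments this yields at most 16 foreground and at least 48 background RoIs per image when enough proposals exist, so two images give the R = 128 mini-batch.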

3) Back-propagation through RoI pooling layers

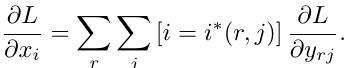

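Back-propagation routes derivatives through the RoI pooling layer. Let x_i ∈ ℝ be the i-th activation input into the RoI pooling layer and let y_rj be the layer’s j-th output from the r-th RoI.

# Back-propagation routes derivatives through the RoI pooling layer. Let x_i be the i-th activation input to the RoI pooling layer, and let y_rj be the j-th output of the layer for the r-th RoI.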

The RoI pooling layer’s backwards function computes partial derivative of the loss function with respect to each input variable xi by following the argmax switches:

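# The RoI pooling layer's backward function computes the partial derivative of the loss with respect to each input x_i by following the argmax switches.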

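In words, for each mini-batch RoI r and for each pooling output unit y_rj, the partial derivative ∂L/∂y_rj is accumulated if i is the argmax selected for y_rj by max pooling. In back-propagation, the partial derivatives ∂L/∂y_rj are already computed by the backwards function of the layer on top of the RoI pooling layer.

# In words: for each mini-batch RoI r and each pooling output unit y_rj, the partial derivative ∂L/∂y_rj is accumulated into ∂L/∂x_i if i is the argmax selected for y_rj by max pooling. During back-propagation, the ∂L/∂y_rj values have already been computed by the backward function of the layer on top of the RoI pooling layer.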
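The accumulation described in words above amounts to ∂L/∂x_i = Σ_r Σ_j [i = i*(r, j)] ∂L/∂y_rj, where i*(r, j) is the input index that won the max for output y_rj. A minimal sketch, assuming the forward pass stored those winning indices in a nested list `argmax` (a hypothetical name and layout):

```python
def roi_pool_backward(num_inputs, argmax, grad_out):
    """Route output gradients back to the inputs selected by max pooling.

    argmax[r][j]:   index i*(r, j) of the input chosen for output y_rj.
    grad_out[r][j]: dL/dy_rj, already computed by the layer above.
    Returns a list g with g[i] = dL/dx_i.
    """
    grad_in = [0.0] * num_inputs
    for r, row in enumerate(argmax):
        for j, i_star in enumerate(row):
            # One input can win in several RoIs, so gradients are summed.
            grad_in[i_star] += grad_out[r][j]
    return grad_in


# Two RoIs with two outputs each; input 1 is the argmax in both RoIs.
print(roi_pool_backward(4, [[1, 3], [1, 2]], [[0.5, 1.0], [2.0, 4.0]]))
# [0.0, 2.5, 4.0, 1.0]
```

Input 0 is never an argmax and so receives zero gradient, while input 1 collects gradient from both RoIs; this overlap between RoIs is why the sum runs over r as well as j.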

4) SGD hyper-parameters

The fully connected layers used for softmax classification and bounding-box regression are initialized from zero-mean Gaussian distributions with standard deviations 0.01 and 0.001, respectively. Biases are initialized to 0. All layers use a per-layer learning rate of 1 for weights and 2 for biases and a global learning rate of 0.001. When training on VOC07 or VOC12 trainval we run SGD for 30k mini-batch iterations, and then lower the learning rate to 0.0001 and train for another 10k iterations. When we train on larger datasets, we run SGD for more iterations, as described later. A momentum of 0.9 and parameter decay of 0.0005 (on weights and biases) are used.

# The fully connected layers for softmax classification and bounding-box regression are initialized from zero-mean Gaussians with standard deviations 0.01 and 0.001, respectively; biases are initialized to 0. All layers use a per-layer learning rate multiplier of 1 for weights and 2 for biases, with a global learning rate of 0.001. On VOC07 or VOC12 trainval, SGD runs for 30k mini-batch iterations, then the learning rate is lowered to 0.0001 for another 10k iterations. Larger datasets are trained for more iterations, as described later. Momentum is 0.9 and parameter decay is 0.0005 (on weights and biases).
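The schedule in this paragraph can be written down as a small helper. This is a sketch under the assumption that the per-layer values 1 and 2 act as multipliers on the global rate; the function and constant names are made up for the example.

```python
BASE_LR = 0.001       # global learning rate
STEP_ITER = 30_000    # iteration at which the rate is lowered (VOC07/VOC12)
TOTAL_ITERS = 40_000  # 30k iterations + 10k at the lower rate
MOMENTUM = 0.9
WEIGHT_DECAY = 0.0005


def global_lr(iteration):
    """0.001 for the first 30k iterations, then 0.0001."""
    return BASE_LR if iteration < STEP_ITER else BASE_LR * 0.1


def effective_lr(iteration, is_bias):
    """Apply the per-layer multiplier: 1 for weights, 2 for biases (assumed semantics)."""
    return global_lr(iteration) * (2.0 if is_bias else 1.0)


for it in (0, 29_999, 30_000, TOTAL_ITERS - 1):
    print(it, effective_lr(it, is_bias=False), effective_lr(it, is_bias=True))
```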

2.4 Scale invariance

We explore two ways of achieving scale invariant object detection: (1) via “brute force” learning and (2) by using image pyramids. These strategies follow the two approaches in [11]. In the brute-force approach, each image is processed at a pre-defined pixel size during both training and testing. The network must directly learn scale-invariant object detection from the training data. The multi-scale approach, in contrast, provides approximate scale-invariance to the network through an image pyramid. At test-time, the image pyramid is used to approximately scale-normalize each object proposal.

# Two ways of achieving scale-invariant detection are explored: (1) "brute force" learning and (2) image pyramids; both follow the approaches in [11]. In the brute-force approach, every image is processed at a pre-defined pixel size during both training and testing, so the network must learn scale-invariant detection directly from the training data. The multi-scale approach, in contrast, gives the network approximate scale invariance through an image pyramid; at test time the pyramid is used to approximately scale-normalize each object proposal.

- Fast R-CNN detection

Once a Fast R-CNN network is fine-tuned, detection amounts to little more than running a forward pass (assuming object proposals are pre-computed). The network takes as input an image (or an image pyramid, encoded as a list of images) and a list of R object proposals to score. At test-time, R is typically around 2000, although we will consider cases in which it is larger (≈ 45k). When using an image pyramid, each RoI is assigned to the scale such that the scaled RoI is closest to 224² pixels in area.

# Once a Fast R-CNN network is fine-tuned, detection is little more than a forward pass (assuming the object proposals are pre-computed). The network takes as input an image (or an image pyramid, encoded as a list of images) and a list of R object proposals to score. At test time R is typically around 2000, although larger values (about 45k) are also considered. With an image pyramid, each RoI is assigned to the pyramid scale at which its scaled area is closest to 224 × 224 pixels.
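The scale-assignment rule in the last sentence can be sketched as a one-line search. The names below are hypothetical, the scale is taken to be the length of the image's shortest side, and the example pyramid {480, 576, 688, 864, 1200} is only an illustrative choice of scales.

```python
def best_scale(roi_w, roi_h, image_short_side, scales, target_area=224 * 224):
    """Pick the pyramid scale at which the resized RoI area is closest to 224^2.

    roi_w, roi_h:     RoI size in the original image, in pixels.
    image_short_side: shortest side of the original image, in pixels.
    scales:           candidate shortest-side lengths of the pyramid levels.
    """
    def scaled_area(s):
        factor = s / image_short_side  # resize factor for this pyramid level
        return (roi_w * factor) * (roi_h * factor)

    return min(scales, key=lambda s: abs(scaled_area(s) - target_area))


pyramid = [480, 576, 688, 864, 1200]         # illustrative scales
print(best_scale(100, 100, 600, pyramid))    # 1200: small RoI goes to the largest level
print(best_scale(300, 300, 600, pyramid))    # 480: large RoI goes to the smallest level
```

Small objects are thus read from the upsampled levels and large objects from the downsampled ones, which is how the pyramid approximately normalizes object scale.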

3.1 Truncated SVD for faster detection

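For whole-image classification, the time spent computing the fully connected layers is small compared to the conv layers. On the contrary, for detection the number of RoIs to process is large and nearly half of the forward pass time is spent computing the fully connected layers (see Fig. 2). Large fully connected layers are easily accelerated by compressing them with truncated SVD [5, 23]. To compress a network, the single fully connected layer corresponding to W is replaced by two fully connected layers, without a non-linearity between them. This simple compression method gives good speedups when the number of RoIs is large.

# For whole-image classification, the time spent in the fully connected layers is small compared with the conv layers. For detection, in contrast, the number of RoIs is large and nearly half of the forward-pass time goes to the fully connected layers. Large fully connected layers are easily accelerated by compressing them with truncated SVD. To compress a network, the single fully connected layer with weight matrix W is replaced by two fully connected layers with no non-linearity between them. This simple compression gives good speedups when the number of RoIs is large.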
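The replacement of one layer by two follows directly from the truncated factorization. As a worked sketch (the symbols u, v, t and the sizes in the numeric example are chosen here for illustration: u × v matches the shape of VGG16's first fully connected layer, and t = 1024 is an assumed truncation rank):

```latex
% Truncated SVD of a u x v weight matrix, keeping the top t singular values
W \approx U \Sigma_t V^{\top}, \qquad
U \in \mathbb{R}^{u \times t},\quad
\Sigma_t \in \mathbb{R}^{t \times t},\quad
V \in \mathbb{R}^{v \times t}

% One layer y = W x + b becomes two linear layers with nothing in between
y = W x + b \;\approx\; U \left( \Sigma_t V^{\top} x \right) + b

% Parameter count drops from uv to t(u + v)
u v \;\longrightarrow\; t\,(u + v)

% Illustrative numbers: u = 4096, v = 25088, t = 1024
4096 \cdot 25088 = 102{,}760{,}448
\;\longrightarrow\;
1024 \cdot (4096 + 25088) = 29{,}884{,}416
\quad (\approx 3.4\times \text{ fewer})
```

The first new layer applies Σ_t Vᵀ without a bias and the second applies U with the original bias b. Because the fully connected cost is paid once per RoI, the saving grows with the number of RoIs.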

- Main results

4.1 Experimental setup

Our experiments use three pre-trained ImageNet models that are available online. The first is the CaffeNet (essentially AlexNet [14]) from R-CNN [9]. We alternatively refer to this CaffeNet as model S, for “small.” The second network is VGG_CNN_M_1024 from [3], which has the same depth as S, but is wider. We call this network model M, for “medium.” The final network is the very deep VGG16 model from [20]. Since this model is the largest, we call it model L. # The experiments use three pre-trained ImageNet models available online. The first is CaffeNet (essentially AlexNet) from R-CNN, called model S for "small". The second is VGG_CNN_M_1024 from [3], which has the same depth as S but is wider, called model M for "medium". The last is the very deep VGG16 from [20]; since it is the largest, it is called model L.

4.2 VOC 2010 and 2012 results

Skipped.

4.3 VOC 2007 results

Skipped.

4.4 Training and testing time

For VGG16, Fast R-CNN processes images 146× faster than R-CNN without truncated SVD and 213× faster with it. Training time is reduced by 9×, from 84 hours to 9.5. Compared to SPPnet, Fast R-CNN trains VGG16 2.7× faster (in 9.5 vs. 25.5 hours) and tests 7× faster without truncated SVD or 10× faster with it. Fast R-CNN also eliminates hundreds of gigabytes of disk storage, because it does not cache features. # For VGG16, Fast R-CNN processes images 146× faster than R-CNN without truncated SVD and 213× faster with it. Training time drops by about 9×, from 84 hours to 9.5. Compared with SPPnet, Fast R-CNN trains VGG16 2.7× faster and tests 7× faster without truncated SVD, or 10× faster with it. Because it does not cache features, Fast R-CNN also saves hundreds of gigabytes of disk storage.
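The training-time ratios quoted above can be checked from the hours given in the text; the helper name is made up for this quick check.

```python
def speedup(before_hours, after_hours):
    """How many times faster the second training time is than the first."""
    return before_hours / after_hours


rcnn_vs_fast = speedup(84.0, 9.5)     # R-CNN vs. Fast R-CNN, VGG16 training
sppnet_vs_fast = speedup(25.5, 9.5)   # SPPnet vs. Fast R-CNN, VGG16 training
print(f"{rcnn_vs_fast:.1f}x, {sppnet_vs_fast:.1f}x")  # 8.8x, 2.7x
```

The first ratio is about 8.8, which the text rounds to 9×; the second matches the quoted 2.7×.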

Truncated SVD can reduce detection time by more than 30% with only a small (0.3 percentage point) drop in mAP and without needing to perform additional fine-tuning after model compression.

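# Truncated SVD can cut detection time by more than 30% at the cost of only a small drop in mAP (0.3 percentage points), with no additional fine-tuning needed after compression.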

4.5 Which layers to finetune?

For the less deep networks considered in the SPPnet paper [11], fine-tuning only the fully connected layers appeared to be sufficient for good accuracy. We hypothesized that this result would not hold for very deep networks. To validate that fine-tuning the conv layers is important for VGG16, we use Fast R-CNN to fine-tune, but freeze the thirteen conv layers so that only the fully connected layers learn. This ablation emulates single-scale SPPnet training and decreases mAP from 66.9% to 61.4% (Table 5). Does this mean that all conv layers should be fine-tuned? In short, no. In the smaller networks (S and M) we find that conv1 is generic and task independent (a well-known fact [14]). Allowing conv1 to learn, or not, has no meaningful effect on mAP. For VGG16, we found it only necessary to update layers from conv3_1 and up (9 of the 13 conv layers).

# For the less deep networks in the SPPnet paper [11], fine-tuning only the fully connected layers appeared sufficient for good accuracy. The authors hypothesized that this would not hold for very deep networks. To check that fine-tuning the conv layers matters for VGG16, they fine-tune with Fast R-CNN but freeze the thirteen conv layers so that only the fully connected layers learn. This ablation emulates single-scale SPPnet training and lowers mAP from 66.9% to 61.4%. Does this mean all conv layers should be fine-tuned? In short, no. In the smaller networks (S and M), conv1 is generic and task independent, and whether or not conv1 is allowed to learn has no meaningful effect on mAP. For VGG16, it was only necessary to update the layers from conv3_1 and up (9 of the 13 conv layers).
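The "9 of the 13 conv layers" count can be made concrete by listing VGG16's conv layers and splitting them at conv3_1. This is a bookkeeping sketch only; the layer names follow the usual VGG16 naming, and the helper name is hypothetical.

```python
# The thirteen conv layers of VGG16, in order.
VGG16_CONV_LAYERS = [
    "conv1_1", "conv1_2",
    "conv2_1", "conv2_2",
    "conv3_1", "conv3_2", "conv3_3",
    "conv4_1", "conv4_2", "conv4_3",
    "conv5_1", "conv5_2", "conv5_3",
]


def split_layers(first_trainable="conv3_1"):
    """Return (frozen, trainable) conv layers for a given fine-tuning depth."""
    start = VGG16_CONV_LAYERS.index(first_trainable)
    return VGG16_CONV_LAYERS[:start], VGG16_CONV_LAYERS[start:]


frozen, trainable = split_layers()
print(len(frozen), len(trainable))  # 4 9
```

Freezing conv1_1 through conv2_2 leaves nine conv layers (plus the fully connected layers) to be updated during fine-tuning.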

- Design evaluation

5.1 Does multitask training help?

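Multi-task training is convenient because it avoids managing a pipeline of sequentially-trained tasks. But it also has the potential to improve results because the tasks influence each other through a shared representation (the ConvNet) [2]. Does multi-task training improve object detection accuracy in Fast R-CNN? # Multi-task training is convenient because it avoids managing a pipeline of sequentially trained tasks. It also has the potential to improve results, because the tasks influence each other through a shared representation (the ConvNet). So does multi-task training actually improve detection accuracy in Fast R-CNN?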

5.2 Scale invariance: to brute force or finesse?

We compare two strategies for achieving scale-invariant object detection: brute-force learning (single scale) and image pyramids (multi-scale). In either case, we define the scale s of an image to be the length of its shortest side. # Two strategies for scale-invariant detection are compared: brute-force learning (single scale) and image pyramids (multi-scale). In either case, the scale s of an image is defined as the length of its shortest side.

5.3 Do we need more training data?

A good object detector should improve when supplied with more training data. Zhu et al. [24] found that DPM [8] mAP saturates after only a few hundred to thousand training examples.

# A good object detector should improve when given more training data. Zhu et al. found that the mAP of DPM saturates after only a few hundred to a thousand training examples.

5.4 Do SVMs outperform softmax?

Fast R-CNN uses the softmax classifier learnt during fine-tuning instead of training one-vs-rest linear SVMs post-hoc, as was done in R-CNN and SPPnet. # Fast R-CNN uses the softmax classifier learned during fine-tuning, rather than training one-vs-rest linear SVMs afterwards as R-CNN and SPPnet do.

5.5 Are more proposals always better?

There are (broadly) two types of object detectors: those that use a sparse set of object proposals (e.g., selective search [21]) and those that use a dense set (e.g., DPM [8]). Classifying sparse proposals is a type of cascade [22] in which the proposal mechanism first rejects a vast number of candidates leaving the classifier with a small set to evaluate. This cascade improves detection accuracy when applied to DPM detections [21]. We find evidence that the proposal-classifier cascade also improves Fast R-CNN accuracy.

# Broadly, there are two types of object detectors: those that use a sparse set of object proposals (e.g., selective search [21]) and those that use a dense set (e.g., DPM [8]). Classifying sparse proposals is a kind of cascade in which the proposal mechanism first rejects a vast number of candidates, leaving the classifier a small set to evaluate. This cascade improves detection accuracy when applied to DPM detections. The authors find evidence that the proposal-classifier cascade also improves Fast R-CNN accuracy.

5.6 Preliminary MS COCO results

We applied Fast R-CNN (with VGG16) to the MS COCO dataset [18] to establish a preliminary baseline. We trained on the 80k image training set for 240k iterations and evaluated on the “test-dev” set using the evaluation server. The PASCAL-style mAP is 35.9%; the new COCO-style AP, which also averages over IoU thresholds, is 19.7%. # Fast R-CNN (with VGG16) was applied to the MS COCO dataset to establish a preliminary baseline. It was trained on the 80k-image training set for 240k iterations and evaluated on the "test-dev" set using the evaluation server. The PASCAL-style mAP is 35.9%; the new COCO-style AP, which also averages over IoU thresholds, is 19.7%.

- Conclusion

This paper proposes Fast R-CNN, a clean and fast update to R-CNN and SPPnet. In addition to reporting state-of-the-art detection results, we present detailed experiments that we hope provide new insights. Of particular note, sparse object proposals appear to improve detector quality. This issue was too costly (in time) to probe in the past, but becomes practical with Fast R-CNN. Of course, there may exist yet undiscovered techniques that allow dense boxes to perform as well as sparse proposals. Such methods, if developed, may help further accelerate object detection.

# This paper proposes Fast R-CNN, a clean and fast update to R-CNN and SPPnet. Besides reporting state-of-the-art detection results, it presents detailed experiments intended to provide new insights. Of particular note, sparse object proposals appear to improve detector quality. This question was too costly (in time) to probe in the past, but becomes practical with Fast R-CNN. Of course, there may be as yet undiscovered techniques that let dense boxes perform as well as sparse proposals. Such methods, if developed, may help accelerate object detection further.