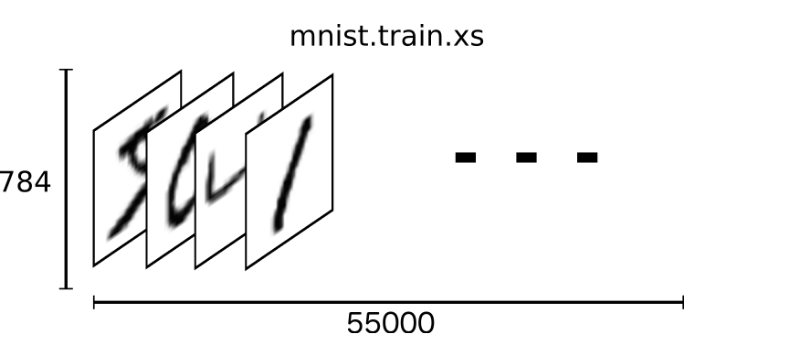

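The MNIST dataset can be downloaded from the official site: http://yann.lecun.com/exdb/mnist/. Once loaded, the data is split into two parts: a training set of 55,000 examples (mnist.train) and a test set of 10,000 examples (mnist.test). TensorFlow's loader also holds out 5,000 examples as mnist.validation, which is why the training set has 55,000 examples rather than the official 60,000. Each MNIST example has two parts: an image of a handwritten digit and its corresponding label. We call the images "xs" and the labels "ys". Both the training set and the test set contain xs and ys; for example, the training images are mnist.train.images and the training labels are mnist.train.labels.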

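The images are grayscale (black and white), and each one is 28 x 28 pixels. We flatten this array into a vector of length 28 x 28 = 784. Consequently, in the MNIST training set, mnist.train.images is a tensor of shape [55000, 784].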
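
The flattening step can be illustrated with a minimal sketch in plain Python, so that it runs without TensorFlow. The toy image and the names `image` and `flat` are made up for illustration; real MNIST images are already flattened by the loader.

```python
# A toy 28x28 "image": 28 rows, each holding 28 pixel intensities.
HEIGHT, WIDTH = 28, 28
image = [[0.0 for _ in range(WIDTH)] for _ in range(HEIGHT)]
image[3][5] = 1.0  # light up a single pixel at row 3, column 5

# Flatten row by row into one vector of length 28 * 28.
flat = [pixel for row in image for pixel in row]

print(len(flat))        # 784
print(flat.index(1.0))  # 89, i.e. 3 * 28 + 5
```

Row-major flattening discards the 2-D layout, which is acceptable for a single fully connected layer because it treats every pixel as an independent input feature.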

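Each MNIST image has a corresponding label, a digit from 0 to 9 that indicates which digit is drawn in the image. The labels are one-hot encoded: each label is a 10-dimensional vector that is 1 at the index of the digit and 0 everywhere else.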
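
As a small illustration of one-hot encoding, here is a sketch in plain Python. The helper names `one_hot` and `decode` are hypothetical and not part of TensorFlow; the loader produces these vectors for you when `one_hot=True` is passed.

```python
def one_hot(label, num_classes=10):
    """Return a vector that is 1.0 at position `label` and 0.0 elsewhere."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def decode(vec):
    """Recover the digit from a one-hot vector (index of the largest entry)."""
    return vec.index(max(vec))


print(one_hot(3))          # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(decode(one_hot(7)))  # 7
```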

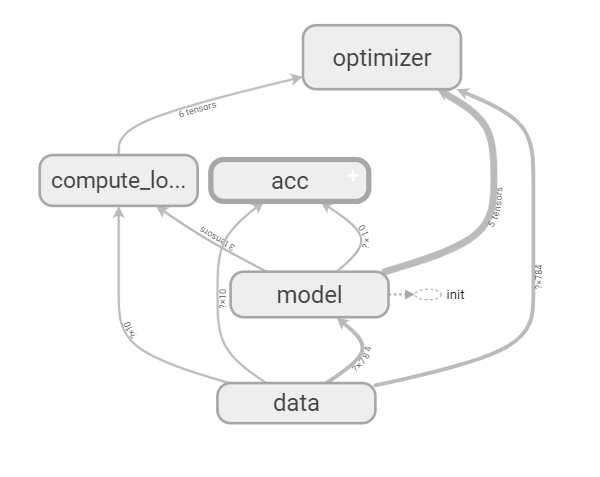

Handwritten digit recognition with a single (fully connected) layer

1. Define the data placeholders: features [None, 784], targets [None, 10]

    with tf.variable_scope("data"):
        # None leaves the batch dimension open, so any batch size can be fed.
        x = tf.placeholder(tf.float32, [None, 784])
        y_true = tf.placeholder(tf.float32, [None, 10])

2. Build the model: randomly initialize the weights w [784, 10], initialize the bias b [10] to zero, and compute y_predict = tf.matmul(x, w) + b

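    with tf.variable_scope("model"):
        # Weights drawn from a standard normal distribution; bias starts at zero.
        w = tf.Variable(tf.random_normal([784, 10], mean=0.0, stddev=1.0))
        b = tf.Variable(tf.constant(0.0, shape=[10]))
        # Logits: one raw score per digit class for every input row.
        y_predict = tf.matmul(x, w) + b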
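
To make the shapes concrete, the following plain-Python sketch reproduces the same computation, x times w plus b, without TensorFlow. The helper `linear` and the random toy batch are assumptions made for illustration only.

```python
import random


def linear(x, w, b):
    """Compute x @ w + b for x: [n, d], w: [d, k], b: [k]; returns [n, k]."""
    return [
        [sum(x_i * w[i][j] for i, x_i in enumerate(row)) + b[j]
         for j in range(len(b))]
        for row in x
    ]


random.seed(0)
batch = [[random.random() for _ in range(784)] for _ in range(3)]        # [3, 784]
w = [[random.gauss(0.0, 1.0) for _ in range(10)] for _ in range(784)]   # [784, 10]
b = [0.0] * 10                                                          # [10]

y_predict = linear(batch, w, b)
print(len(y_predict), len(y_predict[0]))  # 3 10
```

Each row of the result holds ten raw scores (logits), one per digit class; they are not probabilities until a softmax is applied.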

3. Compute the loss: the mean of the per-sample losses

    with tf.variable_scope("compute_loss"):
        # Softmax cross-entropy per sample, averaged over the batch.
        loss = tf.reduce_mean(
            tf.nn.softmax_cross_entropy_with_logits(labels=y_true, logits=y_predict))
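
For readers who want to see what this loss computes, here is a rough plain-Python sketch of softmax followed by cross-entropy, averaged over a batch. It illustrates the math only and is not TensorFlow's implementation; the function names are hypothetical.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(labels, logits):
    """-sum(y_i * log(p_i)) with p = softmax(logits) and one-hot labels y."""
    probs = softmax(logits)
    return -sum(y * math.log(p) for y, p in zip(labels, probs) if y > 0)


def mean_loss(batch_labels, batch_logits):
    """Average the per-sample losses, as tf.reduce_mean does above."""
    losses = [cross_entropy(y, z) for y, z in zip(batch_labels, batch_logits)]
    return sum(losses) / len(losses)


label = [0.0] * 10
label[3] = 1.0                  # the true digit is 3
uniform = [0.0] * 10            # the model has no preference
confident = [0.0] * 10
confident[3] = 10.0             # the model strongly favors digit 3

print(cross_entropy(label, uniform))    # about 2.3026, i.e. ln(10)
print(cross_entropy(label, confident))  # close to 0
print(mean_loss([label, label], [uniform, confident]))
```

The loss is large when the probability assigned to the true class is small, and it approaches zero as that probability approaches 1.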

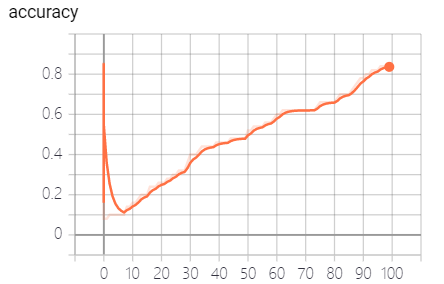

4. Optimize with gradient descent: learning rate 0.1, 2000 training steps, after which the accuracy is reported

    with tf.variable_scope("optimizer"):
        # Plain gradient descent with a learning rate of 0.1.
        train_op = tf.train.GradientDescentOptimizer(0.1).minimize(loss)
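
The update that each gradient-descent step performs can be sketched by hand for a tiny softmax model. This is a simplified illustration on made-up toy data (2 features, 2 classes), not what TensorFlow executes internally. It relies on the standard result that the gradient of softmax cross-entropy with respect to the logits is the predicted probabilities minus the one-hot labels.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, w, b):
    """Logits x @ w + b for every row of x."""
    return [[sum(x_i * w[i][j] for i, x_i in enumerate(row)) + b[j]
             for j in range(len(b))] for row in x]


def mean_loss(x, y, w, b):
    total = 0.0
    for logits, target in zip(forward(x, w, b), y):
        probs = softmax(logits)
        total -= sum(t * math.log(p) for t, p in zip(target, probs) if t > 0)
    return total / len(x)


def train_step(x, y, w, b, lr=0.1):
    """One step: parameter <- parameter - lr * gradient of the mean loss."""
    n, d, k = len(x), len(w), len(b)
    grad_w = [[0.0] * k for _ in range(d)]
    grad_b = [0.0] * k
    for row, logits, target in zip(x, forward(x, w, b), y):
        probs = softmax(logits)
        for j in range(k):
            delta = (probs[j] - target[j]) / n  # d(mean loss) / d(logit j)
            grad_b[j] += delta
            for i in range(d):
                grad_w[i][j] += row[i] * delta
    for j in range(k):
        b[j] -= lr * grad_b[j]
        for i in range(d):
            w[i][j] -= lr * grad_w[i][j]


# Toy data: class 0 when the first feature dominates, class 1 otherwise.
x = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.2, 0.8]]
y = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
w = [[0.0, 0.0], [0.0, 0.0]]
b = [0.0, 0.0]

before = mean_loss(x, y, w, b)
for _ in range(100):
    train_step(x, y, w, b, lr=0.1)
after = mean_loss(x, y, w, b)
print(before, after)  # the loss after training is lower than before
```

In the TensorFlow version, `minimize(loss)` derives these gradients automatically and applies the same kind of update to `w` and `b` every time `train_op` is run.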

5. Evaluate the model using argmax() and reduce_mean

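    with tf.variable_scope("acc"):
        # Compare the predicted class with the true class for each sample.
        eq = tf.equal(tf.argmax(y_true, 1), tf.argmax(y_predict, 1))
        # Cast the booleans to floats and average them to get the accuracy.
        accuracy = tf.reduce_mean(tf.cast(eq, tf.float32))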
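
The same evaluation logic can be written in a few lines of plain Python. The 3-class toy batch below is invented for illustration; the `_demo` names are hypothetical.

```python
def argmax(values):
    """Index of the largest entry."""
    return max(range(len(values)), key=values.__getitem__)


def accuracy(y_true, y_predict):
    """Fraction of samples whose predicted class matches the true class."""
    hits = [1.0 if argmax(t) == argmax(p) else 0.0
            for t, p in zip(y_true, y_predict)]
    return sum(hits) / len(hits)


# Four samples, three classes: one-hot labels and raw model scores.
y_true_demo = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]]
y_predict_demo = [[2.0, 0.1, -1.0],
                  [0.3, 1.5, 0.2],
                  [0.9, 0.1, 0.4],   # wrong: predicts class 0, truth is class 2
                  [-0.5, 0.8, 0.1]]

print(accuracy(y_true_demo, y_predict_demo))  # 0.75
```

Because argmax only depends on which score is largest, the accuracy can be computed directly on the logits without applying softmax first.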

Loading the MNIST dataset

    import tensorflow as tf
    # Use the data-reading helper that TensorFlow provides.
    from tensorflow.examples.tutorials.mnist import input_data


    def full_connected():
        # Load the MNIST dataset.
        mnist = input_data.read_data_sets("data/mnist/input_data", one_hot=True)

Run results

accuracy: 0.08

accuracy: 0.08

accuracy: 0.1

accuracy: 0.1

accuracy: 0.1

accuracy: 0.1

accuracy: 0.1

accuracy: 0.1

accuracy: 0.14

accuracy: 0.14

accuracy: 0.16

accuracy: 0.16

accuracy: 0.18

accuracy: 0.2

accuracy: 0.2

accuracy: 0.2

accuracy: 0.24

accuracy: 0.24

accuracy: 0.24

accuracy: 0.26

accuracy: 0.26

accuracy: 0.26

accuracy: 0.28

accuracy: 0.28

accuracy: 0.3

accuracy: 0.3

accuracy: 0.32

accuracy: 0.32

accuracy: 0.32

accuracy: 0.36

accuracy: 0.4

accuracy: 0.4

accuracy: 0.4

accuracy: 0.42

accuracy: 0.44

accuracy: 0.44

accuracy: 0.44

accuracy: 0.44

accuracy: 0.44

accuracy: 0.46

accuracy: 0.46

accuracy: 0.46

accuracy: 0.46

accuracy: 0.46

accuracy: 0.48

accuracy: 0.48

accuracy: 0.48

accuracy: 0.48

accuracy: 0.48

accuracy: 0.48

accuracy: 0.52

accuracy: 0.52

accuracy: 0.54

accuracy: 0.54

accuracy: 0.54

accuracy: 0.54

accuracy: 0.56

accuracy: 0.56

accuracy: 0.56

accuracy: 0.58

accuracy: 0.6

accuracy: 0.6

accuracy: 0.62

accuracy: 0.62

accuracy: 0.62

accuracy: 0.62

accuracy: 0.62

accuracy: 0.62

accuracy: 0.62

accuracy: 0.62

accuracy: 0.62

accuracy: 0.62

accuracy: 0.62

accuracy: 0.62

accuracy: 0.64

accuracy: 0.66

accuracy: 0.66

accuracy: 0.66

accuracy: 0.66

accuracy: 0.66

accuracy: 0.66

accuracy: 0.68

accuracy: 0.7

accuracy: 0.7

accuracy: 0.7

accuracy: 0.7

accuracy: 0.72

accuracy: 0.74

accuracy: 0.76

accuracy: 0.78

accuracy: 0.78

accuracy: 0.8

accuracy: 0.8

accuracy: 0.82

accuracy: 0.82

accuracy: 0.82

accuracy: 0.84

accuracy: 0.84

accuracy: 0.84

accuracy: 0.84


For readers who are unsure why the following function is used as the loss:

tf.nn.softmax_cross_entropy_with_logits