Troubleshooting disk issues on Alibaba Cloud Elasticsearch: https://yq.aliyun.com/articles/688232?spm=a2c4e.11155435.0.0.e5e03312t6BoTN

Scheduled deletion of indices on Alibaba Cloud Elasticsearch: https://zhuanlan.zhihu.com/p/33294589

wget https://artifacts.elastic.co/downloads/kibana/kibana-6.3.1-x86_64.rpm

wget https://artifacts.elastic.co/downloads/logstash/logstash-6.3.1.rpm

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.3.1.rpm

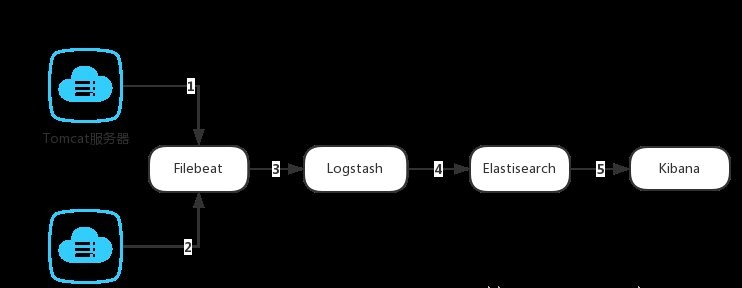

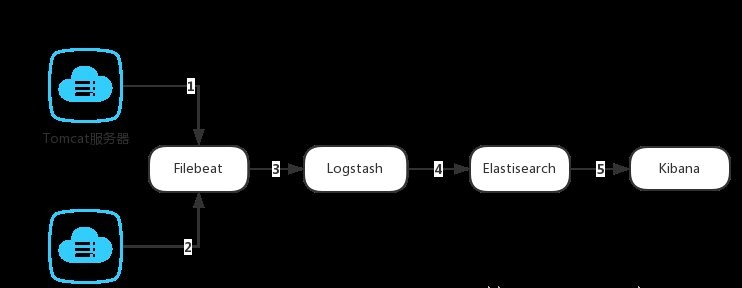

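1. ELK is the combination of the open-source tools Elasticsearch, Logstash, and Kibana, offered together as an open-source log management solution. It can take logs of any format from any source, search and analyze them, and display the results in real time.

2. What each component does:

2.1 Filebeat: monitors and forwards log files; it reads the log files at the configured paths and ships them to Logstash;

2.2 Logstash: collects and processes (parses, filters, enriches) logs, then forwards them to Elasticsearch;

2.3 Elasticsearch: a distributed full-text search engine; it indexes the data it receives, serves searches in near real time, and runs aggregations over the data;

2.4 Kibana: a web UI for filtering and displaying logs, so log records can be explored through a graphical interface. Sometimes called the Elasticsearch dashboard, it is a browser-based analytics and dashboard tool for Elasticsearch;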
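To give a feel for what "indexing and aggregation" in 2.3 means, here is a toy inverted index: each term points to the documents containing it, a search intersects those lists, and a count per field value plays the role of an aggregation. This is only an illustration; Elasticsearch's real machinery (Lucene, analyzers, shards) is far more involved, and `TinyIndex`, its crude tokenizer, and the AND semantics of `search` are assumptions of this sketch, not Elasticsearch APIs.

```python
import re
from collections import Counter, defaultdict
from typing import Dict, List, Set


def tokenize(text: str) -> List[str]:
    """Lowercase and split on non-word characters (a crude stand-in for an analyzer)."""
    return [t for t in re.split(r"\W+", text.lower()) if t]


class TinyIndex:
    """Toy inverted index: maps each term to the ids of the documents containing it."""

    def __init__(self) -> None:
        self.docs: List[dict] = []
        self.postings: Dict[str, Set[int]] = defaultdict(set)

    def add(self, doc: dict) -> int:
        doc_id = len(self.docs)
        self.docs.append(doc)
        for term in tokenize(doc["message"]):
            self.postings[term].add(doc_id)
        return doc_id

    def search(self, query: str) -> List[int]:
        """Return ids of documents that contain every query term (AND semantics)."""
        terms = tokenize(query)
        if not terms:
            return []
        hits = set.intersection(*(self.postings.get(t, set()) for t in terms))
        return sorted(hits)

    def terms_agg(self, field: str, doc_ids: List[int]) -> Counter:
        """Count hits per value of `field`, similar in spirit to a terms aggregation."""
        return Counter(self.docs[i][field] for i in doc_ids)
```

For example, after adding a few log records with `message` and `level` keys, `idx.terms_agg("level", idx.search("connection db"))` counts how many matching records there are per log level.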

3. Official website: https://www.elastic.co

4. Official documentation for each component:

4.1 Logstash documentation: https://www.elastic.co/guide/en/logstash/current/index.html

4.2 Filebeat documentation: https://www.elastic.co/guide/en/beats/filebeat/current/index.html

4.3 Elasticsearch documentation: https://www.elastic.co/guide/en/elasticsearch/reference/current/index.html

4.4 Kibana documentation: https://www.elastic.co/guide/en/kibana/current/index.html

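5. Steps 1 and 2 show Filebeat collecting logs from the Tomcat server. When Filebeat starts, it starts one or more prospectors (called inputs since Filebeat 6.3), which look for the specified log files on the Tomcat host; these files are the log sources whose contents are then shipped to Logstash.

6. Step 3 shows Logstash receiving logs from Filebeat. Filebeat acts as Logstash's input: on the Logstash side its data arrives through the beats input plugin (the processing details are covered later). Logstash processes the logs and outputs the processed events to Elasticsearch.

7. Step 4 shows Elasticsearch indexing and storing the data it receives from Logstash, so that the data can be searched and aggregated.

8. Step 5 shows Kibana visualizing the log data through the API that Elasticsearch provides.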
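The five steps above can be modelled as a chain of plain functions, one per component, to make the data flow concrete. This is a toy, in-memory sketch: the function names mirror the components but none of them call the real products, and the "filter" step (taking the first word of a line as the log level) is a hypothetical stand-in for real Logstash processing.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Event = dict


def filebeat(lines: List[str], env: str) -> List[Event]:
    """Steps 1-2: read raw log lines and attach the configured `fields`."""
    return [{"message": line, "fields": {"env": env}} for line in lines]


def logstash(events: List[Event]) -> List[Tuple[str, Event]]:
    """Step 3: process each event and pick the index it should be written to."""
    routed = []
    for event in events:
        words = event["message"].split()
        # Toy "filter": treat the first word of the line as the log level
        processed = {**event, "level": words[0] if words else "UNKNOWN"}
        routed.append((f"logstash-{event['fields']['env']}", processed))
    return routed


def elasticsearch(routed: List[Tuple[str, Event]]) -> Dict[str, List[Event]]:
    """Step 4: store every event under its index name."""
    indices: Dict[str, List[Event]] = defaultdict(list)
    for index, event in routed:
        indices[index].append(event)
    return dict(indices)


def kibana_count(indices: Dict[str, List[Event]], index: str, level: str) -> int:
    """Step 5: query stored events, the way Kibana reads data back from Elasticsearch."""
    return sum(1 for e in indices.get(index, []) if e["level"] == level)
```

Chaining them as `elasticsearch(logstash(filebeat(lines, "uat")))` mirrors the order Filebeat, Logstash, Elasticsearch; Kibana only ever reads from the stored indices.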

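9. Download and install the JDK, Elasticsearch, Kibana, Logstash, and Filebeat.

Installing and configuring the JDK, Elasticsearch, and Kibana is straightforward and is not covered here.

10. Extract Logstash; it needs no changes at this point.

11. Extract Filebeat.

Edit its configuration file (filebeat.yml) so that Filebeat connects to Logstash: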

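    filebeat.inputs:
    - type: log
      enabled: true    # enable this input; false disables it
      paths:
        - /mkt/tomcat/8.5.32/11001/logs/catalina.out    # path of the log file to read
      multiline.pattern: '^\['    # regex: a new event starts with a line beginning with "["
      multiline.negate: true    # default false (lines that match the pattern are merged); true: lines that do NOT match are merged
      multiline.match: after    # after: append to the previous line that matched; before: prepend to the next line that matches

    output.logstash:    # Filebeat sends the logs to Logstash
      hosts: ["192.168.5.16:5044"]    # Logstash address and port

    fields:
      env: uat    # custom tag; Logstash uses it to create a separate index per environment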
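The multiline settings above can be checked offline with a small Python model before deploying them. This is a sketch assuming the semantics described in the comments (negate: true, match: after): every line that does not start with "[" is glued onto the event in progress, which is how a Java stack trace stays attached to its log line. It ignores Filebeat's multiline.max_lines and multiline.timeout limits, and `merge_multiline` is a hypothetical helper, not part of Filebeat.

```python
import re
from typing import Iterable, List

# Same regex as multiline.pattern above: an event starts with a line beginning with "["
START = re.compile(r"^\[")


def merge_multiline(lines: Iterable[str]) -> List[str]:
    """Model of negate: true + match: after.

    A line matching START begins a new event; any other line is appended
    to the event currently being built (e.g. Java stack-trace lines).
    """
    events: List[str] = []
    for line in lines:
        if START.search(line) or not events:
            events.append(line)
        else:
            events[-1] += "\n" + line
    return events
```

Feeding it `["[10:00] ERROR failed", "java.lang.NullPointerException", "    at Foo.bar(Foo.java:42)", "[10:01] INFO ok"]` yields two events: the error line with its two trace lines, and the INFO line.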

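Start the components:

    ./filebeat -e -c filebeat.yml -d "publish"               # start Filebeat in the foreground (-e logs to stderr, -d "publish" enables publish debug output)
    nohup ./filebeat -e -c filebeat.yml >/dev/null 2>&1 &    # or start Filebeat in the background
    ./bin/logstash -f tomcat.conf &                          # start Logstash with the config from step 12
    nohup ./bin/kibana &                                     # start Kibana in the background
    ./bin/elasticsearch -d                                   # start Elasticsearch as a daemon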

12. Create a new Logstash configuration file:

input {

beats {

port => "5044"

}

}

output {

elasticsearch {

hosts => [ "192.168.5.16:9200" ]

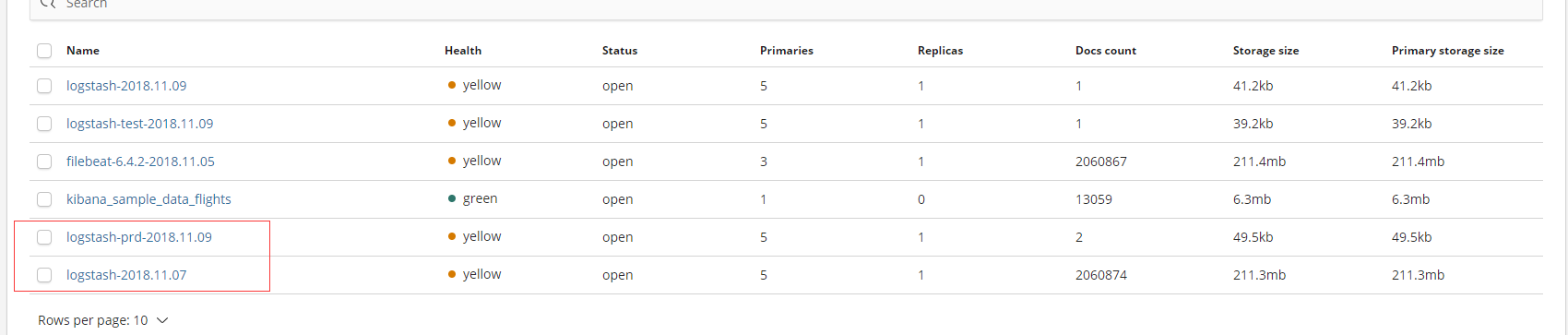

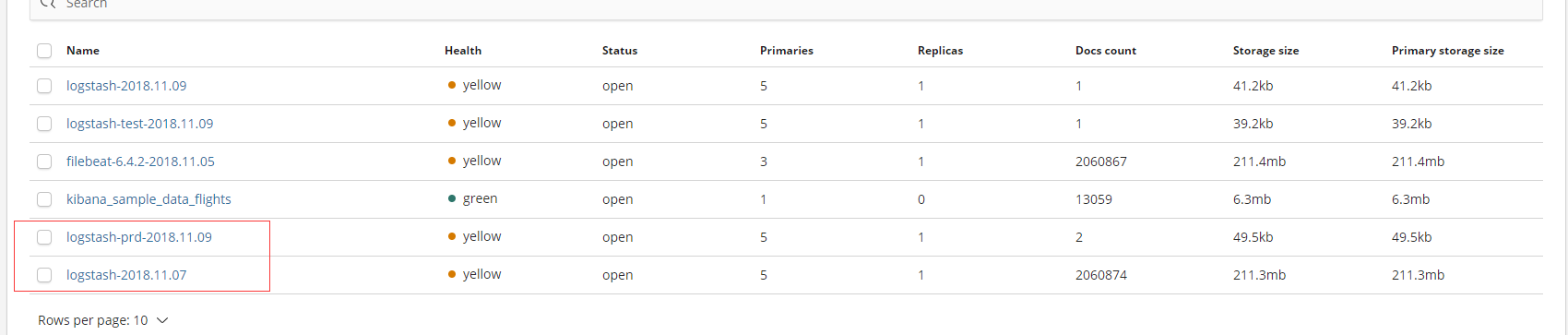

index => "logstash-%{[fields][env]}-%{+YYYY.MM.dd}"

}

}

Start Logstash with: ./bin/logstash -f tomcat.conf
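The index setting in tomcat.conf combines two references: %{[fields][env]} comes from the tag set in filebeat.yml, and %{+YYYY.MM.dd} formats the event's @timestamp in UTC. With servers on Beijing time (UTC+8) a new daily index therefore starts at 08:00 local time. The sketch below reproduces that naming in Python; `index_name` and `BEIJING` are hypothetical names for illustration, and the fallback for a missing tag assumes Logstash leaves an unresolved reference as literal text.

```python
from datetime import datetime, timedelta, timezone

BEIJING = timezone(timedelta(hours=8))  # example server timezone (UTC+8)


def index_name(event: dict, prefix: str = "logstash") -> str:
    """Approximate index => "logstash-%{[fields][env]}-%{+YYYY.MM.dd}" for one event."""
    # Assumed behavior: an unresolved %{...} reference is kept as literal text
    env = event.get("fields", {}).get("env", "%{[fields][env]}")
    # %{+YYYY.MM.dd} formats the event's @timestamp in UTC
    day = event["@timestamp"].astimezone(timezone.utc).strftime("%Y.%m.%d")
    return f"{prefix}-{env}-{day}"
```

An event logged at 07:30 Beijing time on 2019-01-04 lands in logstash-uat-2019.01.03, because in UTC it is still January 3rd.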

Configuration for shipping several Tomcat logs from a single Filebeat instance:

    #=========================== Filebeat inputs =============================

    filebeat.inputs:

    # Each - is an input. Most options can be set at the input level, so
    # you can use different inputs for various configurations.
    # Below are the input specific configurations.

    #10001-log
    - type: log
      enabled: true
      paths:
        - /mkt/tomcat/8.5.32/10001/logs/mstore.log
      fields:
        env: prdweb1-10001-log

    #10001-catalina
    - type: log
      enabled: true
      paths:
        - /mkt/tomcat/8.5.32/10001/logs/catalina.out
      fields:
        env: prdweb1-10001-catalina

    #11001-log
    - type: log
      enabled: true
      paths:
        - /mkt/tomcat/8.5.32/11001/logs/delivery.log
        #- c:\programdata\elasticsearch\logs\*
      fields:
        env: prdweb1-11001-log

    #11001-catalina.out
    - type: log
      enabled: true
      paths:
        - /mkt/tomcat/8.5.32/11001/logs/catalina.out
      fields:
        env: prdweb1-11001-catalina

    #12001-log
    - type: log
      enabled: true
      paths:
        - /mkt/tomcat/8.5.32/12001/logs/mstore.log
      fields:
        env: prdweb1-12001-log

    #12001-catalina
    - type: log
      enabled: true
      paths:
        - /mkt/tomcat/8.5.32/12001/logs/catalina.out
      fields:
        env: prdweb1-12001-catalina
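The six inputs above differ only in the Tomcat instance directory, the log file, and the env tag, so the section can be generated instead of copied by hand. This is a sketch: `render_inputs`, `INSTANCES`, and `BASE_DIR` are hypothetical names, the instance list is transcribed from the config above, and the optional `errors_only` flag adds the include_lines filter used in the error-only variant.

```python
from typing import List, Tuple

BASE_DIR = "/mkt/tomcat/8.5.32"

# (Tomcat instance directory, log file, suffix used in the env tag), from the config above
INSTANCES: List[Tuple[str, str, str]] = [
    ("10001", "mstore.log", "log"),
    ("10001", "catalina.out", "catalina"),
    ("11001", "delivery.log", "log"),
    ("11001", "catalina.out", "catalina"),
    ("12001", "mstore.log", "log"),
    ("12001", "catalina.out", "catalina"),
]


def render_inputs(host_tag: str, instances: List[Tuple[str, str, str]],
                  errors_only: bool = False) -> str:
    """Build the filebeat.inputs section: one log input per (instance, file) pair."""
    lines = ["filebeat.inputs:"]
    for instance, log_file, kind in instances:
        lines.append(f"#{instance}-{kind}")
        lines.append("- type: log")
        lines.append("  enabled: true")
        lines.append("  paths:")
        lines.append(f"    - {BASE_DIR}/{instance}/logs/{log_file}")
        lines.append("  fields:")
        lines.append(f"    env: {host_tag}-{instance}-{kind}")
        if errors_only:
            # Ship only lines matching the regex ERROR
            lines.append("  include_lines: ['ERROR']")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_inputs("prdweb1", INSTANCES))
```

render_inputs("uat", INSTANCES, errors_only=True) produces the same structure as the error-only configuration that follows.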

Configuration for collecting only the ERROR lines of the Tomcat logs:

    filebeat.inputs:

    #10001-log
    - type: log
      enabled: true
      paths:
        - /mkt/tomcat/8.5.32/10001/logs/mstore.log
      fields:
        env: uat-10001-log
      include_lines: ['ERROR']    # ship only lines that match the regex ERROR

    #10001-catalina
    - type: log
      enabled: true
      paths:
        - /mkt/tomcat/8.5.32/10001/logs/catalina.out
      fields:
        env: uat-10001-catalina
      include_lines: ['ERROR']

    #11001-log
    - type: log
      enabled: true
      paths:
        - /mkt/tomcat/8.5.32/11001/logs/delivery.log
        #- c:\programdata\elasticsearch\logs\*
      include_lines: ['ERROR']
      fields:
        env: uat-11001-log

    #11001-catalina.out
    - type: log
      enabled: true
      paths:
        - /mkt/tomcat/8.5.32/11001/logs/catalina.out
      fields:
        env: uat-11001-catalina
      include_lines: ['ERROR']

    #12001-log
    - type: log
      enabled: true
      paths:
        - /mkt/tomcat/8.5.32/12001/logs/mstore.log
      fields:
        env: uat-12001-log
      include_lines: ['ERROR']

    #12001-catalina
    - type: log
      enabled: true
      paths:
        - /mkt/tomcat/8.5.32/12001/logs/catalina.out
      fields:
        env: uat-12001-catalina
      include_lines: ['ERROR']
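Each include_lines entry is a regular expression, and Filebeat ships a line only if it matches at least one of them; the match can occur anywhere in the line, and an empty list means no filtering. The sketch below models that with Python's re.search (Filebeat itself uses Go regular expressions, which behave the same for simple patterns like these). `filter_lines` is a hypothetical helper; the test shows that 'ERROR' also matches "ERRORS", which a word-boundary pattern such as '\bERROR\b' avoids.

```python
import re
from typing import Iterable, List


def filter_lines(lines: Iterable[str], include_lines: List[str]) -> List[str]:
    """Keep only lines matching at least one include_lines regex (searched anywhere in the line)."""
    lines = list(lines)
    if not include_lines:
        return lines  # no include_lines configured: every line is shipped
    patterns = [re.compile(p) for p in include_lines]
    return [line for line in lines if any(p.search(line) for p in patterns)]
```

If multiline settings are also configured, Filebeat applies include_lines to the merged event, so an ERROR line keeps its stack trace.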

Collecting Java logs whose lines end in a JSON payload (Logstash configuration):

    input {
      beats {
        port => "5044"
      }
    }

    filter {
      # split each line into its prefix fields and the trailing JSON text
      grok {
        match => {"message" => "%{TIMESTAMP_ISO8601:timestamp} \[%{NOTSPACE:thread}\] %{LOGLEVEL:level} \[\s*(?<hydraTraceId>(.*?))\s*\] %{WORD:module}.%{WORD:pid}:%{NUMBER:line}-%{GREEDYDATA:jsonContent}$"}
      }

      # parse the JSON text into fields, then drop the raw text
      json {
        source => "jsonContent"
        remove_field => ["jsonContent", "message"]
      }

      # convert the epoch timestamps into date fields
      date {
        match => ["requestTimeStamp", "UNIX_MS", "UNIX"]
        target => 'requestTimeStamp'
      }

      date {
        match => ["responseTimeStamp", "UNIX_MS", "UNIX"]
        target => 'responseTimeStamp'
      }

      date {
        match => ["thirdPartRequestTimeStamp", "UNIX_MS", "UNIX"]
        target => 'thirdPartRequestTimeStamp'
      }

      date {
        match => ["thirdPartResponseTimeStamp", "UNIX_MS", "UNIX"]
        target => 'thirdPartResponseTimeStamp'
      }
    }

    output {
      elasticsearch {
        hosts => [ "localhost:9200" ]
        user => "elastic"
        password => "KRpr7GB8luXY4tBf"
        index => "filebeat-%{+YYYY.MM.dd}"
      }
    }
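To test a log format before wiring it into Logstash, the grok + json + date chain above can be approximated in Python. This is a sketch under stated assumptions: the regex is a hand-written simplification of the grok patterns (TIMESTAMP_ISO8601 and LOGLEVEL are narrowed, and the dot between module and pid is treated as a literal dot), the epoch values are assumed to be in milliseconds, and `parse`, `LINE_RE`, and `TIME_FIELDS` are hypothetical names. The `_grokparsefailure` tag mirrors the tag grok adds when a line does not match.

```python
import json
import re
from datetime import datetime, timezone

# Simplified equivalent of the grok pattern in the filter section above
LINE_RE = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}(?:[.,]\d+)?) "
    r"\[(?P<thread>\S+)\] "
    r"(?P<level>[A-Z]+) "
    r"\[\s*(?P<hydraTraceId>.*?)\s*\] "
    r"(?P<module>\w+)\.(?P<pid>\w+):(?P<line>\d+)-(?P<jsonContent>.*)$"
)

TIME_FIELDS = ("requestTimeStamp", "responseTimeStamp",
               "thirdPartRequestTimeStamp", "thirdPartResponseTimeStamp")


def parse(message: str) -> dict:
    m = LINE_RE.match(message)
    if m is None:
        return {"message": message, "tags": ["_grokparsefailure"]}
    event = m.groupdict()
    # json filter: merge the payload into the event; jsonContent is dropped
    event.update(json.loads(event.pop("jsonContent")))
    # date filters: epoch milliseconds -> timezone-aware UTC datetimes
    for name in TIME_FIELDS:
        if isinstance(event.get(name), (int, float)):
            event[name] = datetime.fromtimestamp(event[name] / 1000, tz=timezone.utc)
    return event
```

A line such as `2019-01-04 10:00:00.123 [http-nio-8080-exec-1] INFO [ abc123 ] OrderService.create:42-{"requestTimeStamp": 1546567200000}` parses into thread, level, trace id, module, method, and line number, plus a requestTimeStamp of 2019-01-04 02:00:00 UTC.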