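A small demo I built while following a video tutorial: read customer records from a text file with `FlatFileItemReader` and write them to a database table with `JdbcBatchItemWriter`.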

Table creation statement

```sql
CREATE TABLE customer (
    id        BIGINT PRIMARY KEY,
    firstName VARCHAR(50),
    lastName  VARCHAR(50),
    birthday  VARCHAR(50)
);
```

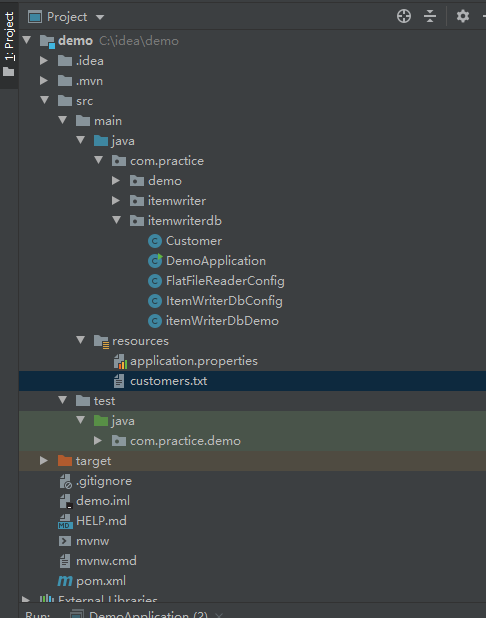

Directory structure

Customer entity class

```java
package com.practice.itemwriterdb;

public class Customer {
    private Long id;
    private String firstName;
    private String lastName;
    private String birthday;

    // Use Long consistently with the field; a primitive long getter would throw
    // a NullPointerException while id is still null
    public Long getId() {
        return id;
    }

    public void setId(Long id) {
        this.id = id;
    }

    public String getFirstName() {
        return firstName;
    }

    public void setFirstName(String firstName) {
        this.firstName = firstName;
    }

    public String getLastName() {
        return lastName;
    }

    public void setLastName(String lastName) {
        this.lastName = lastName;
    }

    public String getBirthday() {
        return birthday;
    }

    public void setBirthday(String birthday) {
        this.birthday = birthday;
    }

    @Override
    public String toString() {
        return "Customer{" +
                "id=" + id +
                ", firstName='" + firstName + '\'' +
                ", lastName='" + lastName + '\'' +
                ", birthday='" + birthday + '\'' +
                '}';
    }
}
```

DemoApplication

```java
package com.practice.itemwriterdb;

import org.springframework.batch.core.configuration.annotation.EnableBatchProcessing;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication
@EnableBatchProcessing
public class DemoApplication {

    public static void main(String[] args) {
        SpringApplication.run(DemoApplication.class, args);
    }
}
```

FlatFileReaderConfig

```java
package com.practice.itemwriterdb;

import org.springframework.batch.item.file.FlatFileItemReader;
import org.springframework.batch.item.file.mapping.DefaultLineMapper;
import org.springframework.batch.item.file.mapping.FieldSetMapper;
import org.springframework.batch.item.file.transform.DelimitedLineTokenizer;
import org.springframework.batch.item.file.transform.FieldSet;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.core.io.ClassPathResource;
import org.springframework.validation.BindException;

@Configuration
public class FlatFileReaderConfig {

    @Bean
    public FlatFileItemReader<Customer> flatFileReader() {
        FlatFileItemReader<Customer> reader = new FlatFileItemReader<Customer>();
        // Read customers.txt from the root of the classpath
        reader.setResource(new ClassPathResource("customers.txt"));
        // Uncomment if the file starts with a header line
        // reader.setLinesToSkip(1);

        // Parse the data: split each line on commas (the default delimiter) and name the columns
        DelimitedLineTokenizer tokenizer = new DelimitedLineTokenizer();
        tokenizer.setNames(new String[]{"id", "firstName", "lastName", "birthday"});

        DefaultLineMapper<Customer> mapper = new DefaultLineMapper<>();
        mapper.setLineTokenizer(tokenizer);
        // Copy the named fields of each line into a Customer object
        mapper.setFieldSetMapper(new FieldSetMapper<Customer>() {
            @Override
            public Customer mapFieldSet(FieldSet fieldSet) throws BindException {
                Customer customer = new Customer();
                customer.setId(fieldSet.readLong("id"));
                customer.setFirstName(fieldSet.readString("firstName"));
                customer.setLastName(fieldSet.readString("lastName"));
                customer.setBirthday(fieldSet.readString("birthday"));
                return customer;
            }
        });
        mapper.afterPropertiesSet();
        reader.setLineMapper(mapper);
        return reader;
    }
}
```
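To make the reader configuration concrete, here is a plain-Java sketch of what happens to each line. It uses no Spring Batch classes. The line is split on commas into the four named columns, and those columns are copied into a `Customer`. The class `LineMappingSketch`, its slimmed-down `Customer`, and the `linesToSkip` parameter (which only mimics `setLinesToSkip`) are hypothetical. The real `FlatFileItemReader` also manages the resource, restart state and parse exceptions.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical plain-Java sketch (not Spring Batch) of the per-line work done by
// the DelimitedLineTokenizer + FieldSetMapper configured above.
class LineMappingSketch {

    // Hypothetical stand-in for the Customer entity with just the four mapped fields.
    static class Customer {
        long id;
        String firstName;
        String lastName;
        String birthday;
    }

    private static final int COLUMN_COUNT = 4; // id, firstName, lastName, birthday

    // Split one line on commas and copy the columns into a Customer.
    static Customer mapLine(String line) {
        String[] tokens = line.split(",", -1); // -1 keeps trailing empty columns
        if (tokens.length != COLUMN_COUNT) {
            // Reject lines with the wrong number of columns instead of guessing
            throw new IllegalArgumentException(
                    "Expected " + COLUMN_COUNT + " columns but found " + tokens.length + ": " + line);
        }
        Customer c = new Customer();
        c.id = Long.parseLong(tokens[0].trim());
        c.firstName = tokens[1].trim();
        c.lastName = tokens[2].trim();
        c.birthday = tokens[3].trim(); // the space inside the timestamp is kept
        return c;
    }

    // Map every line after the first linesToSkip lines (compare reader.setLinesToSkip).
    static List<Customer> readAll(List<String> lines, int linesToSkip) {
        List<Customer> result = new ArrayList<>();
        for (int i = linesToSkip; i < lines.size(); i++) {
            result.add(mapLine(lines.get(i)));
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "1,john,Barrett,1994-10-19 14:11:03",
                "2,Mary,Barrett2,1999-10-19 14:11:03",
                "3,lisa,Barrett3,1995-10-19 14:11:03");
        for (Customer c : readAll(lines, 0)) {
            System.out.println(c.id + " | " + c.firstName + " | " + c.lastName + " | " + c.birthday);
        }
    }
}
```

The delimiter is a comma, so the space inside a value such as `1994-10-19 14:11:03` stays part of the `birthday` column rather than starting a new field.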

ItemWriterDbConfig

```java
package com.practice.itemwriterdb;

import org.springframework.batch.item.database.BeanPropertyItemSqlParameterSourceProvider;
import org.springframework.batch.item.database.JdbcBatchItemWriter;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

import javax.sql.DataSource;

@Configuration
public class ItemWriterDbConfig {

    @Autowired
    private DataSource dataSource;

    @Bean
    public JdbcBatchItemWriter<Customer> itemWriterDb() {
        JdbcBatchItemWriter<Customer> writer = new JdbcBatchItemWriter<Customer>();
        writer.setDataSource(dataSource);
        // Named parameters (:id, :firstName, ...) are filled from the Customer properties of the same name
        writer.setSql("insert into customer(id,firstName,lastName,birthday) values " +
                "(:id,:firstName,:lastName,:birthday)");
        writer.setItemSqlParameterSourceProvider(
                new BeanPropertyItemSqlParameterSourceProvider<>());
        return writer;
    }
}
```
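The writer's SQL uses named parameters (`:id`, `:firstName`, ...). Conceptually, Spring turns each `:name` into a JDBC `?` placeholder. `BeanPropertyItemSqlParameterSourceProvider` then supplies the value of the `Customer` property with that name through its getter. This is why the placeholder names must match the entity's property names.

The sketch below mimics that idea in plain Java. `NamedParamSketch` is hypothetical, and a `Map` stands in for the getter lookup. Its regex is deliberately simplified: unlike Spring's own parser, it does not skip quoted strings or SQL comments.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical plain-Java sketch (not Spring's implementation) of named-parameter binding.
class NamedParamSketch {

    // Matches ":id", ":firstName", ... (simplified: no handling of quotes or comments).
    private static final Pattern PARAM = Pattern.compile(":([A-Za-z_][A-Za-z0-9_]*)");

    // Replace every ":name" with "?" and record the names in the order they appear.
    static String toJdbcSql(String namedSql, List<String> names) {
        Matcher m = PARAM.matcher(namedSql);
        StringBuffer sb = new StringBuffer();
        while (m.find()) {
            names.add(m.group(1));
            m.appendReplacement(sb, "?");
        }
        m.appendTail(sb);
        return sb.toString();
    }

    // Look up one value per parameter name; the map stands in for calling the bean's getters.
    static List<Object> bindValues(List<String> names, Map<String, Object> properties) {
        List<Object> values = new ArrayList<>();
        for (String name : names) {
            if (!properties.containsKey(name)) {
                throw new IllegalArgumentException("No property for parameter :" + name);
            }
            values.add(properties.get(name));
        }
        return values;
    }

    public static void main(String[] args) {
        String sql = "insert into customer(id,firstName,lastName,birthday) values "
                + "(:id,:firstName,:lastName,:birthday)";
        List<String> names = new ArrayList<>();
        String jdbcSql = toJdbcSql(sql, names);

        Map<String, Object> customer = new LinkedHashMap<>();
        customer.put("id", 1L);
        customer.put("firstName", "john");
        customer.put("lastName", "Barrett");
        customer.put("birthday", "1994-10-19 14:11:03");

        System.out.println(jdbcSql);
        System.out.println(bindValues(names, customer));
    }
}
```

A typo such as `:firstname` in the SQL would fail the lookup here. In the real writer, the mismatch with the `Customer` property name likewise surfaces as an error when the chunk is written.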

itemWriterDbDemo

```java
package com.practice.itemwriterdb;

import org.springframework.batch.core.Job;
import org.springframework.batch.core.Step;
import org.springframework.batch.core.configuration.annotation.JobBuilderFactory;
import org.springframework.batch.core.configuration.annotation.StepBuilderFactory;
import org.springframework.batch.item.ItemReader;
import org.springframework.batch.item.ItemWriter;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class itemWriterDbDemo {

    @Autowired
    private JobBuilderFactory jobBuilderFactory;

    @Autowired
    private StepBuilderFactory stepBuilderFactory;

    // Reader bean defined in FlatFileReaderConfig
    @Autowired
    @Qualifier("flatFileReader")
    ItemReader<Customer> flatFileItemReader;

    // Writer bean defined in ItemWriterDbConfig
    @Autowired
    @Qualifier("itemWriterDb")
    ItemWriter<? super Customer> itemWriterDb;

    @Bean
    public Job itemWriterDemoDbJob() {
        return jobBuilderFactory.get("itemWriterDemoDbJob")
                .start(itemWriterDbDemoStep())
                .build();
    }

    @Bean
    public Step itemWriterDbDemoStep() {
        // Read items one by one and hand them to the writer in chunks of 10
        return stepBuilderFactory.get("itemWriterDbDemoStep")
                .<Customer, Customer>chunk(10)
                .reader(flatFileItemReader)
                .writer(itemWriterDb)
                .build();
    }
}
```
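`chunk(10)` makes the step chunk-oriented. Items are read one at a time. Once 10 have been collected, or the reader returns `null` because the input is exhausted, the whole list is passed to the writer in a single call within one transaction. With the three records in customers.txt, this gives a single chunk and a single `write` call.

The loop below is a simplified, hypothetical plain-Java sketch of that control flow (`ChunkLoopSketch` and `run` are illustrative names). It leaves out transactions, the optional processor, retry/skip handling and restart bookkeeping.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Supplier;

// Hypothetical sketch of the read/write loop behind chunk(chunkSize); not Spring Batch code.
class ChunkLoopSketch {

    // reader returns null when the input is exhausted, like ItemReader.read().
    // Returns the number of chunks handed to the writer.
    static <T> int run(Supplier<T> reader, Consumer<List<T>> writer, int chunkSize) {
        int chunks = 0;
        while (true) {
            List<T> chunk = new ArrayList<>();
            T item;
            // Check the size first so no item is read and then dropped when the chunk is full
            while (chunk.size() < chunkSize && (item = reader.get()) != null) {
                chunk.add(item);
            }
            if (chunk.isEmpty()) {
                return chunks; // nothing left to read: the step ends
            }
            writer.accept(chunk); // one write call per chunk
            chunks++;
        }
    }

    public static void main(String[] args) {
        List<Integer> data = new ArrayList<>();
        for (int i = 1; i <= 23; i++) {
            data.add(i);
        }
        Iterator<Integer> it = data.iterator();
        Supplier<Integer> reader = () -> it.hasNext() ? it.next() : null;

        int chunks = run(reader, chunk -> System.out.println("write " + chunk), 10);
        System.out.println(chunks + " chunks written");
    }
}
```

With 23 items and a chunk size of 10, the writer receives lists of 10, 10 and 3 items. A larger chunk size means fewer, bigger batch inserts, at the cost of more work to roll back if a chunk fails.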

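customers.txt (loaded from the classpath root by `ClassPathResource("customers.txt")`, typically `src/main/resources`)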

```text
1,john,Barrett,1994-10-19 14:11:03
2,Mary,Barrett2,1999-10-19 14:11:03
3,lisa,Barrett3,1995-10-19 14:11:03
```

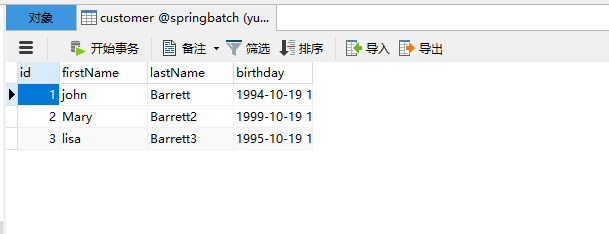

Run result: