The operating system has two primary purposes: (1) to protect the hardware from misuse by runaway applications, and (2) to provide applications with simple and uniform mechanisms for manipulating complicated and often wildly different low-level hardware devices.

Files are abstractions for I/O devices, virtual memory is an abstraction for both the main memory and disk I/O devices, and processes are abstractions for the processor, main memory, and I/O devices.

1.7.1 Processes

A process is the operating system's abstraction for a running program. Multiple processes can run concurrently on the same system, and each process appears to have exclusive use of the hardware. By concurrently, we mean that the instructions of one process are interleaved with the instructions of another process. The operating system performs this interleaving with a mechanism known as context switching.

The operating system keeps track of all the state information that the process needs in order to run. This state, which is known as the context, includes information such as the current values of the PC, the register file, and the contents of main memory. When the operating system decides to transfer control from one process to another, it performs a context switch by saving the context of the current process, restoring the context of the new process, and then passing control to the new process.

1.7.2 Threads

A process can actually consist of multiple execution units, called threads, each running in the context of the process and sharing the same code and global data.

1.7.3 Virtual memory

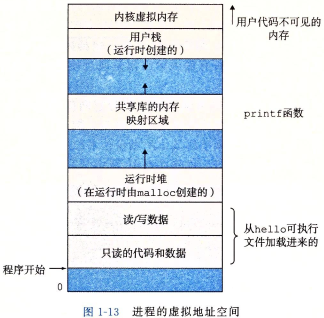

Virtual memory is an abstraction that provides each process with the illusion that it has exclusive use of the main memory. Each process has the same uniform view of memory, which is known as its virtual address space. In Linux, the topmost region of the address space is reserved for code and data in the operating system that is common to all processes. The lower region of the address space holds the code and data defined by the user's process.

Note that addresses in the figure increase from the bottom to the top.

Program code and data: Code begins at the same fixed address for all processes, followed by data locations that correspond to global C variables. The code and data areas are initialized directly from the contents of the executable object file. This means that once the process begins running, the code and data areas are fixed in size.

————————

global C variables

Code (starts at the same fixed address: 0x00400000 on 64-bit systems, 0x08048000 on 32-bit systems)

————————

Heap: The code and data areas are followed immediately by the run-time heap. The heap expands and contracts dynamically at run time as a result of calls to C standard library routines such as malloc and free.

Shared libraries: An area that holds the code and data for shared libraries such as the C standard library and the math library (see Chapter 7).

Stack: At the top of the user's virtual address space is the user stack that the compiler uses to implement function calls. The user stack expands and contracts dynamically during the execution of the program. In particular, each time we call a function, the stack grows. Each time we return from a function, it contracts.

Kernel virtual memory: The top region of the address space is reserved for the kernel. Application programs are not allowed to read or write the contents of this area or to directly call functions defined in the kernel code.

For virtual memory as a whole, the basic idea is to store the contents of a process's virtual memory on disk, and then use the main memory as a cache for the disk.

1.7.4 Files

A file is a sequence of bytes, nothing more and nothing less. Every I/O device, including disks, keyboards, displays, and even networks, is modeled as a file. All input and output in the system is performed by reading and writing files, using a small set of system calls known as Unix I/O.

1.8 Systems communicate with other systems using networks

From the point of view of an individual system, the network can be viewed as just another I/O device. When the system copies a sequence of bytes from main memory to the network adapter, the data flows across the network to another machine instead of, say, to a local disk drive. Similarly, the system can read data sent from other machines and copy this data to its main memory.

1.9 Important Themes

1.9.1 Amdahl's Law

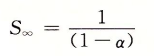

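As k → ∞, the speedup approaches 1/(1 - α), where α is the fraction of the original running time taken by the part of the system being sped up.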

1.9.2 Concurrency and Parallelism

We use the term concurrency to refer to the general concept of a system with multiple, simultaneous activities, and the term parallelism to refer to the use of concurrency to make a system run faster.

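Note: The difference between concurrency and parallelism shows up at the microscopic level, that is, at a single instant. Concurrency means that multiple programs run within the same period of time; parallelism means that multiple programs run at the same instant. For example, one person who cooks the rice first and then stir-fries a dish, finishing the meal within the allotted time (1 minute), is cooking concurrently. Two people, one cooking the rice while the other stir-fries, who also finish within 1 minute, are cooking in parallel. "The same instant" is an abstract concept, much like a point mass, and is hard to describe concretely. So, to achieve the effect of concurrency, either one person can work very quickly and handle several tasks (simulated concurrency), or several people can each work more slowly (concurrency implemented through parallelism).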

Thread-Level Concurrency

Traditionally, this concurrent execution (time-sharing systems appeared in the early 1960s) was only simulated, by having a single computer rapidly switch among its executing processes. This configuration is known as a uniprocessor system.

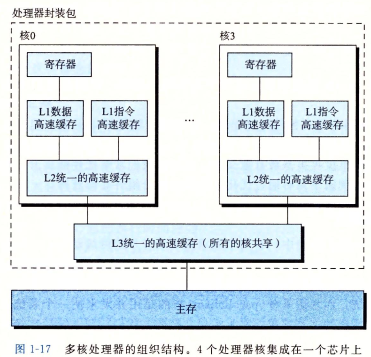

When we construct a system consisting of multiple processors all under the control of a single operating system kernel, we have a multiprocessor system. Such systems have become commonplace with the advent of multi-core processors and hyperthreading.

Multi-core processors have several CPUs integrated onto a single integrated-circuit chip.

Hyperthreading, sometimes called simultaneous multi-threading, is a technique that allows a single CPU to execute multiple flows of control. It involves having multiple copies of some of the CPU hardware, such as program counters and register files, while having only single copies of other parts of the hardware, such as the units that perform floating-point arithmetic. Whereas a conventional processor requires around 20,000 clock cycles to shift between different threads, a hyperthreaded processor decides which of its threads to execute on a cycle-by-cycle basis. The Intel Core i7 processor can have each core executing two threads, and so a four-core system can actually execute eight threads in parallel.

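Note on hyperthreading: From the 1960s until around 2000, mainstream systems were single-core (uniprocessor) systems. In 2002 the Pentium 4 introduced HT, that is, two threads on a single core. (So we have more than 50 years of experience with simulated concurrency.)

HT (hyper-threading) lets applications use different parts of the chip at the same time. Although a single-threaded chip can process a very large number of instructions per second, at any given moment it can work on only one thread. Hyperthreading lets the chip process multiple threads simultaneously, which improves its performance. However, HT only presents one physical core as two virtual cores in order to raise CPU utilization. It can execute two threads at once, but unlike two real CPUs, the two threads do not each have their own independent resources, so the performance of a hyperthreaded core does not equal that of two CPUs.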

Instruction-Level Parallelism

At a much lower level of abstraction, modern processors can execute multiple instructions at one time, a property known as instruction-level parallelism. For example, early microprocessors, such as the 1978-vintage Intel 8086, required multiple (typically 3 to 10) clock cycles to execute a single instruction. More recent processors can sustain execution rates of 2 to 4 instructions per clock cycle. Any given instruction requires much longer from start to finish, perhaps 20 cycles or more, but the processor uses a number of clever tricks (such as pipelining) to process as many as 100 instructions at a time.

Processors that can sustain execution rates faster than one instruction per cycle are known as superscalar processors.

Single-Instruction, Multiple-Data (SIMD) Parallelism

At the lowest level, many modern processors have special hardware that allows a single instruction to cause multiple operations to be performed in parallel, a mode known as single-instruction, multiple-data, or "SIMD" parallelism. For example, recent generations of Intel and AMD processors have instructions that can add four pairs of single-precision floating-point numbers (C data type float) in parallel.

1.9.3 The Importance of Abstraction in Computer Systems