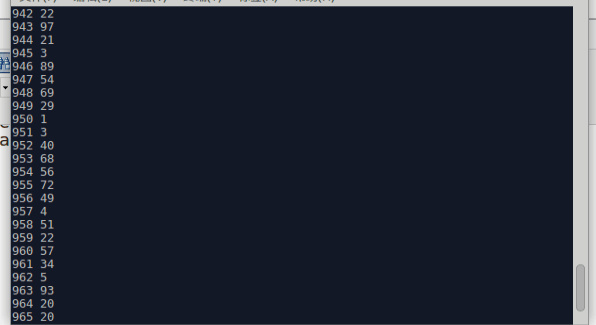

Create the experimental data:

from pyspark import SparkContext

import random

OutputFile = "file:///usr/local/spark/mycode/exercise/people"

sc = SparkContext('local','createPeopleAgeData')

peopleAge = []

for i in range(1, 1001):

    rand = random.randint(1, 100)

    peopleAge.append(str(i) + " " + str(rand))

RDD = sc.parallelize(peopleAge)

RDD.saveAsTextFile(OutputFile)
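To check the record format before involving Spark, the same generation logic can be run in plain Python. This is a minimal sketch: the helper `make_people_age_lines` and its `seed` parameter are hypothetical names introduced here for illustration and are not part of the original script.

```python
import random


def make_people_age_lines(n=1000, seed=None):
    """Return n lines of the form "<id> <age>", with ages drawn from 1..100.

    Hypothetical helper: mirrors the loop in the script above, but uses a
    private random.Random instance so a seed gives repeatable output.
    """
    rng = random.Random(seed)
    return [str(i) + " " + str(rng.randint(1, 100)) for i in range(1, n + 1)]


# Print a few sample records to inspect the format.
for line in make_people_age_lines(5, seed=0):
    print(line)
```

Each line holds a sequential ID and a random age separated by a single space, which is the layout the averaging script expects when it splits on `" "`.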

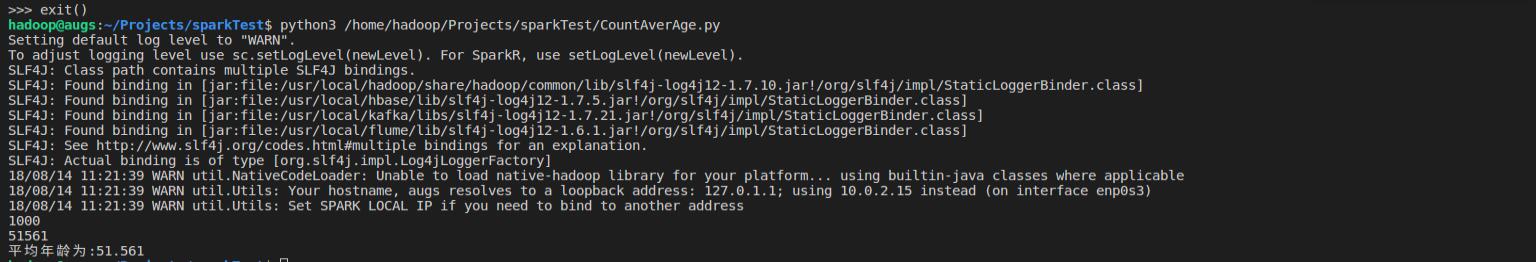

from pyspark import SparkContext

# Configure the SparkContext

sc = SparkContext('local','CountAverAge')

# Create an RDD by reading the data

# saveAsTextFile wrote a directory of part files at this path; textFile reads all of them

RDD = sc.textFile("file:///usr/local/spark/mycode/exercise/people")

# Get the total number of records

Count = RDD.count()

# Split each line, keep only the age field, convert it to int, then sum the ages with reduce

TotalAge = RDD.map(lambda line: int(line.split(" ")[1])).reduce(lambda a, b: a + b)

print(Count)

print(TotalAge)

print("Average age: {0}".format(TotalAge / Count))
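The map-then-reduce computation can also be verified on a handful of records without a Spark installation. The following is a minimal sketch in plain Python, assuming every line has the form `"<id> <age>"`; the function `average_age` is a hypothetical name used only for this illustration.

```python
from functools import reduce


def average_age(lines):
    """Mirror the RDD pipeline on a plain list of "<id> <age>" strings."""
    # "map" step: keep only the age field and convert it to int
    ages = [int(line.split(" ")[1]) for line in lines]
    # "reduce" step: sum the ages pairwise, as the reduce lambda does
    total = reduce(lambda a, b: a + b, ages)
    # the record count plays the role of RDD.count()
    return total / len(ages)


sample = ["1 20", "2 30", "3 40"]
print(average_age(sample))  # 30.0
```

Note that the reduce step yields the sum of all ages, so the average only appears after dividing by the record count.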