最近偶然打开一个英文网站,仔细一看原来是中国日报的英文版本,本着培养语感的想法多看看英语新闻,奈何水平渣渣,机智如我想到了爬取文章高频词汇,废话少说,看下文:

爬取中国日报全网所有文章链接

1.用bs4获取所有含有href属性的a标签

import requests from bs4 import BeautifulSoup url = 'http://www.chinadaily.com.cn/' wbdata = requests.get(url).text soup = BeautifulSoup(wbdata,'lxml') #创建一个beautifulsoup对象 print soup linklist = [] for i in soup.find_all('a'): link = i['href'] linklist.append(link) set(linklist) #去重 print linklist

输出:

['http://www.chinadaily.com.cn', 'http://usa.chinadaily.com.cn', 'http://europe.chinadaily.com.cn', 'http://africa.chinadaily.com.cn', 'http://www.chinadaily.com.cn/business/2017-09/18/content_32146768.htm', 'http://www.chinadaily.com.cn/world/2017-09/18/content_32146327.htm', 'http://www.chinadaily.com.cn/world/2017-09/18/content_32146327.htm', 'javascript:void(0)', 'javascript:void(0)', 'javascript:void(0)', 'javascript:void(0)', 'china/2017-09/18/content_32158742.htm', 'business/2017-09/18/content_32157059.htm', 'china/2017-09/18/content_32157984.htm', 'business/2017cnruimf/2017-09/18/content_32157756.htm'...]

2.正则提取符合要求的链接

linklist = ['china/2017-09/18/content_32143681.htm','http://www.chinadaily.com.cn/culture/2017-09/18/content_32158002.htm','javascript:void(0)'] s = ",".join(linklist) print s links = re.findall('.*?/2017-09/18/content_d+.htm', s) print links

输出:

china/2017-09/18/content_32143681.htm,http://www.chinadaily.com.cn/culture/2017-09/18/content_32158002.htm,javascript:void(0) ['china/2017-09/18/content_32143681.htm', ',http://www.chinadaily.com.cn/culture/2017-09/18/content_32158002.htm']

3.完整源码如下:

import requests from bs4 import BeautifulSoup import re url = 'http://www.chinadaily.com.cn/' wbdata = requests.get(url).text soup = BeautifulSoup(wbdata,'lxml') #创建一个beautifulsoup对象 #print soup linklist = [] for i in soup.find_all('a'): link = i['href'] #print link linklist.append(link) #set(linklist) #去重 #print linklist print len(linklist) newlist = [] for i in linklist: link = re.findall('.*?/content_d+.htm', i) if link != []: newlist.append(link[0]) print len(newlist) for i in range(0,len(newlist)): if newlist[i].find('http://www.chinadaily.com.cn/') == -1: newlist[i] = 'http://www.chinadaily.com.cn/' + newlist[i] print newlist

输出:

获取链接文章内容

for url in newlist: print url wbdata = requests.get(url).content soup = BeautifulSoup(wbdata,'lxml') # 替换换行字符 text = str(soup).replace(' ','').replace(' ','') # 替换<script>标签 text = re.sub(r'<script.*?>.*?</script>',' ',text) # 替换HTML标签 text = re.sub(r'<.*?>'," ",text) text = re.sub(r'[^a-zA-Z]',' ',text) # 转换为小写 text = text.lower() text = text.split() text = [i for i in text if len(i) > 1 and i != 'chinadaily' and i != 'com' and i != 'cn'] text = ' '.join(text) print text with open(r"C:UsersHPDesktopcodesDATAwords.txt",'a+') as file: file.write(text+' ') print("写入成功")

输出:

高频词汇分析

基本语法说明:参考

from nltk.corpus import PlaintextCorpusReader

corpus_root = ''

wordlists = PlaintextCorpusReader(corpus_root, 'datafile.txt') #载入文件作为语料库

wordlists.words() #整个语料库中的词汇

from nltk.corpus import stopwords

stop = stopwords.words('english') #获得英语中的停用词

from nltk.probability import *

fdist = nltk.FreqDist(swords) #创建一个条件频率分布

源码如下:

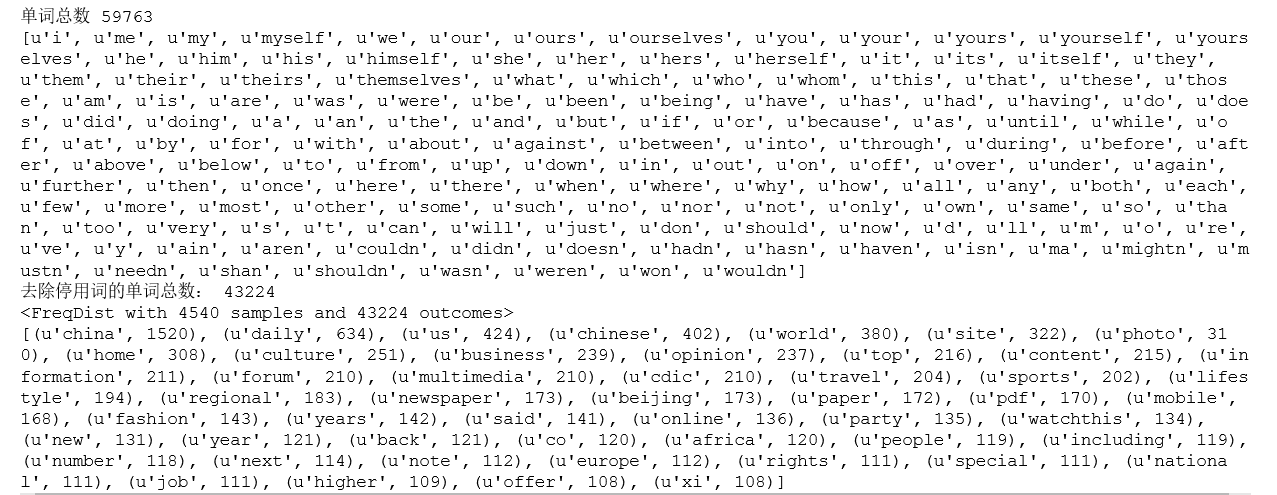

from nltk.corpus import stopwords from nltk.probability import * from nltk.corpus import PlaintextCorpusReader import nltk corpus_root = 'C:/Users/HP/Desktop/codes/DATA' wordlists = PlaintextCorpusReader(corpus_root, '.*') #载入文件作为语料库 text = nltk.Text(wordlists.words('words.txt')) #获取整个语料库中的词汇 #text = 'gold and silver mooncakes look more alluring than real ones home business biz photos gold and silver mooncakes look more alluring than real ones by tan xinyu tan xinyu gold and silver mooncakes look more alluring than real ones mid autumn festival mooncake jewelry biz photos webnews enpproperty the jade mooncake left looks similar to the real one right in guangzhou south china guangdong province sept photo ic as the mid autumn festival is around the corner the traditional chinese delicacy mooncake has already hit shops and stores across the country and mooncake makers also are doing their best to attract customers with various flavors and stuffing the family reunion festival falls on oct this year or the th day of the th chinese lunar month and eating mooncakes with families on the day is one of important ways for chinese people to celebrate it sadly these mooncakes displayed in jewelry outlet cannot be eaten as they are made of gold silver or jade although they look more real than real previous next previous next photo on the move kazak herdsmen head to winter pastures salt lake in shanxi looks like double flavor hot pot special subway train for wuhan open ready to serve world top financial centers rostov on don makes preparations for world cup new chinese embassy sign of stronger china panama ties back to the top home china world business lifestyle culture travel sports opinion regional forum newspaper china daily pdf china daily paper mobile copyright all rights reserved the content including but not limited to text photo multimedia information etc published in this site belongs to china daily information co cdic without written authorization from cdic such content shall not be republished or used in any form note browsers with or higher resolution are suggested for this site license for publishing multimedia online registration number about china daily advertise on site contact us job offer expat employment follow us' print u"单词总数",len(text) stop = stopwords.words('english') #获得英语中的停用词 print stop swords = [i for i in text if i not in stop] print u"去除停用词的单词总数:",len(swords) fdist = FreqDist(swords) print fdist #print fdist.items() #元组列表 #print fdist.most_common(10) newd = sorted(fdist.items(), key=lambda fdist: fdist[1], reverse=True) #设置以字典值排序,使频率从高到低显示 print newd[:50]

注:type(text)为<class 'nltk.text.Text'>,故text若为字符串得出的是字母的频率

输出:

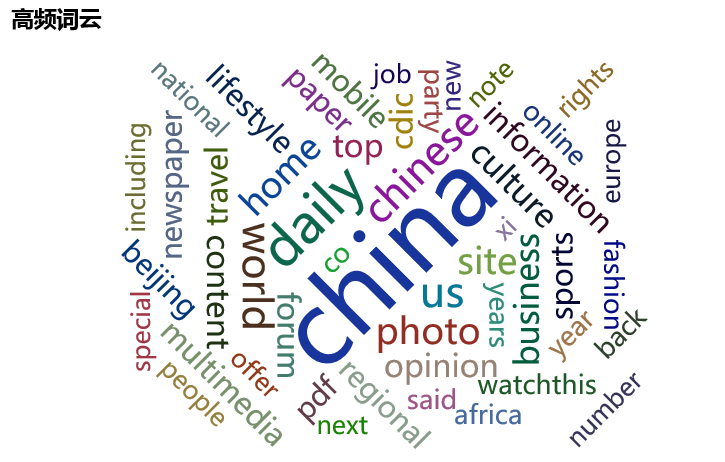

高频词云

from pyecharts import WordCloud data = dict(newd[:50]) wordcloud = WordCloud('高频词云',width = 600,height = 400) wordcloud.add('ryana',data.keys(),data.values(),word_size_range = [20,80]) wordcloud

输出:

中国日报文章单词出现频率最高的是'china'和'daily',实在无语,看来仅过滤一个'chinadaily'不管用啊啊啊