tensorflow:Your input ran out of data; interrupting training. Make sure that your dataset or generator can generate at least steps_per_epoch * epochs batches

I. Summary

One-sentence summary:

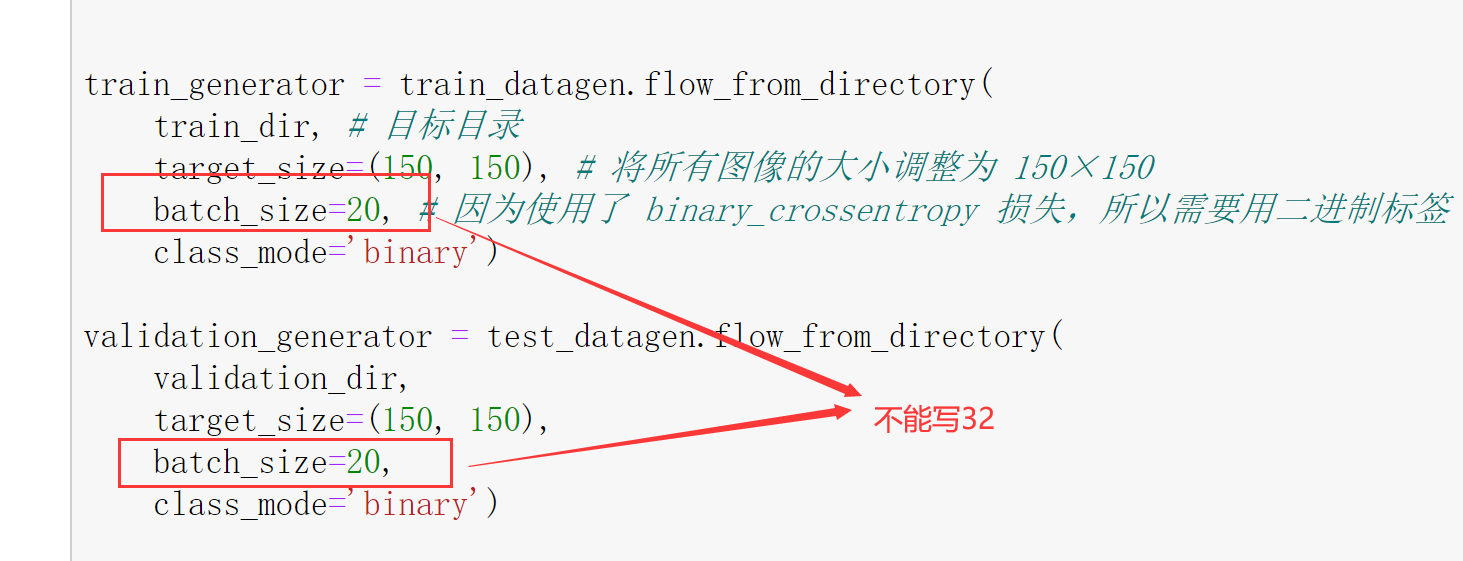

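Make sure that batch_size (set in the image-augmentation generator) × steps_per_epoch (set in fit) is less than or equal to the number of training samples.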
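The same rule as an inequality. Here N is the number of training images, which is 2000 in the cat/dog cases below:

$$
\begin{aligned}
\texttt{batch\_size} \times \texttt{steps\_per\_epoch} &\le N \\
20 \times 100 = 2000 &\le 2000 \quad \text{(OK)} \\
32 \times 100 = 3200 &> 2000 \quad \text{(runs out of data)}
\end{aligned}
$$

There is a slightly looser bound. It assumes that what fit() consumes per epoch is the iterator's len(), which for a flow_from_directory iterator counts the final partial batch:

$$
\texttt{steps\_per\_epoch} \le \left\lceil \frac{N}{\texttt{batch\_size}} \right\rceil, \qquad \left\lceil \frac{2000}{32} \right\rceil = 63
$$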

train_generator = train_datagen.flow_from_directory(
    train_dir,  # target directory
    target_size=(150, 150),  # resize all images to 150×150
    batch_size=20,
    class_mode='binary')  # binary labels, because the loss is binary_crossentropy

history = model.fit(
    train_generator,
    steps_per_epoch=100,
    epochs=150,
    validation_data=validation_generator,
    validation_steps=50)

# case 1
# If train_generator above uses batch_size=32 and steps_per_epoch=100 here, training fails with:
"""
tensorflow:Your input ran out of data; interrupting training.
Make sure that your dataset or generator can generate at least `steps_per_epoch * epochs` batches (in this case, 50 batches).
You may need to use the repeat() function when building your dataset.
"""
# because the training set has 2000 images (1000 cats, 1000 dogs), fewer than 100 * 32 = 3200

# case 2
# If train_generator uses batch_size=20 and steps_per_epoch=100 here, there is no error,
# because 20 * 100 = 2000 matches the training set exactly

# case 3
# If train_generator uses batch_size=32 and steps_per_epoch=int(1000/32) here,
# there is no error, but a warning is still printed, since the sizes again do not divide evenly.
# There is no error because int(1000/32) * 32 = 31 * 32 = 992 < 2000

# case 4
# If train_generator uses batch_size=40 and steps_per_epoch=100 here, it fails just the same,
# because 40 * 100 = 4000 > 2000
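Each of the four cases reduces to one multiplication. The sketch below is plain Python and needs no TensorFlow. `fits_in_one_pass` and `max_steps_per_epoch` are hypothetical helpers, not Keras functions. `max_steps_per_epoch` assumes that the generator also yields the final partial batch.

```python
NUM_TRAIN = 2000  # 1000 cats + 1000 dogs, as in the cases above


def fits_in_one_pass(num_samples: int, batch_size: int, steps_per_epoch: int) -> bool:
    """The summary's rule: batch_size * steps_per_epoch must not exceed num_samples."""
    return batch_size * steps_per_epoch <= num_samples


def max_steps_per_epoch(num_samples: int, batch_size: int) -> int:
    """Batches in one pass, counting a final partial batch (ceiling division)."""
    return (num_samples + batch_size - 1) // batch_size


# (batch_size, steps_per_epoch) for each case
cases = {
    "case 1": (32, 100),
    "case 2": (20, 100),
    "case 3": (32, int(1000 / 32)),  # int(1000 / 32) == 31
    "case 4": (40, 100),
}

for name, (batch_size, steps) in cases.items():
    verdict = "OK" if fits_in_one_pass(NUM_TRAIN, batch_size, steps) else "runs out"
    print(f"{name}: {batch_size} * {steps} = {batch_size * steps} -> {verdict}")

print("max steps_per_epoch at batch_size=32:", max_steps_per_epoch(NUM_TRAIN, 32))
```

The script prints "runs out" for cases 1 and 4 and "OK" for cases 2 and 3, which matches the observations above. The last line prints 63.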

II. tensorflow:Your input ran out of data; interrupting training. Make sure that your dataset or generator can generate at least steps_per_epoch * epochs batches

Reposted from / adapted from: https://stackoverflow.com/questions/60509425/how-to-use-repeat-function-when-building-data-in-keras

1. Error

WARNING:tensorflow:Your input ran out of data; interrupting training. Make sure that your dataset or generator can generate at least steps_per_epoch * epochs batches (in this case, 5000 batches). You may need to use the repeat() function when building your dataset.

2. Symptom

I am training a binary classifier on a dataset of cats and dogs:

Total Dataset: 10000 images

Training Dataset: 8000 images

Validation/Test Dataset: 2000 images

The Jupyter notebook code:

# Part 2 - Fitting the CNN to the images
train_datagen = ImageDataGenerator(rescale = 1./255,
                                   shear_range = 0.2,
                                   zoom_range = 0.2,
                                   horizontal_flip = True)

test_datagen = ImageDataGenerator(rescale = 1./255)

training_set = train_datagen.flow_from_directory('dataset/training_set',
                                                 target_size = (64, 64),
                                                 batch_size = 32,
                                                 class_mode = 'binary')

test_set = test_datagen.flow_from_directory('dataset/test_set',
                                            target_size = (64, 64),
                                            batch_size = 32,
                                            class_mode = 'binary')

history = model.fit_generator(training_set,
                              steps_per_epoch=8000,
                              epochs=25,
                              validation_data=test_set,
                              validation_steps=2000)

I trained it on a CPU without a problem but when I run on GPU it throws me this error:

Found 8000 images belonging to 2 classes.

Found 2000 images belonging to 2 classes.

WARNING:tensorflow:From <ipython-input-8-140743827a71>:23: Model.fit_generator (from tensorflow.python.keras.engine.training) is deprecated and will be removed in a future version.

Instructions for updating:

Please use Model.fit, which supports generators.

WARNING:tensorflow:sample_weight modes were coerced from

...

to

['...']

WARNING:tensorflow:sample_weight modes were coerced from

...

to

['...']

Train for 8000 steps, validate for 2000 steps

Epoch 1/25

250/8000 [..............................] - ETA: 21:50 - loss: 7.6246 - accuracy: 0.5000

WARNING:tensorflow:Your input ran out of data; interrupting training. Make sure that your dataset or generator can generate at least `steps_per_epoch * epochs` batches (in this case, 200000 batches). You may need to use the repeat() function when building your dataset.

250/8000 [..............................] - ETA: 21:52 - loss: 7.6246 - accuracy: 0.5000

I would like to know how to use the repeat() function in Keras with TensorFlow 2.0.

3. Solution

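Your problem stems from the fact that the parameters steps_per_epoch and validation_steps need to be equal to the total number of data points divided by the batch_size. With steps_per_epoch=8000 and batch_size=32, each epoch asks for 8000 batches, but 8000 images only make 8000 / 32 = 250 batches. That is why training stops at 250/8000.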

Your code would work in Keras 1.X, prior to August 2017.

Change your model.fit_generator call to:

batch_size = 32  # must match the batch_size passed to flow_from_directory

history = model.fit_generator(training_set,
                              steps_per_epoch=int(8000/batch_size),
                              epochs=25,
                              validation_data=test_set,
                              validation_steps=int(2000/batch_size))

As of TensorFlow 2.1, fit_generator is deprecated; the .fit() method also accepts generators.

TensorFlow >= 2.1 code:

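batch_size = 32  # must match the batch_size passed to flow_from_directory

# training_set and test_set are DirectoryIterator objects returned by flow_from_directory.
# They have no .repeat() method (that is a tf.data.Dataset method), so pass them as-is.
history = model.fit(training_set,
                    steps_per_epoch=int(8000/batch_size),
                    epochs=25,
                    validation_data=test_set,
                    validation_steps=int(2000/batch_size))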

Notice that int(8000/batch_size) is equivalent to 8000 // batch_size (integer division)
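The log's behaviour, with training stopping at 250/8000, can be modelled in plain Python. This is a sketch, not Keras internals. The following names are all hypothetical:

- `finite_batches` stands in for a source that makes a single pass over the images.
- `repeated` plays the role that `tf.data.Dataset.repeat()` plays for a dataset.
- `train` is a toy loop that draws `steps_per_epoch` batches per epoch.

```python
from typing import Callable, Iterable, Iterator


def finite_batches(num_samples: int, batch_size: int) -> Iterator[int]:
    """One pass over num_samples items; yields the size of each batch."""
    for start in range(0, num_samples, batch_size):
        yield min(batch_size, num_samples - start)


def repeated(make_pass: Callable[[], Iterable[int]]) -> Iterator[int]:
    """Start a new pass whenever the previous one ends (the idea behind repeat())."""
    while True:
        yield from make_pass()


def train(batches: Iterator[int], steps_per_epoch: int, epochs: int) -> int:
    """Draw steps_per_epoch batches per epoch; return the number of steps actually run."""
    done = 0
    for _ in range(epochs):
        for _ in range(steps_per_epoch):
            if next(batches, None) is None:
                return done  # the source is empty: "Your input ran out of data"
            done += 1
    return done


# The question: 8000 images, batch_size=32, steps_per_epoch=8000 -> stops after 250 steps.
print(train(finite_batches(8000, 32), steps_per_epoch=8000, epochs=25))  # 250
# Correct steps_per_epoch, but a single-pass source still runs out at the start of epoch 2.
print(train(finite_batches(8000, 32), steps_per_epoch=8000 // 32, epochs=25))  # 250
# Correct steps_per_epoch and a repeated source: all 25 * 250 steps run.
print(train(repeated(lambda: finite_batches(8000, 32)), steps_per_epoch=8000 // 32, epochs=25))  # 6250
```

Case 2 in section I completes 150 epochs with batch_size=20 and steps_per_epoch=100. Going by that, a flow_from_directory iterator passed to fit() appears to behave like the repeated source here: each epoch gets a fresh pass, so only steps_per_epoch needs fixing.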

============================================================================

In other words, in steps_per_epoch=int(8000/batch_size), 8000 is the number of training samples.

4. Example

The training set has 2000 images (1000 cats, 1000 dogs):

train_datagen = ImageDataGenerator(
    rescale=1./255,
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True)

# Note: the validation data must not be augmented
test_datagen = ImageDataGenerator(rescale=1./255)

# batch_size cannot be 32 here, otherwise the following error occurs:
'''
WARNING:tensorflow:Your input ran out of data; interrupting training. Make sure that your dataset or generator can generate at least steps_per_epoch * epochs batches (in this case, 5000 batches). You may need to use the repeat() function when building your dataset.
'''
# (32 * 100 = 3200 images per epoch would be needed, but the training set has only 2000)
train_generator = train_datagen.flow_from_directory(
    train_dir,  # target directory
    target_size=(150, 150),  # resize all images to 150×150
    batch_size=20,
    class_mode='binary')  # binary labels, because the loss is binary_crossentropy

validation_generator = test_datagen.flow_from_directory(
    validation_dir,
    target_size=(150, 150),
    batch_size=20,
    class_mode='binary')

history = model.fit(
    train_generator,
    steps_per_epoch=100,
    epochs=150,
    validation_data=validation_generator,
    validation_steps=50)

If batch_size above were 32, steps_per_epoch=100 here would raise the error.

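What the steps_per_epoch parameter does: once steps_per_epoch batches have been drawn from the generator (that is, after steps_per_epoch gradient-descent steps), fitting moves on to the next epoch.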
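The epoch boundary can be modelled in plain Python. This sketch assumes that the generator cycles through the samples forever in a fixed order. The real flow_from_directory shuffles by default (shuffle=True), so this is only an illustration. `endless_batches` and `run_epoch` are hypothetical names.

```python
from collections import Counter
from itertools import islice
from typing import Iterator, List


def endless_batches(num_samples: int, batch_size: int) -> Iterator[List[int]]:
    """Yield batches of sample indices forever, in order; the last batch of a pass may be short."""
    while True:
        for start in range(0, num_samples, batch_size):
            yield list(range(start, min(start + batch_size, num_samples)))


def run_epoch(gen: Iterator[List[int]], steps_per_epoch: int) -> Counter:
    """Draw steps_per_epoch batches and count how often each sample index was seen."""
    seen: Counter = Counter()
    for batch in islice(gen, steps_per_epoch):
        seen.update(batch)  # a real fit() would run one gradient-descent step here
    return seen


# batch_size=20, steps_per_epoch=100: one epoch sees each of the 2000 images exactly once.
epoch = run_epoch(endless_batches(2000, 20), steps_per_epoch=100)
print(len(epoch), set(epoch.values()))  # 2000 {1}

# batch_size=32, steps_per_epoch=2000 // 32 = 62: 1984 images seen; the remaining 16 would start the next epoch.
print(len(run_epoch(endless_batches(2000, 32), steps_per_epoch=2000 // 32)))  # 1984
```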
