- Create a new project and spider

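```shell
scrapy startproject Scrapy_crawl  # create a new Scrapy project
cd Scrapy_crawl  # run genspider inside the project so the spider lands in the spiders package

scrapy genspider -l  # list all available templates
# Available templates:
#   basic
#   crawl
#   csvfeed
#   xmlfeed

scrapy genspider -t crawl china tech.china.com  # create a spider from the crawl template
scrapy crawl china  # run the spider
```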

- The CrawlSpider generated from the template looks like this:

```python
# china.py
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule


class ChinaSpider(CrawlSpider):
    name = 'china'
    allowed_domains = ['tech.china.com']
    start_urls = ['http://tech.china.com/']
    # The first argument of Rule is a LinkExtractor, i.e. the LxmlLinkExtractor
    # discussed earlier under a shorter name. The callback is parse_item rather
    # than parse, because CrawlSpider uses parse internally to apply the rules.
    rules = (
        Rule(LinkExtractor(allow=r'Items/'), callback='parse_item', follow=True),
    )

    def parse_item(self, response):
        item = {}
        #item['domain_id'] = response.xpath('//input[@id="sid"]/@value').get()
        #item['name'] = response.xpath('//div[@id="name"]').get()
        #item['description'] = response.xpath('//div[@id="description"]').get()
        return item
```

- Change start_urls to the first page to be crawled:

```python
start_urls = ['http://tech.china.com/articles/']
```

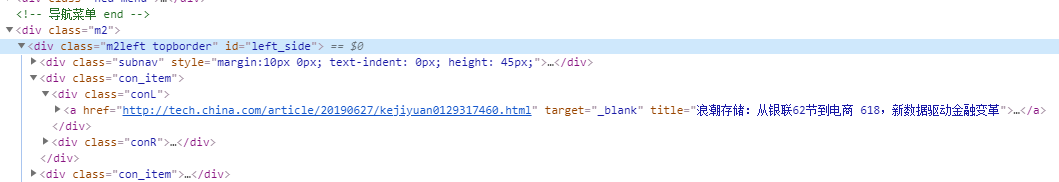

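- Parse the news links: open the page and press F12 to inspect the source in the developer tools. All news links sit inside the node with ID left_side, specifically inside each node with class con_item, and every news path starts with article.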

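```python
# allow: regex deciding whether a link is a news link
# restrict_xpaths: XPath limiting link extraction to the news list area of the page
# callback: the method that parses each matched page
Rule(LinkExtractor(allow=r'article/.*\.html', restrict_xpaths='//div[@id="left_side"]//div[@class="con_item"]'), callback='parse_item')
```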
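
LinkExtractor keeps a URL when the allow pattern can be found somewhere in it, which roughly corresponds to a regular-expression search. The sketch below mimics only that filtering step with the standard re module; filter_news_links and the sample URLs are hypothetical, and the restriction to the regions selected by restrict_xpaths is not modelled.

```python
import re

# Same pattern as the Rule above, with the dot before "html" escaped
NEWS_PATTERN = re.compile(r'article/.*\.html')


def filter_news_links(urls):
    """Keep only URLs in which the pattern can be found (re.search semantics)."""
    return [url for url in urls if NEWS_PATTERN.search(url)]


# Hypothetical URLs, for illustration only
candidates = [
    'http://tech.china.com/article/20171107/20171107123456.html',  # news page
    'http://tech.china.com/articles/index_2.html',                 # list page: "articles/", not "article/"
    'http://tech.china.com/article/about.htm',                     # wrong extension
]
print(filter_news_links(candidates))
```

Only the first URL survives, so list pages are left to the pagination rule.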

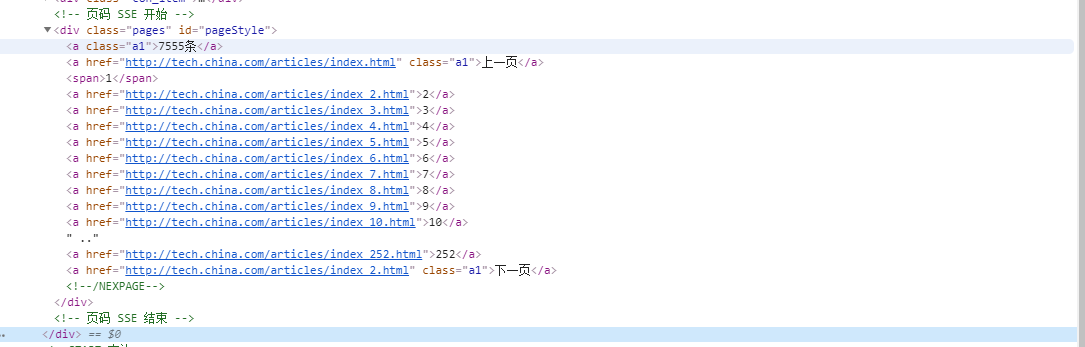

- Analyze the pagination links: they all sit in the div with ID pageStyle, and the rule keeps matching the link whose text is 下一页 ("next page").

```python
# Extract the "next page" link
Rule(LinkExtractor(restrict_xpaths='//div[@id="pageStyle"]//a[contains(., "下一页")]'))
```

- Rewrite the rules in china.py:

```python
rules = (
    Rule(LinkExtractor(allow=r'article/.*\.html', restrict_xpaths='//div[@id="left_side"]//div[@class="con_item"]'),
         callback='parse_item'),
    Rule(LinkExtractor(restrict_xpaths='//div[@id="pageStyle"]//a[contains(., "下一页")]')),
)
```
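
The two rules differ in whether the crawler keeps following links from the pages they match. The Scrapy documentation states that when callback is None, follow defaults to True, and otherwise to False; so the news rule stops at each article page while the pagination rule keeps following 下一页 links. The helper below, effective_follow, is a hypothetical illustration of that documented default, not a Scrapy function.

```python
def effective_follow(callback=None, follow=None):
    """Return the follow flag a Rule ends up with (documented default)."""
    # An explicit follow wins; otherwise follow only when there is no callback
    return follow if follow is not None else callback is None


print(effective_follow(callback='parse_item'))               # False: news rule
print(effective_follow())                                    # True: pagination rule
print(effective_follow(callback='parse_item', follow=True))  # True: explicit override
```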

- Rewrite items.py:

```python
from scrapy import Field, Item


class NewsItem(Item):
    """News item structure"""
    title = Field()     # title
    url = Field()       # URL
    text = Field()      # body text
    datetime = Field()  # publish time
    source = Field()    # source
    website = Field()   # site name
```

- Create loaders.py:

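```python
from scrapy.loader import ItemLoader
# Recent Scrapy versions ship the processors in the itemloaders package;
# older versions import them from scrapy.loader.processors instead.
from itemloaders.processors import TakeFirst, Join, Compose


class NewsLoader(ItemLoader):  # inherits from ItemLoader
    """TakeFirst returns the first non-null, non-empty value, similar to extract_first()."""
    default_output_processor = TakeFirst()


class ChinaLoader(NewsLoader):  # inherits from NewsLoader
    """The collected values are joined with Join(), then stripped with strip()."""
    text_out = Compose(Join(), lambda s: s.strip())
    source_out = Compose(Join(), lambda s: s.strip())
```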
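
To see what these output processors do with the list of values an add_xpath() call collects, here is a sketch using plain-Python stand-ins that mimic the documented behaviour of TakeFirst, Join and Compose. The functions take_first, join and compose are hypothetical and are not the library classes; the real Compose also stops early by default once a value becomes None, which the stand-in omits.

```python
from functools import reduce


def take_first(values):
    """Return the first value that is neither None nor an empty string."""
    for value in values:
        if value is not None and value != '':
            return value
    return None


def join(values, separator=' '):
    """Join all collected values with a separator (Join() defaults to a space)."""
    return separator.join(values)


def compose(*functions):
    """Chain functions; each one receives the previous one's result."""
    def composed(value):
        return reduce(lambda acc, func: func(acc), functions, value)
    return composed


# Roughly what ChinaLoader's text_out does with the collected text nodes
text_out = compose(join, str.strip)

print(take_first([None, '', 'First title', 'Second title']))  # First title
print(text_out(['  Paragraph one.', 'Paragraph two.  ']))     # Paragraph one. Paragraph two.
```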

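- Rewrite parse_item() in china.py. Two versions follow: the first fills the Item by hand, the second uses the ChinaLoader Item Loader, which is the style the generic spider builds on: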

```python
# At the top of china.py
from Scrapy_crawl.items import NewsItem
from Scrapy_crawl.loaders import ChinaLoader


# Version 1 (inside ChinaSpider): fill the Item by hand, without an Item Loader
def parse_item(self, response):
    item = NewsItem()
    item['title'] = response.xpath('//h1[@id="chan_newsTitle"]/text()').extract_first()
    item['url'] = response.url
    item['text'] = ''.join(response.xpath('//div[@id="chan_newsDetail"]//text()').extract()).strip()
    item['datetime'] = response.xpath('//div[@id="chan_newsInfo"]/text()').re_first(r'(\d+-\d+-\d+\s\d+:\d+:\d+)')
    item['source'] = response.xpath('//div[@id="chan_newsInfo"]/text()').re_first('来源:(.*)', default='').strip()
    item['website'] = '中华网'
    yield item


# Version 2 (inside ChinaSpider): use an Item Loader; keep only one of the two definitions
def parse_item(self, response):
    # Instantiate the ItemLoader with the Item and the Response object
    loader = ChinaLoader(item=NewsItem(), response=response)
    # Assign each field with add_xpath(), add_css(), add_value(), etc.,
    # then call load_item() to build the Item
    loader.add_xpath('title', '//h1[@id="chan_newsTitle"]/text()')
    loader.add_value('url', response.url)
    loader.add_xpath('text', '//div[@id="chan_newsDetail"]//text()')
    loader.add_xpath('datetime', '//div[@id="chan_newsInfo"]/text()', re=r'(\d+-\d+-\d+\s\d+:\d+:\d+)')
    loader.add_xpath('source', '//div[@id="chan_newsInfo"]/text()', re='来源:(.*)')
    loader.add_value('website', '中华网')
    yield loader.load_item()
```
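
The datetime pattern only works with its backslashes intact: without them, d and s are literal letters and nothing matches. This sketch runs both forms with the standard re module on a hypothetical line of chan_newsInfo text; first_group is a hypothetical helper that roughly mirrors re_first() for single-group patterns.

```python
import re

# Hypothetical text of the chan_newsInfo node, for illustration only
info_text = '2017-11-07 10:30:00  来源:人民网'

DATETIME_RE = r'(\d+-\d+-\d+\s\d+:\d+:\d+)'
BROKEN_DATETIME_RE = '(d+-d+-d+sd+:d+:d+)'  # backslashes lost: d and s are literal letters
SOURCE_RE = r'来源:(.*)'


def first_group(pattern, text):
    """Rough equivalent of re_first(): first captured group, or None."""
    match = re.search(pattern, text)
    return match.group(1) if match else None


print(first_group(DATETIME_RE, info_text))         # 2017-11-07 10:30:00
print(first_group(BROKEN_DATETIME_RE, info_text))  # None
print(first_group(SOURCE_RE, info_text))           # 人民网
```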

- The steps above only make the spider semi-generic.

- Next, extract the spider's configuration so that the spider becomes fully generic.

```shell
scrapy genspider -t crawl universal universal  # create the generic spider
python run.py china  # start the spider with the china config (run.py is created below)
```

- Create a configs folder alongside the spiders folder and add the config file china.json:

```json
{
  "spider": "universal",
  "website": "中华网科技",
  "type": "新闻",
  "index": "http://tech.china.com/",
  "settings": {
    "USER_AGENT": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/60.0.3112.90 Safari/537.36"
  },
  "start_urls": [
    "http://tech.china.com/articles/"
  ],
  "allowed_domains": [
    "tech.china.com"
  ],
  "rules": "china"
}
```

JSON does not allow comments, so the fields are described here: spider is the spider name, website the site name, type the site type (新闻, "news"), index the home page, settings holds per-site Scrapy settings such as USER_AGENT, and rules names the rule set defined in rules.py.
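
Because get_config() parses the file with Python's json module, any # comment in china.json makes loading fail. A quick check with the standard library; the two fragments are trimmed, hypothetical versions of the config and try_load is a hypothetical helper.

```python
import json

# A config fragment written with "#" comments
with_comments = '''{
    "spider": "universal",  # spider name
    "website": "中华网科技"
}'''

# The same fragment with the comments removed
without_comments = '''{
    "spider": "universal",
    "website": "中华网科技"
}'''


def try_load(text):
    """Return the parsed dict, or None if the text is not valid JSON."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return None


print(try_load(with_comments))     # None
print(try_load(without_comments))  # {'spider': 'universal', 'website': '中华网科技'}
```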

- Create rules.py: define all the rules together in one place, separating the Rules from the spider:

```python
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import Rule

rules = {
    'china': (
        Rule(LinkExtractor(allow=r'article/.*\.html', restrict_xpaths='//div[@id="left_side"]//div[@class="con_item"]'),
             callback='parse_item'),
        Rule(LinkExtractor(restrict_xpaths='//div[@id="pageStyle"]//a[contains(., "下一页")]')),
    ),
}
```

- Create utils.py to read the JSON config file: when the spider starts, it reads the config file and loads it into the Spider dynamically:

```python
import json
from os.path import dirname, join, realpath


def get_config(name):
    """Load configs/<name>.json, located next to this file."""
    path = join(dirname(realpath(__file__)), 'configs', name + '.json')
    with open(path, 'r', encoding='utf-8') as f:
        return json.load(f)
```

- Create the entry file run.py in the project root to start the spider:

```python
import sys

from scrapy.crawler import CrawlerProcess
from scrapy.utils.project import get_project_settings

from Scrapy_crawl.utils import get_config


def run():
    name = sys.argv[1]  # command-line argument: the config name, e.g. china
    custom_settings = get_config(name)  # load the JSON config
    spider = custom_settings.get('spider', 'universal')  # name of the spider to run
    project_settings = get_project_settings()  # load the project settings
    settings = dict(project_settings.copy())
    # Merge the per-site settings into the project-wide settings
    settings.update(custom_settings.get('settings', {}))
    process = CrawlerProcess(settings)  # create a CrawlerProcess with the merged settings
    process.crawl(spider, **{'name': name})  # start the spider, passing the config name
    process.start()


if __name__ == '__main__':
    run()
```
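
The merge in run.py is a plain dictionary overlay: per-site values replace project-wide values with the same key, and everything else is kept. A minimal sketch with plain dicts; merge_settings and the sample values are hypothetical. Using .get('settings', {}) keeps a config without a settings block from crashing, since dict.update(None) raises TypeError.

```python
def merge_settings(project_settings, site_config):
    """Overlay a site's "settings" block on a copy of the project settings.

    project_settings: plain dict standing in for get_project_settings()
    site_config: dict as returned by get_config(); "settings" may be absent
    """
    merged = dict(project_settings)                 # copy, original untouched
    merged.update(site_config.get('settings', {}))  # site values win on conflicts
    return merged


# Hypothetical values, for illustration only
project = {'USER_AGENT': 'Scrapy', 'ROBOTSTXT_OBEY': True}
site = {'spider': 'universal', 'settings': {'USER_AGENT': 'Mozilla/5.0'}}

print(merge_settings(project, site))                     # {'USER_AGENT': 'Mozilla/5.0', 'ROBOTSTXT_OBEY': True}
print(merge_settings(project, {'spider': 'universal'}))  # {'USER_AGENT': 'Scrapy', 'ROBOTSTXT_OBEY': True}
```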

- Make the parsing method parse_item() configurable by adding new configuration to china.json:

```json
"item": {
  "class": "NewsItem",
  "loader": "ChinaLoader",
  "attrs": {
    "title": [
      {
        "method": "xpath",
        "args": [
          "//h1[@id='chan_newsTitle']/text()"
        ]
      }
    ],
    "url": [
      {
        "method": "attr",
        "args": [
          "url"
        ]
      }
    ],
    "text": [
      {
        "method": "xpath",
        "args": [
          "//div[@id='chan_newsDetail']//text()"
        ]
      }
    ],
    "datetime": [
      {
        "method": "xpath",
        "args": [
          "//div[@id='chan_newsInfo']/text()"
        ],
        "re": "(\\d+-\\d+-\\d+\\s\\d+:\\d+:\\d+)"
      }
    ],
    "source": [
      {
        "method": "xpath",
        "args": [
          "//div[@id='chan_newsInfo']/text()"
        ],
        "re": "来源:(.*)"
      }
    ],
    "website": [
      {
        "method": "value",
        "args": [
          "中华网"
        ]
      }
    ]
  }
}
```

Field meanings (JSON has no comments): class is the Item class name and loader the Item Loader class name; attrs defines the extraction rules for each field. method selects the Item Loader call: xpath maps to add_xpath(), css to add_css(), value to add_value() with a literal value, and attr to add_value() with the named attribute of the Response (here response.url). args holds the positional arguments of that call, such as the XPath expression, and the optional re is a regular expression applied to the extracted values; its backslashes are doubled because JSON does not accept escapes such as \d.
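
JSON only accepts a fixed set of backslash escapes, so a regular expression stored in china.json needs each backslash doubled. The sketch below checks this with the standard json module; is_valid_json and the sample text are hypothetical.

```python
import json
import re

# "\d" and "\s" are not legal JSON escapes, so regexes inside JSON
# must double every backslash.
bad_fragment = r'{"re": "(\d+-\d+-\d+\s\d+:\d+:\d+)"}'
good_fragment = r'{"re": "(\\d+-\\d+-\\d+\\s\\d+:\\d+:\\d+)"}'


def is_valid_json(text):
    """Return True if json.loads() accepts the text."""
    try:
        json.loads(text)
    except json.JSONDecodeError:
        return False
    return True


pattern = json.loads(good_fragment)['re']

print(is_valid_json(bad_fragment))  # False
print(pattern)                      # (\d+-\d+-\d+\s\d+:\d+:\d+)
# Hypothetical sample text, for illustration only
print(re.search(pattern, 'Published 2017-11-07 10:30:00').group(1))  # 2017-11-07 10:30:00
```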

- Change the start_urls parameter in the config file china.json; it can take either of the two forms below:

Static type, listing the URLs directly:

```json
"start_urls": {
  "type": "static",
  "value": [
    "http://tech.china.com/articles/"
  ]
}
```

Dynamic type, generating the URLs by calling a method (here china() with the page range 5 to 10):

```json
"start_urls": {
  "type": "dynamic",
  "method": "china",
  "args": [
    5, 10
  ]
}
```

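- Create urls.py (a module of URL generator functions): when start_urls is of the dynamic type, the china() function is used, and only the page numbers need to be passed in: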

```python
def china(start, end):
    """Yield the article list URLs for pages start..end (inclusive)."""
    for page in range(start, end + 1):
        yield 'http://tech.china.com/articles/index_' + str(page) + '.html'
```
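
The spider has to turn either start_urls form into a concrete URL list. A minimal sketch of that step, assuming the config shapes shown above; resolve_start_urls is hypothetical, and a SimpleNamespace stands in for the urls module so the block runs on its own.

```python
from types import SimpleNamespace


def china(start, end):
    """Same generator as urls.py: one list-page URL per page number."""
    for page in range(start, end + 1):
        yield 'http://tech.china.com/articles/index_' + str(page) + '.html'


# Stand-in for the urls module (in the project: from Scrapy_crawl import urls)
urls = SimpleNamespace(china=china)


def resolve_start_urls(config, url_module=urls):
    """Turn a start_urls config block into a concrete list of URLs."""
    if not config:
        return []
    if config.get('type') == 'static':
        return list(config.get('value', []))
    if config.get('type') == 'dynamic':
        generator = getattr(url_module, config['method'])  # look up by name, no eval()
        return list(generator(*config.get('args', [])))
    raise ValueError('unknown start_urls type: %r' % config.get('type'))


dynamic = {'type': 'dynamic', 'method': 'china', 'args': [5, 10]}
print(len(resolve_start_urls(dynamic)))  # 6
print(resolve_start_urls(dynamic)[0])    # http://tech.china.com/articles/index_5.html
```

Looking the function up with getattr instead of building a string for eval() means a config can only name functions that exist in urls.py. Raising on an unknown type, rather than silently leaving start_urls empty, is a design choice of this sketch.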

- Rewrite the newly created universal.py:

```python
from scrapy.spiders import CrawlSpider

from Scrapy_crawl import items, loaders, urls
from Scrapy_crawl.rules import rules
from Scrapy_crawl.utils import get_config


class UniversalSpider(CrawlSpider):
    name = 'universal'

    def __init__(self, name, *args, **kwargs):
        config = get_config(name)  # load the config file
        self.config = config
        # Look up the rule set named in the config; it must be set before
        # CrawlSpider.__init__ compiles the rules
        self.rules = rules.get(config.get('rules'), ())
        start_urls = config.get('start_urls')  # start_urls config block
        if start_urls:
            if start_urls.get('type') == 'static':  # static type: use the URLs directly
                self.start_urls = start_urls.get('value')
            elif start_urls.get('type') == 'dynamic':  # dynamic type: call the named function in urls.py
                method = getattr(urls, start_urls.get('method'))
                self.start_urls = list(method(*start_urls.get('args', [])))
        self.allowed_domains = config.get('allowed_domains')  # allowed domains
        super().__init__(*args, **kwargs)

    def parse_item(self, response):
        item = self.config.get('item')  # parse_item config block
        if item:
            item_obj = getattr(items, item.get('class'))()  # instantiate the Item class
            loader = getattr(loaders, item.get('loader'))(item_obj, response=response)  # instantiate the Item Loader
            # Apply the extraction rules of each field from the config
            for key, value in item.get('attrs').items():
                for extractor in value:
                    method = extractor.get('method')
                    if method == 'xpath':
                        loader.add_xpath(key, *extractor.get('args'), re=extractor.get('re'))
                    elif method == 'css':
                        loader.add_css(key, *extractor.get('args'), re=extractor.get('re'))
                    elif method == 'value':
                        loader.add_value(key, *extractor.get('args'), re=extractor.get('re'))
                    elif method == 'attr':
                        loader.add_value(key, getattr(response, *extractor.get('args')))
            yield loader.load_item()
```
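
The attrs loop can be checked offline by feeding it a stand-in loader that only records calls. This sketch is a dispatch-table variant of the loop in parse_item(); RecordingLoader, apply_attrs and the response URL are hypothetical, and no Scrapy objects are involved.

```python
from types import SimpleNamespace


class RecordingLoader:
    """Hypothetical stand-in for an ItemLoader: it only records each call."""

    def __init__(self):
        self.calls = []

    def add_xpath(self, field, xpath, re=None):
        self.calls.append(('add_xpath', field, xpath, re))

    def add_css(self, field, css, re=None):
        self.calls.append(('add_css', field, css, re))

    def add_value(self, field, value, re=None):
        self.calls.append(('add_value', field, value, re))


def apply_attrs(loader, response, attrs):
    """Feed every extractor of an "attrs" config block to the loader."""
    dispatch = {
        'xpath': lambda key, ex: loader.add_xpath(key, *ex['args'], re=ex.get('re')),
        'css': lambda key, ex: loader.add_css(key, *ex['args'], re=ex.get('re')),
        'value': lambda key, ex: loader.add_value(key, *ex['args'], re=ex.get('re')),
        # attr: read the named attribute of the Response, e.g. response.url
        'attr': lambda key, ex: loader.add_value(key, getattr(response, ex['args'][0])),
    }
    for key, extractors in attrs.items():
        for extractor in extractors:
            dispatch[extractor['method']](key, extractor)  # KeyError on unknown methods


# Hypothetical response and a trimmed version of the "attrs" config
response = SimpleNamespace(url='http://tech.china.com/article/example.html')
attrs = {
    'title': [{'method': 'xpath', 'args': ["//h1[@id='chan_newsTitle']/text()"]}],
    'url': [{'method': 'attr', 'args': ['url']}],
    'website': [{'method': 'value', 'args': ['中华网']}],
}

loader = RecordingLoader()
apply_attrs(loader, response, attrs)
for call in loader.calls:
    print(call)
```

Unlike the if-chain in parse_item(), an unknown method name raises KeyError here, which surfaces typos in the config early; whether that is preferable is a judgment call.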