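I have already written several posts about web crawlers, but each one was a single self-contained script. This post introduces a simple crawler architecture that is easy to extend.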

Use case: starting from a Baidu Baike keyword entry, crawl 1000 related entry links together with the summary of each entry.

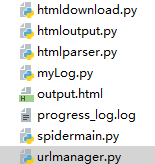

Modules:

First comes the main scheduler, spidermain, which decides where crawling starts and calls the other modules.

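urlmanager manages the target URLs: it checks each newly found URL against the ones already seen, and moves every URL it hands out into an "already crawled" set.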

htmldownload downloads the page source of a target URL.

htmlparser parses the page source of the target URL and extracts the URLs and the summary it contains.

htmloutput collects the data from crawled pages and writes it out as an HTML table.
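To make the division of labour concrete, here is a minimal offline sketch of how the scheduler drives the other four modules. The stub classes and the `FAKE_WEB` dictionary are hypothetical stand-ins for the real modules and Baidu Baike pages, so the loop runs without network access. The point it illustrates: the scheduler only relies on a few method names (`add_new_url`, `download`, `parser`, `collect_data`), so any module can be replaced without touching the others.

```python
# Offline sketch of the spidermain loop; FAKE_WEB and all Stub* classes are hypothetical.
FAKE_WEB = {  # url -> (title, outgoing links)
    "root": ("Root page", ["a", "b"]),
    "a": ("Page A", ["b", "c"]),
    "b": ("Page B", ["root"]),
    "c": ("Page C", []),
}


class StubUrlManager:
    def __init__(self):
        self.new_urls, self.old_urls = set(), set()

    def add_new_url(self, url):
        if url not in self.new_urls and url not in self.old_urls:
            self.new_urls.add(url)

    def has_new_url(self):
        return bool(self.new_urls)

    def get_new_url(self):
        url = self.new_urls.pop()
        self.old_urls.add(url)
        return url


class StubDownloader:
    def download(self, url):
        return FAKE_WEB.get(url)  # None for unknown URLs


class StubParser:
    def parser(self, page_url, content):
        title, links = content
        return set(links), {"url": page_url, "title": title}


class ListOutput:
    def __init__(self):
        self.datas = []

    def collect_data(self, data):
        if data is not None:
            self.datas.append(data)


def crawl(root, max_pages=10):
    urls, downloader, parser, out = StubUrlManager(), StubDownloader(), StubParser(), ListOutput()
    urls.add_new_url(root)
    while urls.has_new_url() and len(out.datas) < max_pages:
        url = urls.get_new_url()
        content = downloader.download(url)
        if content is None:  # skip pages that could not be fetched
            continue
        new_urls, data = parser.parser(url, content)
        for u in new_urls:
            urls.add_new_url(u)
        out.collect_data(data)
    return out.datas


pages = crawl("root")
print(sorted(d["title"] for d in pages))  # ['Page A', 'Page B', 'Page C', 'Root page']
```

Each page is visited exactly once even though "b" and "root" are linked more than once, because the URL manager checks both the pending and the crawled sets.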

spidermain.py

```python
#!/usr/bin/env python3
# coding: utf-8
import htmldownload
import htmlparser
import urlmanager
import htmloutput
from myLog import MyLog as mylog  # custom logging helper, not shown in this post


class SpiderMain(object):
    def __init__(self):
        self.urls = urlmanager.UrlManager()
        self.downloader = htmldownload.HtmlDownLoad()
        self.parser = htmlparser.HtmlParser()
        self.outputer = htmloutput.HtmlOutPut()
        self.log = mylog()

    def crow(self, url):
        self.urls.add_new_url(url)
        count = 1
        while self.urls.has_new_url():
            try:
                new_url = self.urls.get_new_url()
                self.log.info("Crawling URL #%d: %s" % (count, new_url))
                html_content = self.downloader.download(new_url)
                new_urls, new_data = self.parser.parser(new_url, html_content)
                self.urls.add_new_urls(new_urls)
                self.outputer.collect_data(new_data)
                # stop after 10 successful pages; raise to 1000 for the full use case
                if count == 10:
                    break
                count += 1
            except Exception as e:
                # a failed page is logged and skipped; count only advances on success
                self.log.error("Failed to crawl URL #%d: %s" % (count, new_url))
                self.log.error(e)
        # write out everything collected once the loop ends
        self.outputer.output_html()


if __name__ == "__main__":
    root_url = "https://baike.baidu.com/item/CSS/5457"
    obj_spider = SpiderMain()
    obj_spider.crow(root_url)
```

urlmanager.py

```python
# coding: utf-8


class UrlManager(object):
    def __init__(self):
        self.new_urls = set()
        self.old_urls = set()

    def add_new_url(self, url):
        if url is None:
            return
        if url not in self.new_urls and url not in self.old_urls:
            self.new_urls.add(url)

    def add_new_urls(self, urls):
        if urls is None or len(urls) == 0:
            return
        for url in urls:
            self.add_new_url(url)

    def has_new_url(self):
        return len(self.new_urls) != 0

    def get_new_url(self):
        new_url = self.new_urls.pop()
        self.old_urls.add(new_url)
        return new_url
```
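`set.pop()` removes an arbitrary element, so `UrlManager` hands out URLs in no particular order. If you want breadth-first order (pages closest to the root first), one option is a `collections.deque` queue plus a `seen` set. `FifoUrlManager` is a hypothetical name; it keeps the same method names so `SpiderMain` could use it unchanged.

```python
from collections import deque


class FifoUrlManager(object):
    """Hands out URLs first-in, first-out; each URL is queued at most once."""

    def __init__(self):
        self.queue = deque()  # URLs waiting to be crawled, in discovery order
        self.seen = set()     # every URL ever queued, pending or already crawled

    def add_new_url(self, url):
        if url is None or url in self.seen:
            return
        self.seen.add(url)
        self.queue.append(url)

    def add_new_urls(self, urls):
        for url in urls or ():
            self.add_new_url(url)

    def has_new_url(self):
        return len(self.queue) != 0

    def get_new_url(self):
        return self.queue.popleft()


m = FifoUrlManager()
m.add_new_urls(["u1", "u2", "u1", "u3"])
print([m.get_new_url() for _ in range(3)])  # ['u1', 'u2', 'u3']
m.add_new_url("u2")     # already seen, so it is ignored
print(m.has_new_url())  # False
```

A single `seen` set replaces the original pair of `new_urls`/`old_urls` checks, since a URL that was ever queued should never be queued again.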

htmldownload.py

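```python
# coding: utf-8
import urllib.request


class HtmlDownLoad(object):
    def download(self, url):
        if url is None:
            return None
        headers = {
            # browser-like headers; some sites may reject urllib's default User-Agent
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                          "(KHTML, like Gecko) Chrome/62.0.3202.94 Safari/537.36",
            "Referer": "https://baike.baidu.com/item",
        }
        req = urllib.request.Request(url, headers=headers)
        # urlopen raises urllib.error.HTTPError for most non-2xx statuses
        with urllib.request.urlopen(req, timeout=10) as response:
            if response.getcode() != 200:
                return None
            return response.read()  # raw bytes; BeautifulSoup detects the encoding
```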
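Network errors, timeouts and most HTTP error statuses surface as exceptions from `urlopen` (`urllib.error.URLError` and its subclass `HTTPError`, both subclasses of `OSError`), so one transient failure makes the scheduler skip a page. A small retry helper is one way to smooth this over. `fetch_with_retries`, `urllib_fetch` and their parameters are hypothetical; the demo uses a fake fetch function so it runs offline.

```python
import time
import urllib.error
import urllib.request


def fetch_with_retries(fetch, url, attempts=3, delay=1.0):
    """Call fetch(url), retrying on OSError (URLError, HTTPError and timeouts included).

    Waits delay, 2*delay, 4*delay, ... seconds between attempts; re-raises the last error.
    """
    for attempt in range(attempts):
        try:
            return fetch(url)
        except OSError:
            if attempt == attempts - 1:
                raise
            time.sleep(delay * (2 ** attempt))


def urllib_fetch(url):
    # same idea as HtmlDownLoad.download, with an explicit timeout
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(req, timeout=10) as response:
        return response.read()


# Offline demo: a fake fetch that fails twice, then succeeds.
calls = []


def flaky_fetch(url):
    calls.append(url)
    if len(calls) < 3:
        raise urllib.error.URLError("temporary failure")
    return b"<html></html>"


print(fetch_with_retries(flaky_fetch, "https://example.com", delay=0))  # b'<html></html>'
print(len(calls))  # 3
```

Against the real site you would call `fetch_with_retries(urllib_fetch, url)`. Retrying a 404 is wasted effort, so you may prefer to re-raise `HTTPError` with a 4xx code immediately; that check is left out to keep the sketch short.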

htmlparser.py

```python
# coding: utf-8
import re
from urllib.parse import urljoin

from bs4 import BeautifulSoup


class HtmlParser(object):
    def _get_new_urls(self, page_url, soup):
        new_urls = set()
        # every <a> whose href contains /item/ points at another encyclopedia entry
        links = soup.find_all('a', attrs={'href': re.compile(r'/item/.*?')})
        for link in links:
            new_url = link.get('href')
            # turn relative links such as /item/HTML into absolute URLs
            new_full_url = urljoin(page_url, new_url)
            new_urls.add(new_full_url)
        return new_urls

    def _get_new_data(self, page_url, soup):
        res_data = {}
        # class names match Baidu Baike's markup at the time of writing; if a node
        # is missing, .find() returns None and the resulting AttributeError is
        # logged by SpiderMain as a failed page
        title_node = soup.find('dd', attrs={'class': 'lemmaWgt-lemmaTitle-title'}).find('h1')
        res_data['title'] = title_node.get_text()
        summary_node = soup.find('div', attrs={'class': 'lemma-summary'}).find('div', attrs={'class': 'para'})
        res_data['summary'] = summary_node.get_text()
        res_data['url'] = page_url
        return res_data

    def parser(self, page_url, html_content):
        if page_url is None or html_content is None:
            # SpiderMain catches this and logs the page as failed
            raise ValueError("no page content for %s" % page_url)
        soup = BeautifulSoup(html_content, 'lxml')
        new_urls = self._get_new_urls(page_url, soup)
        new_data = self._get_new_data(page_url, soup)
        return new_urls, new_data
```
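The link-extraction step does not strictly need BeautifulSoup and lxml. As an alternative technique, here is a standard-library-only sketch built on `html.parser.HTMLParser`: it collects `href` values containing `/item/` and resolves them against the page URL with `urllib.parse.urljoin`, mirroring `_get_new_urls`. The class name `ItemLinkParser` and the sample HTML are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ItemLinkParser(HTMLParser):
    """Collects absolute URLs of <a href="..."> links whose href contains '/item/'."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.links = set()

    def handle_starttag(self, tag, attrs):
        # tag and attribute names arrive lower-cased; attrs is a list of (name, value)
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and "/item/" in href:
            self.links.add(urljoin(self.page_url, href))


sample = """
<a href="/item/HTML">HTML</a>
<a href="https://baike.baidu.com/item/JavaScript">JS</a>
<a href="/help">Help</a>
"""
p = ItemLinkParser("https://baike.baidu.com/item/CSS/5457")
p.feed(sample)
print(sorted(p.links))
# ['https://baike.baidu.com/item/HTML', 'https://baike.baidu.com/item/JavaScript']
```

Extracting the title and summary this way would take more bookkeeping (tracking which element you are inside), which is where BeautifulSoup's `find` pays off.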

htmloutput.py

```python
# coding: utf-8
import html


class HtmlOutPut(object):
    def __init__(self):
        self.datas = []

    def collect_data(self, data):
        if data is None:
            return
        self.datas.append(data)

    def output_html(self):
        # the with-block closes (and flushes) output.html when writing is done
        with open('output.html', 'w', encoding='utf-8') as fout:
            fout.write('<html>')
            fout.write('<head><meta charset="utf-8"></head>')
            fout.write('<body>')
            fout.write('<table style="border:2px solid #000;">')
            for data in self.datas:
                fout.write('<tr style="border:2px solid #000;">')
                # html.escape keeps scraped text from breaking the table markup
                fout.write('<td style="border:2px solid #000;width:200px">%s</td>'
                           % html.escape(data.get('url', '')))
                fout.write('<td style="border:2px solid #000;">%s</td>'
                           % html.escape(data.get('title', '')))
                fout.write('<td style="border:2px solid #000;">%s</td>'
                           % html.escape(data.get('summary', '')))
                fout.write('</tr>')
            fout.write('</table>')
            fout.write('</body>')
            fout.write('</html>')
```

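Because each module has a single job, extending one of them (for example the parser) is enough to extract whatever content you need.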
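As one example of such an extension, the output module can be swapped without touching the others, as long as the replacement offers the methods `SpiderMain` calls (`collect_data` and `output_html`). The sketch below is a hypothetical `CsvOutPut` that writes the same url/title/summary fields as CSV; it accepts any text file-like object so it can be tried with `io.StringIO`.

```python
import csv
import io


class CsvOutPut(object):
    """Hypothetical drop-in alternative to HtmlOutPut that writes rows as CSV."""

    FIELDS = ["url", "title", "summary"]

    def __init__(self, stream=None):
        self.datas = []
        self.stream = stream  # text file-like object; None means write output.csv

    def collect_data(self, data):
        if data is None:
            return
        self.datas.append(data)

    def output_html(self):
        # method name kept so SpiderMain can call it unchanged
        if self.stream is not None:
            self._write(self.stream)
        else:
            with open("output.csv", "w", encoding="utf-8", newline="") as f:
                self._write(f)

    def _write(self, f):
        writer = csv.DictWriter(f, fieldnames=self.FIELDS)
        writer.writeheader()
        for data in self.datas:
            writer.writerow({k: data.get(k, "") for k in self.FIELDS})


buf = io.StringIO()
out = CsvOutPut(stream=buf)
out.collect_data({"url": "https://baike.baidu.com/item/CSS/5457",
                  "title": "CSS", "summary": "Cascading Style Sheets"})
out.collect_data(None)  # ignored, like HtmlOutPut
out.output_html()
print(buf.getvalue())
```

`csv.DictWriter` takes care of quoting commas and newlines inside summaries, which hand-built CSV strings tend to get wrong.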

Next time I will post a crawler for JavaScript-rendered pages. Let's keep learning and improving together!