I. First, what is a proxy?

A proxy is simply the piece in the middle that forwards traffic, and the code follows exactly that logic.

II. Basic logic of an HTTP proxy written in Python:

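(1) Accept the request sent by the browser, parse it, reassemble it into the form the target server expects, and send it out over a socket.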

(2) That's it, apart from writing the server's reply back to the client. The demo really is that simple.
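The "send it out over a socket" step can be sketched on its own. This is a minimal illustration in Python 3, not the code from the post: the function name `forward` and its `timeout` parameter are assumptions made for the example. It sends raw request bytes to a server and collects the reply until the server closes the connection.

```python
import socket


def forward(raw_request, host, port, timeout=10.0):
    """Send raw_request to (host, port) and return everything sent back.

    Hypothetical helper for illustration; it relies on the server closing
    the connection to mark the end of the response.
    """
    with socket.create_connection((host, port), timeout=timeout) as upstream:
        upstream.sendall(raw_request)
        chunks = []
        while True:
            chunk = upstream.recv(4096)
            if not chunk:  # empty read: the server closed the connection
                break
            chunks.append(chunk)
    return b"".join(chunks)
```

Reading until the peer closes only terminates if the request asks for `Connection: close` (or uses HTTP/1.0 without keep-alive), which is why the proxy rewrites that header before forwarding.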

III. Accepting the request. The request does not have to come from a browser; a request sent by any other client works just as well.

Accepting requests means acting as a server, plain and simple, so the code uses the BaseHTTPServer library (a Python 2 module):
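To see the server side in isolation, here is a minimal sketch in Python 3, where `BaseHTTPServer` lives on as `http.server`. The names `EchoHandler` and `make_server` are made up for this example. The handler forwards nothing; it only reports the path it received, which shows that a client talking to a proxy puts the absolute URL in the request line.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class EchoHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: replies with the request target it was given."""

    def do_GET(self):
        # For a proxied request, self.path is the full URL,
        # e.g. "http://example.com/a?b=1", not just "/a?b=1".
        body = ("you asked for: %s\n" % self.path).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet; the default logs to stderr


def make_server(port=0):
    """Bind to localhost; port 0 lets the OS pick a free port."""
    return HTTPServer(("127.0.0.1", port), EchoHandler)
```

The full proxy handler from the original post follows.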

    # -*- coding: utf-8 -*-
    # Python 2 code: BaseHTTPServer and urllib.split* are Python 2 APIs.
    import socket
    import urllib
    from BaseHTTPServer import BaseHTTPRequestHandler, HTTPServer


    class MyHandler(BaseHTTPRequestHandler):
        # Handle the HTTP GET method (as an example)
        def do_GET(self):
            # A proxied request carries the absolute URL in the request line
            url = self.path
            print "url:", url
            protocol, rest = urllib.splittype(url)
            print "protocol:", protocol
            host, rest = urllib.splithost(rest)
            print "host:", host
            path = rest or '/'
            print "path:", path
            host, port = urllib.splitnport(host)
            print "host:", host
            # splitnport returns -1 when the URL has no explicit port
            port = 80 if port < 0 else port
            host_ip = socket.gethostbyname(host)
            print (host_ip, port)

            # Drop the Proxy-Connection header and force "Connection: close"
            # so the upstream server does not keep the connection alive
            del self.headers['Proxy-Connection']
            print self.headers
            self.headers['Connection'] = 'close'

            # Rebuild the raw request, the way it looks in Burp Suite
            send_data = 'GET ' + path + ' ' + self.protocol_version + '\r\n'
            head = ''
            for key, val in self.headers.items():
                head = head + "%s: %s\r\n" % (key, val)
            send_data = send_data + head + '\r\n'
            print send_data

            # Forward the request and read the reply until the server closes
            client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
            client.connect((host_ip, port))
            client.sendall(send_data)
            data = ''
            while True:
                tmp = client.recv(4096)
                if not tmp:
                    break
                data = data + tmp
            client.close()

            # Relay the raw response (status line, headers, body) to the client
            self.wfile.write(data)

The logic is simple: BaseHTTPServer receives the request, and a plain socket forwards it.
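The parse and reassemble steps of the handler can also be written as two small pure functions, which makes them easy to check without a network. This is a sketch in Python 3 under stated assumptions: `split_proxy_url` and `build_request` are hypothetical names, and `urllib.parse.urlsplit` stands in for the Python 2 `splittype` / `splithost` / `splitnport` helpers used in the post.

```python
from urllib.parse import urlsplit


def split_proxy_url(url, default_port=80):
    """Split an absolute URL into (host, port, path) for the upstream request."""
    parts = urlsplit(url)
    port = parts.port or default_port  # no explicit port: fall back to 80
    path = parts.path or "/"           # "http://host" means the root path
    if parts.query:
        path += "?" + parts.query
    return parts.hostname, port, path


def build_request(method, path, headers, version="HTTP/1.0"):
    """Serialize the request line and headers into raw HTTP bytes."""
    lines = ["%s %s %s" % (method, path, version)]
    for key, value in headers.items():
        # Proxy-Connection is addressed to the proxy, and Connection is
        # replaced below, so neither is copied through.
        if key.lower() in ("proxy-connection", "connection"):
            continue
        lines.append("%s: %s" % (key, value))
    lines.append("Connection: close")
    # Every line ends with CRLF; an empty line terminates the header block.
    return ("\r\n".join(lines) + "\r\n\r\n").encode("latin-1")
```

For example, `split_proxy_url("http://www.baidu.com/s?wd=proxy")` yields the host, port 80, and the path `/s?wd=proxy`, which is what goes into the rebuilt request line.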

Start the proxy from a main function:

    def main():
        # Listen on localhost only; point the browser's HTTP proxy at 127.0.0.1:8888
        server = HTTPServer(('127.0.0.1', 8888), MyHandler)
        print 'Welcome to the machine...'
        try:
            server.serve_forever()
        except KeyboardInterrupt:
            print '^C received, shutting down server'
            server.socket.close()


    if __name__ == '__main__':
        main()
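A browser is not required to try the proxy out. The sketch below, in Python 3, sends a request through it with the standard library's `urllib.request.ProxyHandler`. The function name `fetch_via_proxy` is an assumption for this example, and the default address assumes the proxy is listening on 127.0.0.1:8888 as in the main function above.

```python
import urllib.request


def fetch_via_proxy(url, proxy="http://127.0.0.1:8888", timeout=10.0):
    """Fetch a plain-HTTP URL through the given proxy (hypothetical helper)."""
    # Only the "http" scheme is routed to the proxy; the demo proxy
    # implements GET for plain HTTP and nothing else.
    handler = urllib.request.ProxyHandler({"http": proxy})
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        return response.status, response.read()
```

With the proxy running, a call such as `fetch_via_proxy("http://example.com/")` should make the `url:`, `host:`, and `path:` debug lines appear in the proxy's console.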

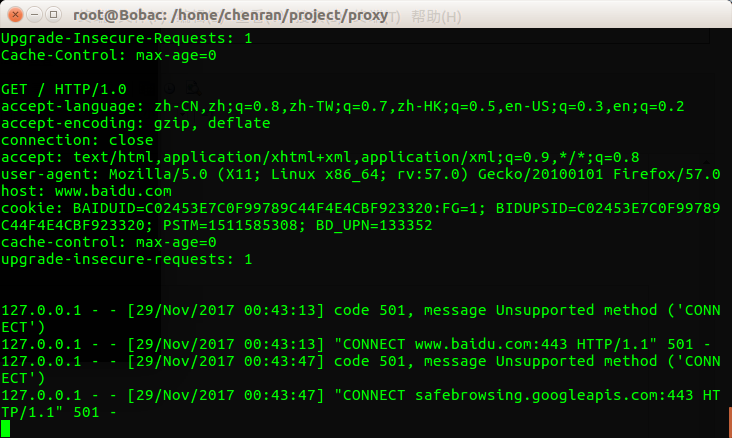

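As the screenshot shows, the proxy works: the request was indeed forwarded. Because of redirects and blocking on Baidu's side, the page still did not load completely through the proxy, but the code logic itself is sound.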

Reference:

http://www.lyyyuna.com/2016/01/16/http-proxy-get1/