Environment:

Windows 10

JDK 1.8

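Take the Spark package you previously installed on a VM or cluster and extract it to whatever local directory you want Spark to live in. Mine, for example, is `D:\Hadoop\spark-1.6.0-bin-hadoop2.6`.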

/**

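*Note:

The Spark version I had installed on Linux was spark-2.2.0-bin-hadoop2.6. When I later set up a Spark development environment in Eclipse, I found that the extracted spark-2.2.0-bin-hadoop2.6 has no lib directory, and therefore no assembly jar like the key spark-assembly-1.6.0-hadoop2.6.0.jar. I didn't know how Spark 2.2.0 and later support development in Eclipse, so I switched to spark-1.6.0. If anyone knows, please leave a comment below. Thanks!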

**/
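For reference, Spark 2.0 and later no longer ship a single assembly jar; the binary distribution instead puts the individual jars in a `jars` directory. If you are unsure which layout an extracted package has, a small check like the sketch below can tell you. `AssemblyJarFinder` and its methods are hypothetical names made up for this example, not part of Spark; it only looks for a Spark 1.x style `lib/spark-assembly-*.jar`.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Optional;
import java.util.stream.Stream;

// Hypothetical helper (not part of Spark): checks whether an extracted Spark
// distribution contains a Spark 1.x style lib/spark-assembly-*.jar.
class AssemblyJarFinder {

    /** True for file names such as spark-assembly-1.6.0-hadoop2.6.0.jar. */
    static boolean isAssemblyJarName(String fileName) {
        return fileName.startsWith("spark-assembly-") && fileName.endsWith(".jar");
    }

    /** Returns the first assembly jar under sparkHome/lib, or empty if there is none. */
    static Optional<Path> findAssemblyJar(Path sparkHome) {
        Path lib = sparkHome.resolve("lib");
        if (!Files.isDirectory(lib)) {
            return Optional.empty(); // e.g. a Spark 2.x layout, which has jars/ instead of lib/
        }
        try (Stream<Path> files = Files.list(lib)) {
            return files
                    .filter(p -> isAssemblyJarName(p.getFileName().toString()))
                    .findFirst();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Defaults to the directory used in this post; pass another path as the first argument.
        Path home = Paths.get(args.length > 0 ? args[0] : "D:\\Hadoop\\spark-1.6.0-bin-hadoop2.6");
        System.out.println(findAssemblyJar(home)
                .map(jar -> "Found assembly jar: " + jar)
                .orElse("No spark-assembly-*.jar under " + home.resolve("lib")));
    }
}
```

Run against a spark-1.6.0 directory it should report the assembly jar; against a spark-2.x directory it reports that none was found.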

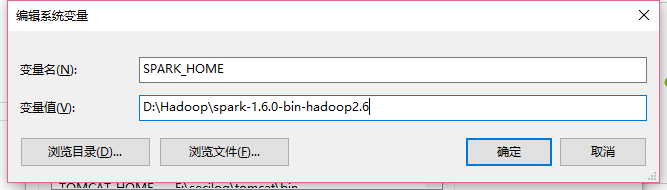

The rest is simple. First configure Spark's environment variables by adding a SPARK_HOME variable, as shown below:

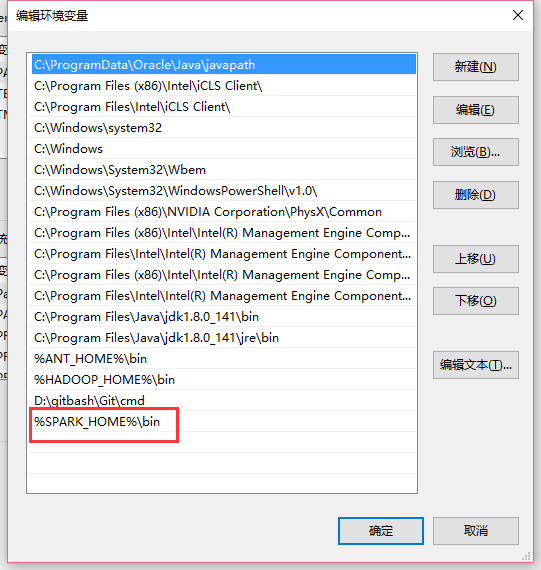

Then add SPARK_HOME to Path, as shown below:

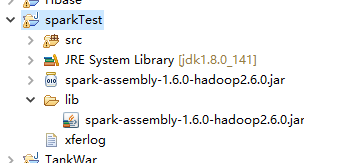
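To double-check both settings from Java, you could run a small program like the sketch below. `SparkEnvCheck` is a hypothetical name, and it assumes the entry added to Path in the screenshot is `%SPARK_HOME%\bin` (the screenshot's exact value isn't reproduced in the text). Keep in mind that a Java process, including Eclipse, only sees environment variables that existed when it started, so restart Eclipse after changing them.

```java
import java.io.File;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.regex.Pattern;

// Hypothetical helper: verifies SPARK_HOME and that SPARK_HOME\bin is on the Path.
class SparkEnvCheck {

    /** True if sparkHome is non-empty and points at an existing directory. */
    static boolean isValidSparkHome(String sparkHome) {
        return sparkHome != null && !sparkHome.isEmpty() && Files.isDirectory(Paths.get(sparkHome));
    }

    /**
     * True if one entry of pathVar equals dir. Comparison ignores case and a trailing
     * separator, which matches Windows semantics (a simplification on Linux).
     */
    static boolean pathContains(String pathVar, String dir, String separator) {
        if (pathVar == null || dir == null) {
            return false;
        }
        String wanted = stripTrailingSlash(dir.trim());
        return Arrays.stream(pathVar.split(Pattern.quote(separator)))
                .map(String::trim)
                .map(SparkEnvCheck::stripTrailingSlash)
                .anyMatch(entry -> entry.equalsIgnoreCase(wanted));
    }

    private static String stripTrailingSlash(String s) {
        return (s.endsWith("\\") || s.endsWith("/")) ? s.substring(0, s.length() - 1) : s;
    }

    public static void main(String[] args) {
        // On Windows, System.getenv is case-insensitive, so "PATH" also finds "Path".
        String home = System.getenv("SPARK_HOME");
        System.out.println("SPARK_HOME = " + home + ", valid: " + isValidSparkHome(home));
        if (home != null) {
            String bin = home + File.separator + "bin";
            boolean onPath = pathContains(System.getenv("PATH"), bin, File.pathSeparator);
            System.out.println(bin + " on Path: " + onPath);
        }
    }
}
```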

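That completes the environment setup. Next, create an ordinary Java project in Eclipse, copy spark-assembly-1.6.0-hadoop2.6.0.jar into the project, and add it to the build path, as shown in the figure below.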
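If a Spark program later fails with `NoClassDefFoundError` or `ClassNotFoundException` for `org.apache.spark...` classes, the assembly jar is probably not on the build path. A quick way to check is to run a class like this hypothetical `ClasspathCheck` inside the same Eclipse project. It is only a sketch; the class names it probes are ones used by the test program in this post.

```java
// Hypothetical helper: reports whether the Spark classes used by WordCount can be loaded.
class ClasspathCheck {

    /** True if the named class can be loaded from the current classpath. */
    static boolean isOnClasspath(String className) {
        try {
            // initialize = false: just locate the class, don't run its static initializers
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String[] required = {
            "org.apache.spark.SparkConf",
            "org.apache.spark.api.java.JavaSparkContext",
            "scala.Tuple2"
        };
        for (String name : required) {
            System.out.println(name + (isOnClasspath(name) ? ": OK" : ": MISSING"));
        }
    }
}
```

With the assembly jar on the build path all three lines should print `OK`, since the assembly bundles the Scala library as well.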

Once that is done, you can start developing Spark programs. Below is a small test program:

```java
// Package matches --class cn.spark.test.WordCount in the spark-submit command below
package cn.spark.test;

import java.util.Arrays;

import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaPairRDD;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;
import org.apache.spark.api.java.function.FlatMapFunction;
import org.apache.spark.api.java.function.Function2;
import org.apache.spark.api.java.function.PairFunction;
import org.apache.spark.api.java.function.VoidFunction;

import scala.Tuple2;

public class WordCount {
    public static void main(String[] args) {
        SparkConf conf = new SparkConf().setMaster("local").setAppName("wc");
        JavaSparkContext sc = new JavaSparkContext(conf);

        // Read the input file from HDFS
        JavaRDD<String> text = sc.textFile("hdfs://master:9000/user/hadoop/input/test");

        // Split each line into words (Spark 1.x API: call returns Iterable; Spark 2.x changed it to Iterator)
        JavaRDD<String> words = text.flatMap(new FlatMapFunction<String, String>() {
            private static final long serialVersionUID = 1L;

            @Override
            public Iterable<String> call(String line) throws Exception {
                return Arrays.asList(line.split(" ")); // turn the split array into a list
            }
        });

        // Map each word to (word, 1)
        JavaPairRDD<String, Integer> pairs = words.mapToPair(new PairFunction<String, String, Integer>() {
            private static final long serialVersionUID = 1L;

            @Override
            public Tuple2<String, Integer> call(String word) throws Exception {
                return new Tuple2<String, Integer>(word, 1);
            }
        });

        // Sum the counts for each word
        JavaPairRDD<String, Integer> results = pairs.reduceByKey(new Function2<Integer, Integer, Integer>() {
            private static final long serialVersionUID = 1L;

            @Override
            public Integer call(Integer value1, Integer value2) throws Exception {
                return value1 + value2;
            }
        });

        // Swap to (count, word) so the pairs can be sorted by count
        JavaPairRDD<Integer, String> temp = results.mapToPair(new PairFunction<Tuple2<String, Integer>, Integer, String>() {
            private static final long serialVersionUID = 1L;

            @Override
            public Tuple2<Integer, String> call(Tuple2<String, Integer> tuple) throws Exception {
                return new Tuple2<Integer, String>(tuple._2, tuple._1);
            }
        });

        // Sort by count in descending order, then swap back to (word, count)
        JavaPairRDD<String, Integer> sorted = temp.sortByKey(false).mapToPair(new PairFunction<Tuple2<Integer, String>, String, Integer>() {
            private static final long serialVersionUID = 1L;

            @Override
            public Tuple2<String, Integer> call(Tuple2<Integer, String> tuple) throws Exception {
                return new Tuple2<String, Integer>(tuple._2, tuple._1);
            }
        });

        // Print each result (in local mode this goes to the console)
        sorted.foreach(new VoidFunction<Tuple2<String, Integer>>() {
            private static final long serialVersionUID = 1L;

            @Override
            public void call(Tuple2<String, Integer> tuple) throws Exception {
                System.out.println("word:" + tuple._1 + " count:" + tuple._2);
            }
        });

        sc.close();
    }
}
```

The file being counted contains the following text:

Look! at the window there leans an old maid. She plucks the withered leaf from the balsam, and looks at the grass-covered rampart, on which many children are playing. What is the old maid thinking of? A whole life drama is unfolding itself before her inward gaze. "The poor little children, how happy they are- how merrily they play and romp together! What red cheeks and what angels' eyes! but they have no shoes nor stockings. They dance on the green rampart, just on the place where, according to the old story, the ground always sank in, and where a sportive, frolicsome child had been lured by means of flowers, toys and sweetmeats into an open grave ready dug for it, and which was afterwards closed over the child; and from that moment, the old story says, the ground gave way no longer, the mound remained firm and fast, and was quickly covered with the green turf. The little people who now play on that spot know nothing of the old tale, else would they fancy they heard a child crying deep below the earth, and the dewdrops on each blade of grass would be to them tears of woe. Nor do they know anything of the Danish King who here, in the face of the coming foe, took an oath before all his trembling courtiers that he would hold out with the citizens of his capital, and die here in his nest; they know nothing of the men who have fought here, or of the women who from here have drenched with boiling water the enemy, clad in white, and 'biding in the snow to surprise the city. .
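To sanity-check the Spark output without a cluster, the same logic can be reproduced in plain Java. The sketch below is a hypothetical `LocalWordCount` class, not part of the original project: it splits on a single space exactly like the Spark code, so punctuation stays attached (`maid.` and `maid` count as different words), and it sorts by count in descending order. Words with equal counts may come out in a different order than Spark's, because `HashMap` iteration order is unspecified.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical local equivalent of the WordCount pipeline, for cross-checking results.
class LocalWordCount {

    /** Same as flatMap(line.split(" ")) + mapToPair(word, 1) + reduceByKey(sum). */
    static Map<String, Integer> count(String text) {
        Map<String, Integer> counts = new HashMap<>();
        for (String word : text.split(" ")) {
            counts.merge(word, 1, Integer::sum);
        }
        return counts;
    }

    /** Same idea as swapping to (count, word), sortByKey(false), and swapping back. */
    static List<Map.Entry<String, Integer>> sortedByCountDesc(Map<String, Integer> counts) {
        List<Map.Entry<String, Integer>> entries = new ArrayList<>(counts.entrySet());
        entries.sort(Map.Entry.<String, Integer>comparingByValue().reversed());
        return entries;
    }

    public static void main(String[] args) {
        String sample = "the old maid and the old story"; // a few words from the input text
        for (Map.Entry<String, Integer> e : sortedByCountDesc(count(sample))) {
            System.out.println("word:" + e.getKey() + " count:" + e.getValue());
        }
    }
}
```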

Of course, you can also package this project as a jar and run it on a Spark cluster. For example, I named my jar WordCount.jar.

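Run command: `/usr/local/spark/bin/spark-submit --master local --class cn.spark.test.WordCount /home/hadoop/Desktop/WordCount.jar`

Note that `--master local` still runs the job in local mode. Also, because the code calls `setMaster("local")`, and properties set directly on `SparkConf` take precedence over `spark-submit` flags, running on the real cluster requires removing that call and passing the cluster's master URL with `--master`.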