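First, download the ZooKeeper 3.4.6 installation package from the official website and extract it to /usr/local.

Then rename the extracted directory to zookeeper.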
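The extract-and-rename step can be scripted roughly as follows. This is a minimal sketch that assumes the release tarball has already been downloaded and that it unpacks to a top-level directory named zookeeper-3.4.6; the function name install_zookeeper is hypothetical.

```shell
# Hypothetical helper: unpack a ZooKeeper release into PREFIX and rename the
# versioned directory to plain "zookeeper", as described above.
# Assumption: the archive's top-level directory is zookeeper-<version>.
install_zookeeper() {
    tarball="$1"               # path to the downloaded release tarball
    prefix="${2:-/usr/local}"  # install location used in this guide
    version="${3:-3.4.6}"      # release version, used to find the unpacked dir

    tar -xzf "$tarball" -C "$prefix" || return 1
    mv "$prefix/zookeeper-$version" "$prefix/zookeeper"
}
```

For example, as root: install_zookeeper zookeeper-3.4.6.tar.gz /usr/local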

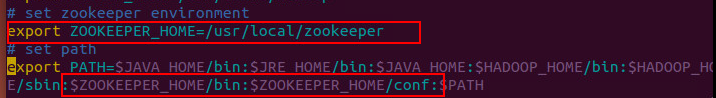

Configure the environment variables: run sudo vim /etc/profile and add the variables shown in the figure.
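A typical pair of lines for this step is sketched below, assuming the install path used in this guide. ZOOKEEPER_HOME is a common naming convention rather than something ZooKeeper itself requires; adding its bin directory to PATH is what lets zkServer.sh be run by name later on.

```shell
# Lines to append to /etc/profile (assumed typical settings).
export ZOOKEEPER_HOME=/usr/local/zookeeper
# Put zkServer.sh, zkCli.sh, etc. on the PATH.
export PATH="$PATH:$ZOOKEEPER_HOME/bin"
```

Afterwards run source /etc/profile (or log in again) so the current shell picks up the change.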

Next, go into ZooKeeper's conf directory, which contains a file named zoo_sample.cfg, and copy it to a new file named zoo.cfg:

cp zoo_sample.cfg zoo.cfg

Edit the newly created file so that it contains the following:

    # The number of milliseconds of each tick
    tickTime=2000
    # The number of ticks that the initial
    # synchronization phase can take
    initLimit=10
    # The number of ticks that can pass between
    # sending a request and getting an acknowledgement
    syncLimit=5
    # the directory where the snapshot is stored.
    # do not use /tmp for storage, /tmp here is just
    # example sakes.
    dataDir=/usr/local/zookeeper/data
    dataLogDir=/usr/local/zookeeper/datalog
    # the port at which the clients will connect
    clientPort=2181
    # Be sure to read the maintenance section of the
    # administrator guide before turning on autopurge.
    # http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
    # The number of snapshots to retain in dataDir
    #autopurge.snapRetainCount=3
    # Purge task interval in hours
    # Set to "0" to disable auto purge feature
    #autopurge.purgeInterval=1

    server.1=master:2888:3888
    server.2=slave1:2888:3888
    server.3=slave2:2888:3888
    server.4=slave3:2888:3888
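In each server.x=host:2888:3888 entry, the first port (2888) is used by followers to connect to the leader and the second (3888) is used for leader election. Instead of editing the file by hand, its active (non-comment) settings could also be generated. The sketch below uses a hypothetical helper, write_zoo_cfg, that numbers the hosts in the order they are given, so that order must match the myid value of each node.

```shell
# Hypothetical helper: write the active settings of the zoo.cfg shown above.
# Usage: write_zoo_cfg CONF_FILE ZK_HOME host1 host2 ...
# Hosts are numbered server.1, server.2, ... in the order they are given.
write_zoo_cfg() {
    cfg="$1"; zk_home="$2"; shift 2
    {
        echo "tickTime=2000"
        echo "initLimit=10"
        echo "syncLimit=5"
        echo "dataDir=$zk_home/data"
        echo "dataLogDir=$zk_home/datalog"
        echo "clientPort=2181"
        i=1
        for host in "$@"; do
            # 2888: follower-to-leader port, 3888: leader-election port
            echo "server.$i=$host:2888:3888"
            i=$((i + 1))
        done
    } > "$cfg"
}
```

For this guide's cluster: write_zoo_cfg /usr/local/zookeeper/conf/zoo.cfg /usr/local/zookeeper master slave1 slave2 slave3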

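Create the two directories that dataDir and dataLogDir point to, i.e. data and datalog under the zookeeper directory. Then go into data (the dataDir directory) and create a file named myid containing a single number: the x of this machine's server.x entry in zoo.cfg. For example, on master the number is 1, on slave1 it is 2, on slave2 it is 3, and on slave3 it is 4.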
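These steps can be sketched as a small function that looks up the current machine's entry in zoo.cfg. The helper name init_zk_node is hypothetical, and it assumes each node's hostname (as printed by hostname) is exactly the name used in its server.x line; pass the name explicitly if that is not the case.

```shell
# Hypothetical helper: create data/ and datalog/ under ZK_HOME and write
# data/myid with the x from this host's "server.x=host:..." line in zoo.cfg.
# Usage: init_zk_node ZK_HOME [HOSTNAME]
init_zk_node() {
    zk_home="$1"
    host="${2:-$(hostname)}"
    mkdir -p "$zk_home/data" "$zk_home/datalog" || return 1
    # Extract x from "server.x=<host>:...". The host name is used as a sed
    # regex, which is fine for plain names such as master or slave1.
    id=$(sed -n "s/^server\.\([0-9][0-9]*\)=$host:.*/\1/p" "$zk_home/conf/zoo.cfg")
    if [ -z "$id" ]; then
        echo "no server.x entry for $host in zoo.cfg" >&2
        return 1
    fi
    echo "$id" > "$zk_home/data/myid"
}
```

On slave1, for instance, init_zk_node /usr/local/zookeeper writes 2 into /usr/local/zookeeper/data/myid.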

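That completes the configuration. Next, send the whole directory to each of the other nodes (slave1, slave2 and slave3). For slave1 the command is:

scp -r /usr/local/zookeeper slave1:/usr/local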

Once the transfer finishes, remember to change the number in each node's myid file as described above. The setup is then complete.
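Copying to every node and fixing myid can be combined in one loop run from master. This sketch assumes passwordless SSH from master to each slave and that the slaves are passed in the same order as their server.x entries (slave1 → 2, slave2 → 3, slave3 → 4); distribute_zk is a hypothetical name.

```shell
# Hypothetical helper: copy ZK_HOME to each host and write that host's myid.
# Usage: distribute_zk ZK_HOME FIRST_ID host1 host2 ...
# FIRST_ID is the server.x number of host1; later hosts get consecutive ids.
distribute_zk() {
    zk_home="$1"; id="$2"; shift 2
    parent=$(dirname "$zk_home")
    for host in "$@"; do
        scp -r "$zk_home" "$host:$parent" || return 1
        # Overwrite the myid copied from master with this host's number.
        ssh "$host" "echo $id > $zk_home/data/myid" || return 1
        id=$((id + 1))
    done
}
```

For this guide's cluster: distribute_zk /usr/local/zookeeper 2 slave1 slave2 slave3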

On each node, start ZooKeeper with zkServer.sh start, check it with zkServer.sh status, and stop it with zkServer.sh stop.
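Running these commands on every node from a single machine can be sketched as a loop over SSH. It assumes passwordless SSH and that /etc/profile puts zkServer.sh on the PATH; the remote side sources /etc/profile explicitly because a non-interactive SSH command does not normally read it. The name zk_all is hypothetical.

```shell
# Hypothetical helper: run a zkServer.sh action (start, status or stop) on
# every node listed, continuing even if one node reports an error.
zk_all() {
    action="$1"; shift
    for host in "$@"; do
        echo "== $host"
        # Non-interactive SSH sessions do not read /etc/profile, so load it.
        ssh "$host" ". /etc/profile; zkServer.sh $action"
    done
}
```

For example, zk_all start master slave1 slave2 slave3 followed by zk_all status master slave1 slave2 slave3.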

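One thing to note: with a clustered installation, if you run zkServer.sh status before ZooKeeper has been started on all the machines, the check reports an error. This is not a real fault. Because zoo.cfg describes a cluster, a node only serves requests once enough servers are running to elect a leader (a majority of the servers listed, i.e. at least 3 of the 4 here), so a partially started cluster naturally fails the check. Once every node in the cluster has been started, run the check again and the error goes away.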
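The number of servers that must be up can be computed from zoo.cfg: ZooKeeper needs a strict majority of the servers listed, i.e. floor(n/2) + 1. The sketch below (hypothetical name quorum_size) counts the server.x lines and applies that formula; for the four-server file in this guide it gives 3.

```shell
# Hypothetical helper: print how many of the servers listed in zoo.cfg must
# be running before a leader can be elected (a strict majority).
quorum_size() {
    n=$(grep -c '^server\.[0-9][0-9]*=' "$1")
    echo $((n / 2 + 1))
}
```

Usage: quorum_size /usr/local/zookeeper/conf/zoo.cfg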