Project members

MIT: Albert Huang, Abraham Bachrach, Garrett Hemann and Nicholas Roy

Univ. of Washington: Peter Henry, Mike Krainin, and Dieter Fox

Intel Labs Seattle: Xiaofeng Ren

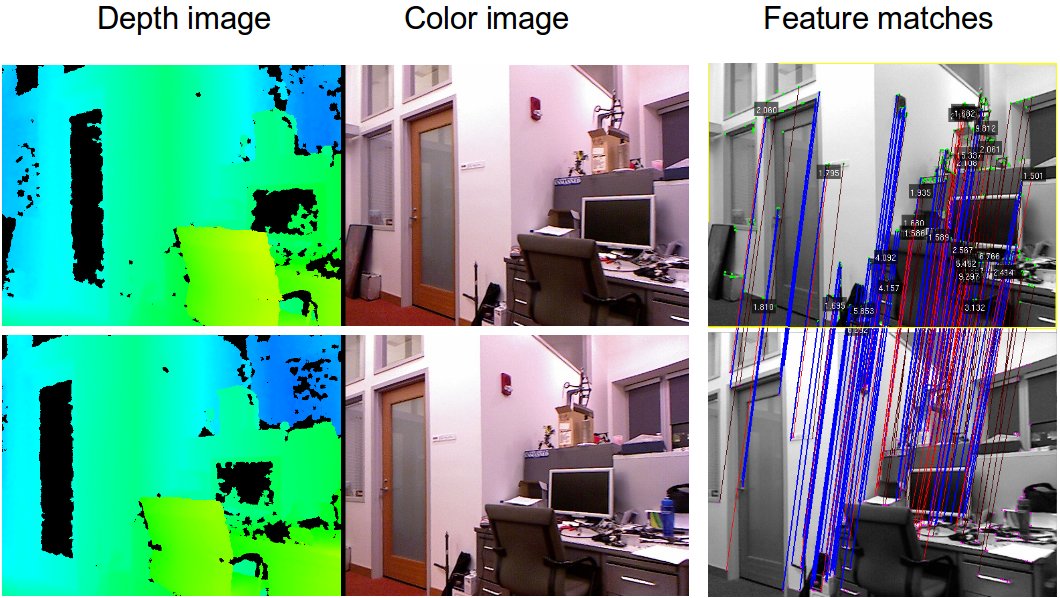

At MIT, we have developed a real-time visual odometry system that can use a Kinect to provide fast and accurate estimates of a vehicle's 3D trajectory. This system is based on recent advances in visual odometry research and combines a number of ideas from state-of-the-art algorithms. It aligns successive camera frames by matching features across images, and uses the Kinect-derived depth estimates to determine the camera's motion.
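To make the alignment step concrete, here is a minimal sketch, not the system's actual implementation. It assumes each matched feature has already been lifted to a 3D point using its depth reading, and it uses the standard SVD-based (Kabsch) least-squares fit as a stand-in for whatever estimator the real system uses. The function name `estimate_rigid_motion` and the sample points are hypothetical.

```python
import numpy as np

def estimate_rigid_motion(prev_pts, curr_pts):
    """Least-squares rotation R and translation t with curr ≈ R @ prev + t.

    prev_pts, curr_pts: (N, 3) arrays of matched 3D feature positions,
    expressed in the previous and current camera frames respectively.
    Uses the SVD-based Kabsch method (an assumption, not the source's method).
    """
    prev_pts = np.asarray(prev_pts, dtype=float)
    curr_pts = np.asarray(curr_pts, dtype=float)

    # Center both point sets so that only the rotation remains to be solved.
    prev_c = prev_pts.mean(axis=0)
    curr_c = curr_pts.mean(axis=0)
    H = (prev_pts - prev_c).T @ (curr_pts - curr_c)  # 3x3 cross-covariance

    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (determinant -1) in degenerate cases.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = curr_c - R @ prev_c
    return R, t

if __name__ == "__main__":
    # Hypothetical matched features: rotate 0.1 rad about z, then translate.
    theta = 0.1
    R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                       [np.sin(theta),  np.cos(theta), 0.0],
                       [0.0,            0.0,           1.0]])
    t_true = np.array([0.05, -0.02, 0.10])
    prev = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 2.0], [0.0, 1.0, 1.5],
                     [1.0, 1.0, 3.0], [-0.5, 0.3, 2.2]])
    curr = prev @ R_true.T + t_true
    R, t = estimate_rigid_motion(prev, curr)
    print(np.round(R, 3), np.round(t, 3))
```

The recovered transform maps points from the previous camera frame into the current one; the camera's own motion is the inverse of that transform. A practical system would also need to reject bad feature matches, which this sketch does not attempt.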

We have integrated the visual odometry into our quadrotor system, which was previously developed for controlling the vehicle with laser scan-matching. The visual odometry runs in real time onboard the vehicle, and its estimates have low enough delay that we can control the quadrotor using only the Kinect and the onboard IMU, enabling fully autonomous 3D flight in unknown GPS-denied environments. Notably, it does not require a motion capture system or other external sensors: all sensing and computation required for local position control is done onboard the vehicle.

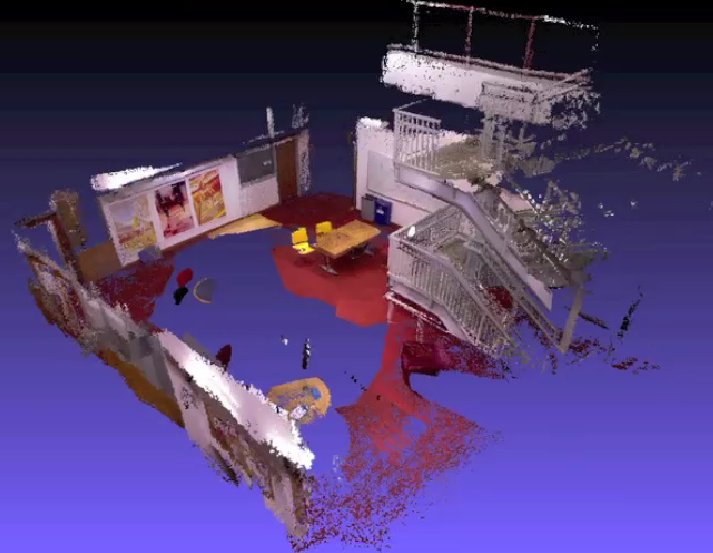

We have collaborated with Peter Henry and Mike Krainin from the Robotics and State Estimation Lab at the University of Washington to use their RGBD-SLAM algorithms to perform simultaneous localization and mapping (SLAM) and build metrically accurate and visually pleasing models of the environment through which the MAV has flown.

The RGBD-SLAM algorithms process data offboard the vehicle, but send position corrections back to the vehicle to enable globally consistent position estimates.
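One common way to apply such corrections can be sketched as follows. This is an illustrative assumption, not a description of the actual onboard/offboard protocol: poses are simplified to `(x, y, z, yaw)`, the offboard SLAM result for a past frame is turned into a correction transform, and that correction is then composed with the latest onboard odometry estimate. All function names here are hypothetical.

```python
import math

def wrap(angle):
    """Wrap an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def compose(a, b):
    """Apply pose b in the frame of pose a; poses are (x, y, z, yaw)."""
    ax, ay, az, ayaw = a
    bx, by, bz, byaw = b
    c, s = math.cos(ayaw), math.sin(ayaw)
    return (ax + c * bx - s * by,
            ay + s * bx + c * by,
            az + bz,
            wrap(ayaw + byaw))

def inverse(p):
    """Inverse pose, so that compose(p, inverse(p)) is the identity."""
    x, y, z, yaw = p
    c, s = math.cos(yaw), math.sin(yaw)
    return (-(c * x + s * y), -(-s * x + c * y), -z, wrap(-yaw))

def correction_from_slam(slam_pose, odom_pose_at_same_frame):
    """Correction C such that compose(C, odom_pose) equals slam_pose."""
    return compose(slam_pose, inverse(odom_pose_at_same_frame))

def corrected_pose(correction, odom_pose_now):
    """Globally consistent estimate from the latest onboard odometry."""
    return compose(correction, odom_pose_now)

if __name__ == "__main__":
    odom_then = (1.0, 0.0, 0.0, 0.0)   # onboard estimate at a past frame
    slam_then = (1.2, 0.1, 0.0, 0.0)   # offboard SLAM estimate, same frame
    odom_now = (2.0, 0.0, 1.0, 0.0)    # latest onboard estimate
    C = correction_from_slam(slam_then, odom_then)
    print(corrected_pose(C, odom_now))
```

Keeping the correction separate from the odometry means the vehicle can keep flying on its low-latency local estimate while the slower offboard results arrive whenever they are ready.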

http://www.cs.washington.edu/ai/Mobile_Robotics/projects/rgbd-3d-mapping/

http://groups.csail.mit.edu/rrg/index.php?n=Main.VisualOdometryForGPS-DeniedFlight

http://www.cs.washington.edu/ai/Mobile_Robotics/

The goal of this research is to create dense 3D point cloud maps of building interiors using newly available inexpensive depth cameras. We use a reference design that is equivalent to the sensor in the Microsoft Kinect. These cameras provide depth information aligned with image pixels from a standard camera. We refer to this as RGB-D data (for Red, Green, Blue plus Depth).
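Because each depth value is aligned with an image pixel, a single RGB-D frame can be turned into a colored point cloud. The sketch below shows one way to do this under a standard pinhole camera model. The intrinsics `fx`, `fy`, `cx`, `cy`, the function name, and the tiny sample frame are illustrative assumptions, not calibration values or code from our system.

```python
def depth_to_points(depth, rgb, fx, fy, cx, cy):
    """Back-project an RGB-D frame into a list of (x, y, z, color) points.

    depth: 2D list of depths in meters (0 means no reading at that pixel).
    rgb:   2D list of (r, g, b) tuples with the same shape as depth.
    fx, fy, cx, cy: assumed pinhole intrinsics (focal lengths and
    principal point, in pixels).
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels where the sensor returned no depth
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z, rgb[v][u]))
    return points

if __name__ == "__main__":
    # A hypothetical 2x2 frame with one missing depth reading.
    depth = [[1.0, 0.0],
             [2.0, 2.0]]
    rgb = [[(255, 0, 0), (0, 255, 0)],
           [(0, 0, 255), (255, 255, 255)]]
    for point in depth_to_points(depth, rgb, fx=2.0, fy=2.0, cx=0.5, cy=0.5):
        print(point)
```

Building a dense map then amounts to transforming the points of each frame by that frame's estimated camera pose and merging the results.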

We have recently collaborated with a team at MIT, using a Kinect mounted on a quadrotor to localize and build 3D maps with our system, as shown in the first video. More information on this collaboration is available here.

RGB-D: Techniques and usages for Kinect style depth cameras

The RGB-D project is a joint research effort between Intel Labs Seattle and the University of Washington Department of Computer Science & Engineering. The goal of this project is to develop techniques that enable future use cases of depth cameras. Using the PrimeSense depth cameras underlying the Kinect technology, we have been working on areas ranging from 3D modeling of indoor environments and interactive projection systems to object recognition and robotic manipulation and interaction.

Below you will find a list of videos illustrating our work. More detailed technical background can be found in our research areas and at the UW Robotics and State Estimation Lab. Enjoy!

3D Modeling of Indoor Environments

Depth cameras provide a stream of color images with per-pixel depth. They can be used to generate 3D maps of indoor environments. Here we show some 3D maps built in our lab. What you see is not the raw data collected by the camera, but a walk-through of the model generated by our mapping technique. The maps are not complete; they were generated by simply carrying a depth camera through the lab and aligning the data into a globally consistent model using statistical estimation techniques.

3D indoor models could be used to automatically generate architectural drawings, to provide virtual fly-throughs for real estate, or to support remodeling and furniture shopping by inserting 3D furniture models into the map.