Manually adjusting the number of pods

- Change the replicas value in the YAML file

- Change the Deployment's pod count in the dashboard

- Use the kubectl scale command

- Use kubectl edit to edit the Deployment

kubectl edit deployment danran-nginx-deployment -n danran

kubectl scale

kubectl scale scales the number of pods running in a k8s environment up (increase) or down (decrease).

Current pod count

root@master1:~# kubectl get deployment -n danran

NAME READY UP-TO-DATE AVAILABLE AGE

danran-nginx-deployment 1/1 1 1 18h

danran-tomcat-app1-deployment-label 1/1 1 1 19h

Scale up/down

root@master1:~# kubectl scale deployment/danran-nginx-deployment --replicas=2 -n danran

deployment.apps/danran-nginx-deployment scaled

Verify the manual scaling result

root@master1:~# kubectl get deployment -n danran

NAME READY UP-TO-DATE AVAILABLE AGE

danran-nginx-deployment 2/2 2 2 18h

danran-tomcat-app1-deployment-label 1/1 1 1 19h
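
The scale-then-verify steps above can be combined into one helper. This is a minimal sketch, assuming kubectl is already configured for the cluster; the function name scale_and_wait is hypothetical, while kubectl scale and kubectl rollout status are standard subcommands.

```shell
# Scale a Deployment and block until the new replica count has rolled out.
# scale_and_wait is a hypothetical helper name, not part of kubectl.
scale_and_wait() {
  # $1 = namespace, $2 = deployment name, $3 = desired replicas
  kubectl scale "deployment/$2" --replicas="$3" -n "$1" &&
    kubectl rollout status "deployment/$2" -n "$1"
}
```

For the example in this section the call would be: scale_and_wait danran danran-nginx-deployment 2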

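Automatically scaling the number of pods with HPA

kubectl autoscale automatically controls the number of pods running in the k8s cluster (horizontal autoscaling). The replica range and the trigger condition must be set in advance.

By default the controller manager queries resource usage from the metrics source every 15s (configurable via --horizontal-pod-autoscaler-sync-period).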

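Three types of metrics are supported:

-> Predefined metrics (such as pod CPU), calculated as a utilization percentage

-> Custom pod metrics, calculated from raw values

-> Custom object metrics

Two ways of querying metrics are supported:

-> Heapster (deprecated upstream; the steps below use metrics-server instead)

-> A custom REST API

Multiple metrics can be combined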
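
For a utilization metric, the Kubernetes documentation gives the core HPA calculation as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below reproduces only that arithmetic plus clamping to the min/max range; the function name hpa_desired is hypothetical, and the real controller additionally applies a tolerance band and stabilization behaviour that are left out here.

```shell
# desired = ceil(current * currentMetric / targetMetric), clamped to [min, max].
# hpa_desired is a hypothetical name used for illustration only.
hpa_desired() {
  # $1 = current replicas, $2 = current CPU utilization (%),
  # $3 = target CPU utilization (%), $4 = min replicas, $5 = max replicas
  hpa_n=$(( ($1 * $2 + $3 - 1) / $3 ))   # integer ceiling division
  [ "$hpa_n" -lt "$4" ] && hpa_n=$4
  [ "$hpa_n" -gt "$5" ] && hpa_n=$5
  echo "$hpa_n"
}
```

With 2 replicas running at 90% CPU against a 60% target and a 2 to 20 range, hpa_desired 2 90 60 2 20 prints 3.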

Preparing metrics-server

Use metrics-server as the HPA data source (metrics-server version v0.3.6)

https://github.com/kubernetes-incubator/metrics-server

https://github.com/kubernetes-sigs/metrics-server/tree/v0.3.6

Clone the code

root@master1:/usr/local/src# git clone https://github.com/kubernetes-sigs/metrics-server.git

root@master1:/usr/local/src# ls

metrics-server-0.3.6

root@master1:/usr/local/src# cd metrics-server-0.3.6/

Prepare the image

- Google image registry

docker pull k8s.gcr.io/metrics-server-amd64:v0.3.6

- Alibaba Cloud image registry

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server-amd64:v0.3.6

Push the metrics-server-amd64:v0.3.6 image to Harbor

root@master1:~/package# docker tag mirrorgooglecontainers/metrics-server-amd64:v0.3.6 harbor.linux.com/baseimages/metrics-server-amd64:v0.3.6

root@master1:~/package# docker push harbor.linux.com/baseimages/metrics-server-amd64:v0.3.6

The push refers to repository [harbor.linux.com/baseimages/metrics-server-amd64]

7bf3709d22bb: Pushed

932da5156413: Pushed

v0.3.6: digest: sha256:c9c4e95068b51d6b33a9dccc61875df07dc650abbf4ac1a19d58b4628f89288b size: 738
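
The pull, tag, and push steps above follow the same pattern for any image that has to be mirrored into a private registry. A minimal sketch, assuming the Docker CLI is installed and already logged in to the destination registry; mirror_image is a hypothetical helper name.

```shell
# Pull an image from a public registry, retag it, and push it to a private one.
# mirror_image is a hypothetical helper name.
mirror_image() {
  # $1 = source image reference, $2 = destination image reference
  docker pull "$1" &&
    docker tag "$1" "$2" &&
    docker push "$2"
}
```

Example call: mirror_image registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server-amd64:v0.3.6 harbor.linux.com/baseimages/metrics-server-amd64:v0.3.6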

Modify the metrics-server YAML files

root@master1:/usr/local/src/metrics-server-0.3.6/deploy/1.8+# pwd

/usr/local/src/metrics-server-0.3.6/deploy/1.8+

root@master1:/usr/local/src/metrics-server-0.3.6/deploy/1.8+# ls

aggregated-metrics-reader.yaml auth-delegator.yaml auth-reader.yaml metrics-apiservice.yaml metrics-server-deployment.yaml metrics-server-service.yaml resource-reader.yaml

Change the image to the Harbor image

root@master1:/usr/local/src/metrics-server-0.3.6/deploy/1.8+# cat metrics-server-deployment.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        image: harbor.linux.com/baseimages/metrics-server-amd64:v0.3.6
        imagePullPolicy: Always
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp
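
The image change can also be scripted instead of edited by hand. The sketch below is one possible approach, assuming a manifest with a single container; set_manifest_image is a hypothetical helper name, and it rewrites every line whose key is exactly image: (so imagePullPolicy: is left alone).

```shell
# Rewrite the value of every "image:" key in a manifest, keeping indentation.
# set_manifest_image is a hypothetical helper; it assumes one container per file.
set_manifest_image() {
  # $1 = manifest path, $2 = new image reference
  smi_tmp=$(mktemp) &&
    sed -E "s|^([[:space:]]*image:[[:space:]]*).*|\1$2|" "$1" > "$smi_tmp" &&
    cat "$smi_tmp" > "$1" &&     # overwrite in place, preserving file permissions
    rm -f "$smi_tmp"
}
```

Example call: set_manifest_image metrics-server-deployment.yaml harbor.linux.com/baseimages/metrics-server-amd64:v0.3.6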

Create the pod

root@master1:/usr/local/src/metrics-server-0.3.6/deploy/1.8+# kubectl apply -f .

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

serviceaccount/metrics-server created

deployment.apps/metrics-server created

service/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

Verify that the metrics-server pod is running

root@master1:/usr/local/src/metrics-server-0.3.6/deploy/1.8+# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

danran danran-nginx-deployment-5f4c68cd88-8ghrp 1/1 Running 0 55m

danran danran-nginx-deployment-5f4c68cd88-ld4gp 1/1 Running 0 19h

danran danran-tomcat-app1-deployment-label-557cd56c58-s295s 1/1 Running 0 20h

default bash-b879cb84b-5k5t8 1/1 Running 0 23h

default busybox 1/1 Running 80 3d8h

default net-test2-565b5f575-b28kf 1/1 Running 2 3d22h

default net-test2-565b5f575-h692c 1/1 Running 2 4d

default net-test2-565b5f575-rlcwz 1/1 Running 1 4d

default net-test2-565b5f575-wwkl2 1/1 Running 1 3d22h

kube-system coredns-85bd4f9784-95qcb 1/1 Running 1 3d7h

kube-system kube-flannel-ds-amd64-4dvn9 1/1 Running 1 4d1h

kube-system kube-flannel-ds-amd64-6zk8z 1/1 Running 1 4d1h

kube-system kube-flannel-ds-amd64-d54j4 1/1 Running 1 4d1h

kube-system kube-flannel-ds-amd64-hmnsj 1/1 Running 1 3d23h

kube-system kube-flannel-ds-amd64-k52kz 1/1 Running 1 4d1h

kube-system kube-flannel-ds-amd64-q42lh 1/1 Running 2 4d1h

kube-system metrics-server-6dc4d646fb-5gf7n 1/1 Running 0 105s

kubernetes-dashboard dashboard-metrics-scraper-665dccf555-xlsm2 1/1 Running 1 3d20h

kubernetes-dashboard kubernetes-dashboard-6d489b6474-h7cqw 1/1 Running 1 3d19h

Verify metrics-server

Verify that metrics-server is collecting node data

root@master1:~# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

10.203.104.20 44m 1% 2278Mi 31%

10.203.104.21 31m 0% 777Mi 10%

10.203.104.22 45m 1% 706Mi 9%

10.203.104.26 28m 0% 488Mi 6%

10.203.104.27 26m 0% 799Mi 10%

10.203.104.28 29m 0% 538Mi 7%

Verify that metrics-server is collecting pod data

root@master1:~# kubectl top pods -n danran

NAME CPU(cores) MEMORY(bytes)

danran-nginx-deployment-5f4c68cd88-8ghrp 0m 3Mi

danran-nginx-deployment-5f4c68cd88-ld4gp 0m 4Mi

danran-tomcat-app1-deployment-label-557cd56c58-s295s 1m 265Mi
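
Right after deployment, kubectl top can fail for a short while until metrics-server has collected its first samples. A minimal sketch of a polling check, assuming kubectl is configured for the cluster; wait_for_metrics and its default values are hypothetical.

```shell
# Poll `kubectl top nodes` until the Metrics API answers or attempts run out.
# wait_for_metrics is a hypothetical helper name.
wait_for_metrics() {
  # $1 = max attempts (default 12), $2 = seconds between attempts (default 5)
  wfm_left=${1:-12}
  while [ "$wfm_left" -gt 0 ]; do
    kubectl top nodes >/dev/null 2>&1 && return 0
    wfm_left=$((wfm_left - 1))
    sleep "${2:-5}"
  done
  return 1
}
```

The function returns 0 as soon as one query succeeds, so it can gate later steps: wait_for_metrics && kubectl top pods -n danran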

Modify the controller-manager startup parameters

Configure autoscaling from the command line

The Deployment to autoscale is danran-tomcat-app1-deployment-label

root@master1:~# kubectl get deployment -n danran

NAME READY UP-TO-DATE AVAILABLE AGE

danran-nginx-deployment 2/2 2 2 20h

danran-tomcat-app1-deployment-label 1/1 1 1 20h

Configure autoscaling with kubectl autoscale

root@master1:~# kubectl autoscale deployment/danran-tomcat-app1-deployment-label --min=2 --max=10 --cpu-percent=80 -n danran

horizontalpodautoscaler.autoscaling/danran-tomcat-app1-podautoscalor created
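
A CPU utilization target is a percentage of the containers' CPU requests, so the HPA cannot compute it for pods that declare no request. The sketch below prints the requests of a Deployment so this can be checked before autoscaling; cpu_requests is a hypothetical helper name, and an empty result means no container sets a CPU request.

```shell
# Print the CPU requests of every container in a Deployment's pod template.
# cpu_requests is a hypothetical helper name.
cpu_requests() {
  # $1 = namespace, $2 = deployment name
  kubectl get deployment "$2" -n "$1" \
    -o jsonpath='{.spec.template.spec.containers[*].resources.requests.cpu}'
}
```

Example call: cpu_requests danran danran-tomcat-app1-deployment-label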

Verify

root@master1:~# kubectl describe deployment/danran-tomcat-app1-deployment-label -n danran

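desired: the number of replicas that should ultimately be in the READY state

updated: the number of replicas that have finished updating

total: the total number of replicas

available: the number of replicas currently available

unavailable: the number of unavailable replicas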

Defining the autoscaling configuration in a YAML file

The Deployment to autoscale is danran-tomcat-app1-deployment-label

root@master1:~# kubectl get deployment -n danran

NAME READY UP-TO-DATE AVAILABLE AGE

danran-nginx-deployment 2/2 2 2 20h

danran-tomcat-app1-deployment-label 1/1 1 1 20h

Define an hpa.yaml file in the same directory as the tomcat YAML file

root@master1:/opt/data/yaml/danran/tomcat-app1# ls

hpa.yaml tomcat-app1.yaml

root@master1:/opt/data/yaml/danran/tomcat-app1# cat hpa.yaml

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  namespace: danran
  name: danran-tomcat-app1-podautoscalor
  labels:
    app: danran-tomcat-app1
    version: v2beta1
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: danran-tomcat-app1-deployment-label
  minReplicas: 2
  maxReplicas: 20
  targetCPUUtilizationPercentage: 60

Apply the HPA

root@master1:/opt/data/yaml/danran/tomcat-app1# kubectl apply -f hpa.yaml

horizontalpodautoscaler.autoscaling/danran-tomcat-app1-podautoscalor created

Verify the HPA

Before scaling

root@master1:~# kubectl get deployment -n danran

NAME READY UP-TO-DATE AVAILABLE AGE

danran-nginx-deployment 2/2 2 2 20h

danran-tomcat-app1-deployment-label 1/1 1 1 20h

After scaling

root@master1:~# kubectl get deployment -n danran

NAME READY UP-TO-DATE AVAILABLE AGE

danran-nginx-deployment 2/2 2 2 20h

danran-tomcat-app1-deployment-label 2/2 2 2 20h

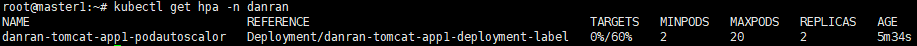

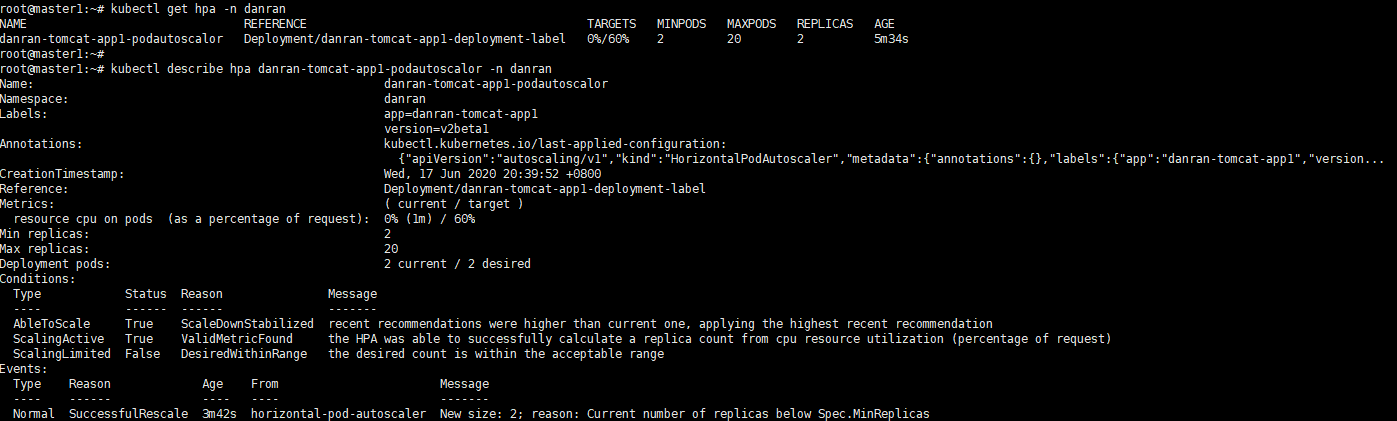

Check the HPA status

root@master1:~# kubectl get hpa -n danran

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

danran-tomcat-app1-podautoscalor Deployment/danran-tomcat-app1-deployment-label 0%/60% 2 20 2 3m31s

root@master1:~# kubectl describe hpa danran-tomcat-app1-podautoscalor -n danran

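Modifying resources on the fly with kubectl edit

Used when a configuration change is temporary and must take effect immediately

Get the Deployment whose resources will be adjusted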

root@master1:~# kubectl get deployment -n danran

NAME READY UP-TO-DATE AVAILABLE AGE

danran-nginx-deployment 2/2 2 2 21h

danran-tomcat-app1-deployment-label 4/4 4 4 22h

Change the Deployment's replicas count

root@master1:~# kubectl edit deployment danran-tomcat-app1-deployment-label -n danran

deployment.apps/danran-tomcat-app1-deployment-label edited

Verify that the replica count was updated

root@master1:~# kubectl get deployment -n danran

NAME READY UP-TO-DATE AVAILABLE AGE

danran-nginx-deployment 2/2 2 2 21h

danran-tomcat-app1-deployment-label 3/3 3 3 22h

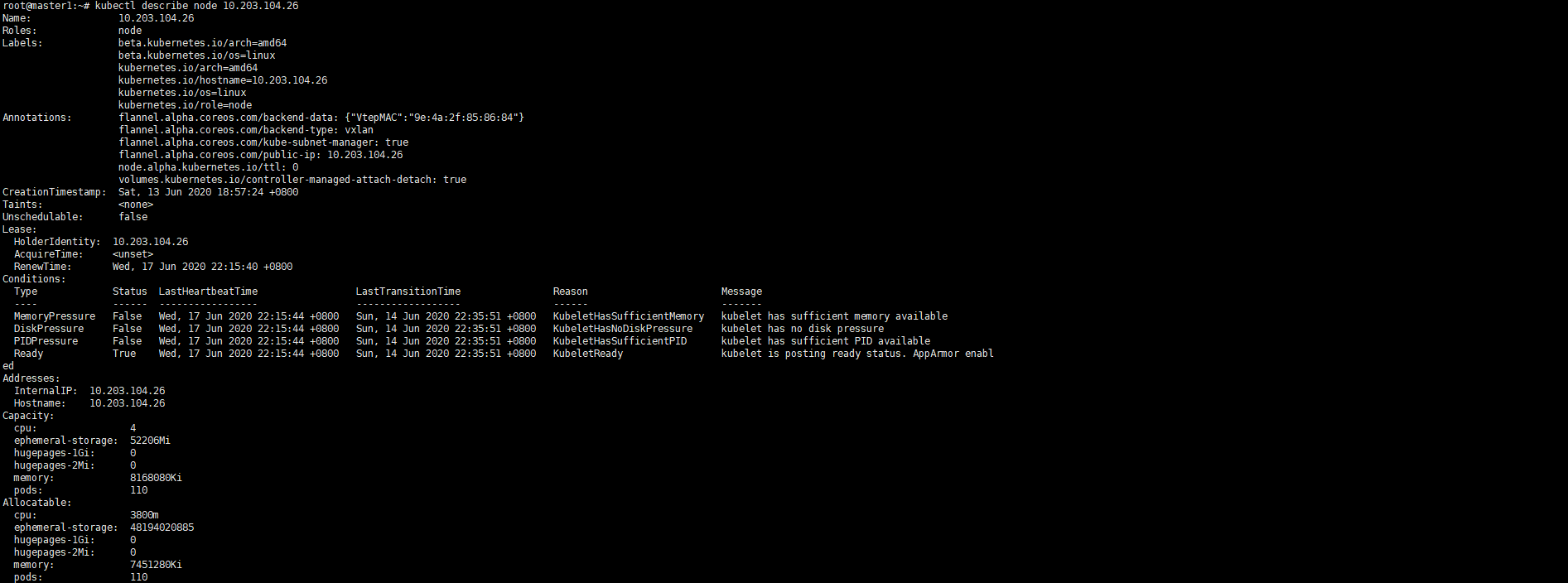
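
kubectl edit opens an interactive editor, which is awkward in scripts. A non-interactive way to make the same change is kubectl patch. This is a minimal sketch, assuming kubectl is configured for the cluster; set_replicas is a hypothetical helper name. If an HPA targets the same Deployment, it may later adjust the replica count again.

```shell
# Set spec.replicas on a Deployment without opening an editor.
# set_replicas is a hypothetical helper name.
set_replicas() {
  # $1 = namespace, $2 = deployment name, $3 = replicas
  kubectl patch deployment "$2" -n "$1" \
    -p "{\"spec\":{\"replicas\":$3}}"
}
```

Example call: set_replicas danran danran-tomcat-app1-deployment-label 3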

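Defining node labels

A label is a key-value pair. When a pod that specifies a nodeSelector is created, the scheduler looks for nodes carrying the matching label and places the pod only on those nodes.

View the current node labels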

root@master1:~# kubectl describe node 10.203.104.26

Name: 10.203.104.26

Roles: node

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=10.203.104.26

kubernetes.io/os=linux

kubernetes.io/role=node

Annotations: flannel.alpha.coreos.com/backend-data: {"VtepMAC":"9e:4a:2f:85:86:84"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

flannel.alpha.coreos.com/public-ip: 10.203.104.26

node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Sat, 13 Jun 2020 18:57:24 +0800

Taints: <none>

Unschedulable: false

Lease:

HolderIdentity: 10.203.104.26

AcquireTime: <unset>

RenewTime: Wed, 17 Jun 2020 22:15:40 +0800

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

MemoryPressure False Wed, 17 Jun 2020 22:15:44 +0800 Sun, 14 Jun 2020 22:35:51 +0800 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Wed, 17 Jun 2020 22:15:44 +0800 Sun, 14 Jun 2020 22:35:51 +0800 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Wed, 17 Jun 2020 22:15:44 +0800 Sun, 14 Jun 2020 22:35:51 +0800 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Wed, 17 Jun 2020 22:15:44 +0800 Sun, 14 Jun 2020 22:35:51 +0800 KubeletReady kubelet is posting ready status. AppArmor enabled

Addresses:

InternalIP: 10.203.104.26

Hostname: 10.203.104.26

Capacity:

cpu: 4

ephemeral-storage: 52206Mi

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 8168080Ki

pods: 110

Allocatable:

cpu: 3800m

ephemeral-storage: 48194020885

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 7451280Ki

pods: 110

System Info:

Machine ID: 202eb555a5e147b4a4a683e1b0869d19

System UUID: 1BA73542-2622-4635-CDFF-A19A2B87390B

Boot ID: 57bcbc34-a662-477d-a994-1d4986ede5c8

Kernel Version: 4.15.0-106-generic

OS Image: Ubuntu 18.04.4 LTS

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://19.3.8

Kubelet Version: v1.17.4

Kube-Proxy Version: v1.17.4

PodCIDR: 10.20.3.0/24

PodCIDRs: 10.20.3.0/24

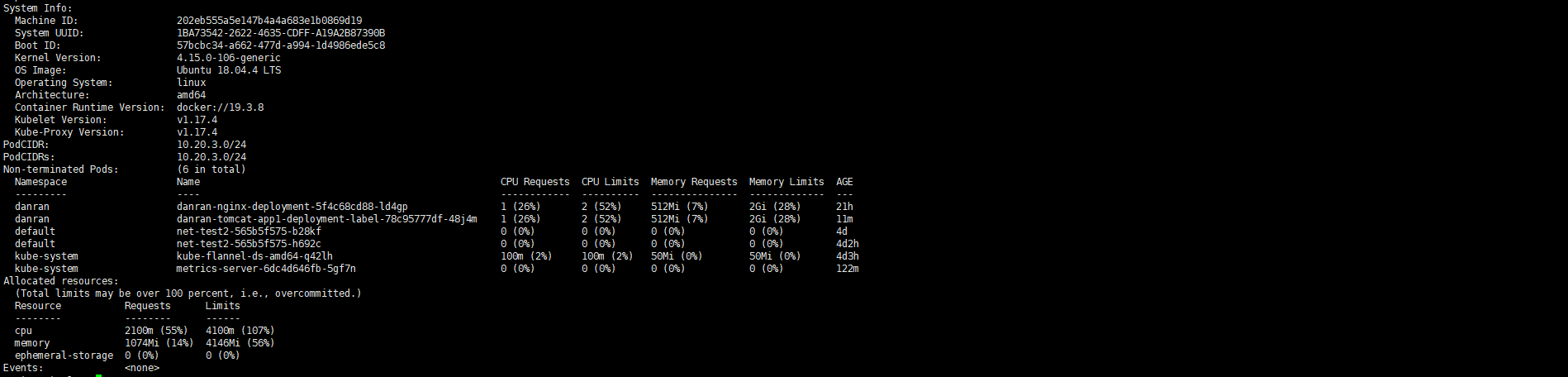

Non-terminated Pods: (6 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits AGE

--------- ---- ------------ ---------- --------------- ------------- ---

danran danran-nginx-deployment-5f4c68cd88-ld4gp 1 (26%) 2 (52%) 512Mi (7%) 2Gi (28%) 21h

danran danran-tomcat-app1-deployment-label-78c95777df-48j4m 1 (26%) 2 (52%) 512Mi (7%) 2Gi (28%) 11m

default net-test2-565b5f575-b28kf 0 (0%) 0 (0%) 0 (0%) 0 (0%) 4d

default net-test2-565b5f575-h692c 0 (0%) 0 (0%) 0 (0%) 0 (0%) 4d2h

kube-system kube-flannel-ds-amd64-q42lh 100m (2%) 100m (2%) 50Mi (0%) 50Mi (0%) 4d3h

kube-system metrics-server-6dc4d646fb-5gf7n 0 (0%) 0 (0%) 0 (0%) 0 (0%) 122m

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 2100m (55%) 4100m (107%)

memory 1074Mi (14%) 4146Mi (56%)

ephemeral-storage 0 (0%) 0 (0%)

Events: <none>

Adding a custom node label and verifying it

Add the label project=jevon

root@master1:~# kubectl label node 10.203.104.27 project=jevon

node/10.203.104.27 labeled

Add the label project=danran

root@master1:~# kubectl label node 10.203.104.26 project=danran

node/10.203.104.26 labeled

Verify the project=danran label

root@master1:~# kubectl describe node 10.203.104.26

Name: 10.203.104.26

Roles: node

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=10.203.104.26

kubernetes.io/os=linux

kubernetes.io/role=node

project=danran

Annotations: flannel.alpha.coreos.com/backend-data: {"VtepMAC":"9e:4a:2f:85:86:84"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

flannel.alpha.coreos.com/public-ip: 10.203.104.26

node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true

Referencing the node label in YAML

At the end of the Deployment's pod template spec, add a nodeSelector field with project: danran to select nodes labeled project=danran

root@master1:/opt/data/yaml/danran/tomcat-app1# cat tomcat-app1.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: danran-tomcat-app1-deployment-label
  name: danran-tomcat-app1-deployment-label
  namespace: danran
spec:
  replicas: 1
  selector:
    matchLabels:
      app: danran-tomcat-app1-selector
  template:
    metadata:
      labels:
        app: danran-tomcat-app1-selector
    spec:
      containers:
      - name: danran-tomcat-app1-container
        image: harbor.linux.com/danran/tomcat-app1:v2
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        resources:
          limits:
            cpu: 2
            memory: 2Gi
          requests:
            cpu: 1
            memory: 512Mi
        volumeMounts:
        - name: danran-images
          mountPath: /usr/local/nginx/html/webapp/images
          readOnly: false
        - name: danran-static
          mountPath: /usr/local/nginx/html/webapp/static
          readOnly: false
      volumes:
      - name: danran-images
        nfs:
          server: 10.203.104.30
          path: /data/danran/images
      - name: danran-static
        nfs:
          server: 10.203.104.30
          path: /data/danran/static
      nodeSelector:
        project: danran
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: danran-tomcat-app1-service-label
  name: danran-tomcat-app1-service
  namespace: danran
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 40004
  selector:
    app: danran-tomcat-app1-selector

Apply the YAML file

root@master1:/opt/data/yaml/danran/tomcat-app1# kubectl apply -f tomcat-app1.yaml

deployment.apps/danran-tomcat-app1-deployment-label configured

service/danran-tomcat-app1-service unchanged

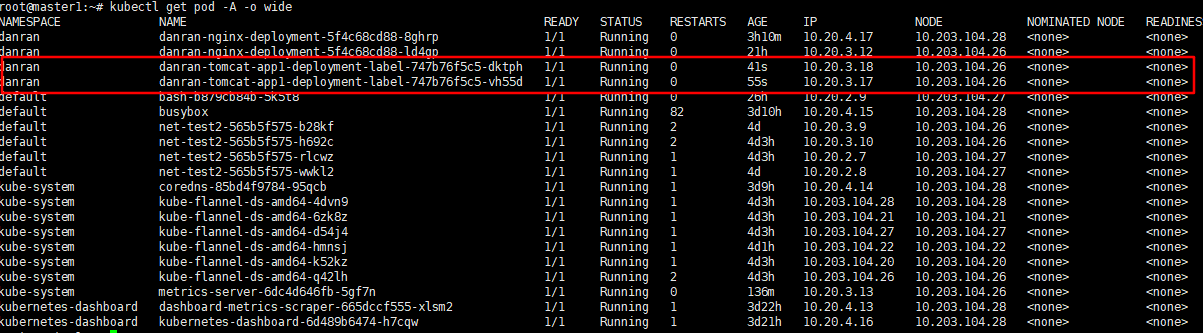

Verify that all tomcat pods are on the node labeled project=danran

root@master1:~# kubectl get pod -A -o wide
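
Reading the NODE column by eye works for a few pods. For a larger Deployment the comparison can be scripted. The sketch below is one possible check, assuming kubectl is configured for the cluster; pods_off_label is a hypothetical helper name. It prints each pod matching a selector that runs on a node without the given node label, so no output means the nodeSelector took effect.

```shell
# Print "pod node" for every selected pod that is NOT on a node carrying the
# given node label. pods_off_label is a hypothetical helper name.
pods_off_label() {
  # $1 = namespace, $2 = pod label selector, $3 = node label (key=value)
  pol_nodes=$(kubectl get nodes -l "$3" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}')
  kubectl get pods -n "$1" -l "$2" \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.nodeName}{"\n"}{end}' |
  while read -r pol_pod pol_node; do
    echo "$pol_nodes" | grep -qxF "$pol_node" || echo "$pol_pod $pol_node"
  done
}
```

Example call: pods_off_label danran app=danran-tomcat-app1-selector project=danran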

Removing the custom node label

Remove the custom project label

root@master1:~# kubectl label nodes 10.203.104.26 project-

node/10.203.104.26 labeled

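Upgrading and rolling back the application image version

Within a given Deployment, use kubectl set image to point a container at a new image:tag and thereby roll out updated code.

Build three versions of the nginx image: deploy v1 first, then upgrade step by step to v2 and v3 to test image upgrade and rollback.

Upgrading the image to a specific version

v1

Deploy v1; --record=true records the kubectl command that was executed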

root@master1:/opt/data/yaml/danran/tomcat-app1# kubectl apply -f tomcat-app1.yaml --record=true

deployment.apps/danran-tomcat-app1-deployment-label created

service/danran-tomcat-app1-service created

Upgrade to v5

The container name is danran-tomcat-app1-container; it can be found in tomcat-app1.yaml

root@master1:~# kubectl set image deployment/danran-tomcat-app1-deployment-label danran-tomcat-app1-container=harbor.linux.com/danran/tomcat-app1:v5 -n danran

deployment.apps/danran-tomcat-app1-deployment-label image updated
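
kubectl set image returns as soon as the Deployment spec is updated, before the new pods are ready. A minimal sketch that also waits for the rollout to finish, assuming kubectl is configured for the cluster; upgrade_image and the 120s timeout are hypothetical choices.

```shell
# Update one container's image and wait until the rollout completes.
# upgrade_image is a hypothetical helper name; the timeout is an arbitrary example.
upgrade_image() {
  # $1 = namespace, $2 = deployment, $3 = container name, $4 = new image
  kubectl set image "deployment/$2" "$3=$4" -n "$1" &&
    kubectl rollout status "deployment/$2" -n "$1" --timeout=120s
}
```

Example call: upgrade_image danran danran-tomcat-app1-deployment-label danran-tomcat-app1-container harbor.linux.com/danran/tomcat-app1:v5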

View the revision history

root@master1:~# kubectl rollout history deployment danran-tomcat-app1-deployment-label -n danran

deployment.apps/danran-tomcat-app1-deployment-label

REVISION CHANGE-CAUSE

2 kubectl apply --filename=tomcat-app1.yaml --record=true

3 kubectl apply --filename=tomcat-app1.yaml --record=true

4 kubectl apply --filename=tomcat-app1.yaml --record=true

Roll back to the previous revision

Roll the Deployment danran-tomcat-app1-deployment-label back to its previous revision

root@master1:~# kubectl rollout undo deployment danran-tomcat-app1-deployment-label -n danran

deployment.apps/danran-tomcat-app1-deployment-label rolled back

Roll back to a specific revision

Find the revision to roll back to

root@master1:~# kubectl rollout history deployment danran-tomcat-app1-deployment-label -n danran

deployment.apps/danran-tomcat-app1-deployment-label

REVISION CHANGE-CAUSE

2 kubectl apply --filename=tomcat-app1.yaml --record=true

3 kubectl apply --filename=tomcat-app1.yaml --record=true

4 kubectl apply --filename=tomcat-app1.yaml --record=true

Roll back to REVISION 2

root@master1:~# kubectl rollout undo deployment danran-tomcat-app1-deployment-label --to-revision=2 -n danran

deployment.apps/danran-tomcat-app1-deployment-label rolled back

Revision history after the rollback

root@master1:~# kubectl rollout history deployment danran-tomcat-app1-deployment-label -n danran

deployment.apps/danran-tomcat-app1-deployment-label

REVISION CHANGE-CAUSE

3 kubectl apply --filename=tomcat-app1.yaml --record=true

4 kubectl apply --filename=tomcat-app1.yaml --record=true

5 kubectl apply --filename=tomcat-app1.yaml --record=true
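
In the history above, revision 2 disappears after the rollback and reappears as revision 5, because a rollback re-applies the old pod template as the newest revision. Since every CHANGE-CAUSE here is identical, it can help to inspect a revision before rolling back to it. A minimal sketch, assuming kubectl is configured for the cluster; rollback_to is a hypothetical helper name.

```shell
# Show a revision's pod template, roll back to it, then wait for the rollout.
# rollback_to is a hypothetical helper name.
rollback_to() {
  # $1 = namespace, $2 = deployment, $3 = revision number
  kubectl rollout history deployment "$2" -n "$1" --revision="$3" &&
    kubectl rollout undo deployment "$2" -n "$1" --to-revision="$3" &&
    kubectl rollout status deployment "$2" -n "$1"
}
```

Example call: rollback_to danran danran-tomcat-app1-deployment-label 2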

Cordoning a node so that it is excluded from scheduling

Exclude 10.203.104.27 from pod scheduling

root@master1:~# kubectl cordon 10.203.104.27

node/10.203.104.27 cordoned

root@master1:~# kubectl get node

NAME STATUS ROLES AGE VERSION

10.203.104.20 Ready,SchedulingDisabled master 4d5h v1.17.4

10.203.104.21 Ready,SchedulingDisabled master 4d5h v1.17.4

10.203.104.22 Ready,SchedulingDisabled master 4d3h v1.17.4

10.203.104.26 Ready node 4d5h v1.17.4

10.203.104.27 Ready,SchedulingDisabled node 4d5h v1.17.4

10.203.104.28 Ready node 4d5h v1.17.4

Allow 10.203.104.27 to take part in pod scheduling again

root@master1:~# kubectl uncordon 10.203.104.27

node/10.203.104.27 uncordoned

root@master1:~# kubectl get node

NAME STATUS ROLES AGE VERSION

10.203.104.20 Ready,SchedulingDisabled master 4d5h v1.17.4

10.203.104.21 Ready,SchedulingDisabled master 4d5h v1.17.4

10.203.104.22 Ready,SchedulingDisabled master 4d3h v1.17.4

10.203.104.26 Ready node 4d5h v1.17.4

10.203.104.27 Ready node 4d5h v1.17.4

10.203.104.28 Ready node 4d5h v1.17.4
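
cordon only stops new pods from being scheduled; pods already on the node keep running. For node maintenance, a common extension (not part of the steps above) is to follow it with kubectl drain, which evicts the existing pods. A minimal sketch with hypothetical helper names; drain can refuse to proceed for some pods, for example unmanaged pods or pods using emptyDir volumes, unless extra flags are passed.

```shell
# Take a node out of service for maintenance and put it back afterwards.
# Both function names are hypothetical.
node_maintenance_begin() {
  # $1 = node name
  kubectl cordon "$1" &&
    kubectl drain "$1" --ignore-daemonsets   # DaemonSet pods stay on the node
}

node_maintenance_end() {
  # $1 = node name
  kubectl uncordon "$1"
}
```

Example: node_maintenance_begin 10.203.104.27, perform the maintenance, then node_maintenance_end 10.203.104.27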

Deleting a pod through etcd

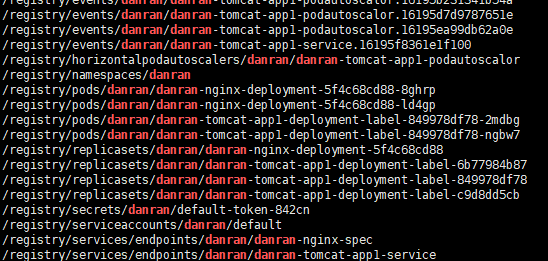

List the data related to the namespace

root@etcd1:~# etcdctl get /registry/ --prefix --keys-only | grep danran

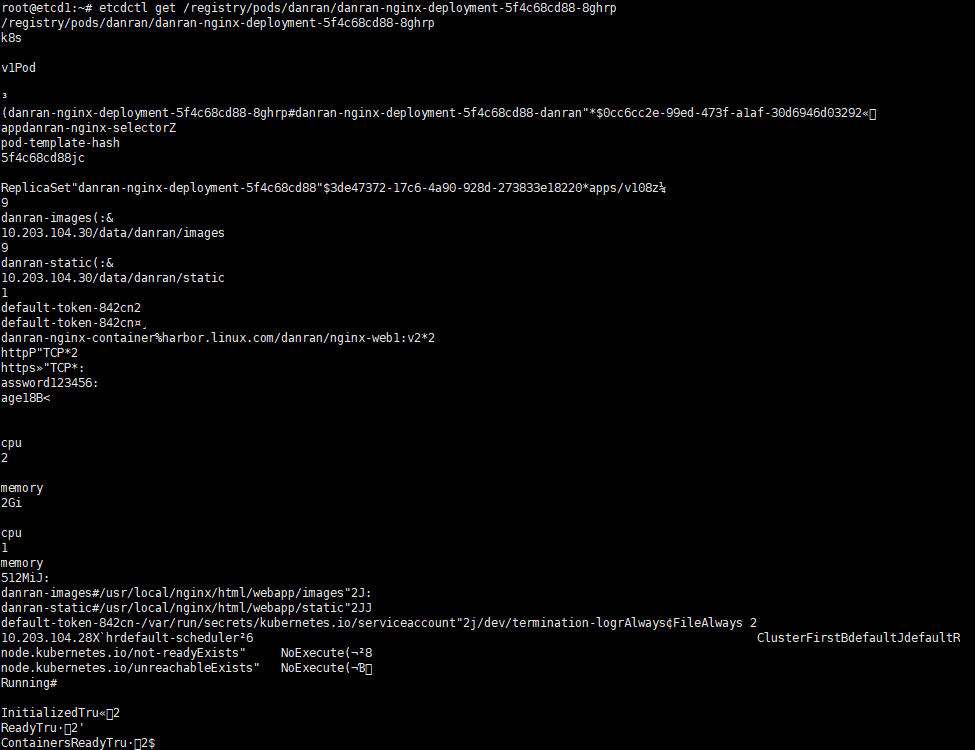

View the data of a specific object in etcd

root@etcd1:~# etcdctl get /registry/pods/danran/danran-nginx-deployment-5f4c68cd88-8ghrp

Delete a specific resource from etcd

Delete the pod danran-nginx-deployment-5f4c68cd88-8ghrp; a return value of 1 means the deletion succeeded

root@etcd1:~# etcdctl del /registry/pods/danran/danran-nginx-deployment-5f4c68cd88-8ghrp

1
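
The keys used above follow the pattern /registry/pods/<namespace>/<pod-name>, so a prefix query can replace the grep over all of /registry/. A minimal sketch, assuming etcdctl speaks the v3 API and already has its endpoint and certificate settings configured; etcd_pod_keys is a hypothetical helper name. Deleting keys directly bypasses the API server, so kubectl delete pod is normally the safer route, and a pod owned by a Deployment is likely to be recreated by its controller either way.

```shell
# List the etcd keys of all pods in one namespace, one key per line.
# etcd_pod_keys is a hypothetical helper name.
etcd_pod_keys() {
  # $1 = namespace
  # --keys-only prints a blank line after each key; grep drops those.
  etcdctl get "/registry/pods/$1/" --prefix --keys-only | grep -v '^$'
}
```

Example call: etcd_pod_keys danran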