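Over the National Day holiday I had some free time, so I helped someone write a crawler that downloads the dynamically loaded photos of Taobao models (淘女郎) and also collects the models' profile data. The crawler took three days to write. Because the images are loaded asynchronously, the crawl is fairly complex; in the end I studied how the request URLs change and constructed new URLs to keep the crawl going. It is really slow, though (CPU utilization shows plenty of unused capacity), so I plan to add multithreading. To be honest, that is not easy to bolt on, and it is giving me a bit of a headache.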
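As a minimal sketch of the URL-construction idea: the endpoint and the query parameter names below are the ones this crawler requests, but the helper name `build_photo_list_url`, the album ID in the usage line, and the assumption that changing `page` walks through an album are illustrative only and have not been checked against the site.

```python
from urllib.parse import urlencode

# Endpoint and parameter names as requested by the crawler in this post.
PHOTO_LIST_URL = 'https://mm.taobao.com/album/json/get_album_photo_list.htm'


def build_photo_list_url(user_id, album_id, page=0):
    """Build the photo-list URL for one album page (hypothetical helper)."""
    query = urlencode({
        'user_id': user_id,
        'album_id': album_id,
        'top_pic_id': 0,
        'page': page,  # assumption: changing this value selects another page
    })
    return PHOTO_LIST_URL + '?' + query


if __name__ == '__main__':
    # 12345678 is a made-up album ID, used only to show the URL shape.
    for page in range(3):
        print(build_photo_list_url(277949921, 12345678, page))
```

Building the query with `urlencode` rather than string formatting keeps the parameters in one place and escapes any unusual characters automatically.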

    # -*- coding: utf-8 -*-
    import json
    import os
    import re
    import time
    import urllib.error
    import urllib.request

    import requests
    import xlwt
    from lxml import etree

    # Model search page, filtered to Guangzhou
    url = 'https://mm.taobao.com/search_tstar_model.htm?spm=5679.126488.640745.2.38a457c5mEWpPl&style=&place=city%3A%E5%B9%BF%E5%B7%9E'
    # JSON endpoint behind the search page
    url1 = 'https://mm.taobao.com/tstar/search/tstar_model.do?_input_charset=utf-8'


    class tn(object):

        # Browser-like headers; the cookie comes from a logged-in session
        headers = {
            'user-agent': 'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36',
            'cookie': 'thw=cn; hng=CN%7Czh-CN%7CCNY%7C156; x=e%3D1%26p%3D*%26s%3D0%26c%3D0%26f%3D0%26g%3D0%26t%3D0%26__ll%3D-1%26_ato%3D0; linezing_session=dTr7U8KOxddDd4CJ4VFihDPH_1506516599348AOqB_1; v=0; _tb_token_=5b9375847e363; _m_h5_tk=4efc0ba8d72376fa1968a3f0a92f0eef_1506518851245; _m_h5_tk_enc=e10a67c79b8a47f97dd3134779acfdfe; uc3=sg2=URsQfTD%2BFY9mkKOl%2FNBXqNFPPUNKq8HjGx%2Bair7O99U%3D&nk2=UoCKEw%2B1myb2u1mo&id2=UoCJiFOLhjN6OQ%3D%3D&vt3=F8dBzWk7FANQZ7%2B830Y%3D&lg2=U%2BGCWk%2F75gdr5Q%3D%3D; existShop=MTUwNjk0NjQwMQ%3D%3D; uss=VFcwj3YmzKmO6xkbkJFH%2FN%2FOd2CPNJzRBWBygIM3IKKXIgbm1DSeGb87; lgc=1132771621aa; tracknick=1132771621aa; cookie2=11aae79de97ae344158e4aa965c7003c; sg=a2d; cookie1=Aihx9FxoyUYIE7uEPgeqstl%2B5uvfGslyiCQ%2FpePYriI%3D; unb=1100473042; skt=11ea4b0360e50e08; t=b63e6968872da200706b694d67c62883; _cc_=UtASsssmfA%3D%3D; tg=0; _l_g_=Ug%3D%3D; _nk_=1132771621aa; cookie17=UoCJiFOLhjN6OQ%3D%3D; cna=1/K/EZz4HDECAXhVTdivCBle; mt=ci=45_1; isg=Anx8i770Cd1_Zz2Ede24lLr7TRqCdCGCcQXP_1b95GdKIR2rfoTZL77ZdX-i; JSESSIONID=F34B74BB5A7A0A1BF96E8B3F2C02DE87; uc1=cookie14=UoTcCfmfxB%2Fd7g%3D%3D&lng=zh_CN&cookie16=WqG3DMC9UpAPBHGz5QBErFxlCA%3D%3D&existShop=false&cookie21=U%2BGCWk%2F7owY3i1vB1W2BgQ%3D%3D&tag=8&cookie15=UtASsssmOIJ0bQ%3D%3D&pas=0',
        }

        def getUrlinfo(self, page):
            """Fetch one page of the model list as (name, height, weight, city, userId) tuples."""
            datas = []
            pageurl = 'https://mm.taobao.com/tstar/search/tstar_model.do?_input_charset=utf-8'
            data = {
                'q': '',
                'viewFlag': 'A',
                'sortType': 'default',
                'searchStyle': '',
                'searchRegion': 'city:',
                'searchFansNum': '',
                'currentPage': str(page),
                'pageSize': '100',
            }
            reqs = None
            for attempt in range(5):  # bounded retries instead of looping forever
                time.sleep(1)
                try:
                    reqs = requests.post(pageurl, data=data, headers=self.headers, timeout=5)
                except requests.RequestException as e:
                    print('error:', e)
                    continue
                if reqs.status_code == 200:
                    break
                print('failed')
            if reqs is None or reqs.status_code != 200:
                return datas  # nothing was fetched, so return an empty list
            dictx = json.loads(reqs.text)
            for i in dictx['data']['searchDOList']:
                # userId goes last; getImages reads it as index 4
                datas.append((i['realName'], i['height'], i['weight'], i['city'], i['userId']))
            return datas  # the model profile records

        def getImages(self, rs):
            """Download every album photo for each model record in rs."""
            # Raw string: in a normal string, '\t' in this path would become a tab
            base_dir = r'D:\SpiderProject\ZhiHu\taonvlang\img'
            album_id = re.compile(r'album_id=\d+')  # extracts the album_id query parameter
            a = 0  # running image counter, used as the file name
            for info in rs:
                name, user_id = str(info[0]), info[4]
                os.makedirs(os.path.join(base_dir, name), exist_ok=True)
                imagurl = 'https://mm.taobao.com/self/album/open_album_list.htm?_charset=utf-8&user_id%20=' + str(user_id)
                try:
                    html1 = requests.get(imagurl, headers=self.headers, timeout=5)
                except requests.RequestException as e:
                    print('error:', e)
                    continue  # skip this model if the album list cannot be fetched
                urls = etree.HTML(html1.text)
                imagesurl = urls.xpath('//a[@class="mm-first"]/@href')  # album URLs for this model
                result = album_id.findall(str(imagesurl))

                for im in result:
                    # im already has the form 'album_id=<digits>'
                    pturl = 'https://mm.taobao.com/album/json/get_album_photo_list.htm?user_id=%s&%s&top_pic_id=0&page=0' % (user_id, im)
                    time.sleep(1)
                    try:
                        html5 = requests.get(pturl, headers=self.headers, timeout=5)  # JSON list of photo URLs
                        print('Fetched the photo-list JSON')
                        pic = json.loads(html5.text)['picList']
                    except (requests.RequestException, ValueError, KeyError) as e:
                        print('Error:', e)
                        continue  # skip this album

                    for ius in pic:
                        a += 1
                        imurl = 'http:' + str(ius['picUrl'])
                        filename = os.path.join(base_dir, name, str(a) + '.jpg')
                        print('Downloading image')
                        try:
                            urllib.request.urlretrieve(imurl, filename)
                        except urllib.error.HTTPError as e:
                            print('Error:', e)

        def getInfophone(self):
            """Scrape the contact text from one model's profile page (the userId is hard-coded)."""
            userurl = 'https://mm.taobao.com/self/aiShow.htm?spm=719.7763510.1998643336.1.6WJFuT&userId=277949921'
            html = requests.get(userurl, headers=self.headers, timeout=5)
            html.encoding = 'GBK'  # decode the response as GBK
            print(html.encoding)
            selector = etree.HTML(html.text)
            # Take the text of both candidate nodes, not the <strong> element itself
            phone = selector.xpath('//strong[@style="font-family: simhei;color: #000000;font-size: 24.0px;line-height: 1.5;"]/text()|//span[@style="font-size: 24.0px;"]/text()')
            return phone

        def saveInfo(self, p):
            """Write the model records to an .xls workbook, one row per model."""
            workbook = xlwt.Workbook(encoding='utf-8')
            worksheet = workbook.add_sheet('My Worksheet')
            for col, label in enumerate(['Name', 'Height', 'Weight', 'City']):
                worksheet.write(0, col, label=label)
            # Rows start at 1, below the header; each record is (name, height, weight, city, userId)
            for row, names in enumerate(p, start=1):
                for col in range(4):
                    worksheet.write(row, col, label=str(names[col]))
            workbook.save('Excel_Workbook.xls')

    if __name__ == "__main__":
        t = tn()
        for ii in range(1):  # only the first result page for now
            rs = t.getUrlinfo(ii)
            # print(rs)
            t.getImages(rs)
            # t.saveInfo(rs)
            # t.getInfophone()
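For the multithreading mentioned at the top, one possible starting point is sketched below. It uses the standard library's `concurrent.futures` rather than the `threadpool` package the script imports, and it assumes the image URLs and target file names are collected into a list first instead of being downloaded inline in `getImages`. The name `download_all` and its parameters are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def download_all(jobs, fetch, max_workers=8):
    """Run fetch(url, filename) for every (url, filename) job on a thread pool.

    Returns a list of (url, error) pairs for the jobs that raised.
    """
    failures = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Map each future back to its URL so failures can be reported.
        futures = {pool.submit(fetch, url, filename): url for url, filename in jobs}
        for future in as_completed(futures):
            try:
                future.result()  # re-raises any exception from the worker thread
            except Exception as e:
                failures.append((futures[future], e))
    return failures
```

With a list of `(imurl, filename)` pairs, the call would look like `download_all(jobs, urllib.request.urlretrieve)`. Downloads spend most of their time waiting on the network, so threads can overlap that waiting even though only one thread runs Python code at a time. The one-second `time.sleep` calls between requests would still limit how fast the album lists themselves are fetched.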

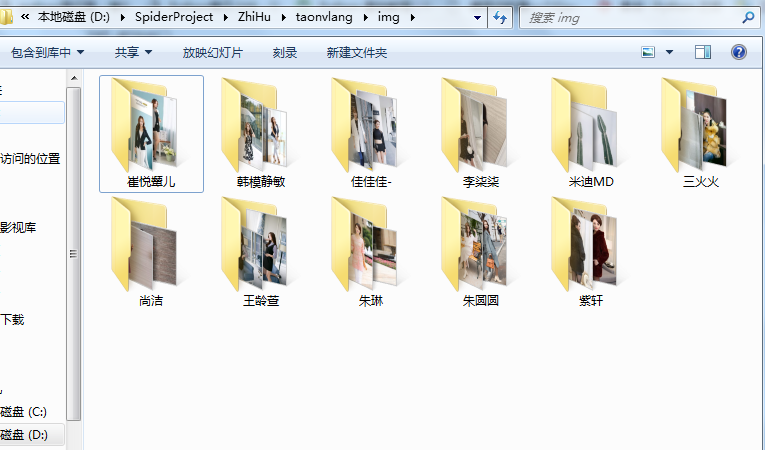

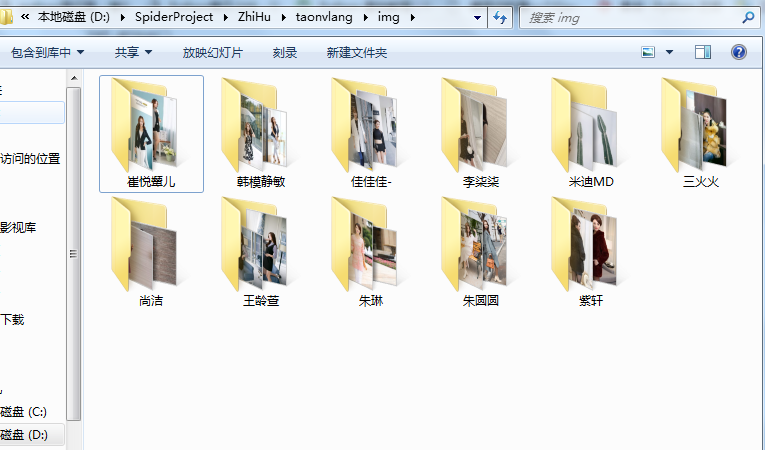

Screenshots of the code running are below.