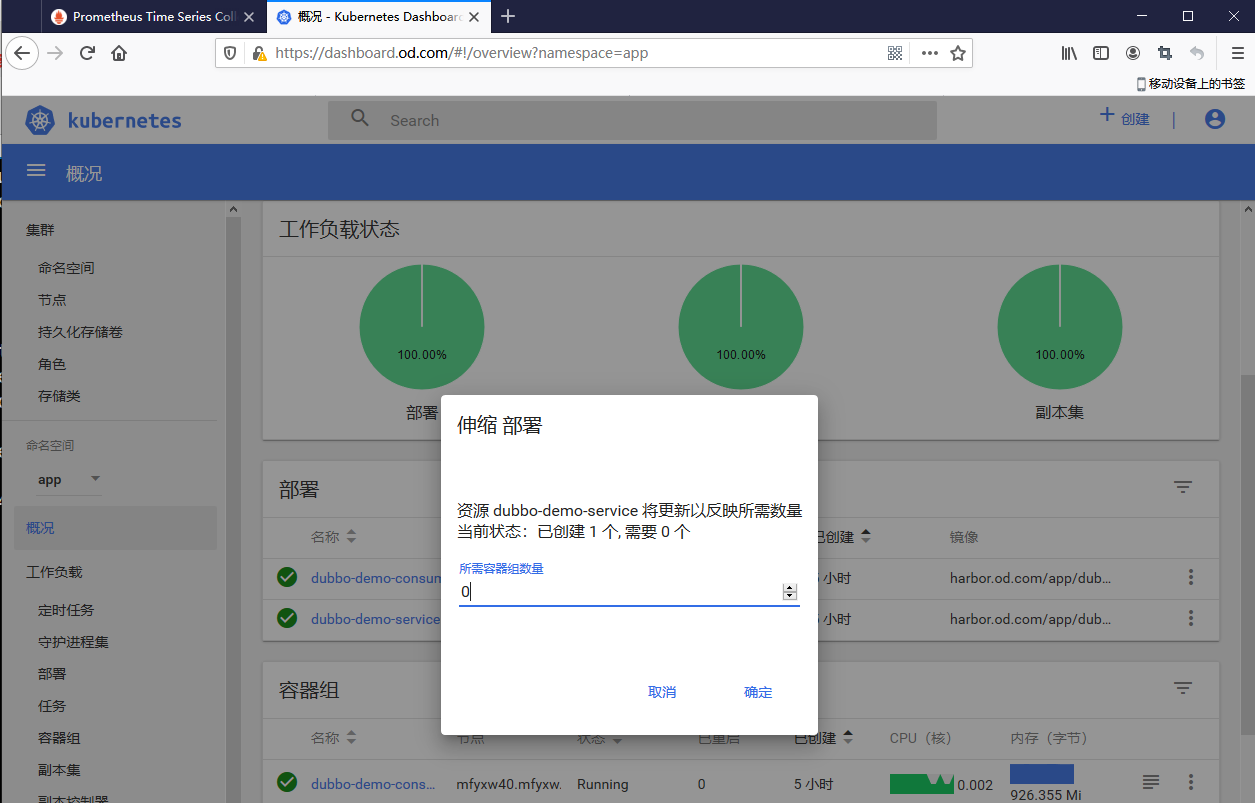

Prometheus architecture diagram

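Common images

| Image | Description |
|---|---|
| kube-state-metrics | Monitoring agent that collects basic state information about Kubernetes objects |
| node-exporter | Collects basic host information from the Kubernetes compute nodes; must be deployed on every compute node |
| cadvisor | Key tool for monitoring the resources used inside containers |
| blackbox-exporter | Probes whether your application containers are alive |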

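The new-generation container cloud monitoring platform: P+G (Prometheus + Grafana)

| Component | Role |
|---|---|
| Exporters (can be custom-developed) | Expose an HTTP endpoint; define metrics and their labels (dimensions); organize the monitoring data in a defined structure so that it is collected as time series |
| Prometheus Server | Retriever (data collector); TSDB (time-series database); configuration (static_config, kubernetes_sd, file_sd); HTTP server |
| Grafana | Many plugins; data source (Prometheus); dashboards (PromQL) |
| AlertManager | Alert notification; alerting rules (rules.yml, written in PromQL) are evaluated by Prometheus Server, which sends the resulting alerts to Alertmanager |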

1. Deploy kube-state-metrics

Official kube-state-metrics image on quay.io: https://quay.io/repository/coreos/kube-state-metrics?tab=info

Run the following on the ops host mfyxw50.mfyxw.com

(1) Pull the kube-state-metrics image

[root@mfyxw50 ~]# docker pull quay.io/coreos/kube-state-metrics:v1.5.0

v1.5.0: Pulling from coreos/kube-state-metrics

cd784148e348: Pull complete

f622528a393e: Pull complete

Digest: sha256:b7a3143bd1eb7130759c9259073b9f239d0eeda09f5210f1cd31f1a530599ea1

Status: Downloaded newer image for quay.io/coreos/kube-state-metrics:v1.5.0

quay.io/coreos/kube-state-metrics:v1.5.0

(2) Tag the image and push it to the private registry

[root@mfyxw50 ~]# docker images | grep kube-state-metrics

quay.io/coreos/kube-state-metrics v1.5.0 91599517197a 18 months ago 31.8MB

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker tag quay.io/coreos/kube-state-metrics:v1.5.0 harbor.od.com/public/kube-state-metrics:v1.5.0

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker login harbor.od.com

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker push harbor.od.com/public/kube-state-metrics:v1.5.0

The push refers to repository [harbor.od.com/public/kube-state-metrics]

5b3c36501a0a: Pushed

7bff100f35cb: Pushed

v1.5.0: digest: sha256:16e9a1d63e80c19859fc1e2727ab7819f89aeae5f8ab5c3380860c2f88fe0a58 size: 739
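The pull, tag, login and push sequence above is repeated for every image in this guide. A minimal sketch of a helper that derives the Harbor name and mirrors one image is shown below; the function names `to_harbor_ref` and `mirror_image` and the `HARBOR_HOST` variable are hypothetical, and the sketch assumes `docker login harbor.od.com` has already succeeded and that the target Harbor project (for example `public` or `infra`) exists.

```shell
#!/bin/sh
# Hypothetical helpers for mirroring upstream images into the private Harbor registry.
HARBOR_HOST="${HARBOR_HOST:-harbor.od.com}"

# Print the Harbor reference for an upstream image: keep only the last path
# component (name:tag) and place it under PROJECT, e.g.
#   quay.io/coreos/kube-state-metrics:v1.5.0 -> harbor.od.com/public/kube-state-metrics:v1.5.0
to_harbor_ref() {
  local src="$1" project="$2"
  printf '%s/%s/%s\n' "$HARBOR_HOST" "$project" "${src##*/}"
}

# Pull SRC, tag it for Harbor and push it, stopping at the first failing step.
# Assumes `docker login` for $HARBOR_HOST has already been done.
mirror_image() {
  local src="$1" project="${2:-public}" dst
  dst="$(to_harbor_ref "$src" "$project")" || return 1
  docker pull "$src" && docker tag "$src" "$dst" && docker push "$dst"
}
```

For example, `mirror_image prom/node-exporter:v0.15.0 public` or `mirror_image prom/prometheus:v2.14.0 infra` would reproduce the manual steps of the later sections.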

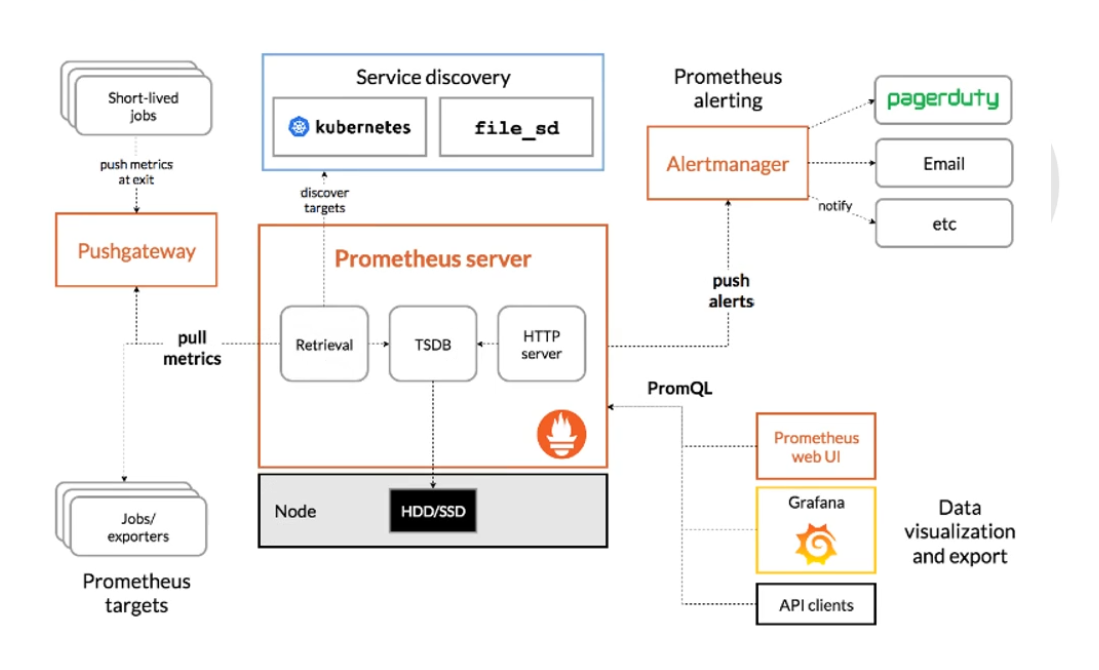

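(3) Log in to the private registry and check that the public project contains kube-state-metrics

(4) Create the directory for the kube-state-metrics resource manifests

[root@mfyxw50 ~]# mkdir -p /data/k8s-yaml/kube-state-metrics

(5) Create the kube-state-metrics resource manifests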

- Contents of rbrc.yaml

[root@mfyxw50 ~]# cat > /data/k8s-yaml/kube-state-metrics/rbrc.yaml << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - secrets
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs:
  - list
  - watch
- apiGroups:
  - policy
  resources:
  - poddisruptionbudgets
  verbs:
  - list
  - watch
- apiGroups:
  - extensions
  resources:
  - daemonsets
  - deployments
  - replicasets
  verbs:
  - list
  - watch
- apiGroups:
  - apps
  resources:
  - statefulsets
  verbs:
  - list
  - watch
- apiGroups:
  - batch
  resources:
  - cronjobs
  - jobs
  verbs:
  - list
  - watch
- apiGroups:
  - autoscaling
  resources:
  - horizontalpodautoscalers
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system
EOF

- Contents of deployment.yaml

[root@mfyxw50 ~]# cat > /data/k8s-yaml/kube-state-metrics/deployment.yaml << EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "2"
  labels:
    grafanak8sapp: "true"
    app: kube-state-metrics
  name: kube-state-metrics
  namespace: kube-system
spec:
  selector:
    matchLabels:
      grafanak8sapp: "true"
      app: kube-state-metrics
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        grafanak8sapp: "true"
        app: kube-state-metrics
    spec:
      containers:
      - name: kube-state-metrics
        image: harbor.od.com/public/kube-state-metrics:v1.5.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          name: http-metrics
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 5
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
      serviceAccountName: kube-state-metrics
EOF

This manifest uses extensions/v1beta1, which the cluster in this guide still serves (note deployment.extensions in the kubectl output). Kubernetes 1.16 and later no longer serve Deployments and DaemonSets under extensions/v1beta1; on such clusters use apps/v1.

(6) Apply the kube-state-metrics resource manifests

Run the following on either master node (mfyxw30.mfyxw.com or mfyxw40.mfyxw.com)

[root@mfyxw30 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-58f8966f84-zrtj9 1/1 Running 31 59d

heapster-b5b9f794-f76bw 1/1 Running 31 59d

kubernetes-dashboard-67989c548-c9w6m 1/1 Running 33 59d

traefik-ingress-mdvsl 1/1 Running 30 59d

traefik-ingress-pk84z 1/1 Running 35 59d

[root@mfyxw30 ~]#

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/kube-state-metrics/rbrc.yaml

serviceaccount/kube-state-metrics created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/kube-state-metrics/deployment.yaml

deployment.extensions/kube-state-metrics created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl get pod -n kube-system |grep kube-state-metrics

NAME READY STATUS RESTARTS AGE

kube-state-metrics-6bc5758866-qrpbd 1/1 Running 0 3s

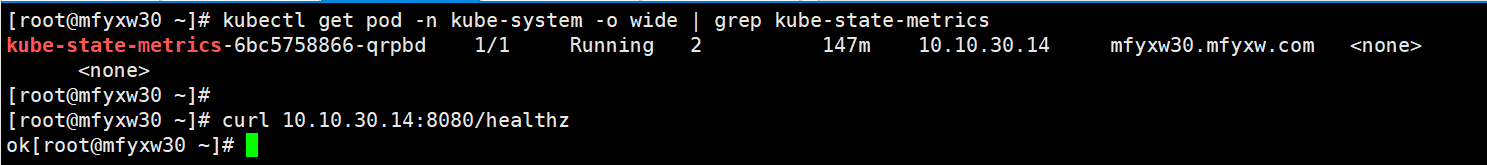
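To confirm that the ClusterRoleBinding in rbrc.yaml really grants the ServiceAccount its list/watch permissions, `kubectl auth can-i` can impersonate the ServiceAccount. The sketch below wraps that in a hypothetical `check_sa_verbs` function; the `KUBECTL` override is an assumption added only so the commands can be printed (dry run) instead of executed.

```shell
#!/bin/sh
# Hypothetical helper: check that a ServiceAccount may perform each "VERB RESOURCE"
# pair by impersonating it with `kubectl auth can-i`, which exits non-zero on "no".
# Set KUBECTL=echo to print the commands instead of running them.
check_sa_verbs() {
  local ns="$1" sa="$2" check
  shift 2
  for check in "$@"; do
    # $check is left unquoted on purpose so "list pods" becomes two arguments.
    ${KUBECTL:-kubectl} auth can-i $check --as="system:serviceaccount:${ns}:${sa}" || return 1
  done
}
```

For example, `check_sa_verbs kube-system kube-state-metrics 'list pods' 'watch nodes' 'list cronjobs.batch'` should print `yes` for each pair once the RBAC objects above are applied.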

(7) Check the kube-state-metrics health endpoint

[root@mfyxw30 ~]# kubectl get pod -n kube-system -o wide | grep kube-state-metrics

kube-state-metrics-6bc5758866-qrpbd 1/1 Running 2 147m 10.10.30.14 mfyxw30.mfyxw.com <none> <none>

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# curl 10.10.30.14:8080/healthz

ok
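The readiness probe in deployment.yaml already polls /healthz inside the cluster, but when checking by hand right after `kubectl apply` the pod may not be serving yet. A minimal polling sketch, assuming `curl` is installed on the master node; `wait_for_body` and its retry parameters are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: poll URL until its body equals EXPECTED.
# Usage: wait_for_body URL EXPECTED [TRIES] [PAUSE_SECONDS]
# Returns 0 on success, 1 after TRIES failed attempts.
wait_for_body() {
  local url="$1" expected="$2" tries="${3:-10}" pause="${4:-3}" i=1 body
  while [ "$i" -le "$tries" ]; do
    # -f treats HTTP errors as failures; $(...) strips a trailing newline, if any.
    if body="$(curl -fsS --max-time 5 "$url" 2>/dev/null)" && [ "$body" = "$expected" ]; then
      return 0
    fi
    i=$((i + 1))
    [ "$i" -le "$tries" ] && sleep "$pause"
  done
  return 1
}
```

Usage, looking the pod IP up instead of copying it from `kubectl get pod -o wide`: `POD_IP="$(kubectl -n kube-system get pod -l app=kube-state-metrics -o jsonpath='{.items[0].status.podIP}')"` followed by `wait_for_body "http://${POD_IP}:8080/healthz" ok && echo healthy`.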

2. Deploy node-exporter

Official node-exporter GitHub repository: https://github.com/prometheus/node_exporter

Official node-exporter image on Docker Hub: https://hub.docker.com/r/prom/node-exporter/

Run the following on the ops host mfyxw50.mfyxw.com

(1) Pull the node-exporter image

[root@mfyxw50 ~]# docker pull prom/node-exporter:v0.15.0

v0.15.0: Pulling from prom/node-exporter

Image docker.io/prom/node-exporter:v0.15.0 uses outdated schema1 manifest format. Please upgrade to a schema2 image for better future compatibility. More information at https://docs.docker.com/registry/spec/deprecated-schema-v1/

aa3e9481fcae: Pull complete

a3ed95caeb02: Pull complete

afc308b02dc6: Pull complete

4cafbffc9d4f: Pull complete

Digest: sha256:a59d1f22610da43490532d5398b3911c90bfa915951d3b3e5c12d3c0bf8771c3

Status: Downloaded newer image for prom/node-exporter:v0.15.0

docker.io/prom/node-exporter:v0.15.0

(2) Tag the image and push it to the private registry

[root@mfyxw50 ~]# docker images | grep node-exporter

prom/node-exporter v0.15.0 12d51ffa2b22 2 years ago 22.8MB

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker tag prom/node-exporter:v0.15.0 harbor.od.com/public/node-exporter:v0.15.0

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker login harbor.od.com

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker push harbor.od.com/public/node-exporter:v0.15.0

The push refers to repository [harbor.od.com/public/node-exporter]

5f70bf18a086: Mounted from public/pause

1c7f6350717e: Pushed

a349adf62fe1: Pushed

c7300f623e77: Pushed

v0.15.0: digest: sha256:57d9b335b593e4d0da1477d7c5c05f23d9c3dc6023b3e733deb627076d4596ed size: 1979

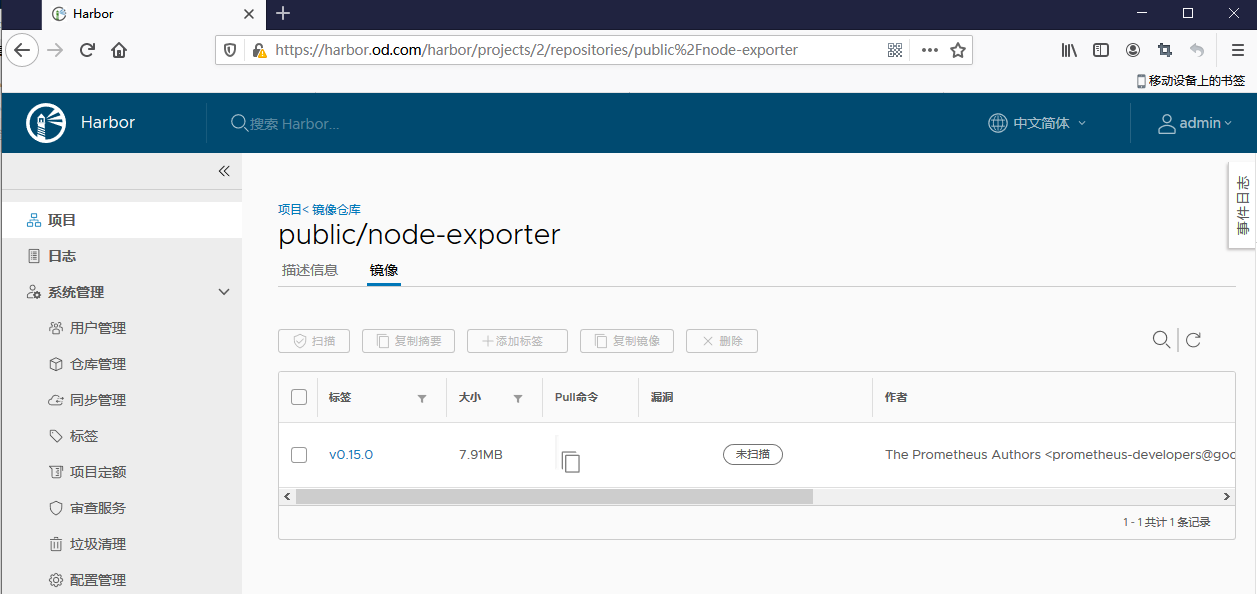

(3) Log in to the private registry and check that the public project contains node-exporter

(4) Create the directory for the node-exporter resource manifests

[root@mfyxw50 ~]# mkdir -p /data/k8s-yaml/node-exporter

(5) Create the node-exporter resource manifest

- Contents of daemonset.yaml

[root@mfyxw50 ~]# cat > /data/k8s-yaml/node-exporter/daemonset.yaml << EOF
kind: DaemonSet
apiVersion: extensions/v1beta1
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    daemon: "node-exporter"
    grafanak8sapp: "true"
spec:
  selector:
    matchLabels:
      daemon: "node-exporter"
      grafanak8sapp: "true"
  template:
    metadata:
      name: node-exporter
      labels:
        daemon: "node-exporter"
        grafanak8sapp: "true"
    spec:
      volumes:
      - name: proc
        hostPath:
          path: /proc
          type: ""
      - name: sys
        hostPath:
          path: /sys
          type: ""
      containers:
      - name: node-exporter
        image: harbor.od.com/public/node-exporter:v0.15.0
        imagePullPolicy: IfNotPresent
        args:
        - --path.procfs=/host_proc
        - --path.sysfs=/host_sys
        ports:
        - name: node-exporter
          hostPort: 9100
          containerPort: 9100
          protocol: TCP
        volumeMounts:
        - name: sys
          readOnly: true
          mountPath: /host_sys
        - name: proc
          readOnly: true
          mountPath: /host_proc
      hostNetwork: true
EOF

(6) Apply the node-exporter resource manifest

Run the following on either master node (mfyxw30.mfyxw.com or mfyxw40.mfyxw.com)

[root@mfyxw30 ~]# kubectl get daemonset -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

traefik-ingress 2 2 2 2 2 <none> 60d

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/node-exporter/daemonset.yaml

daemonset.extensions/node-exporter created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl get daemonset -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

node-exporter 2 2 2 2 2 <none> 3s

traefik-ingress 2 2 2 2 2 <none> 60d

(7) Look up the node-exporter IP addresses and access /metrics

[root@mfyxw30 ~]# kubectl get pod -n kube-system -o wide | grep node-exporter

node-exporter-8s97p 1/1 Running 0 3h20m 192.168.80.40 mfyxw40.mfyxw.com <none> <none>

node-exporter-p47k7 1/1 Running 0 3h20m 192.168.80.30 mfyxw30.mfyxw.com <none> <none>

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# curl 192.168.80.40:9100/metrics
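/metrics returns the Prometheus text exposition format: `# HELP` and `# TYPE` comment lines followed by one `name{labels} value` sample per line, which is a lot of output to read by eye. A small filter sketch (the function name `metric_samples` is hypothetical) that prints only the samples of one metric and ignores metrics that merely share a prefix:

```shell
#!/bin/sh
# Hypothetical filter: read Prometheus text-format metrics on stdin and print
# every sample line of metric NAME (with or without {labels}), skipping the
# "# HELP" / "# TYPE" comments and metrics that only share a prefix
# (node_load1 must not match node_load15).
metric_samples() {
  awk -v name="$1" '
    /^#/ { next }
    {
      after = substr($0, length(name) + 1, 1)
      if (index($0, name) == 1 && (after == "{" || after == " "))
        print
    }'
}
```

For example, `curl -s 192.168.80.40:9100/metrics | metric_samples node_load1` prints just the one-minute load average of that node.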

3. Deploy cadvisor

Official cadvisor image on Docker Hub: https://hub.docker.com/r/google/cadvisor/

Official cadvisor GitHub repository: https://github.com/google/cadvisor

(1) Pull the cadvisor image

[root@mfyxw50 ~]# docker pull google/cadvisor:v0.28.3

v0.28.3: Pulling from google/cadvisor

ab7e51e37a18: Pull complete

a2dc2f1bce51: Pull complete

3b017de60d4f: Pull complete

Digest: sha256:9e347affc725efd3bfe95aa69362cf833aa810f84e6cb9eed1cb65c35216632a

Status: Downloaded newer image for google/cadvisor:v0.28.3

docker.io/google/cadvisor:v0.28.3

(2) Tag the image and push it to the private registry

[root@mfyxw50 ~]# docker images | grep cadvisor

google/cadvisor v0.28.3 75f88e3ec333 2 years ago 62.2MB

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker tag google/cadvisor:v0.28.3 harbor.od.com/public/cadvisor:v0.28.3

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker login harbor.od.com

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker push harbor.od.com/public/cadvisor:v0.28.3

The push refers to repository [harbor.od.com/public/cadvisor]

f60e27acaccf: Pushed

f04a25da66bf: Pushed

52a5560f4ca0: Pushed

v0.28.3: digest: sha256:34d9d683086d7f3b9bbdab0d1df4518b230448896fa823f7a6cf75f66d64ebe1 size: 951

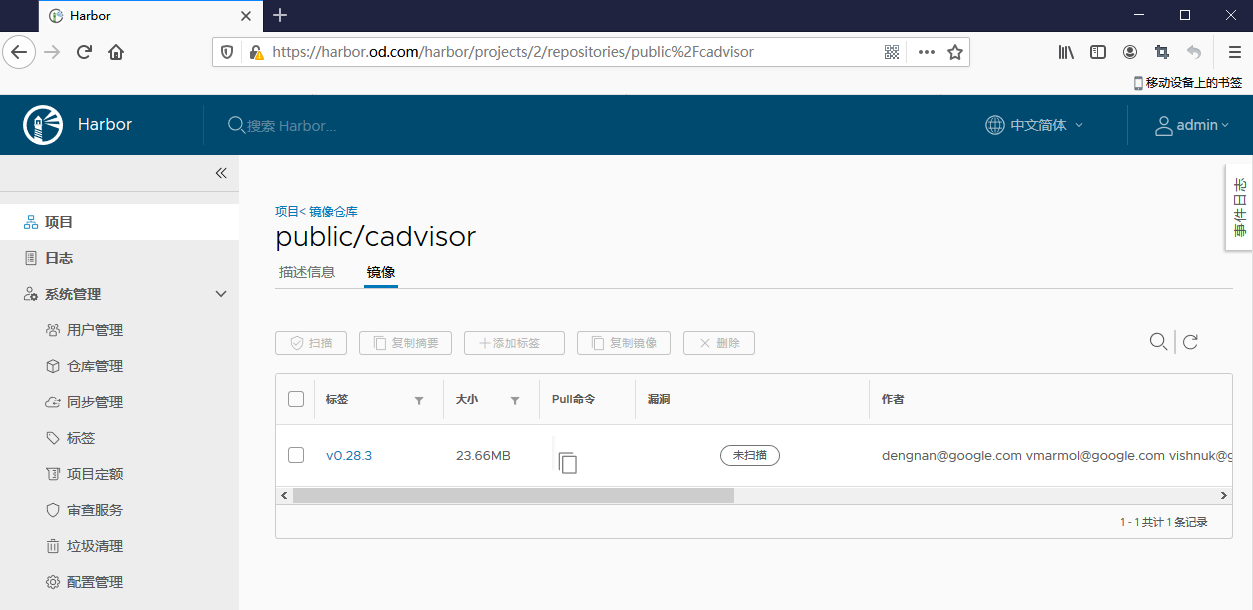

(3) Log in to the private registry and check that the public project contains cadvisor

(4) Create the directory for the cadvisor resource manifests

[root@mfyxw50 ~]# mkdir -p /data/k8s-yaml/cadvisor

(5) Create the cadvisor resource manifest

- Contents of daemonset.yaml

[root@mfyxw50 ~]# cat > /data/k8s-yaml/cadvisor/daemonset.yaml << EOF
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: cadvisor
  namespace: kube-system
  labels:
    app: cadvisor
spec:
  selector:
    matchLabels:
      name: cadvisor
  template:
    metadata:
      labels:
        name: cadvisor
    spec:
      hostNetwork: true
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: cadvisor
        image: harbor.od.com/public/cadvisor:v0.28.3
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: rootfs
          mountPath: /rootfs
          readOnly: true
        - name: var-run
          mountPath: /var/run
        - name: sys
          mountPath: /sys
          readOnly: true
        - name: docker
          mountPath: /var/lib/docker
          readOnly: true
        ports:
        - name: http
          containerPort: 4194
          protocol: TCP
        readinessProbe:
          tcpSocket:
            port: 4194
          initialDelaySeconds: 5
          periodSeconds: 10
        args:
        - --housekeeping_interval=10s
        - --port=4194
      terminationGracePeriodSeconds: 30
      volumes:
      - name: rootfs
        hostPath:
          path: /
      - name: var-run
        hostPath:
          path: /var/run
      - name: sys
        hostPath:
          path: /sys
      - name: docker
        hostPath:
          path: /data/docker
EOF

The docker volume mounts the host path /data/docker, which is assumed here to be the Docker data directory on the compute nodes; if your nodes keep Docker's default /var/lib/docker, change the hostPath accordingly.

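(6) Create a cgroup symlink on the compute nodes

Do this on every compute node. /sys/fs/cgroup is a tmpfs created at boot, so the symlink does not survive a reboot and must be recreated after a node restarts.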

[root@mfyxw30 ~]# mount -o remount,rw /sys/fs/cgroup/

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# ll /sys/fs/cgroup/ | grep cpu

lrwxrwxrwx 1 root root 11 Jul 14 02:48 cpu -> cpu,cpuacct

lrwxrwxrwx 1 root root 11 Jul 14 02:48 cpuacct -> cpu,cpuacct

dr-xr-xr-x 7 root root 0 Jul 14 02:48 cpu,cpuacct

dr-xr-xr-x 5 root root 0 Jul 14 02:48 cpuset

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# ln -s /sys/fs/cgroup/cpu,cpuacct /sys/fs/cgroup/cpuacct,cpu

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# ll /sys/fs/cgroup/ | grep cpu

lrwxrwxrwx 1 root root 11 Jul 14 02:48 cpu -> cpu,cpuacct

lrwxrwxrwx 1 root root 11 Jul 14 02:48 cpuacct -> cpu,cpuacct

lrwxrwxrwx 1 root root 27 Jul 14 03:48 cpuacct,cpu -> /sys/fs/cgroup/cpu,cpuacct/

dr-xr-xr-x 7 root root 0 Jul 14 03:47 cpu,cpuacct

dr-xr-xr-x 5 root root 0 Jul 14 03:47 cpuset

[root@mfyxw40 ~]# mount -o remount,rw /sys/fs/cgroup/

[root@mfyxw40 ~]#

[root@mfyxw40 ~]# ll /sys/fs/cgroup/ | grep cpu

lrwxrwxrwx 1 root root 11 Jul 14 02:48 cpu -> cpu,cpuacct

lrwxrwxrwx 1 root root 11 Jul 14 02:48 cpuacct -> cpu,cpuacct

dr-xr-xr-x 7 root root 0 Jul 14 02:48 cpu,cpuacct

dr-xr-xr-x 5 root root 0 Jul 14 02:48 cpuset

[root@mfyxw40 ~]#

[root@mfyxw40 ~]# ln -s /sys/fs/cgroup/cpu,cpuacct /sys/fs/cgroup/cpuacct,cpu

[root@mfyxw40 ~]#

[root@mfyxw40 ~]# ll /sys/fs/cgroup/ | grep cpu

lrwxrwxrwx 1 root root 11 Jul 14 02:48 cpu -> cpu,cpuacct

lrwxrwxrwx 1 root root 11 Jul 14 02:48 cpuacct -> cpu,cpuacct

lrwxrwxrwx 1 root root 27 Jul 14 03:48 cpuacct,cpu -> /sys/fs/cgroup/cpu,cpuacct/

dr-xr-xr-x 7 root root 0 Jul 14 03:47 cpu,cpuacct

dr-xr-xr-x 5 root root 0 Jul 14 03:47 cpuset
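Because this step has to be repeated on every node, and again whenever the link is missing, an idempotent version is safer: re-running the plain `ln -s` once `cpuacct,cpu` exists makes `ln` treat the existing link as a directory and try to create a second link inside it. A minimal sketch; the function name `ensure_cpuacct_alias` and its optional root argument (useful for trying it on a scratch directory) are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: create the "cpuacct,cpu" alias for the "cpu,cpuacct"
# cgroup directory only if it is not there yet, so it is safe to re-run.
# ROOT defaults to /sys/fs/cgroup; pass another directory to try it out.
# On a real node, /sys/fs/cgroup must first be remounted read-write:
#   mount -o remount,rw /sys/fs/cgroup/
ensure_cpuacct_alias() {
  local root="${1:-/sys/fs/cgroup}"
  if [ -L "$root/cpuacct,cpu" ] || [ -e "$root/cpuacct,cpu" ]; then
    return 0                                # alias (or a real entry) already exists
  fi
  [ -d "$root/cpu,cpuacct" ] || return 1    # nothing to point at
  ln -s "$root/cpu,cpuacct" "$root/cpuacct,cpu"
}
```

On each compute node this would be run as `mount -o remount,rw /sys/fs/cgroup/ && ensure_cpuacct_alias`.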

(7) Apply the cadvisor resource manifest

Run the following on either master node (mfyxw30.mfyxw.com or mfyxw40.mfyxw.com)

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl get daemonset -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

node-exporter 2 2 2 2 2 <none> 22m

traefik-ingress 2 2 2 2 2 <none> 60d

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/cadvisor/daemonset.yaml

daemonset.apps/cadvisor created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl get daemonset -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

cadvisor 2 2 2 2 2 <none> 13s

node-exporter 2 2 2 2 2 <none> 113m

traefik-ingress 2 2 2 2 2 <none> 61d

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# netstat -luntp | grep 4194

tcp6 0 0 :::4194 :::* LISTEN 12649/cadvisor

(8) Look up the cadvisor IP addresses and access /metrics

[root@mfyxw30 ~]# kubectl get pod -n kube-system -o wide | grep cadvisor

cadvisor-rs8rt 1/1 Running 0 148m 192.168.80.40 mfyxw40.mfyxw.com <none> <none>

cadvisor-v9jh4 1/1 Running 0 148m 192.168.80.30 mfyxw30.mfyxw.com <none> <none>

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# curl 192.168.80.40:4194/metrics

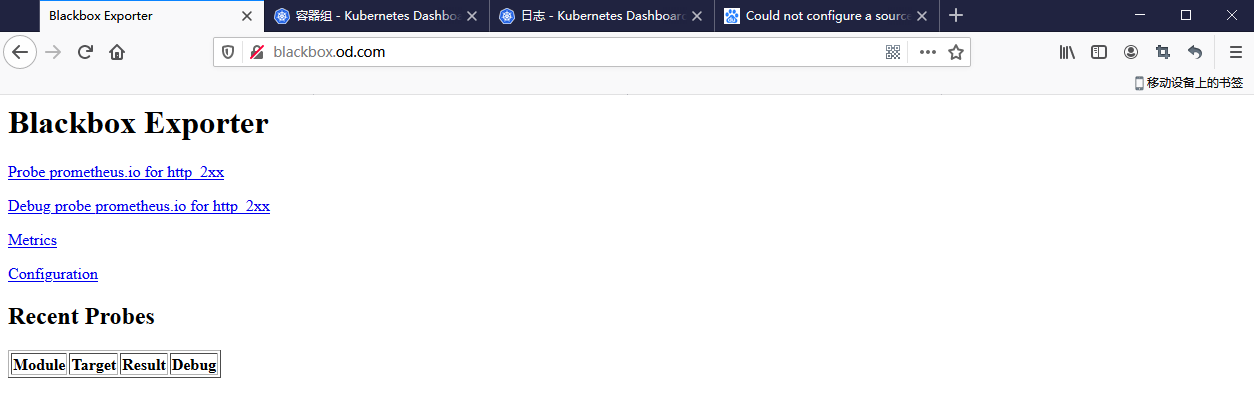

4. Deploy blackbox-exporter

Official blackbox-exporter image on Docker Hub: https://hub.docker.com/r/prom/blackbox-exporter/

Official blackbox-exporter GitHub repository: https://github.com/prometheus/blackbox_exporter

Run the following on the ops host mfyxw50.mfyxw.com

(1) Pull the blackbox-exporter image

[root@mfyxw50 ~]# docker pull prom/blackbox-exporter:v0.15.1

v0.15.1: Pulling from prom/blackbox-exporter

697743189b6d: Pull complete

f1989cfd335b: Pull complete

8918f7b8f34f: Pull complete

a128dce6256a: Pull complete

Digest: sha256:c20445e0cc628fa4b227fe2f694c22a314beb43fd8297095b6ee6cbc67161336

Status: Downloaded newer image for prom/blackbox-exporter:v0.15.1

docker.io/prom/blackbox-exporter:v0.15.1

(2) Tag the image and push it to the private registry

[root@mfyxw50 ~]# docker images | grep blackbox-exporter

prom/blackbox-exporter v0.15.1 d3a00aea3a01 16 months ago 17.6MB

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker tag prom/blackbox-exporter:v0.15.1 harbor.od.com/public/blackbox-exporter:v0.15.1

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker login harbor.od.com

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker push harbor.od.com/public/blackbox-exporter:v0.15.1

The push refers to repository [harbor.od.com/public/blackbox-exporter]

256c4aa8ebe5: Pushed

4b6cc55de649: Pushed

986894c42222: Pushed

adab5d09ba79: Pushed

v0.15.1: digest: sha256:c20445e0cc628fa4b227fe2f694c22a314beb43fd8297095b6ee6cbc67161336 size: 1155

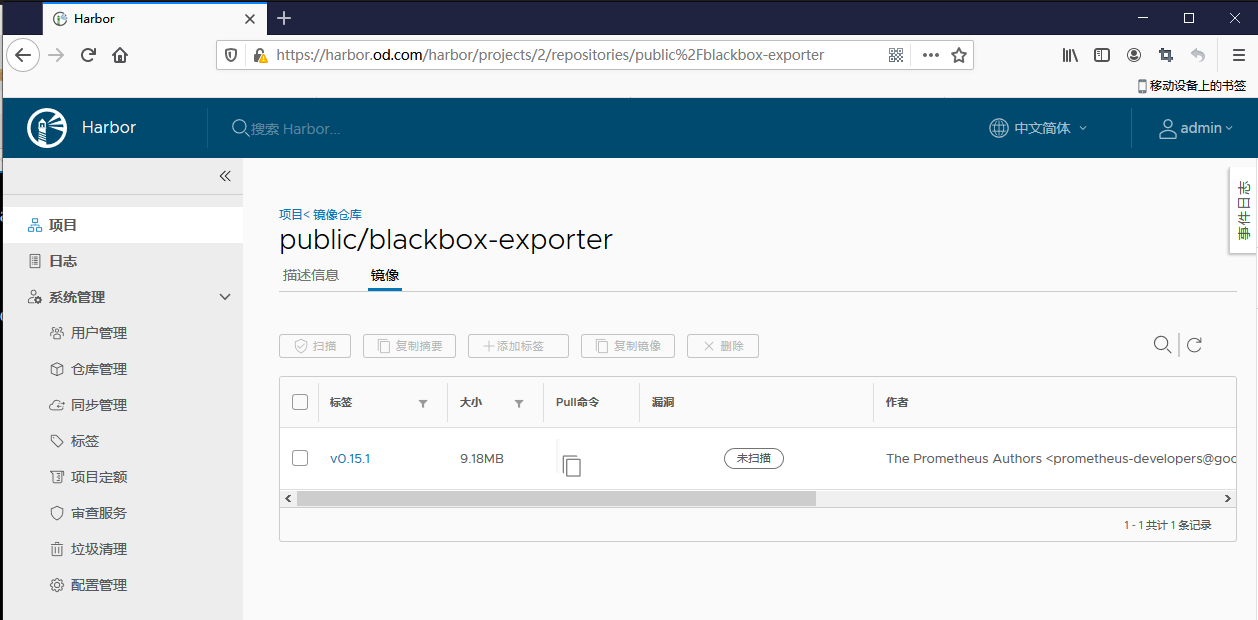

(3) Log in to the private registry and check that the public project contains blackbox-exporter

(4) Create the directory for the blackbox-exporter resource manifests

[root@mfyxw50 ~]# mkdir -p /data/k8s-yaml/blackbox-exporter

(5) Create the blackbox-exporter resource manifests

- Contents of configmap.yaml

[root@mfyxw50 ~]# cat > /data/k8s-yaml/blackbox-exporter/configmap.yaml << EOF
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: kube-system
data:
  blackbox.yml: |-
    modules:
      http_2xx:
        prober: http
        timeout: 2s
        http:
          valid_http_versions: ["HTTP/1.1", "HTTP/2"]
          valid_status_codes: [200,301,302]
          method: GET
          preferred_ip_protocol: "ip4"
      tcp_connect:
        prober: tcp
        timeout: 2s
EOF

- Contents of deployment.yaml

[root@mfyxw50 ~]# cat > /data/k8s-yaml/blackbox-exporter/deployment.yaml << EOF
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: blackbox-exporter
  namespace: kube-system
  labels:
    app: blackbox-exporter
  annotations:
    deployment.kubernetes.io/revision: "1"
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blackbox-exporter
  template:
    metadata:
      labels:
        app: blackbox-exporter
    spec:
      volumes:
      - name: config
        configMap:
          name: blackbox-exporter
          defaultMode: 420
      containers:
      - name: blackbox-exporter
        image: harbor.od.com/public/blackbox-exporter:v0.15.1
        imagePullPolicy: IfNotPresent
        args:
        - --config.file=/etc/blackbox_exporter/blackbox.yml
        - --log.level=info
        - --web.listen-address=:9115
        ports:
        - name: blackbox-port
          containerPort: 9115
          protocol: TCP
        resources:
          limits:
            cpu: 200m
            memory: 256Mi
          requests:
            cpu: 100m
            memory: 50Mi
        volumeMounts:
        - name: config
          mountPath: /etc/blackbox_exporter
        readinessProbe:
          tcpSocket:
            port: 9115
          initialDelaySeconds: 5
          timeoutSeconds: 5
          periodSeconds: 10
          successThreshold: 1
          failureThreshold: 3
EOF

Annotation values must be strings, so the revision annotation is quoted ("1").

- Contents of service.yaml

[root@mfyxw50 ~]# cat > /data/k8s-yaml/blackbox-exporter/service.yaml << EOF
kind: Service
apiVersion: v1
metadata:
  name: blackbox-exporter
  namespace: kube-system
spec:
  selector:
    app: blackbox-exporter
  ports:
  - name: blackbox-port
    protocol: TCP
    port: 9115
EOF

- Contents of Ingress.yaml

[root@mfyxw50 ~]# cat > /data/k8s-yaml/blackbox-exporter/Ingress.yaml << EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: blackbox-exporter
  namespace: kube-system
spec:
  rules:
  - host: blackbox.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: blackbox-exporter
          servicePort: 9115
EOF

(6) Add the DNS record and test resolution

Run the following on the host mfyxw10.mfyxw.com

- Configure the DNS record

The heredoc delimiter is quoted ('EOF') so that the shell writes $ORIGIN and $TTL literally instead of expanding them to empty strings.

[root@mfyxw10 ~]# cat > /var/named/od.com.zone << 'EOF'
$ORIGIN od.com.
$TTL 600    ; 10 minutes
@       IN SOA  dns.od.com. dnsadmin.od.com. (
                ; Increment the serial by 1 so that it is newer than the previous version
                2020031314 ; serial
                10800      ; refresh (3 hours)
                900        ; retry (15 minutes)
                604800     ; expire (1 week)
                86400      ; minimum (1 day)
                )
                NS   dns.od.com.
$TTL 60 ; 1 minute
dns                A    192.168.80.10
harbor             A    192.168.80.50    ; harbor record
k8s-yaml           A    192.168.80.50
traefik            A    192.168.80.100
dashboard          A    192.168.80.100
zk1                A    192.168.80.10
zk2                A    192.168.80.20
zk3                A    192.168.80.30
jenkins            A    192.168.80.100
dubbo-monitor      A    192.168.80.100
demo               A    192.168.80.100
mysql              A    192.168.80.10
config             A    192.168.80.100
portal             A    192.168.80.100
zk-test            A    192.168.80.10
zk-prod            A    192.168.80.20
config-test        A    192.168.80.100
config-prod        A    192.168.80.100
demo-test          A    192.168.80.100
demo-prod          A    192.168.80.100
blackbox           A    192.168.80.100
EOF

- Restart the DNS service

[root@mfyxw10 ~]# systemctl restart named

- Test DNS resolution

[root@mfyxw10 ~]# dig -t A blackbox.od.com @192.168.80.10 +short

192.168.80.100
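Rewriting the whole od.com.zone file for every new host makes it easy to drop an existing record or forget to bump the serial. A minimal alternative sketch, assuming the zone layout shown above (the serial is the number on the first line containing `; serial`, and records start in column 0); `add_a_record` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: append "NAME A IP" to a BIND zone file and increment the
# SOA serial (the number on the first line containing "; serial") so that the
# change is seen as a newer zone. Does nothing if NAME already has a record.
add_a_record() {
  local zone="$1" name="$2" ip="$3" tmp rc
  if grep -Eq "^${name}[[:space:]]" "$zone"; then
    return 0
  fi
  tmp="$(mktemp)" || return 1
  awk '/; *serial/ && !done { sub(/[0-9]+/, sprintf("%d", $1 + 1)); done = 1 } { print }' \
    "$zone" > "$tmp" &&
    printf '%-18s A    %s\n' "$name" "$ip" >> "$tmp" &&
    cat "$tmp" > "$zone"      # cat keeps the zone file's owner and permissions
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

For example, `add_a_record /var/named/od.com.zone blackbox 192.168.80.100 && systemctl restart named`; writing back with `cat` rather than `mv` keeps the file owned by the named user.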

(7) Apply the blackbox-exporter resource manifests

Run the following on either master node (mfyxw30.mfyxw.com or mfyxw40.mfyxw.com)

[root@mfyxw30 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

cadvisor-lcr8b 1/1 Running 0 32m

cadvisor-tw4sz 1/1 Running 0 32m

coredns-58f8966f84-zrtj9 1/1 Running 32 59d

heapster-b5b9f794-f76bw 1/1 Running 32 59d

kube-state-metrics-6bc5758866-qrpbd 1/1 Running 2 171m

kubernetes-dashboard-67989c548-c9w6m 1/1 Running 35 59d

node-exporter-4mp5l 1/1 Running 1 146m

node-exporter-qj6hq 1/1 Running 1 146m

traefik-ingress-mdvsl 1/1 Running 31 59d

traefik-ingress-pk84z 1/1 Running 36 59d

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/blackbox-exporter/configmap.yaml

configmap/blackbox-exporter created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/blackbox-exporter/deployment.yaml

deployment.extensions/blackbox-exporter created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/blackbox-exporter/service.yaml

service/blackbox-exporter created

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/blackbox-exporter/Ingress.yaml

ingress.extensions/blackbox-exporter created

[root@mfyxw30 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

blackbox-exporter-6dbf8cdfbb-fnmtg 1/1 Running 0 83s

cadvisor-lcr8b 1/1 Running 0 36m

cadvisor-tw4sz 1/1 Running 0 36m

coredns-58f8966f84-zrtj9 1/1 Running 32 59d

heapster-b5b9f794-f76bw 1/1 Running 32 59d

kube-state-metrics-6bc5758866-qrpbd 1/1 Running 2 175m

kubernetes-dashboard-67989c548-c9w6m 1/1 Running 35 59d

node-exporter-4mp5l 1/1 Running 1 150m

node-exporter-qj6hq 1/1 Running 1 150m

traefik-ingress-mdvsl 1/1 Running 31 59d

traefik-ingress-pk84z 1/1 Running 36 59d

(8) Open http://blackbox.od.com in a browser

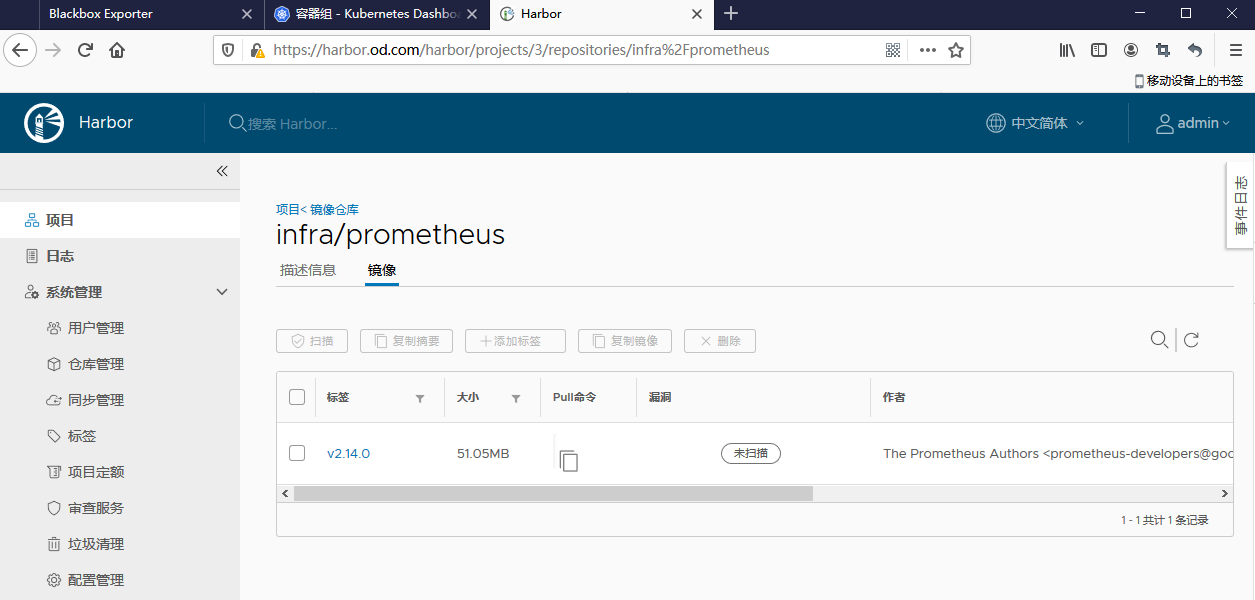
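Besides the web page, the exporter's `/probe` endpoint can be called directly with a `module` (one of the modules defined in configmap.yaml, `http_2xx` or `tcp_connect`) and a `target`; its output includes a `probe_success` gauge that is 1 when the probe passed. A sketch, assuming the blackbox.od.com Ingress from this section; `probe`, `probe_succeeded` and `BLACKBOX_URL` are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helpers around blackbox_exporter's /probe endpoint.
BLACKBOX_URL="${BLACKBOX_URL:-http://blackbox.od.com}"

# Ask the exporter to probe TARGET with MODULE and print the resulting metrics.
# -G turns the url-encoded data into query-string parameters.
probe() {
  curl -fsS -G "$BLACKBOX_URL/probe" \
    --data-urlencode "module=$1" \
    --data-urlencode "target=$2"
}

# Read probe output on stdin; succeed only if it reports "probe_success 1".
probe_succeeded() {
  grep -Eq '^probe_success 1$'
}
```

For example, `probe http_2xx http://prometheus.od.com | probe_succeeded && echo up`; for the `tcp_connect` module the target is a `host:port` pair.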

5. Deploy Prometheus

Official Prometheus image on Docker Hub: https://hub.docker.com/r/prom/prometheus

Official Prometheus GitHub repository: https://github.com/prometheus/prometheus

Run the following on the ops host mfyxw50.mfyxw.com

(1) Pull the Prometheus image

[root@mfyxw50 ~]# docker pull prom/prometheus:v2.14.0

v2.14.0: Pulling from prom/prometheus

8e674ad76dce: Already exists

e77d2419d1c2: Already exists

8674123643f1: Pull complete

21ee3b79b17a: Pull complete

d9073bbe10c3: Pull complete

585b5cbc27c1: Pull complete

0b174c1d55cf: Pull complete

a1b4e43b91a7: Pull complete

31ccb7962a7c: Pull complete

e247e238102a: Pull complete

6798557a5ee4: Pull complete

cbfcb065e0ae: Pull complete

Digest: sha256:907e20b3b0f8b0a76a33c088fe9827e8edc180e874bd2173c27089eade63d8b8

Status: Downloaded newer image for prom/prometheus:v2.14.0

docker.io/prom/prometheus:v2.14.0

(2) Tag the image and push it to the private registry

[root@mfyxw50 ~]# docker images | grep prometheus

prom/prometheus v2.14.0 7317640d555e 8 months ago 130MB

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker tag prom/prometheus:v2.14.0 harbor.od.com/infra/prometheus:v2.14.0

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker login harbor.od.com

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker push harbor.od.com/infra/prometheus:v2.14.0

The push refers to repository [harbor.od.com/infra/prometheus]

fca78fb26e9b: Pushed

ccf6f2fbceef: Pushed

eb6f7e00328c: Pushed

5da914e0fc1b: Pushed

b202797fdad0: Pushed

39dc7810e736: Pushed

8a9fe881edcd: Pushed

5dd8539686e4: Pushed

5c8b7d3229bc: Pushed

062d51f001d9: Pushed

3163e6173fcc: Mounted from public/blackbox-exporter

6194458b07fc: Mounted from public/blackbox-exporter

v2.14.0: digest: sha256:3d53ce329b25cc0c1bfc4c03be0496022d81335942e9e0518ded6d50a5e6c638 size: 2824

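(3) Log in to the private registry and check that the infra project contains prometheus

(4) Create directories

- Create the directory for the Prometheus resource manifests

[root@mfyxw50 ~]# mkdir /data/k8s-yaml/prometheus

- Create the persistent data directory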

[root@mfyxw50 ~]# mkdir /data/nfs-volume/prometheus

(5) Create the Prometheus resource manifests

- Contents of rbrc.yaml

[root@mfyxw50 ~]# cat > /data/k8s-yaml/prometheus/rbrc.yaml << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
  namespace: infra
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - nodes/metrics
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: infra
EOF

- Contents of deployment.yaml

[root@mfyxw50 ~]# cat > /data/k8s-yaml/prometheus/deployment.yaml << EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "5"
  labels:
    name: prometheus
  name: prometheus
  namespace: infra
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: prometheus
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      nodeName: mfyxw30.mfyxw.com
      containers:
      - name: prometheus
        image: harbor.od.com/infra/prometheus:v2.14.0
        imagePullPolicy: IfNotPresent
        command:
        - /bin/prometheus
        args:
        - --config.file=/data/etc/prometheus.yml
        - --storage.tsdb.path=/data/prom-db
        - --storage.tsdb.min-block-duration=10m
        - --storage.tsdb.retention=72h
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: /data
          name: data
        resources:
          requests:
            cpu: "1000m"
            memory: "1.5Gi"
          limits:
            cpu: "2000m"
            memory: "3Gi"
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      serviceAccountName: prometheus
      volumes:
      - name: data
        nfs:
          server: mfyxw50.mfyxw.com
          path: /data/nfs-volume/prometheus
EOF

- Contents of service.yaml

[root@mfyxw50 ~]# cat > /data/k8s-yaml/prometheus/service.yaml << EOF
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: infra
spec:
  ports:
  - port: 9090
    protocol: TCP
    targetPort: 9090
  selector:
    app: prometheus
EOF

- Contents of Ingress.yaml

[root@mfyxw50 ~]# cat > /data/k8s-yaml/prometheus/Ingress.yaml << EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: traefik
  name: prometheus
  namespace: infra
spec:
  rules:
  - host: prometheus.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: prometheus
          servicePort: 9090
EOF

(6) Prepare the Prometheus configuration file

- Create the directories

[root@mfyxw50 ~]# mkdir -p /data/nfs-volume/prometheus/{etc,prom-db}

- Copy the certificates

[root@mfyxw50 ~]# cp /opt/certs/ca.pem /data/nfs-volume/prometheus/etc/

[root@mfyxw50 ~]# cp /opt/certs/client.pem /data/nfs-volume/prometheus/etc/

[root@mfyxw50 ~]# cp /opt/certs/client-key.pem /data/nfs-volume/prometheus/etc/

- Create the prometheus.yml configuration file

The heredoc delimiter is quoted ('EOF') so that the shell writes $1, $2 and ${1} in the relabel rules literally instead of expanding them before the file is written.

[root@mfyxw50 ~]# cat > /data/nfs-volume/prometheus/etc/prometheus.yml << 'EOF'
global:
  scrape_interval: 15s
  evaluation_interval: 15s
scrape_configs:
- job_name: 'etcd'
  tls_config:
    ca_file: /data/etc/ca.pem
    cert_file: /data/etc/client.pem
    key_file: /data/etc/client-key.pem
  scheme: https
  static_configs:
  - targets:
    - '192.168.80.20:2379'
    - '192.168.80.30:2379'
    - '192.168.80.40:2379'
- job_name: 'kubernetes-apiservers'
  kubernetes_sd_configs:
  - role: endpoints
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    action: keep
    regex: default;kubernetes;https
- job_name: 'kubernetes-pods'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'kubernetes-kubelet'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:10255
- job_name: 'kubernetes-cadvisor'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:4194
- job_name: 'kubernetes-kube-state'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
  - source_labels: [__meta_kubernetes_pod_label_grafanak8sapp]
    regex: .*true.*
    action: keep
  - source_labels: ['__meta_kubernetes_pod_label_daemon', '__meta_kubernetes_pod_node_name']
    regex: 'node-exporter;(.*)'
    action: replace
    target_label: nodename
- job_name: 'blackbox_http_pod_probe'
  metrics_path: /probe
  kubernetes_sd_configs:
  - role: pod
  params:
    module: [http_2xx]
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
    action: keep
    regex: http
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port, __meta_kubernetes_pod_annotation_blackbox_path]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+);(.+)
    replacement: $1:$2$3
    target_label: __param_target
  - action: replace
    target_label: __address__
    replacement: blackbox-exporter.kube-system:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'blackbox_tcp_pod_probe'
  metrics_path: /probe
  kubernetes_sd_configs:
  - role: pod
  params:
    module: [tcp_connect]
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
    action: keep
    regex: tcp
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __param_target
  - action: replace
    target_label: __address__
    replacement: blackbox-exporter.kube-system:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'traefik'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scheme]
    action: keep
    regex: traefik
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
EOF
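Several jobs above (`kubernetes-pods`, `traefik`, and `blackbox_tcp_pod_probe` for `__param_target`) compute a `host:port` with the same rule: Prometheus joins the `source_labels` values with `;`, matches the fully anchored `regex` against that string and, on a match, writes `replacement` to `target_label`. The sketch below is only a shell illustration of what `([^:]+)(?::\d+)?;(\d+)` with `$1:$2` yields for IPv4 pod addresses; `rewrite_address` is a hypothetical name, and Prometheus itself evaluates the rule with Go's RE2 regex engine.

```shell
#!/bin/sh
# Illustration only: what the relabel rule
#   source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
#   regex: ([^:]+)(?::\d+)?;(\d+)
#   replacement: $1:$2
# produces for an IPv4 pod address. Prometheus matches the regex against
# "ADDRESS;PORT", so the rule only fires when the port annotation is all digits.
rewrite_address() {
  local address="$1" port="$2"
  case "$port" in
    '' | *[!0-9]*)
      printf '%s\n' "$address"                    # no match: label left unchanged
      ;;
    *)
      printf '%s:%s\n' "${address%%:*}" "$port"   # host part + annotated port
      ;;
  esac
}
```

For example, a pod annotated with `prometheus.io/scrape: "true"` and `prometheus.io/port: "9100"` whose discovered address is `10.10.30.14:8080` would be scraped at `10.10.30.14:9100`; Prometheus exposes pod annotations as `__meta_kubernetes_pod_annotation_<name>`, with characters such as `.` and `/` replaced by `_`.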

(7) Apply the Prometheus resource manifests

Run on either master node (mfyxw30.mfyxw.com or mfyxw40.mfyxw.com)

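Resources are limited, so first check which compute node Jenkins runs on and place Prometheus on a different one, because Prometheus uses a lot of memory. In production it is usually recommended to dedicate a compute node to Prometheus and taint it so that no other pods can be scheduled there. This lab skips the taint; instead, Prometheus's deployment.yaml uses nodeName to pin Prometheus to a specific compute node. In a real environment, remove nodeName.

```
[root@mfyxw30 ~]# kubectl get pod -n infra -o wide | grep jenkins
jenkins-b99776c69-49dnr   1/1   Running   7   7d3h   10.10.40.5   mfyxw40.mfyxw.com   <none>   <none>
[root@mfyxw30 ~]#
```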

Apply the resource manifests

```
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/rbrc.yaml
serviceaccount/prometheus created
clusterrole.rbac.authorization.k8s.io/prometheus created
clusterrolebinding.rbac.authorization.k8s.io/prometheus created
[root@mfyxw30 ~]#
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/deployment.yaml
deployment.extensions/prometheus created
[root@mfyxw30 ~]#
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/service.yaml
service/prometheus created
[root@mfyxw30 ~]#
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/Ingress.yaml
ingress.extensions/prometheus created
```

(8) Add the DNS record and test resolution

Run on mfyxw10.mfyxw.com

Add the record. The heredoc delimiter is quoted ('EOF') so that the shell writes $ORIGIN and $TTL literally instead of expanding them to empty strings.

```
[root@mfyxw10 ~]# cat > /var/named/od.com.zone << 'EOF'
$ORIGIN od.com.
$TTL 600    ; 10 minutes
@       IN SOA  dns.od.com. dnsadmin.od.com. (
                ; increment the serial by 1 so the zone is newer than the previous version
                2020031315 ; serial
                10800      ; refresh (3 hours)
                900        ; retry (15 minutes)
                604800     ; expire (1 week)
                86400      ; minimum (1 day)
                )
                NS   dns.od.com.
$TTL 60 ; 1 minute
dns                A    192.168.80.10
harbor             A    192.168.80.50   ; harbor record
k8s-yaml           A    192.168.80.50
traefik            A    192.168.80.100
dashboard          A    192.168.80.100
zk1                A    192.168.80.10
zk2                A    192.168.80.20
zk3                A    192.168.80.30
jenkins            A    192.168.80.100
dubbo-monitor      A    192.168.80.100
demo               A    192.168.80.100
mysql              A    192.168.80.10
config             A    192.168.80.100
portal             A    192.168.80.100
zk-test            A    192.168.80.10
zk-prod            A    192.168.80.20
config-test        A    192.168.80.100
config-prod        A    192.168.80.100
demo-test          A    192.168.80.100
demo-prod          A    192.168.80.100
blackbox           A    192.168.80.100
prometheus         A    192.168.80.100
EOF
```
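The zone file contains the literal directives $ORIGIN and $TTL, and the Prometheus files later in this section contain `$1`, `${1}` and `{{ $labels.instance }}`. With an unquoted heredoc delimiter (`<< EOF`), the shell performs parameter expansion on the body before `cat` writes it, so any `$NAME` that is not a set shell variable silently becomes an empty string. A minimal sketch that writes one such line both ways into a scratch directory (the directory and file names are arbitrary):

```shell
#!/usr/bin/env bash
# Write the same line with an unquoted and a quoted heredoc delimiter.
workdir=$(mktemp -d)
unset ORIGIN   # make sure no shell variable named ORIGIN is set

# Unquoted delimiter: the shell expands $ORIGIN (empty here) before cat writes the line.
cat > "$workdir/unquoted.zone" << EOF
$ORIGIN od.com.
EOF

# Quoted delimiter: the body is written exactly as typed.
cat > "$workdir/quoted.zone" << 'EOF'
$ORIGIN od.com.
EOF

echo "unquoted: [$(cat "$workdir/unquoted.zone")]"
echo "quoted:   [$(cat "$workdir/quoted.zone")]"
```

The first line prints `[ od.com.]` and the second `[$ORIGIN od.com.]`. Quoting the delimiter, as in `<< 'EOF'`, is the simplest way to keep such files verbatim.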

Restart the DNS service

```
[root@mfyxw10 ~]# systemctl restart named
```

Test the resolution

```
[root@mfyxw10 ~]# dig -t A prometheus.od.com @192.168.80.10 +short
192.168.80.100
```

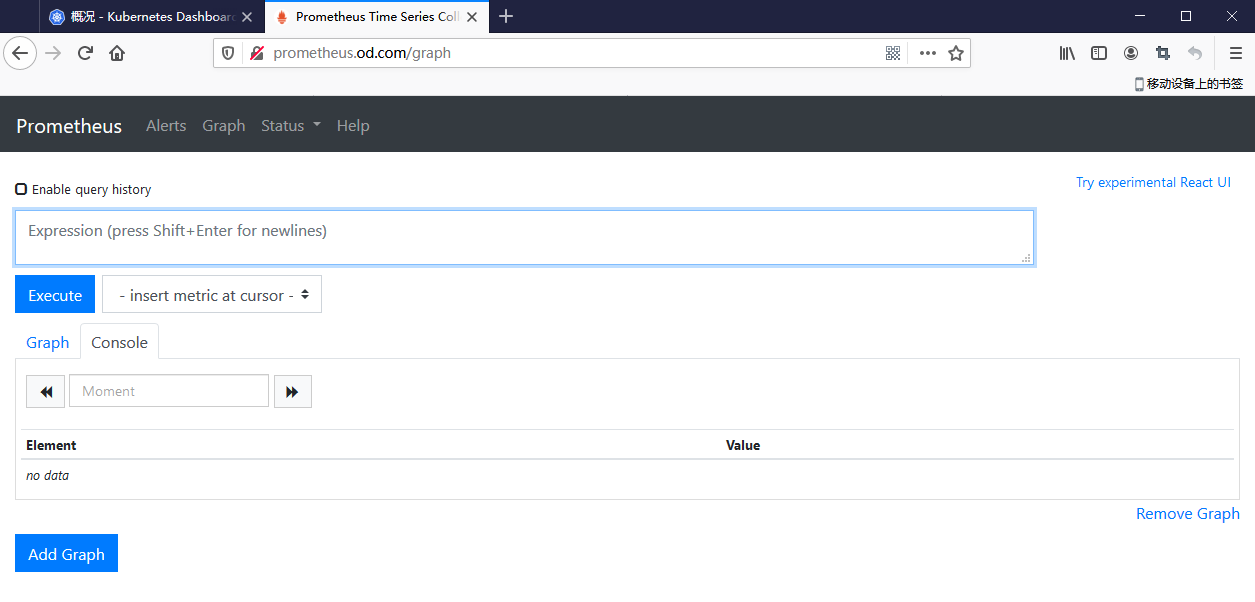

(9) Open the Prometheus domain (prometheus.od.com) in a browser

6. Configure Prometheus to monitor application containers

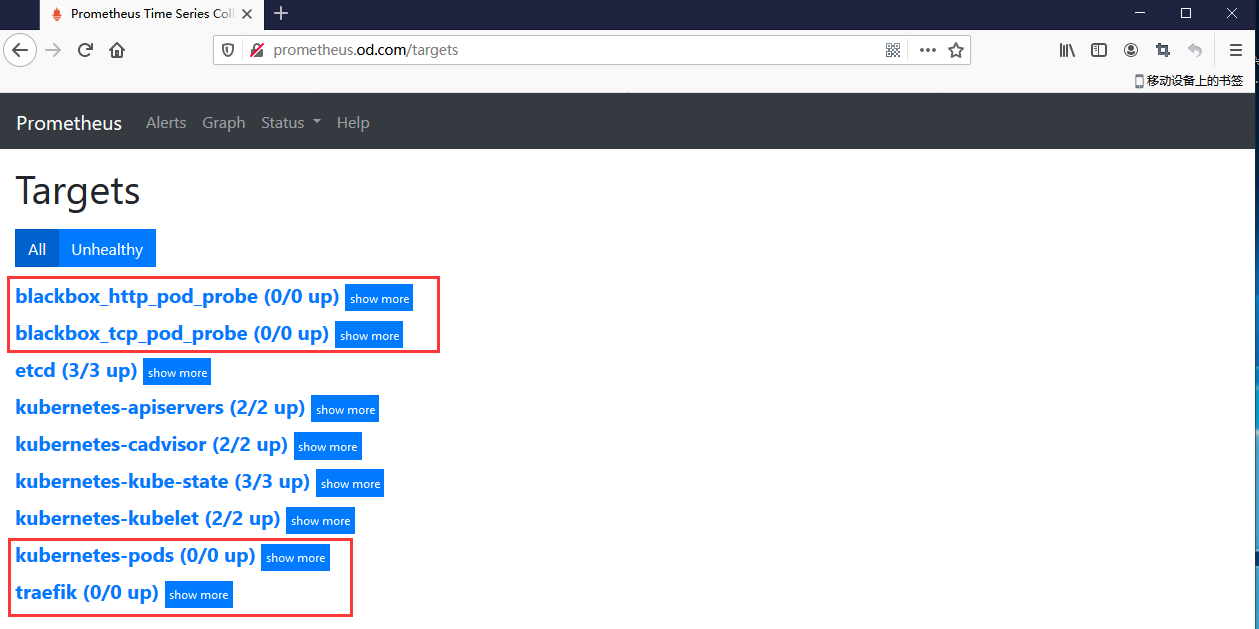

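On the Prometheus Targets page, the blackbox_http_pod_probe, blackbox_tcp_pod_probe, kubernetes-pods and traefik jobs have no targets yet, so the following steps add monitoring for each of them.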

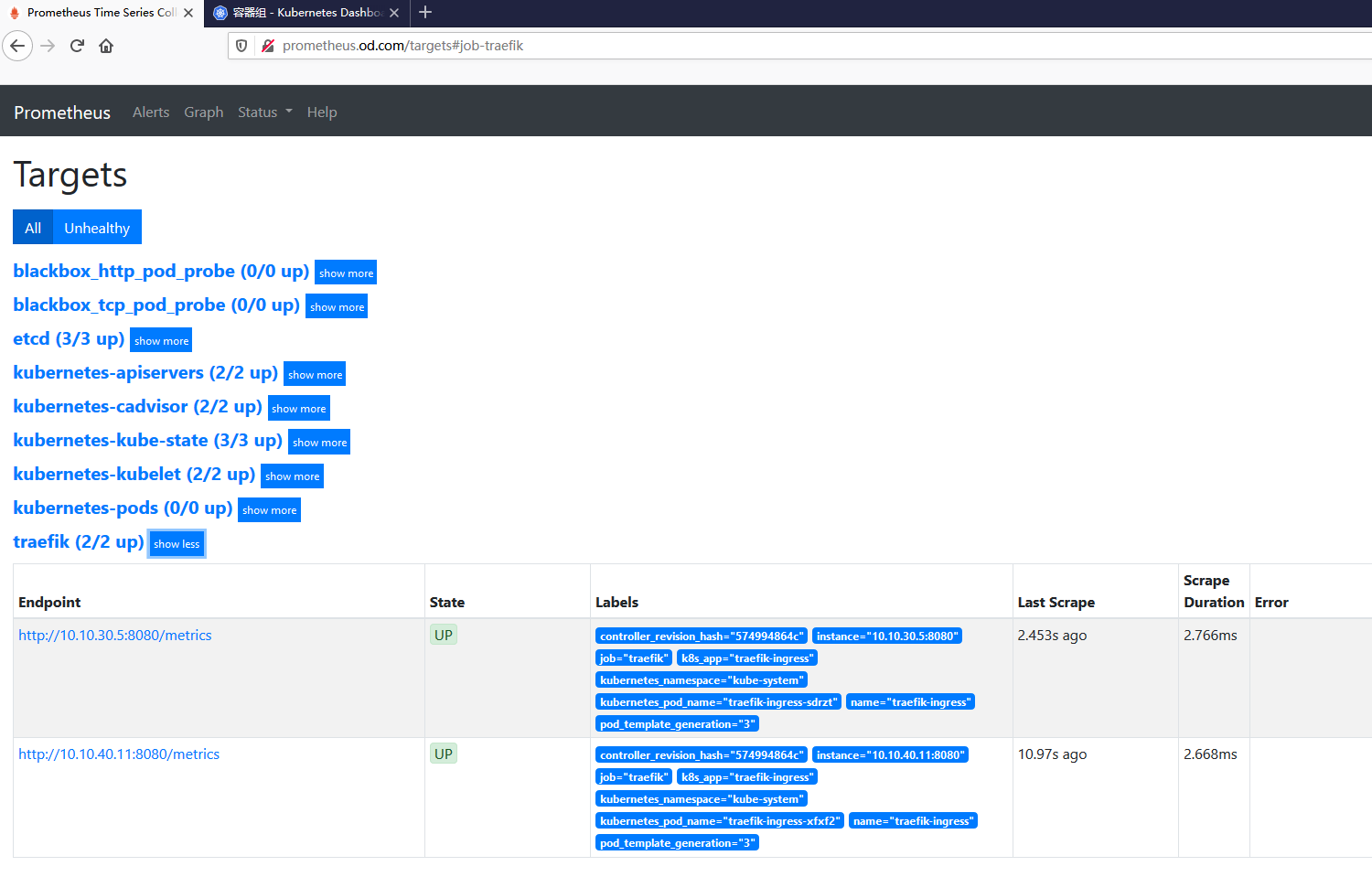

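(1) Monitor traefik

The traefik job in the Prometheus configuration (visible under Status > Configuration) selects pods by their annotations, so monitoring Traefik only requires adding annotations to the pod template.

Edit the original traefik DaemonSet.yaml and add these annotations:

```yaml
annotations:
  prometheus_io_scheme: traefik
  prometheus_io_path: /metrics
  prometheus_io_port: "8080"
```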
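In the traefik job, Prometheus keeps only pods whose prometheus_io_scheme annotation is exactly traefik, then builds the scrape address by joining `__address__` and the prometheus_io_port annotation with `;` (the default separator) and applying `([^:]+)(?::\d+)?;(\d+)` with replacement `$1:$2`; Prometheus anchors the regex at both ends. The bash function below is only a rough emulation of that rewrite, not Prometheus code: bash's `=~` uses POSIX ERE, which has no `(?:...)` or `\d`, so it uses an equivalent pattern with an extra capturing group. The pod IP 10.10.30.8 is hypothetical.

```shell
#!/usr/bin/env bash
# relabel_address ADDRESS PORT_ANNOTATION
# Emulates the "action: replace" rule that rewrites __address__ in the traefik
# (and kubernetes-pods) jobs. If the joined string does not match, Prometheus
# leaves the target label unchanged, so the original ADDRESS is printed.
relabel_address() {
    local joined="$1;$2"
    local re='^([^:]+)(:[0-9]+)?;([0-9]+)$'   # ERE equivalent of ([^:]+)(?::\d+)?;(\d+)
    if [[ $joined =~ $re ]]; then
        printf '%s:%s\n' "${BASH_REMATCH[1]}" "${BASH_REMATCH[3]}"
    else
        printf '%s\n' "$1"
    fi
}

relabel_address 10.10.30.8:80 8080   # pod address with a declared container port
relabel_address 10.10.30.8 8080      # pod with no declared port
relabel_address 10.10.30.8:80 ""     # annotation missing: no match, unchanged
```

The first two calls print `10.10.30.8:8080`, which is why the annotation port wins over whatever container port Kubernetes reported.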

Run on the ops host (mfyxw50.mfyxw.com)

The modified DaemonSet.yaml:

```
[root@mfyxw50 ~]# cat > /data/k8s-yaml/traefik/DaemonSet.yaml << EOF
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: traefik-ingress
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress
spec:
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress
        name: traefik-ingress
      annotations:
        prometheus_io_scheme: traefik
        prometheus_io_path: /metrics
        prometheus_io_port: "8080"
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      containers:
      - image: harbor.od.com/public/traefik:v1.7.2
        name: traefik-ingress
        ports:
        - name: controller
          containerPort: 80
          hostPort: 81
        - name: admin-web
          containerPort: 8080
        securityContext:
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
        args:
        - --api
        - --kubernetes
        - --logLevel=INFO
        - --insecureskipverify=true
        - --kubernetes.endpoint=https://192.168.80.100:7443
        - --accesslog
        - --accesslog.filepath=/var/log/traefik_access.log
        - --traefiklog
        - --traefiklog.filepath=/var/log/traefik.log
        - --metrics.prometheus
EOF
```

Delete the original traefik DaemonSet (and its pods)

Run the following on mfyxw30.mfyxw.com

```
[root@mfyxw30 ~]# kubectl delete -f http://k8s-yaml.od.com/traefik/DaemonSet.yaml
daemonset.extensions "traefik-ingress" deleted
```

Apply the modified DaemonSet.yaml

```
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/DaemonSet.yaml
daemonset.extensions/traefik-ingress created
```

Open Prometheus again and check under Targets that traefik is now being scraped

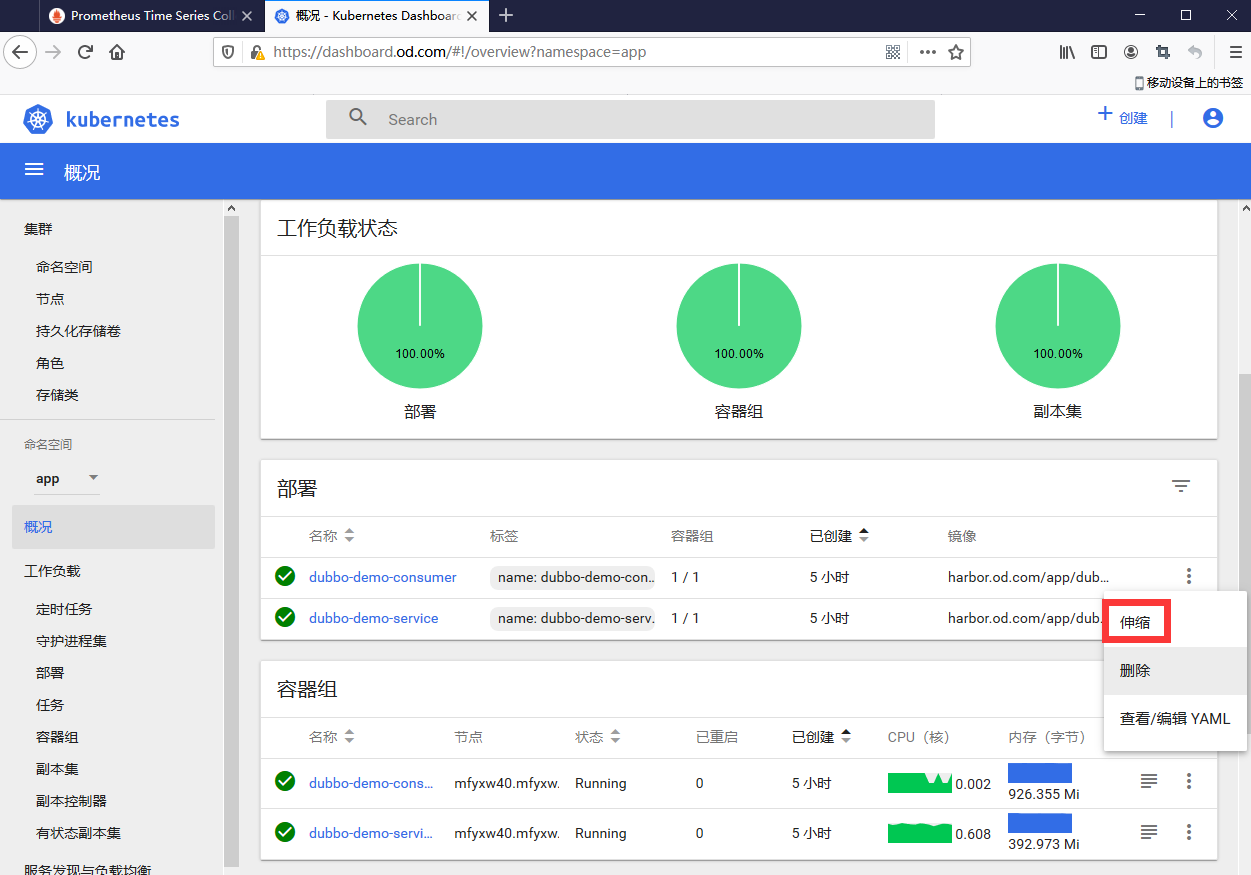

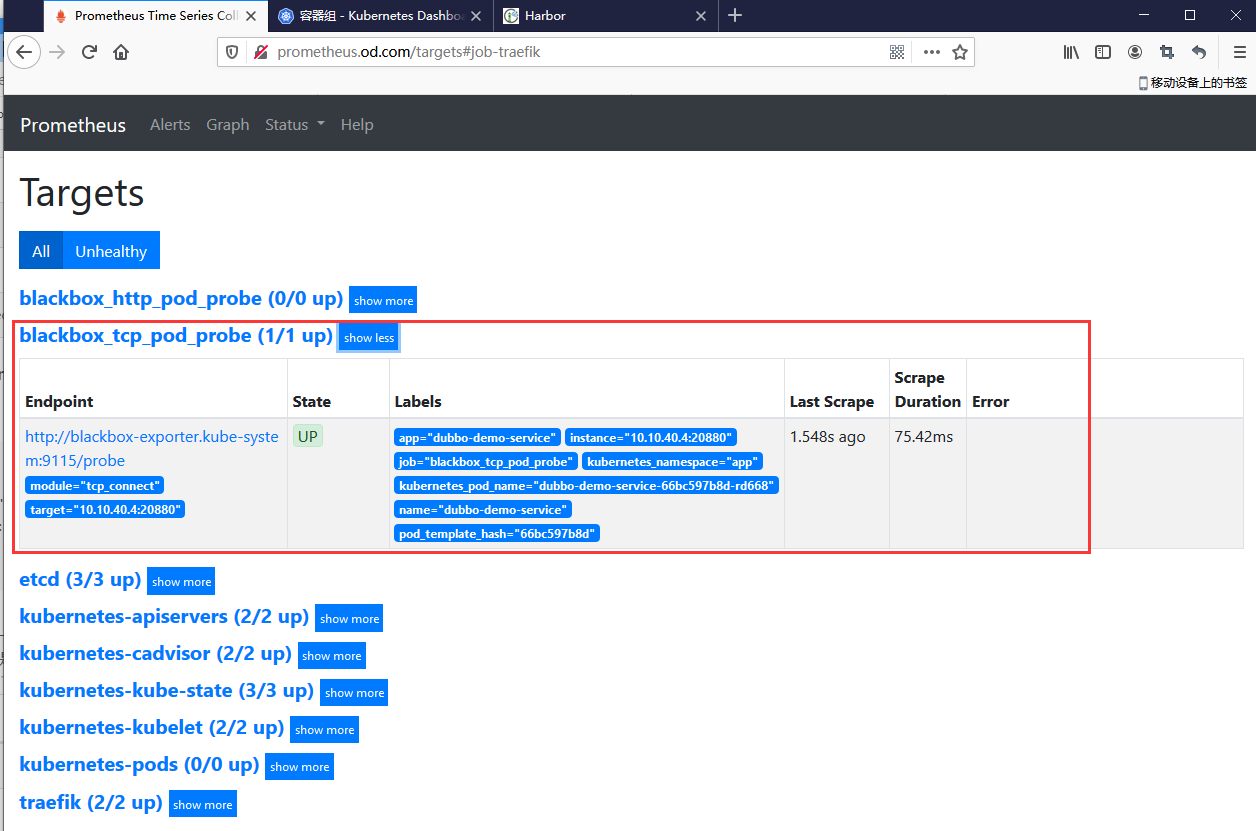

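(2) blackbox: check whether a TCP service is alive

blackbox_tcp_pod_probe checks whether a TCP service is alive. The Dubbo provider (dubbo-demo-service) is used as the example here.

Just add annotations under template.metadata:

```yaml
annotations:
  blackbox_port: "20880"
  blackbox_scheme: tcp
```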
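With these annotations the blackbox_tcp_pod_probe job does not scrape the Dubbo pod itself. Its relabeling puts podIP:20880 into `__param_target`, replaces `__address__` with blackbox-exporter.kube-system:9115, and adds module=tcp_connect from `params`, so each scrape amounts to a request to the exporter's /probe endpoint. The sketch below prints an equivalent URL, handy for probing by hand with curl. The pod IP is hypothetical, and the optional third argument swaps in another exporter address, for example blackbox.od.com, assuming that ingress was set up when blackbox-exporter was deployed. Prometheus percent-encodes the query itself; an IP:port target works either way.

```shell
#!/usr/bin/env bash
# probe_url MODULE TARGET [EXPORTER]
# Prints a blackbox-exporter /probe URL equivalent to what the blackbox_*_pod_probe
# jobs request. EXPORTER defaults to the in-cluster Service address from prometheus.yml.
probe_url() {
    local module=$1 target=$2
    local exporter=${3:-blackbox-exporter.kube-system:9115}
    printf 'http://%s/probe?module=%s&target=%s\n' "$exporter" "$module" "$target"
}

probe_url tcp_connect 10.10.30.9:20880
probe_url tcp_connect 10.10.30.9:20880 blackbox.od.com
```

For example, `curl -s "$(probe_url tcp_connect 10.10.30.9:20880 blackbox.od.com)" | grep probe_success` should show `probe_success 1` while the Dubbo port accepts connections.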

Modify dubbo-demo-service's deployment.yaml as follows

```
[root@mfyxw50 ~]# cat > /data/k8s-yaml/dubbo-demo-service/deployment.yaml << EOF
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-service
  namespace: app
  labels:
    name: dubbo-demo-service
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-service
  template:
    metadata:
      labels:
        app: dubbo-demo-service
        name: dubbo-demo-service
      annotations:
        blackbox_port: "20880"
        blackbox_scheme: tcp
    spec:
      containers:
      - name: dubbo-demo-service
        image: harbor.od.com/app/dubbo-demo-service:master_20200613_1929
        ports:
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-server.jar
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600
EOF
```

Run the following on either master node (mfyxw30 or mfyxw40)

First delete the running dubbo-demo-service Deployment, then create it again

```
[root@mfyxw30 ~]# kubectl delete -f http://k8s-yaml.od.com/dubbo-demo-service/deployment.yaml
deployment.extensions "dubbo-demo-service" deleted
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-service/deployment.yaml
deployment.extensions/dubbo-demo-service created
```

Open Prometheus again and check under Targets that blackbox_tcp_pod_probe now has a target

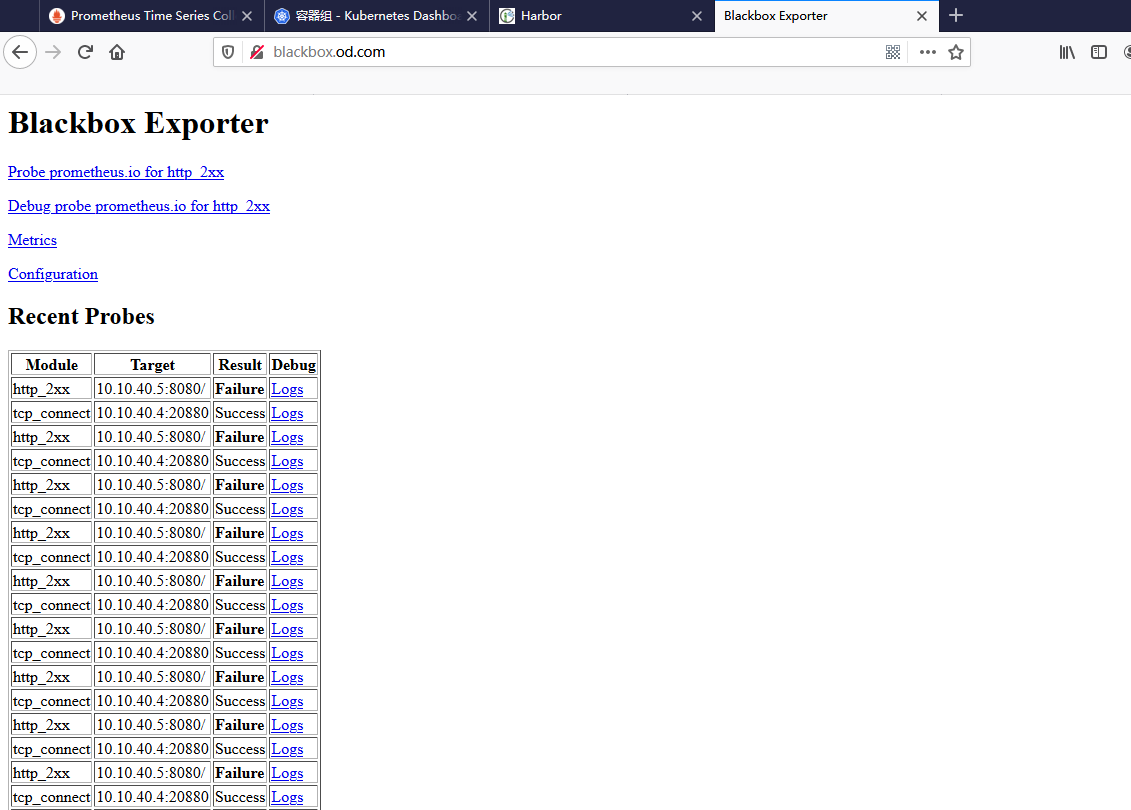

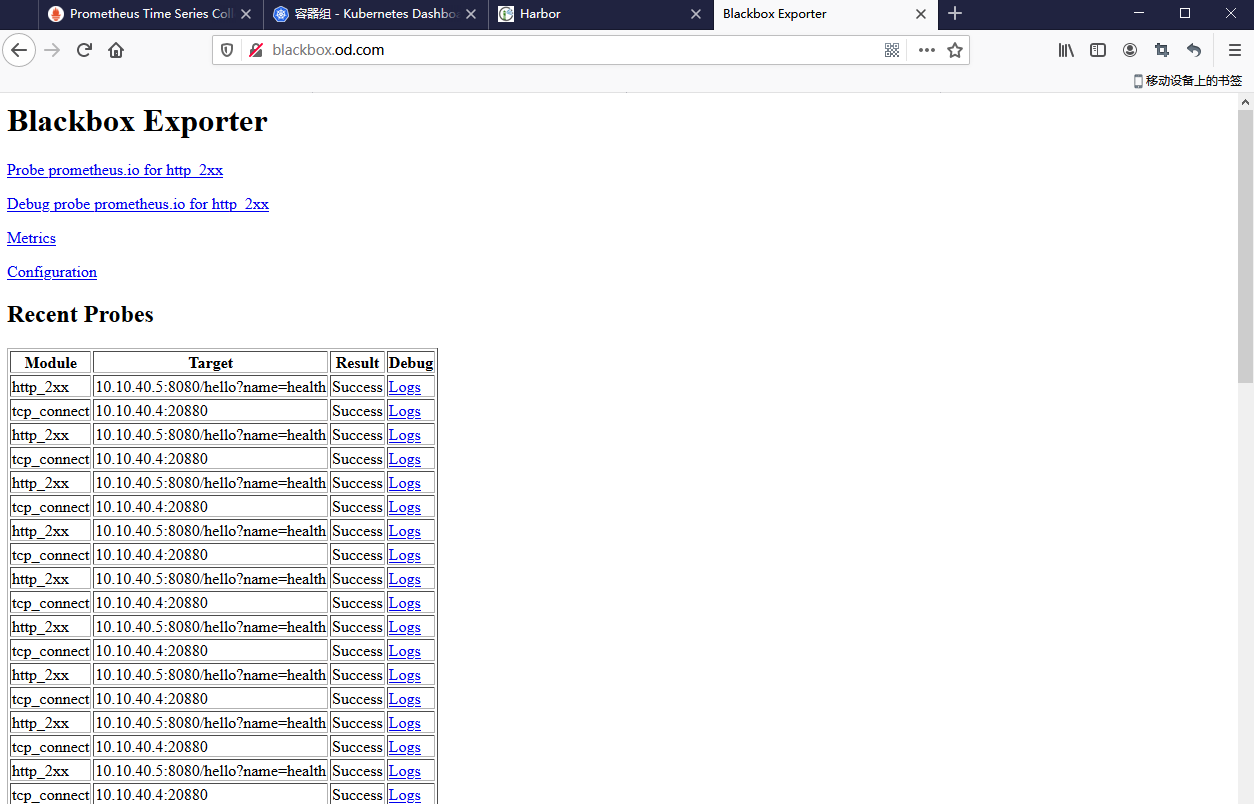

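(3) blackbox: check whether an HTTP service is alive

blackbox_http_pod_probe checks whether an HTTP service is alive. The Dubbo consumer (dubbo-demo-consumer) is used as the example here.

Again, just add annotations under template.metadata:

```yaml
annotations:
  blackbox_path: /
  blackbox_port: "8080"
  blackbox_scheme: http
```

The root path of dubbo-demo-consumer serves no content, so probing / as-is fails continuously (the target shows Failure).

Therefore change / to /hello?name=health.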
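For blackbox_http_pod_probe the replacement `$1:$2$3` appends blackbox_path directly after the port, so the probe target becomes podIP:8080/hello?name=health. When probing by hand, percent-encoding that target is the safe choice: here `?` and `=` happen to survive, but a path containing `&` would otherwise be split into separate query parameters. A small bash sketch, for ASCII input only; the pod IP is hypothetical, and blackbox.od.com assumes that name routes to blackbox-exporter, as its DNS record suggests.

```shell
#!/usr/bin/env bash
# urlencode STRING: percent-encode every character except the RFC 3986
# unreserved set (A-Z a-z 0-9 . _ ~ -). Intended for ASCII input.
urlencode() {
    local s=$1 out='' c i
    for (( i = 0; i < ${#s}; i++ )); do
        c=${s:i:1}
        case $c in
            [A-Za-z0-9._~-]) out+=$c ;;
            *) printf -v c '%%%02X' "'$c"; out+=$c ;;   # "'x" makes printf use the character code
        esac
    done
    printf '%s\n' "$out"
}

pod_ip=10.10.30.10                        # hypothetical consumer pod IP
target="$pod_ip:8080/hello?name=health"   # same shape as replacement $1:$2$3
echo "http://blackbox.od.com/probe?module=http_2xx&target=$(urlencode "$target")"
```

This prints `http://blackbox.od.com/probe?module=http_2xx&target=10.10.30.10%3A8080%2Fhello%3Fname%3Dhealth`; opening it with and without the `/hello?name=health` path reproduces the Success and Failure results described here.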

The modified deployment.yaml of dubbo-demo-consumer is shown below. (You can first test with / to see which error is reported, then change it to /hello?name=health.)

```
[root@mfyxw50 ~]# cat > /data/k8s-yaml/dubbo-demo-consumer/deployment.yaml << EOF
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: app
  labels:
    name: dubbo-demo-consumer
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-consumer
  template:
    metadata:
      labels:
        app: dubbo-demo-consumer
        name: dubbo-demo-consumer
      annotations:
        blackbox_path: /hello?name=health
        blackbox_port: "8080"
        blackbox_scheme: http
    spec:
      containers:
      - name: dubbo-demo-consumer
        image: harbor.od.com/app/dubbo-demo-consumer:master_20200614_2211
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-client.jar
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600
EOF
```

Run the following on either master node (mfyxw30 or mfyxw40)

First delete the running dubbo-demo-consumer Deployment, then create it again

```
[root@mfyxw30 ~]# kubectl delete -f http://k8s-yaml.od.com/dubbo-demo-consumer/deployment.yaml
deployment.extensions "dubbo-demo-consumer" deleted
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/deployment.yaml
deployment.extensions/dubbo-demo-consumer created
```

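(4) kubernetes-pods: monitor JVM metrics

Again, just add annotations under template.metadata. Annotation values must be strings, so "true" has to be quoted: an unquoted true is a YAML boolean and Kubernetes rejects the manifest.

```yaml
annotations:
  prometheus_io_scrape: "true"
  prometheus_io_port: "12346"
  prometheus_io_path: /
```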
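The kubernetes-pods job reads these annotations through meta labels such as `__meta_kubernetes_pod_annotation_prometheus_io_scrape` and keeps a pod only if that label matches `regex: true`. Prometheus anchors relabel regexes, so values such as True or yes drop the pod. A rough bash emulation of both steps (a sketch, not Prometheus code):

```shell
#!/usr/bin/env bash
# annotation_meta_label KEY: name of the meta label Prometheus derives from a pod
# annotation; characters that are invalid in label names are replaced by "_".
annotation_meta_label() {
    printf '__meta_kubernetes_pod_annotation_%s\n' "${1//[^a-zA-Z0-9_]/_}"
}

# keep_scrape VALUE: emulate "action: keep" with "regex: true".
# The regex is anchored, so only the exact string "true" keeps the target.
keep_scrape() {
    [[ $1 =~ ^(true)$ ]]
}

annotation_meta_label prometheus_io_scrape
annotation_meta_label prometheus.io/scrape   # dotted/slashed keys map to the same label
for v in true True yes ""; do
    if keep_scrape "$v"; then echo "'$v' -> kept"; else echo "'$v' -> dropped"; fi
done
```

Both annotation spellings map to the same meta label, which is why configurations written for `prometheus.io/scrape` and for `prometheus_io_scrape` use identical relabel rules.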

dubbo-demo-service (provider) and dubbo-demo-consumer (consumer) are again used as the examples.

Run on the ops host (mfyxw50.mfyxw.com)

Modify dubbo-demo-service's deployment.yaml as follows:

```
[root@mfyxw50 ~]# cat > /data/k8s-yaml/dubbo-demo-service/deployment.yaml << EOF
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-service
  namespace: app
  labels:
    name: dubbo-demo-service
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-service
  template:
    metadata:
      labels:
        app: dubbo-demo-service
        name: dubbo-demo-service
      annotations:
        blackbox_port: "20880"
        blackbox_scheme: tcp
        prometheus_io_scrape: "true"
        prometheus_io_port: "12346"
        prometheus_io_path: /
    spec:
      containers:
      - name: dubbo-demo-service
        image: harbor.od.com/app/dubbo-demo-service:master_20200613_1929
        ports:
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-server.jar
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600
EOF
```

Modify dubbo-demo-consumer's deployment.yaml as follows:

```
[root@mfyxw50 ~]# cat > /data/k8s-yaml/dubbo-demo-consumer/deployment.yaml << EOF
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: app
  labels:
    name: dubbo-demo-consumer
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-consumer
  template:
    metadata:
      labels:
        app: dubbo-demo-consumer
        name: dubbo-demo-consumer
      annotations:
        blackbox_path: /hello?name=health
        blackbox_port: "8080"
        blackbox_scheme: http
        prometheus_io_scrape: "true"
        prometheus_io_port: "12346"
        prometheus_io_path: /
    spec:
      containers:
      - name: dubbo-demo-consumer
        image: harbor.od.com/app/dubbo-demo-consumer:master_20200614_2211
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-client.jar
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600
EOF
```

Delete the existing Deployments (and their pods), then create them again

Run on either master node (mfyxw30 or mfyxw40)

```
[root@mfyxw30 ~]# kubectl delete -f http://k8s-yaml.od.com/dubbo-demo-service/deployment.yaml
deployment.extensions "dubbo-demo-service" deleted
[root@mfyxw30 ~]#
[root@mfyxw30 ~]# kubectl delete -f http://k8s-yaml.od.com/dubbo-demo-consumer/deployment.yaml
deployment.extensions "dubbo-demo-consumer" deleted
[root@mfyxw30 ~]#
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-service/deployment.yaml
deployment.extensions/dubbo-demo-service created
[root@mfyxw30 ~]#
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/deployment.yaml
deployment.extensions/dubbo-demo-consumer created
```

7. Install, deploy and configure Grafana

Grafana on Docker Hub: https://hub.docker.com/r/grafana/grafana

Grafana on GitHub: https://github.com/grafana/grafana

Grafana website: https://grafana.com/

Run on the ops host (mfyxw50.mfyxw.com)

(1) Pull the grafana image

```
[root@mfyxw50 ~]# docker pull grafana/grafana:5.4.2
5.4.2: Pulling from grafana/grafana
a5a6f2f73cd8: Pull complete
08e6195c0f29: Pull complete
b7bd3a2a524c: Pull complete
d3421658103b: Pull complete
cd7c84229877: Pull complete
49917e11f039: Pull complete
Digest: sha256:b9a31857e86e9cf43552605bd7f3c990c123f8792ab6bea8f499db1a1bdb7d53
Status: Downloaded newer image for grafana/grafana:5.4.2
docker.io/grafana/grafana:5.4.2
```

(2) Tag the grafana image and push it to the private registry

```
[root@mfyxw50 ~]# docker images | grep grafana
grafana/grafana   5.4.2   6f18ddf9e552   20 months ago   243MB
[root@mfyxw50 ~]#
[root@mfyxw50 ~]# docker tag grafana/grafana:5.4.2 harbor.od.com/infra/grafana:5.4.2
[root@mfyxw50 ~]#
[root@mfyxw50 ~]# docker login harbor.od.com
Authenticating with existing credentials...
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
[root@mfyxw50 ~]#
[root@mfyxw50 ~]# docker push harbor.od.com/infra/grafana:5.4.2
The push refers to repository [harbor.od.com/infra/grafana]
8e6f0f1fe3f4: Pushed
f8bf0b7b071d: Pushed
5dde66caf2d2: Pushed
5c8801473422: Pushed
11f89658f27f: Pushed
ef68f6734aa4: Pushed
5.4.2: digest: sha256:b9a31857e86e9cf43552605bd7f3c990c123f8792ab6bea8f499db1a1bdb7d53 size: 1576
```

(3) Provide the grafana resource manifests

First create a directory for the grafana manifests

```
[root@mfyxw50 ~]# mkdir -p /data/k8s-yaml/grafana
```

Create the grafana manifests

rbac.yaml:

```
[root@mfyxw50 ~]# cat >/data/k8s-yaml/grafana/rbac.yaml << EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: grafana
rules:
- apiGroups:
  - "*"
  resources:
  - namespaces
  - deployments
  - pods
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: grafana
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: grafana
subjects:
- kind: User
  name: k8s-node
EOF
```

deployment.yaml:

```
[root@mfyxw50 ~]# cat >/data/k8s-yaml/grafana/deployment.yaml << EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    app: grafana
    name: grafana
  name: grafana
  namespace: infra
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      name: grafana
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: grafana
        name: grafana
    spec:
      containers:
      - name: grafana
        image: harbor.od.com/infra/grafana:5.4.2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /var/lib/grafana
          name: data
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      volumes:
      - nfs:
          server: mfyxw50
          path: /data/nfs-volume/grafana
        name: data
EOF
```

service.yaml:

```
[root@mfyxw50 ~]# cat >/data/k8s-yaml/grafana/service.yaml << EOF
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: infra
spec:
  ports:
  - port: 3000
    protocol: TCP
    targetPort: 3000
  selector:
    app: grafana
EOF
```

ingress.yaml:

```
[root@mfyxw50 ~]# cat >/data/k8s-yaml/grafana/ingress.yaml << EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: grafana
  namespace: infra
spec:
  rules:
  - host: grafana.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: grafana
          servicePort: 3000
EOF
```

(4) Create the persistent storage for grafana (NFS directory)

```
[root@mfyxw50 ~]# mkdir -p /data/nfs-volume/grafana
```

(5) Add the DNS record

Run on the DNS server (mfyxw10)

Add a record for grafana.od.com

```
[root@mfyxw10 ~]# cat > /var/named/od.com.zone << 'EOF'
$ORIGIN od.com.
$TTL 600    ; 10 minutes
@       IN SOA  dns.od.com. dnsadmin.od.com. (
                ; increment the serial by 1 so the zone is newer than the previous version
                2020031316 ; serial
                10800      ; refresh (3 hours)
                900        ; retry (15 minutes)
                604800     ; expire (1 week)
                86400      ; minimum (1 day)
                )
                NS   dns.od.com.
$TTL 60 ; 1 minute
dns                A    192.168.80.10
harbor             A    192.168.80.50   ; harbor record
k8s-yaml           A    192.168.80.50
traefik            A    192.168.80.100
dashboard          A    192.168.80.100
zk1                A    192.168.80.10
zk2                A    192.168.80.20
zk3                A    192.168.80.30
jenkins            A    192.168.80.100
dubbo-monitor      A    192.168.80.100
demo               A    192.168.80.100
mysql              A    192.168.80.10
config             A    192.168.80.100
portal             A    192.168.80.100
zk-test            A    192.168.80.10
zk-prod            A    192.168.80.20
config-test        A    192.168.80.100
config-prod        A    192.168.80.100
demo-test          A    192.168.80.100
demo-prod          A    192.168.80.100
blackbox           A    192.168.80.100
prometheus         A    192.168.80.100
grafana            A    192.168.80.100
EOF
```
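The comment in the zone file asks for the serial to be incremented on every change; named and any secondary servers use it to tell that the zone is newer. Instead of editing the number by hand, a small helper can bump it. This is a sketch that assumes, as in od.com.zone, exactly one line carries the `; serial` comment. It writes to a temporary file and then copies the result back with `cat`, which keeps the original file's owner and permissions (named must still be able to read it).

```shell
#!/usr/bin/env bash
# bump_serial FILE: add 1 to the number on the line tagged "; serial".
# Assumption: exactly one such line exists, as in /var/named/od.com.zone.
bump_serial() {
    local file=$1 tmp status
    tmp=$(mktemp) || return 1
    # On the serial line, replace the first run of digits with that number + 1;
    # then print every line, modified or not.
    awk '/; serial/ { sub(/[0-9]+/, $1 + 1) } { print }' "$file" > "$tmp" &&
        cat "$tmp" > "$file"   # overwrite in place, keeping owner and mode
    status=$?
    rm -f "$tmp"
    return "$status"
}
```

Typical use would be `bump_serial /var/named/od.com.zone && systemctl restart named` after adding a record with an editor.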

Restart the DNS service

```
[root@mfyxw10 ~]# systemctl restart named
```

Test the resolution

```
[root@mfyxw10 ~]# dig -t A grafana.od.com @192.168.80.10 +short
192.168.80.100
```

(6) Apply the grafana resource manifests

Run on either master node (mfyxw30 or mfyxw40)

```
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/grafana/rbac.yaml
clusterrole.rbac.authorization.k8s.io/grafana created
clusterrolebinding.rbac.authorization.k8s.io/grafana created
[root@mfyxw30 ~]#
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/grafana/deployment.yaml
deployment.extensions/grafana created
[root@mfyxw30 ~]#
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/grafana/service.yaml
service/grafana created
[root@mfyxw30 ~]#
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/grafana/ingress.yaml
ingress.extensions/grafana created
[root@mfyxw30 ~]#
[root@mfyxw30 ~]# kubectl get pods -n infra | grep grafana
grafana-5b64c9d6d-tkldg   1/1   Running   0   105s
```

(7) Log in to Grafana (http://grafana.od.com); the default username and password are both admin

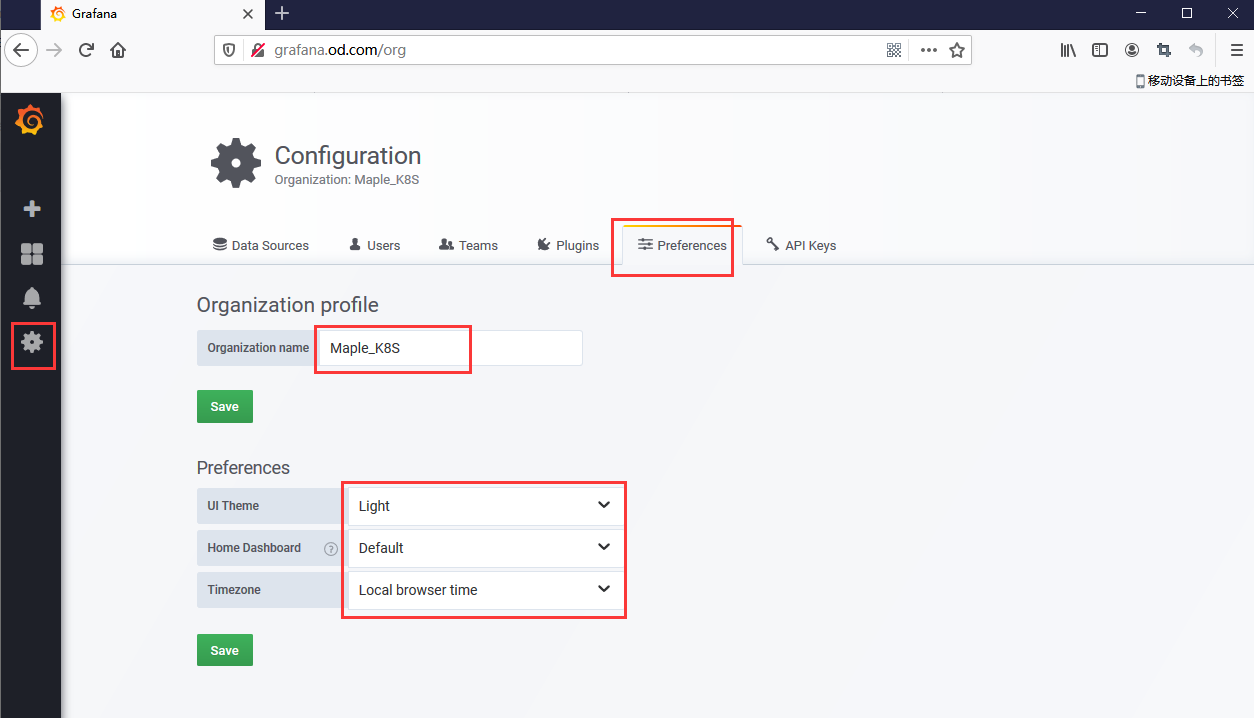

(8) Configure Grafana

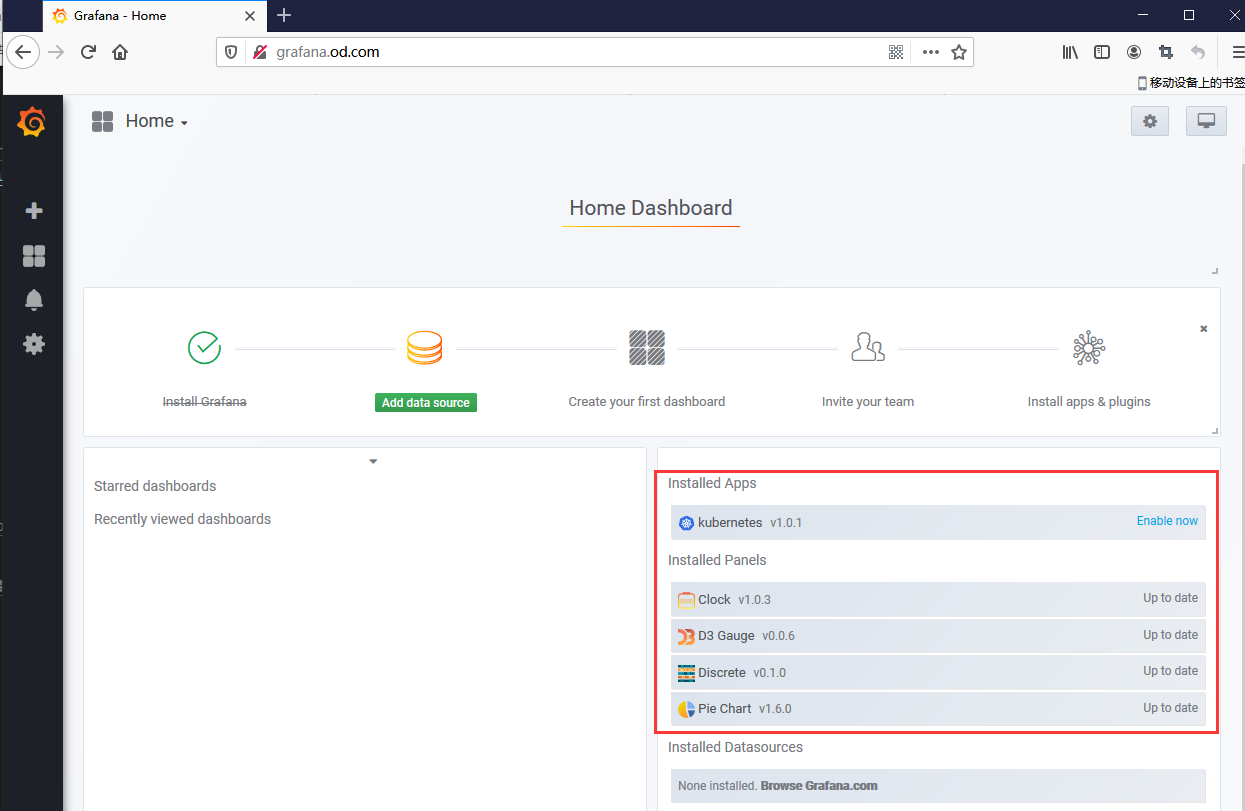

(9) Open a shell in the grafana pod and install the required plugins

```
# Kubernetes plugin
grafana-cli plugins install grafana-kubernetes-app
# Clock plugin
grafana-cli plugins install grafana-clock-panel
# D3 Gauge plugin
grafana-cli plugins install briangann-gauge-panel
# Discrete plugin
grafana-cli plugins install natel-discrete-panel
# Pie Chart plugin
grafana-cli plugins install grafana-piechart-panel
```
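The five installs can also be run as one loop from the bash shell inside the grafana pod. In this sketch GRAFANA_CLI is a hypothetical override variable added only so the loop can be dry-run with `GRAFANA_CLI=echo`; by default it calls the image's own grafana-cli. The loop stops at the first failed install.

```shell
#!/usr/bin/env bash
# Install all plugins used in this section in one pass.
# GRAFANA_CLI (hypothetical override) defaults to the grafana-cli in the grafana image.
GRAFANA_CLI=${GRAFANA_CLI:-grafana-cli}
plugins=(
    grafana-kubernetes-app
    grafana-clock-panel
    briangann-gauge-panel
    natel-discrete-panel
    grafana-piechart-panel
)

install_plugins() {
    local p
    for p in "${plugins[@]}"; do
        $GRAFANA_CLI plugins install "$p" || return 1
    done
}
```

After pasting the definitions into the pod's shell (opened with `kubectl exec -it <grafana pod> -n infra -- /bin/bash`), run `install_plugins`.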

```
[root@mfyxw30 ~]# kubectl exec -it grafana-5b64c9d6d-tkldg -n infra -- /bin/bash
root@grafana-5b64c9d6d-tkldg:/usr/share/grafana# grafana-cli plugins install grafana-kubernetes-app
installing grafana-kubernetes-app @ 1.0.1
from url: https://grafana.com/api/plugins/grafana-kubernetes-app/versions/1.0.1/download
into: /var/lib/grafana/plugins

✔ Installed grafana-kubernetes-app successfully

Restart grafana after installing plugins . <service grafana-server restart>

root@grafana-5b64c9d6d-tkldg:/usr/share/grafana# grafana-cli plugins install grafana-clock-panel
installing grafana-clock-panel @ 1.0.3
from url: https://grafana.com/api/plugins/grafana-clock-panel/versions/1.0.3/download
into: /var/lib/grafana/plugins

✔ Installed grafana-clock-panel successfully

Restart grafana after installing plugins . <service grafana-server restart>

root@grafana-5b64c9d6d-tkldg:/usr/share/grafana# grafana-cli plugins install grafana-piechart-panel
installing grafana-piechart-panel @ 1.6.0
from url: https://grafana.com/api/plugins/grafana-piechart-panel/versions/1.6.0/download
into: /var/lib/grafana/plugins

✔ Installed grafana-piechart-panel successfully

Restart grafana after installing plugins . <service grafana-server restart>

root@grafana-5b64c9d6d-tkldg:/usr/share/grafana# grafana-cli plugins install briangann-gauge-panel
installing briangann-gauge-panel @ 0.0.6
from url: https://grafana.com/api/plugins/briangann-gauge-panel/versions/0.0.6/download
into: /var/lib/grafana/plugins

✔ Installed briangann-gauge-panel successfully

Restart grafana after installing plugins . <service grafana-server restart>

root@grafana-5b64c9d6d-tkldg:/usr/share/grafana# grafana-cli plugins install natel-discrete-panel
installing natel-discrete-panel @ 0.1.0
from url: https://grafana.com/api/plugins/natel-discrete-panel/versions/0.1.0/download
into: /var/lib/grafana/plugins

✔ Installed natel-discrete-panel successfully

Restart grafana after installing plugins . <service grafana-server restart>
```

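All plugins are now installed. Delete the grafana pod: its Deployment automatically creates a replacement, and the new pod loads the newly installed plugins. The plugins are not lost in the process, because grafana-cli installed them into /var/lib/grafana/plugins, which lives on the NFS volume mounted at /var/lib/grafana.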
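Rather than copying the generated pod name, the pod can be selected through the `name: grafana` label in the Deployment's pod template. A sketch; KUBECTL is a hypothetical override variable (set `KUBECTL=echo` to print the commands instead of running them):

```shell
#!/usr/bin/env bash
# restart_grafana: delete the grafana pod(s) by label, then list the replacement.
# The Deployment's ReplicaSet recreates the pod; the plugins on NFS are reused.
KUBECTL=${KUBECTL:-kubectl}

restart_grafana() {
    $KUBECTL delete pod -n infra -l name=grafana &&
        $KUBECTL get pods -n infra -l name=grafana -o wide
}
```

Run `restart_grafana` on a master node and repeat the `get pods` part until the new pod shows Running.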

After logging back in to Grafana, the newly installed plugins appear on the Home page:

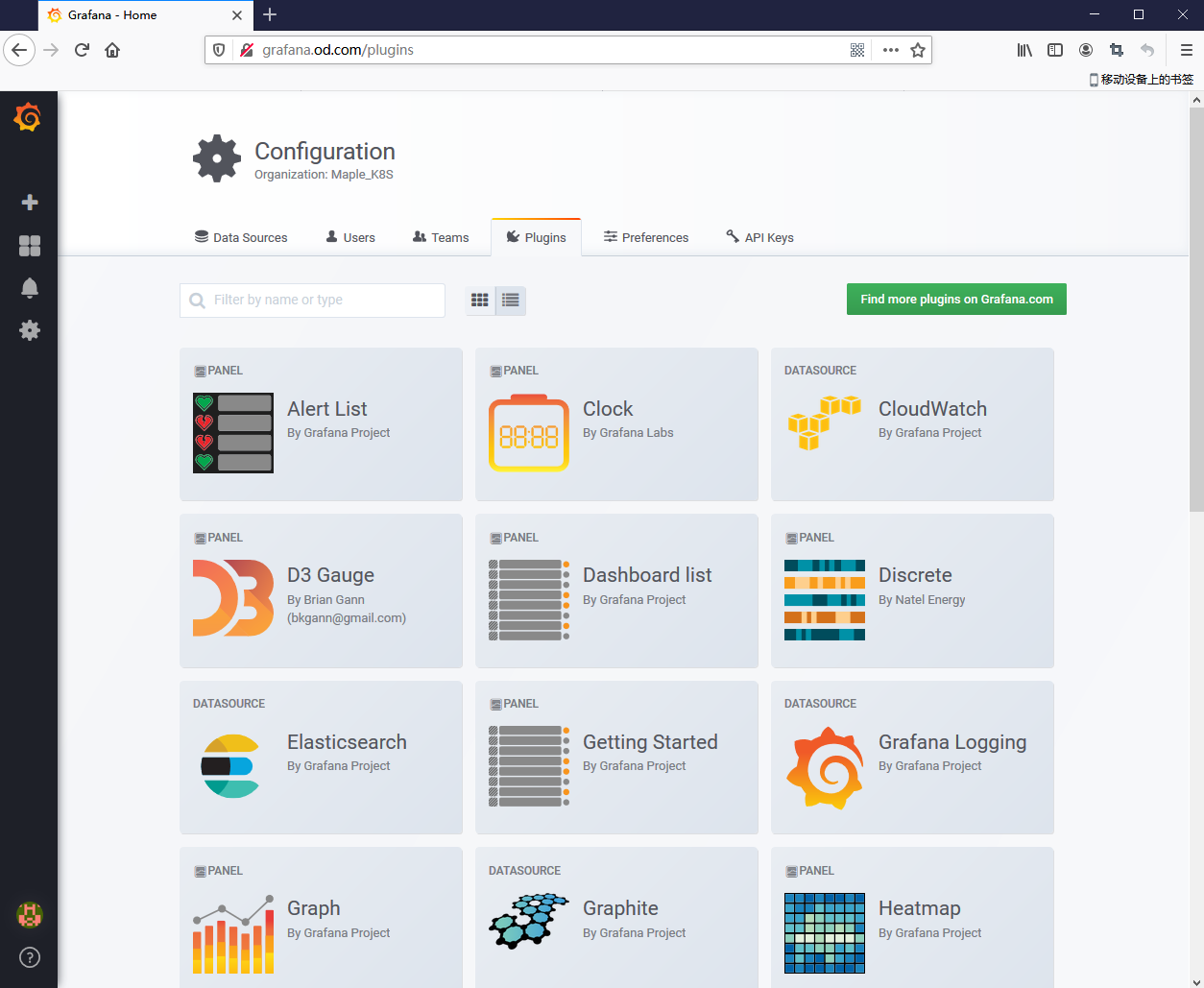

They are also listed under Configuration --> Plugins:

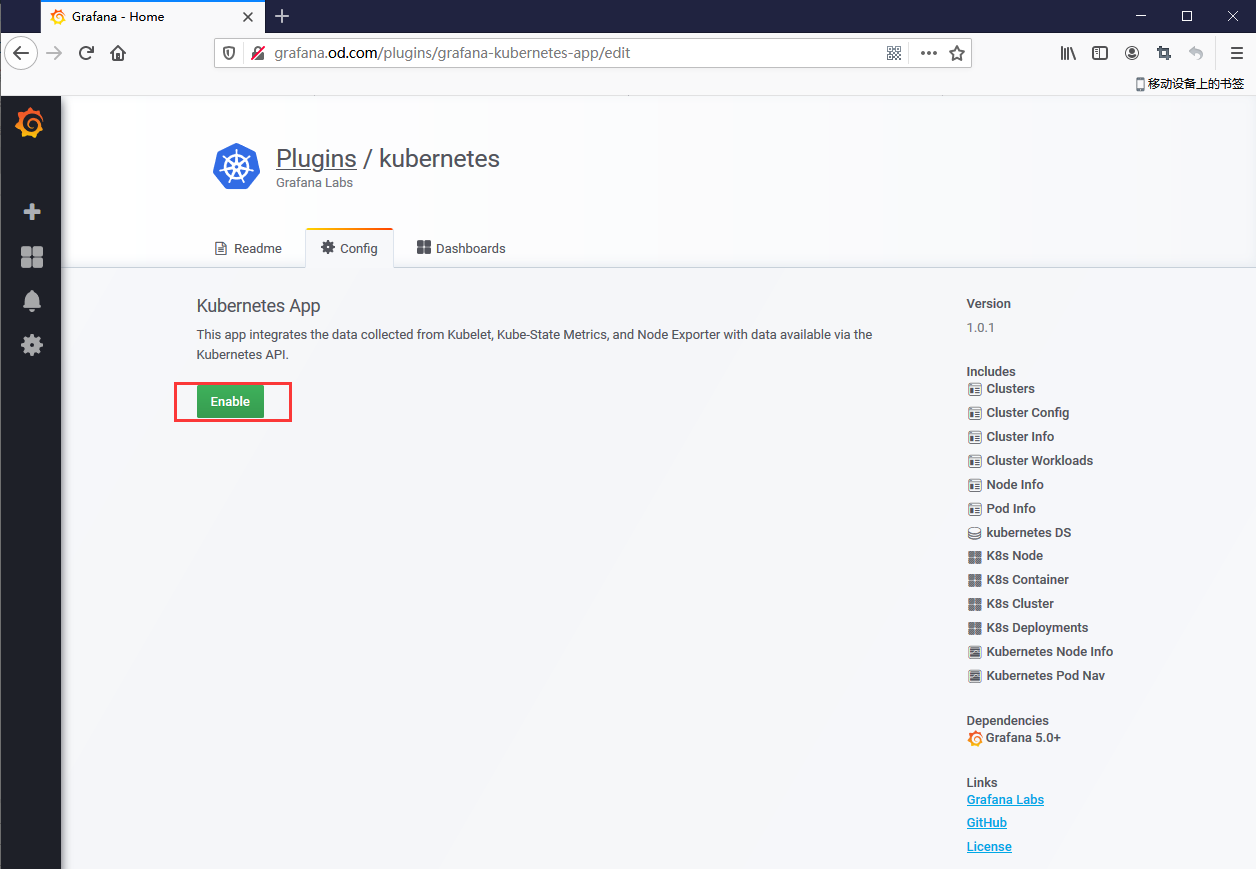

The kubernetes plugin is not enabled after installation, so open it from the plugin list and click Enable

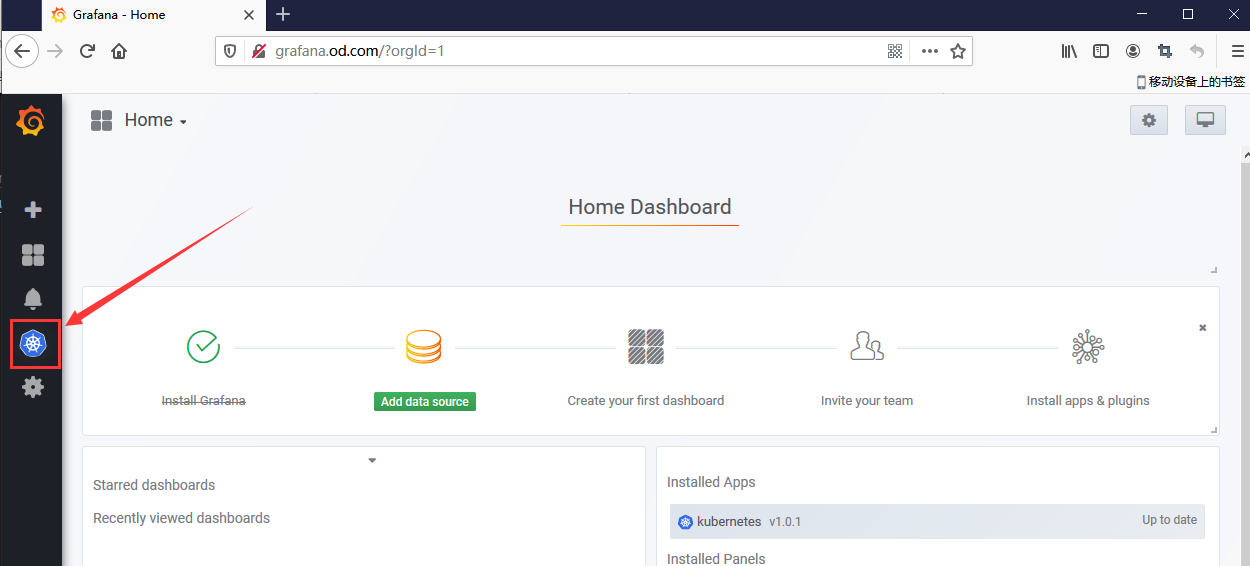

Once it is enabled, a Kubernetes icon appears in the left sidebar of the Home page

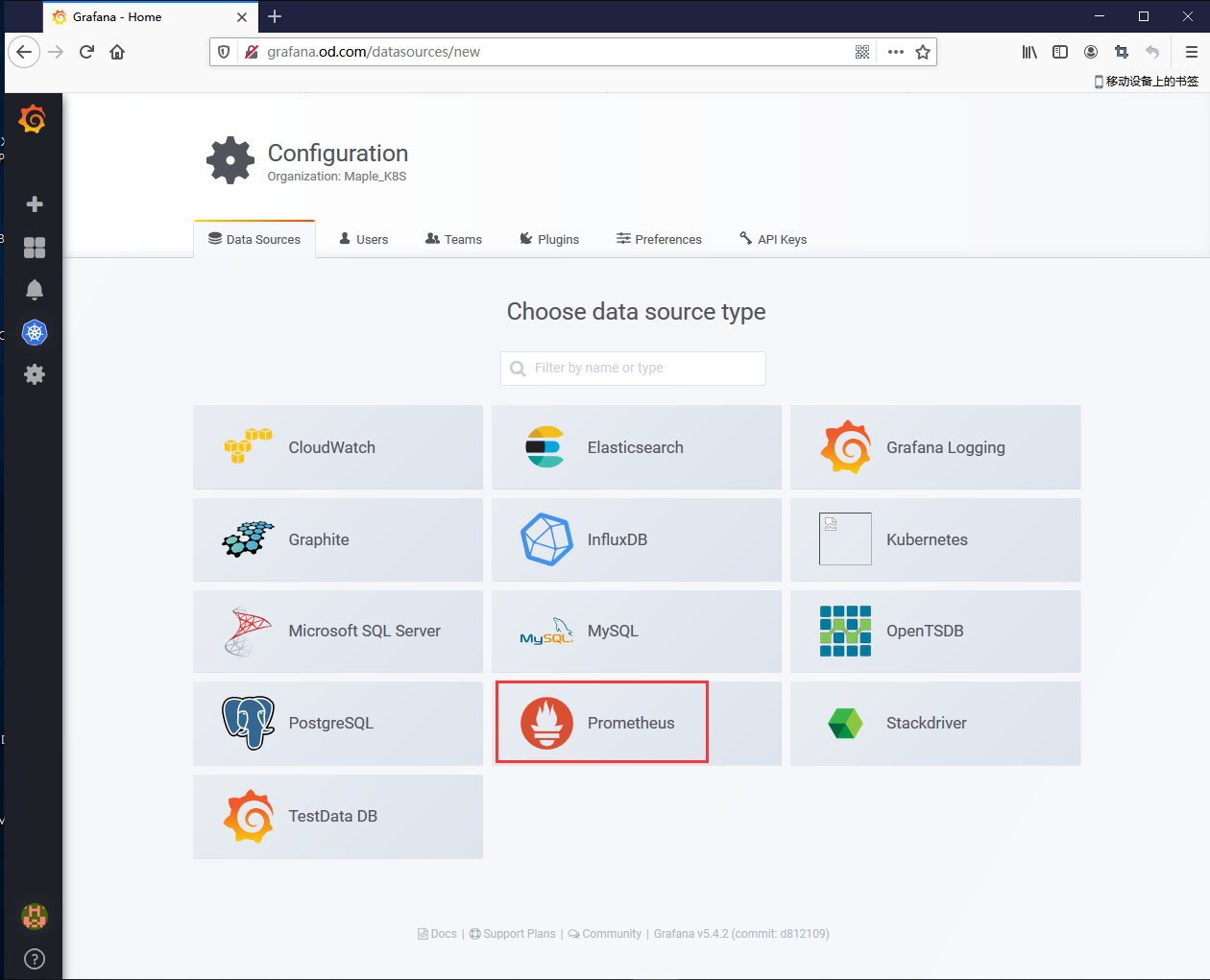

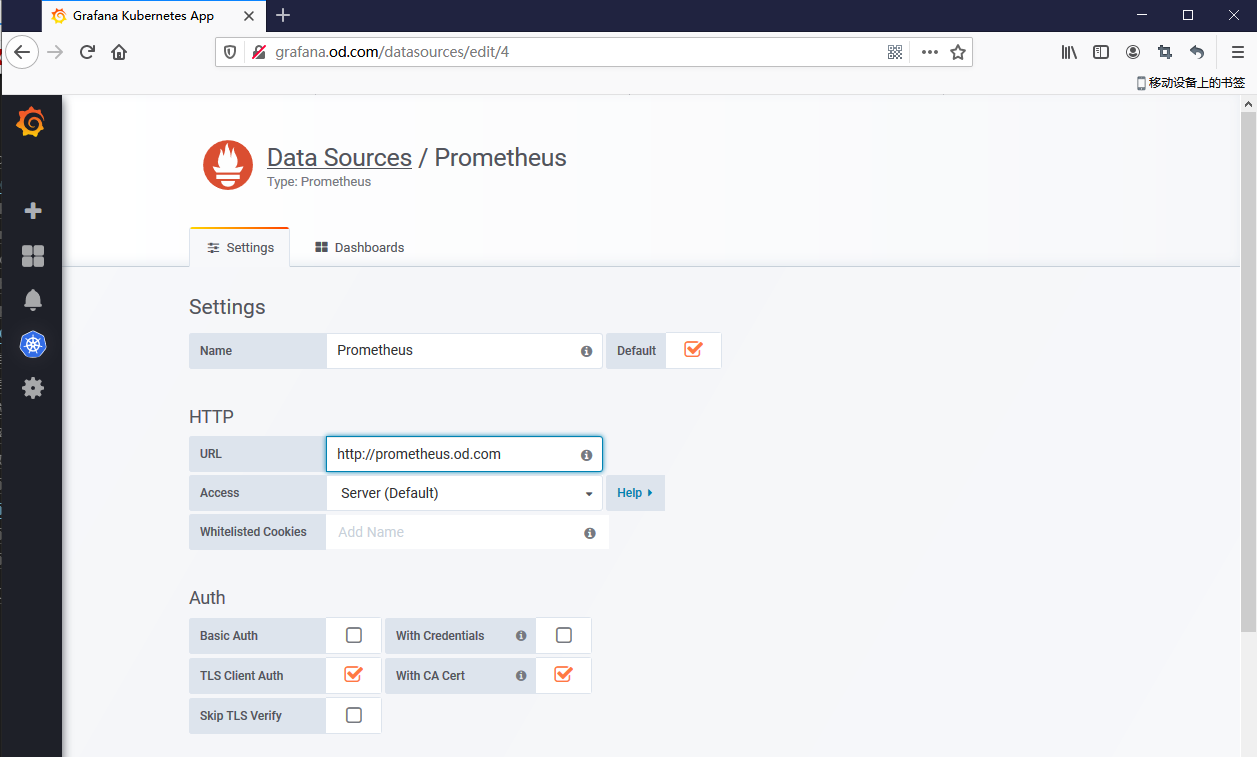

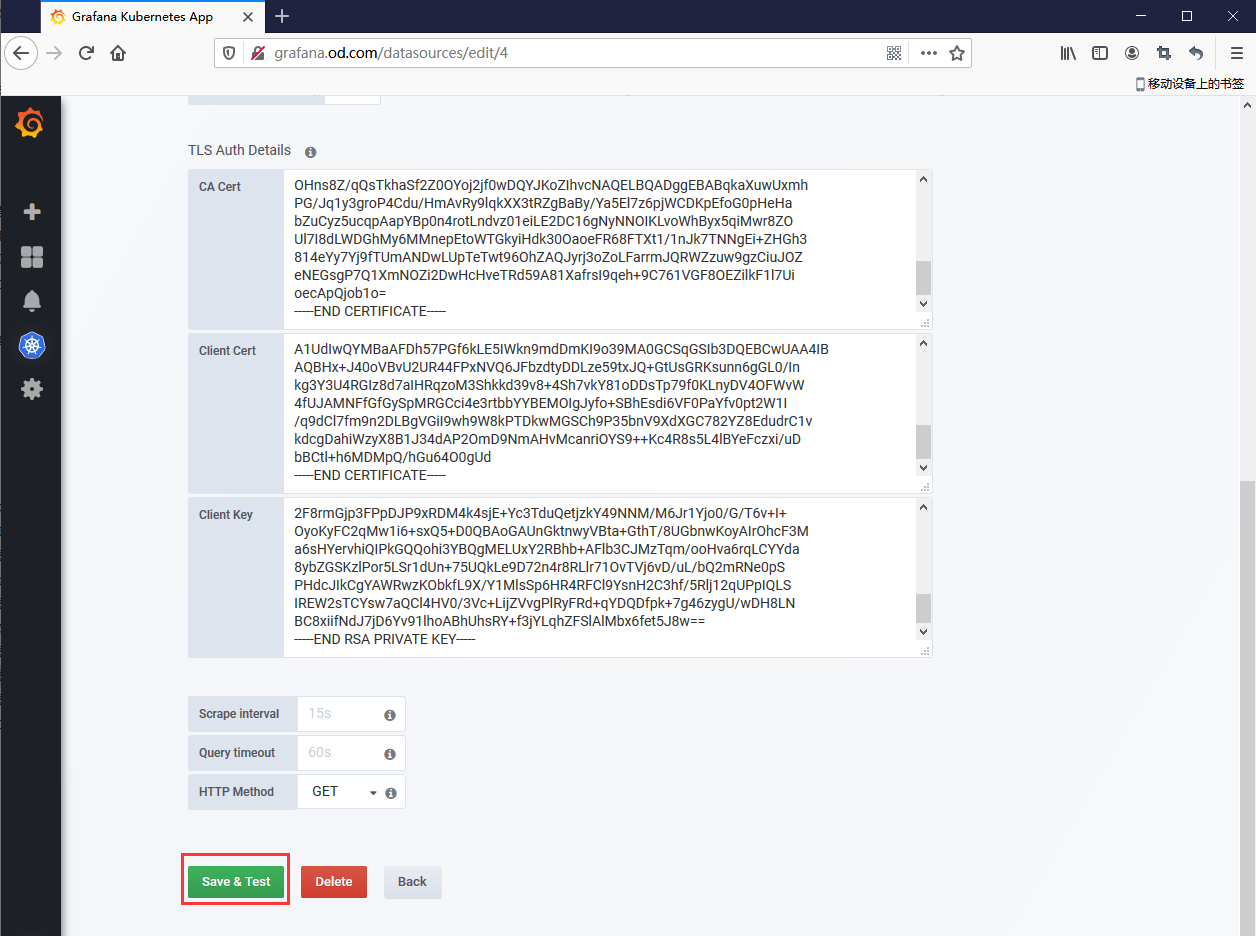

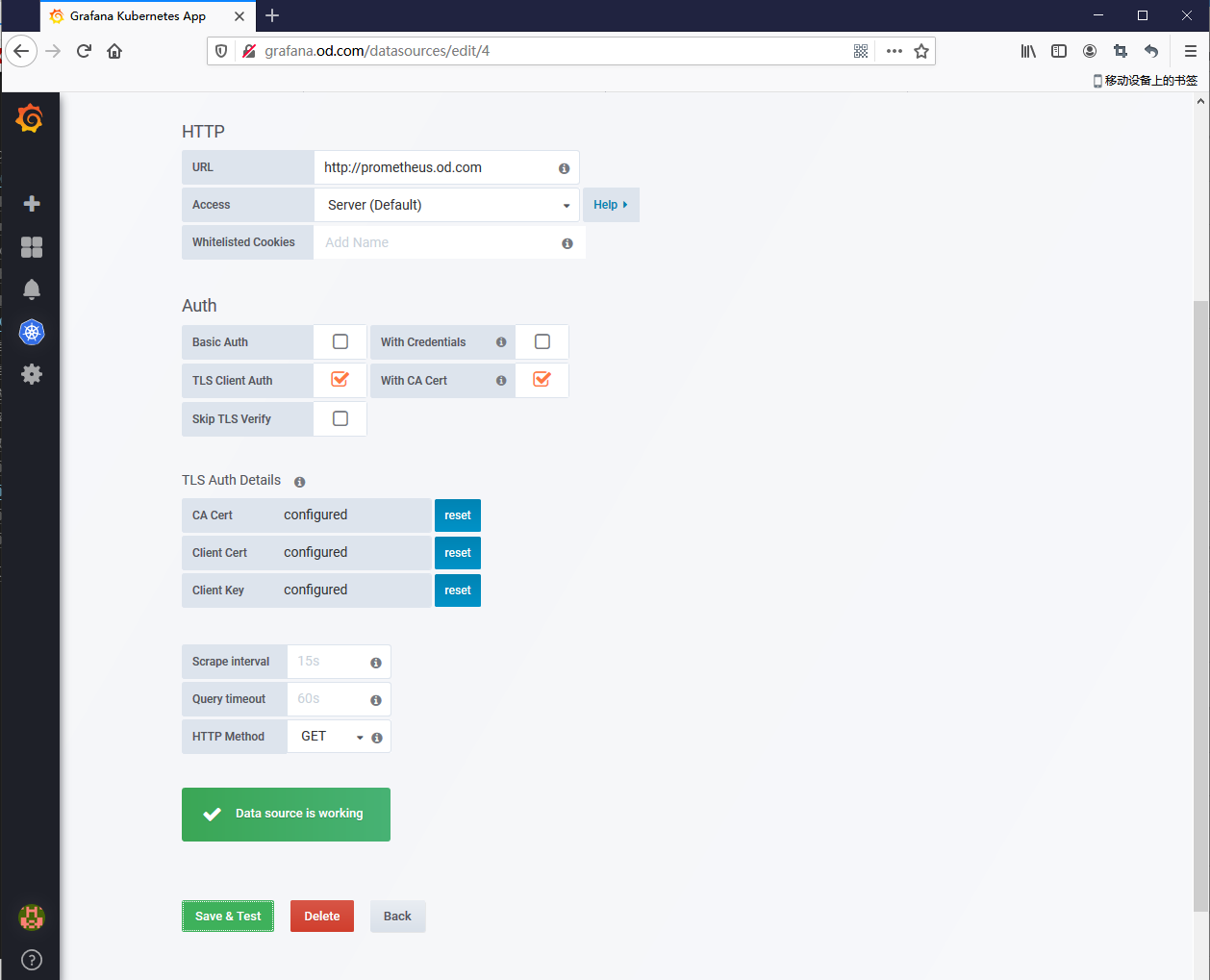

(10) Configure the data source

Click Configuration --> Data sources --> Add data source and choose Prometheus

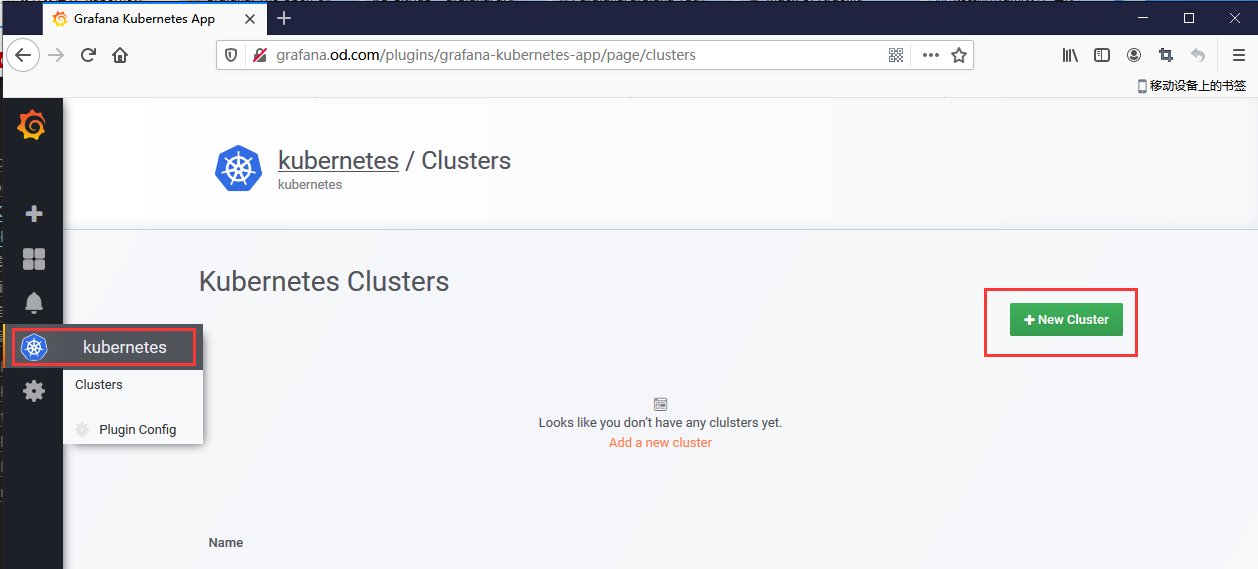

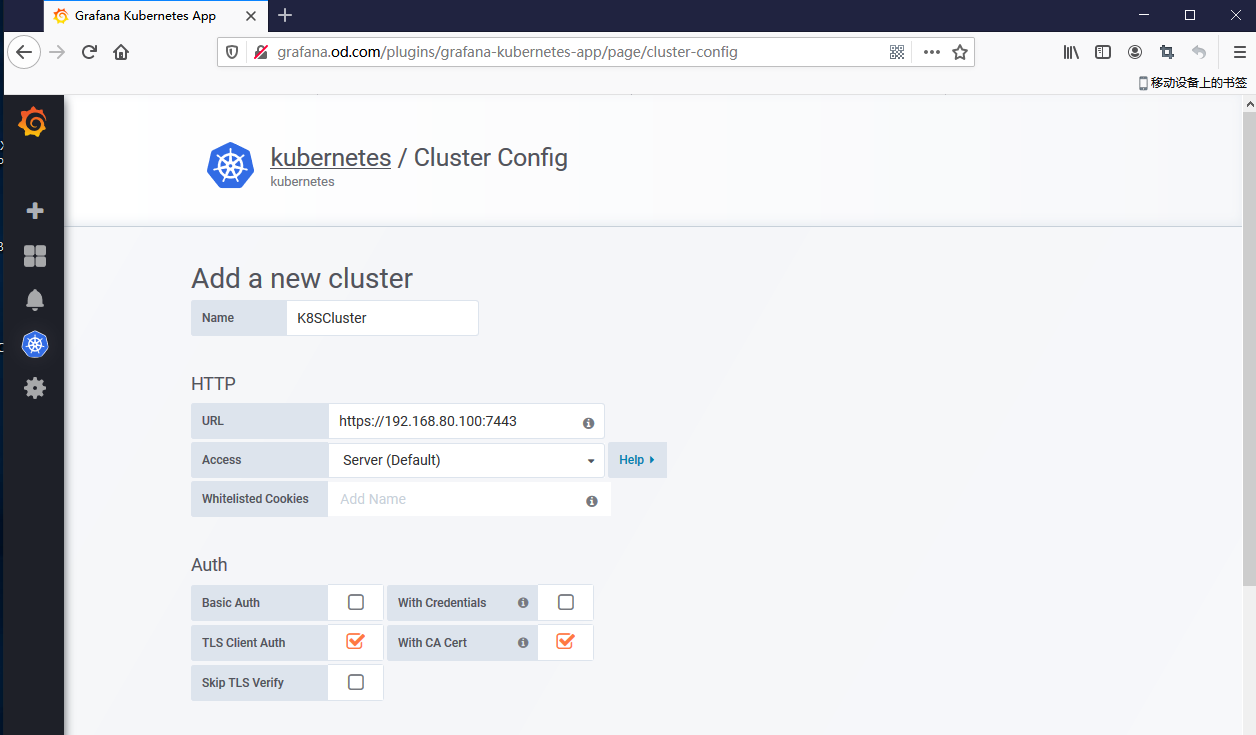

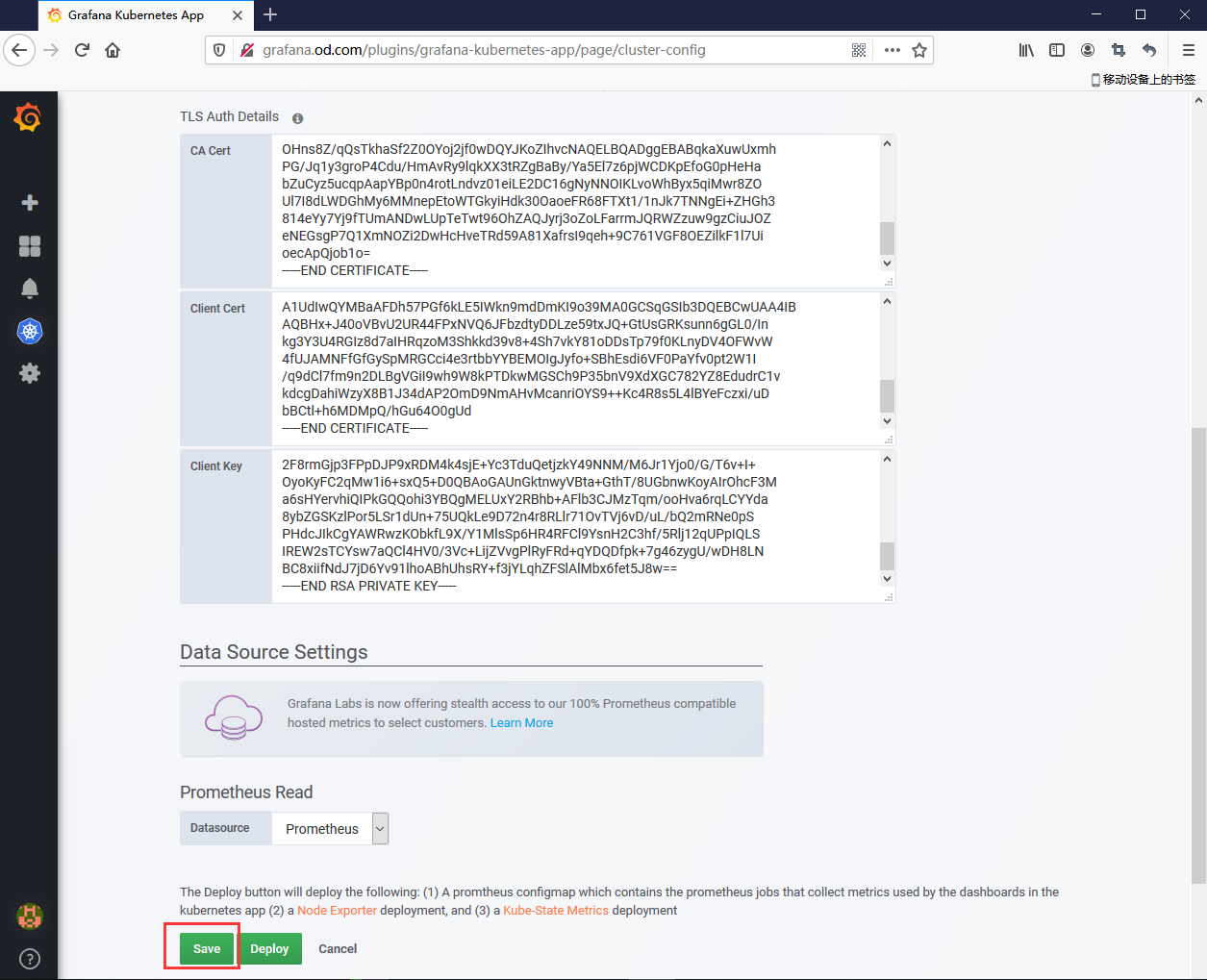

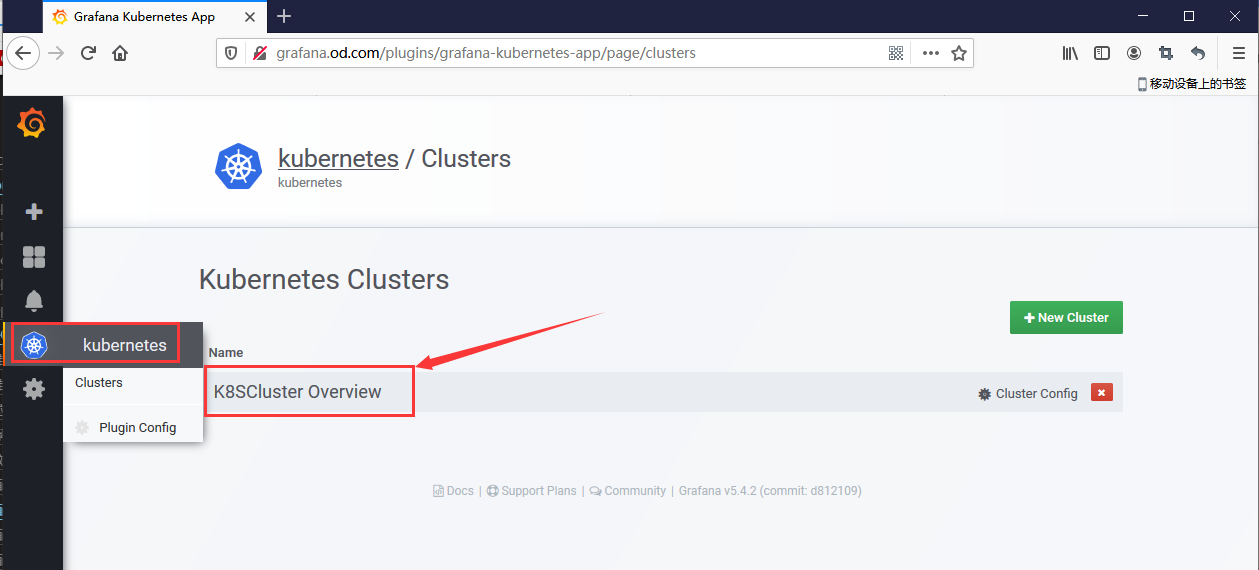

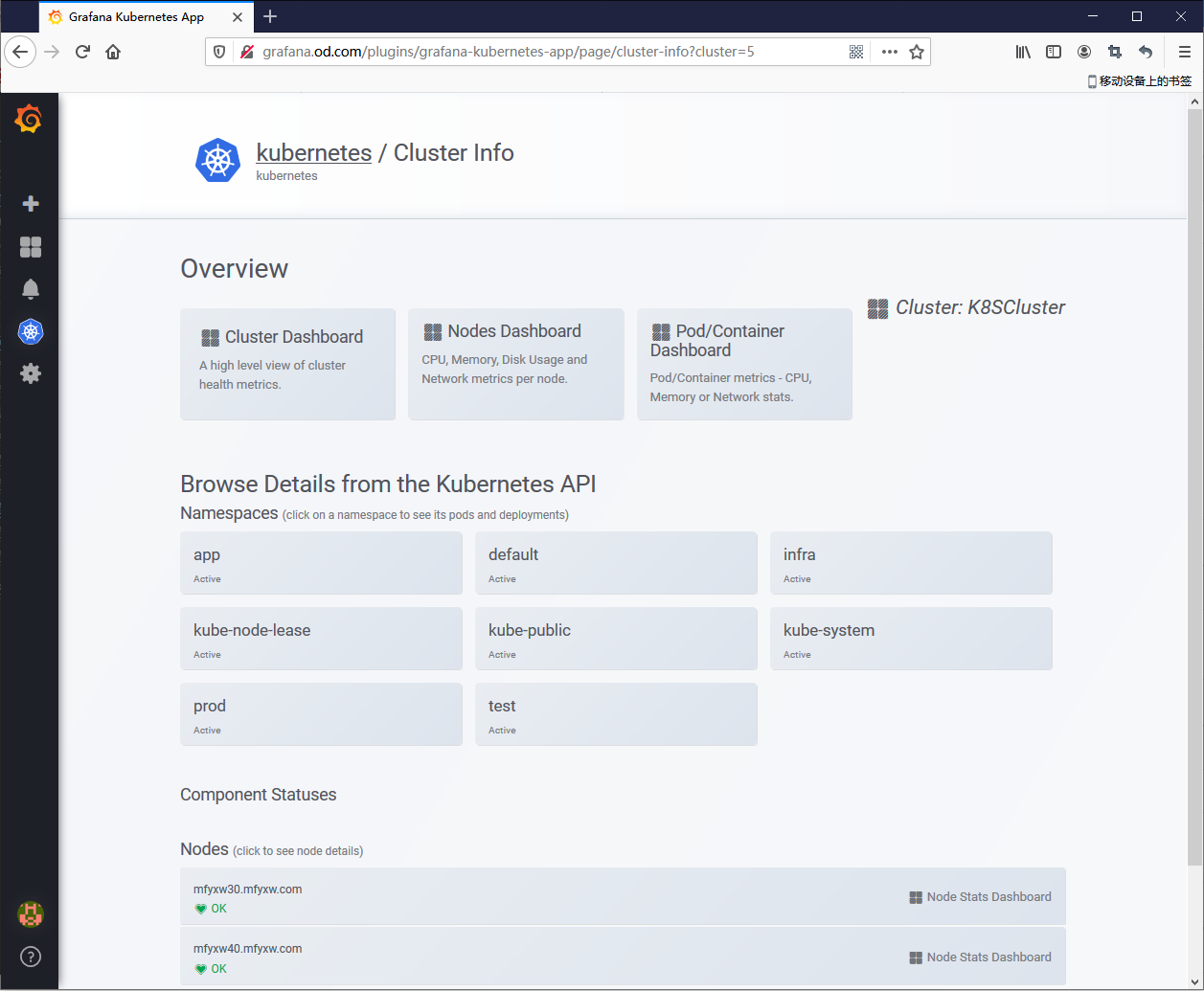

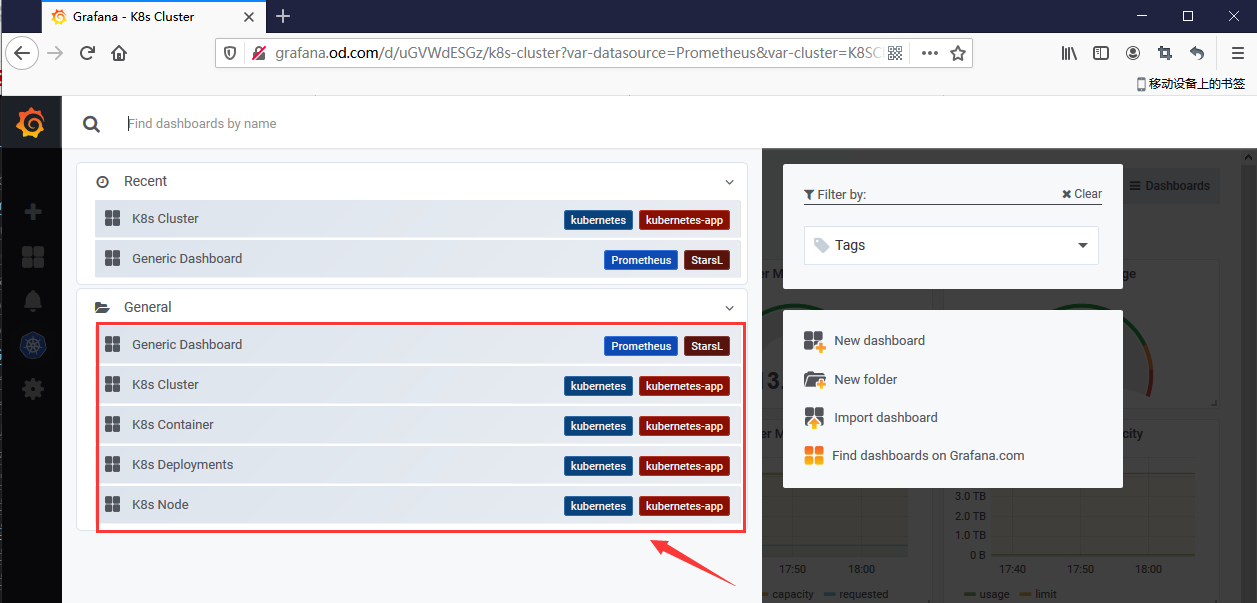

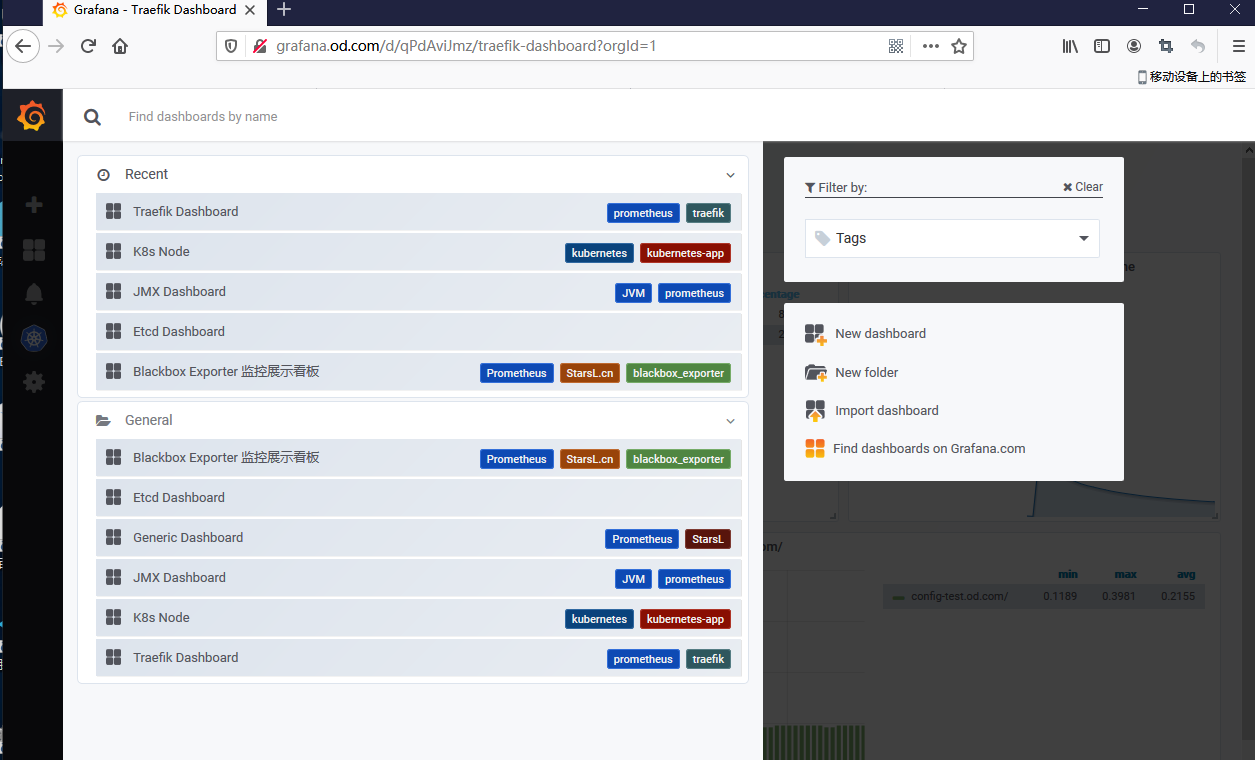

(11) Configure the Kubernetes cluster dashboard

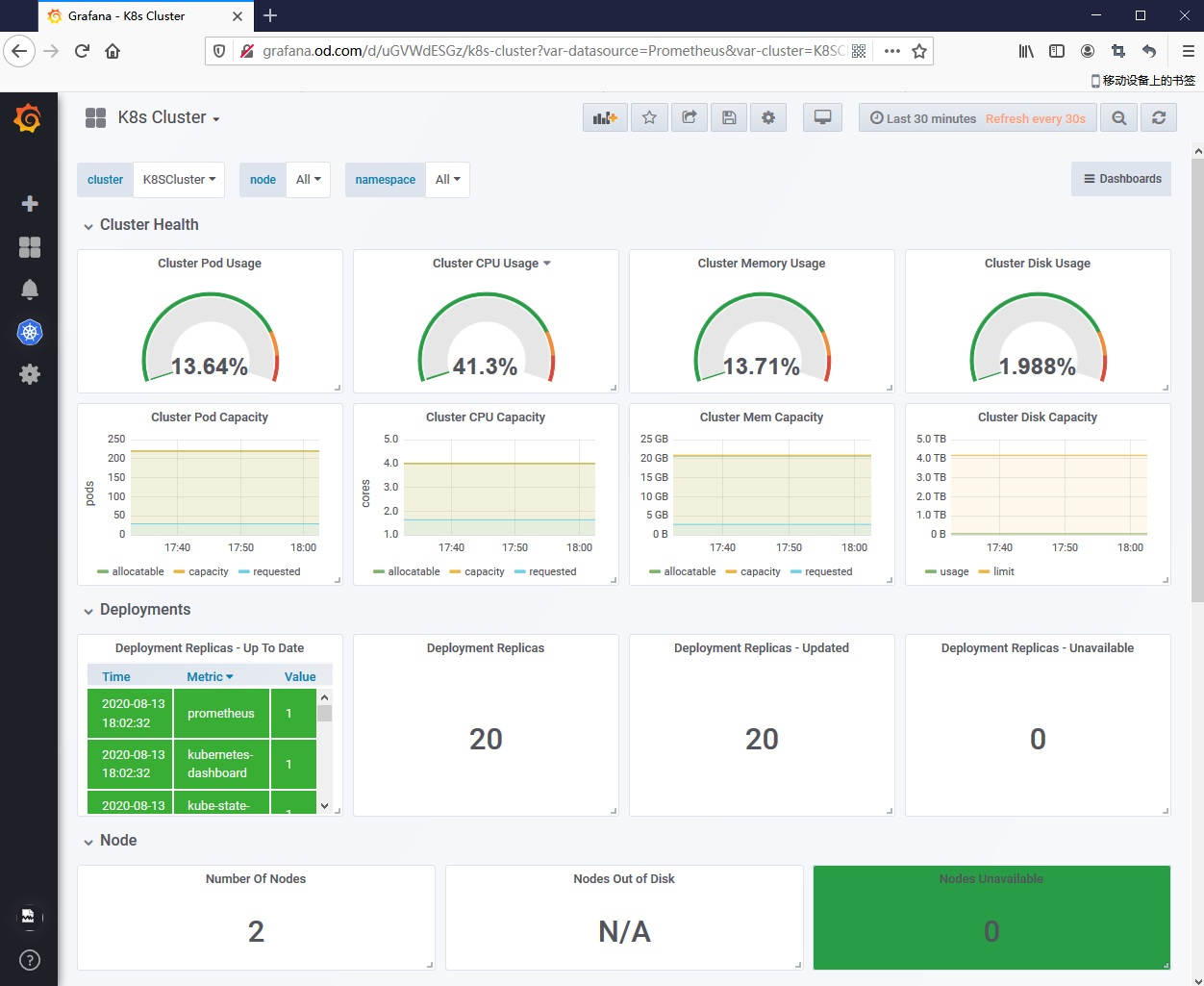

(12) View the Grafana dashboards

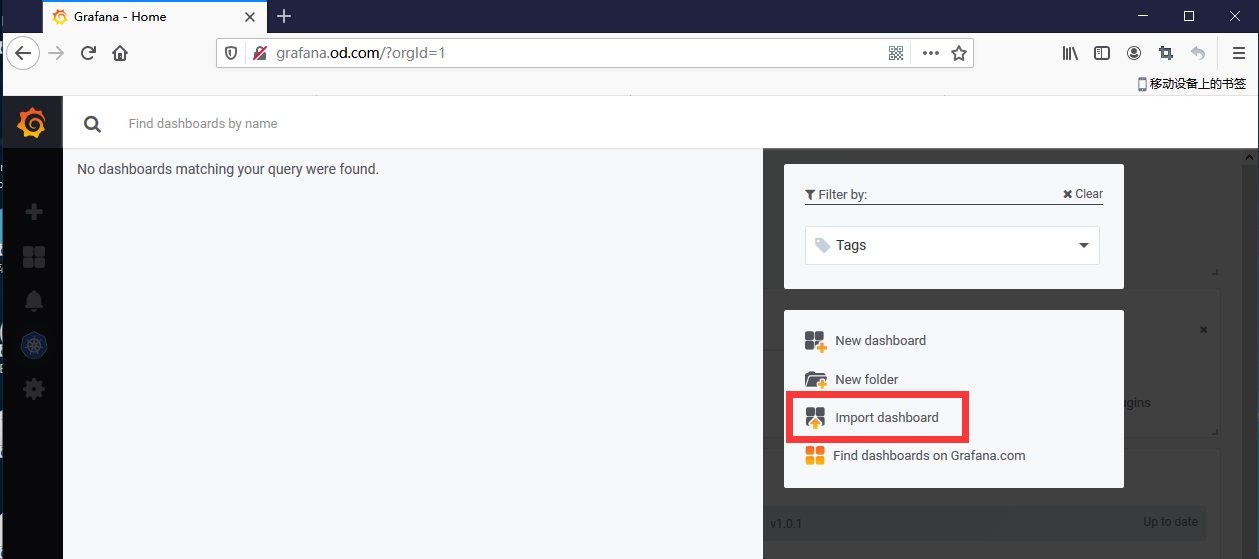

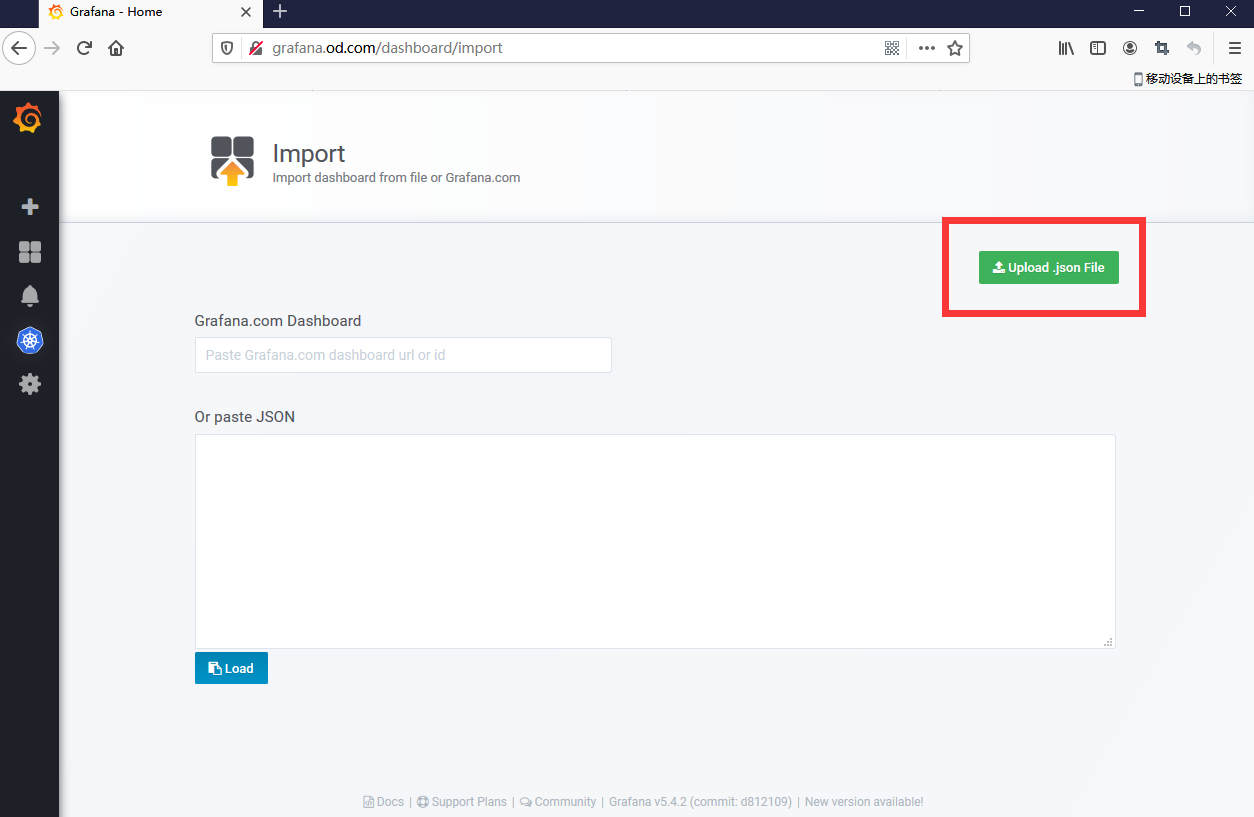

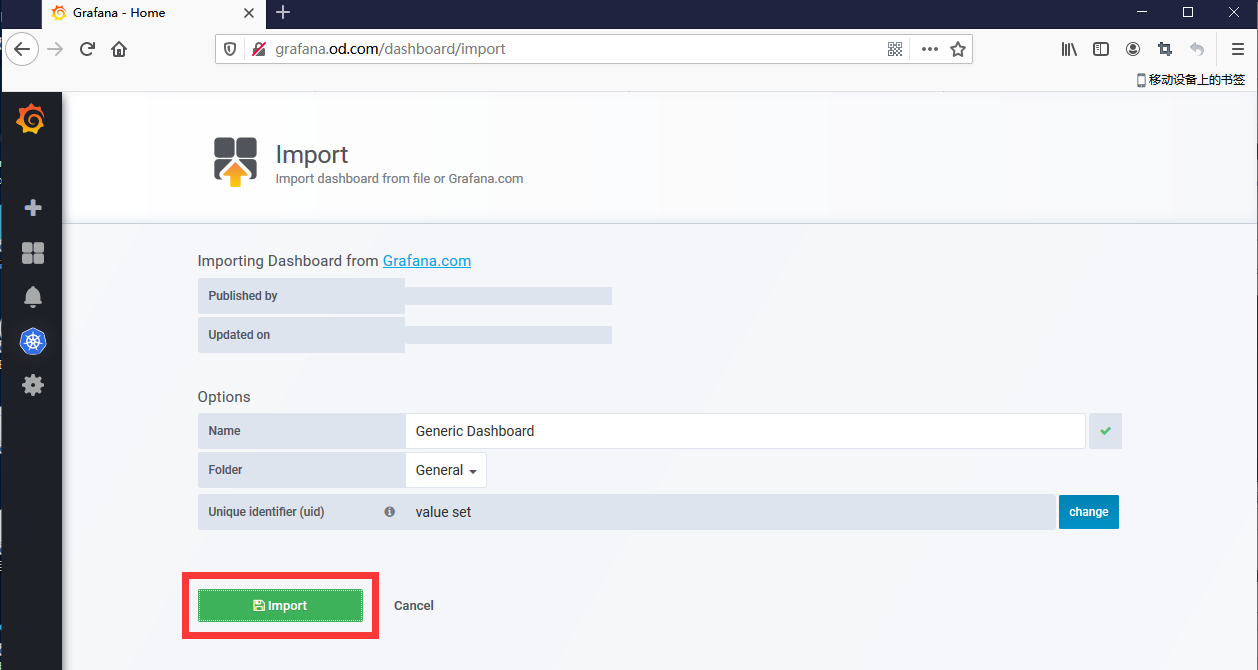

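(13) Delete the built-in dashboards and import the optimized ones

Delete the built-in dashboards

Import the optimized dashboards

The result after importing is shown in the figure below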

8. Install and deploy Alertmanager

Alertmanager Docker image repository: https://hub.docker.com/r/prom/alertmanager/

Run on the ops host (mfyxw50)

(1) Pull the alertmanager image

```
[root@mfyxw50 ~]# docker pull prom/alertmanager:v0.19.0
v0.19.0: Pulling from prom/alertmanager
8e674ad76dce: Already exists
e77d2419d1c2: Already exists
fc0b06cce5a2: Pull complete
1cc6eb76696f: Pull complete
c4b97307695d: Pull complete
d49e70084386: Pull complete
Digest: sha256:7dbf4949a317a056d11ed8f379826b04d0665fad5b9334e1d69b23e946056cd3
Status: Downloaded newer image for prom/alertmanager:v0.19.0
docker.io/prom/alertmanager:v0.19.0
```

(2) Tag the alertmanager image and push it to the private registry

```
[root@mfyxw50 ~]# docker images | grep alertmanager
prom/alertmanager   v0.19.0   30594e96cbe8   11 months ago   53.2MB
[root@mfyxw50 ~]#
[root@mfyxw50 ~]# docker tag prom/alertmanager:v0.19.0 harbor.od.com/infra/alertmanager:v0.19.0
[root@mfyxw50 ~]#
[root@mfyxw50 ~]# docker login harbor.od.com
Authenticating with existing credentials...
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
[root@mfyxw50 ~]#
[root@mfyxw50 ~]# docker push harbor.od.com/infra/alertmanager:v0.19.0
The push refers to repository [harbor.od.com/infra/alertmanager]
bb7386721ef9: Pushed
13b4609b0c95: Pushed
ba550e698377: Pushed
fa5b6d2332d5: Pushed
3163e6173fcc: Mounted from infra/prometheus
6194458b07fc: Mounted from infra/prometheus
v0.19.0: digest: sha256:8088fac0a74480912fbb76088247d0c4e934f1dd2bd199b52c40c1e9dba69917 size: 1575
```

(3) Create the alertmanager resource manifests

Create the directory for the manifests

```
[root@mfyxw50 ~]# mkdir -p /data/k8s-yaml/alertmanager
```

Create the alertmanager manifests

configmap.yaml:

```
[root@mfyxw50 ~]# cat > /data/k8s-yaml/alertmanager/configmap.yaml << EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: alertmanager-config
  namespace: infra
data:
  config.yml: |-
    global:
      # How long to wait before declaring an alert resolved once it is no longer reported
      resolve_timeout: 5m
      # Email sending settings
      smtp_smarthost: 'smtp.126.com:25'
      smtp_from: 'attachiny@126.com'
      smtp_auth_username: 'attachiny@126.com'
      smtp_auth_password: '<mailbox password or authorization code>'
      smtp_require_tls: false
    # Root route that every incoming alert enters; it defines how alerts are dispatched
    route:
      # Labels used to regroup incoming alerts. For example, many alerts carrying
      # cluster=A and alertname=LatencyHigh are batched into a single group
      group_by: ['alertname', 'cluster']
      # After a new group is created, wait at least group_wait before the first notification,
      # so several alerts for the same group can be collected and sent together
      group_wait: 30s
      # After the first notification, wait group_interval before notifying about new alerts in the group
      group_interval: 5m
      # After a notification has been sent successfully, wait repeat_interval before resending it
      repeat_interval: 5m
      # Default receiver: alerts that match no sub-route are sent here
      receiver: default
    receivers:
    - name: 'default'
      email_configs:
      - to: '371314999@qq.com'
        send_resolved: true
EOF
```

deployment.yaml:

```
[root@mfyxw50 ~]# cat > /data/k8s-yaml/alertmanager/deployment.yaml << EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: alertmanager
  namespace: infra
spec:
  replicas: 1
  selector:
    matchLabels:
      app: alertmanager
  template:
    metadata:
      labels:
        app: alertmanager
    spec:
      containers:
      - name: alertmanager
        image: harbor.od.com/infra/alertmanager:v0.19.0
        args:
        - "--config.file=/etc/alertmanager/config.yml"
        - "--storage.path=/alertmanager"
        ports:
        - name: alertmanager
          containerPort: 9093
        volumeMounts:
        - name: alertmanager-cm
          mountPath: /etc/alertmanager
      volumes:
      - name: alertmanager-cm
        configMap:
          name: alertmanager-config
      imagePullSecrets:
      - name: harbor
EOF
```

service.yaml:

```
[root@mfyxw50 ~]# cat > /data/k8s-yaml/alertmanager/service.yaml << EOF
apiVersion: v1
kind: Service
metadata:
  name: alertmanager
  namespace: infra
spec:
  selector:
    app: alertmanager
  ports:
  - port: 80
    targetPort: 9093
EOF
```

(4) Apply the alertmanager resource manifests

Run on either master node (mfyxw30 or mfyxw40)

```
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/alertmanager/configmap.yaml
configmap/alertmanager-config created
[root@mfyxw30 ~]#
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/alertmanager/deployment.yaml
deployment.extensions/alertmanager created
[root@mfyxw30 ~]#
[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/alertmanager/service.yaml
service/alertmanager created
```

(5) Check that the alertmanager pod is running

```
[root@mfyxw30 ~]# kubectl get pods -n infra | grep alertmanager
alertmanager-6754975dbf-csd49   1/1   Running   0   101s
```
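Before any rules exist, the email receiver can be checked end to end by posting a hand-made alert to Alertmanager's POST /api/v2/alerts endpoint (available in v0.19). This is a sketch under assumptions: ALERTMANAGER_URL and CURL are hypothetical override variables; the default `http://alertmanager.infra` only resolves from inside the cluster (for example from a pod), so from a node use the ClusterIP shown by `kubectl get svc alertmanager -n infra`. With group_wait at 30s, the email should arrive shortly after; because the alert carries no end time and send_resolved is true, a "resolved" email should follow some minutes later.

```shell
#!/usr/bin/env bash
# Send a manual test alert to Alertmanager (service port 80 -> container port 9093).
ALERTMANAGER_URL=${ALERTMANAGER_URL:-http://alertmanager.infra}   # assumption, see above
CURL=${CURL:-curl}                                                # CURL=echo for a dry run

# test_alert_payload NAME: minimal JSON body, an array with one alert and its labels.
test_alert_payload() {
    printf '[{"labels":{"alertname":"%s","severity":"warning"},"annotations":{"summary":"manual test alert"}}]\n' "$1"
}

send_test_alert() {
    $CURL -sS -X POST -H 'Content-Type: application/json' \
        -d "$(test_alert_payload "${1:-ManualTestAlert}")" \
        "$ALERTMANAGER_URL/api/v2/alerts"
}
```

For example, `ALERTMANAGER_URL=http://<ClusterIP> send_test_alert` from a compute node, then check the inbox configured under `email_configs`.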

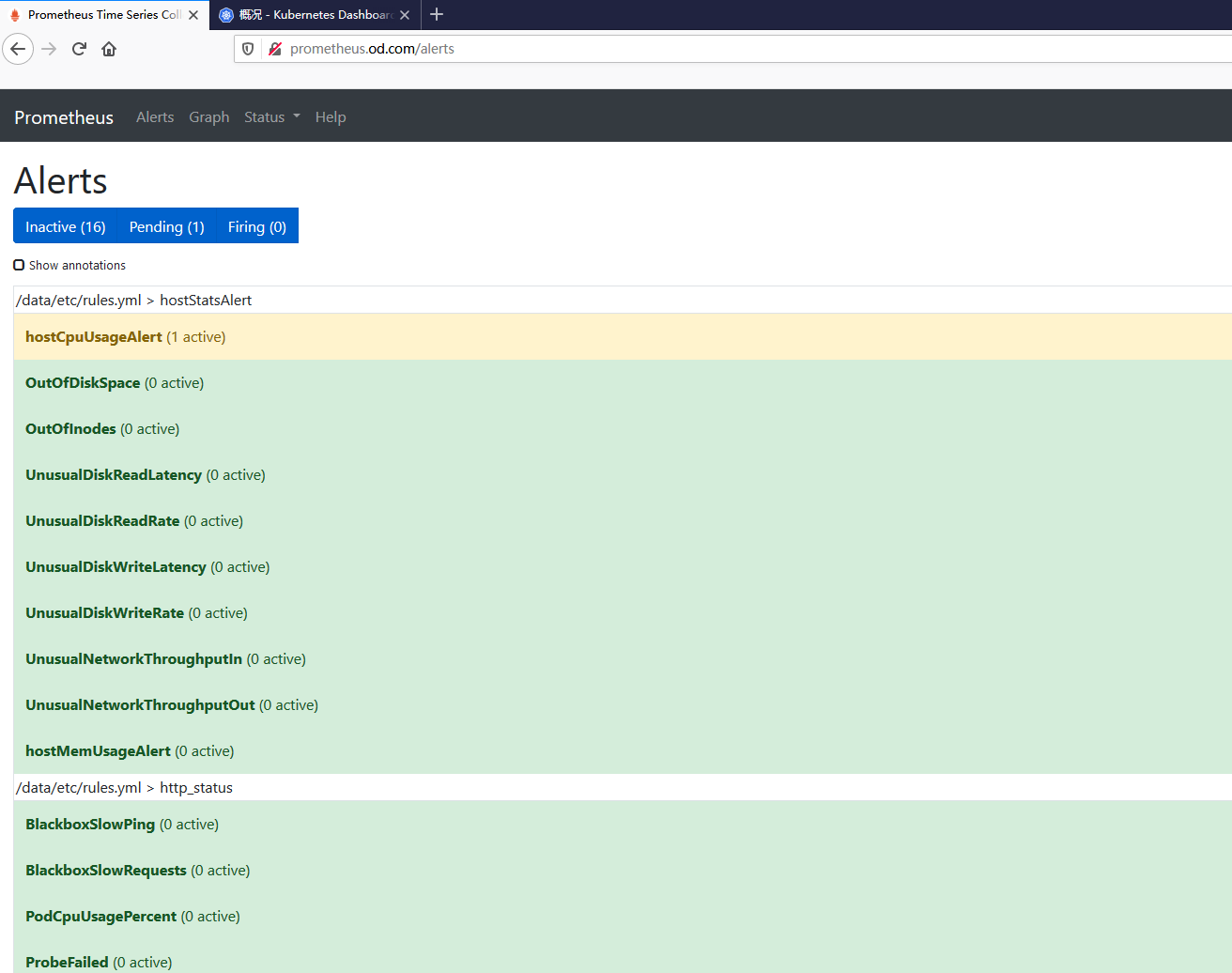

(6) Create the alerting rules

Prometheus evaluates these rules and sends firing alerts to Alertmanager. The heredoc delimiter is quoted so that `{{ $labels.instance }}`, `{{ $value }}` and the `$1` in PodCpuUsagePercent reach the file unchanged. The OutOfInodes rule compares inode counts (node_filesystem_files_free / node_filesystem_files) rather than free bytes.

```
[root@mfyxw50 ~]# cat > /data/nfs-volume/prometheus/etc/rules.yml << 'EOF'
groups:
- name: hostStatsAlert
  rules:
  - alert: hostCpuUsageAlert
    expr: sum(avg without (cpu)(irate(node_cpu{mode!='idle'}[5m]))) by (instance) > 0.85
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "{{ $labels.instance }} CPU usage above 85% (current value: {{ $value }}%)"
  - alert: hostMemUsageAlert
    expr: (node_memory_MemTotal - node_memory_MemAvailable)/node_memory_MemTotal > 0.85
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "{{ $labels.instance }} MEM usage above 85% (current value: {{ $value }}%)"
  - alert: OutOfInodes
    expr: node_filesystem_files_free{fstype="overlay",mountpoint ="/"} / node_filesystem_files{fstype="overlay",mountpoint ="/"} * 100 < 10
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Out of inodes (instance {{ $labels.instance }})"
      description: "Disk is almost running out of available inodes (< 10% left) (current value: {{ $value }})"
  - alert: OutOfDiskSpace
    expr: node_filesystem_free{fstype="overlay",mountpoint ="/rootfs"} / node_filesystem_size{fstype="overlay",mountpoint ="/rootfs"} * 100 < 10
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Out of disk space (instance {{ $labels.instance }})"
      description: "Disk is almost full (< 10% left) (current value: {{ $value }})"
  - alert: UnusualNetworkThroughputIn
    expr: sum by (instance) (irate(node_network_receive_bytes[2m])) / 1024 / 1024 > 100
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Unusual network throughput in (instance {{ $labels.instance }})"
      description: "Host network interfaces are probably receiving too much data (> 100 MB/s) (current value: {{ $value }})"
  - alert: UnusualNetworkThroughputOut
    expr: sum by (instance) (irate(node_network_transmit_bytes[2m])) / 1024 / 1024 > 100
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Unusual network throughput out (instance {{ $labels.instance }})"
      description: "Host network interfaces are probably sending too much data (> 100 MB/s) (current value: {{ $value }})"
  - alert: UnusualDiskReadRate
    expr: sum by (instance) (irate(node_disk_bytes_read[2m])) / 1024 / 1024 > 50
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Unusual disk read rate (instance {{ $labels.instance }})"
      description: "Disk is probably reading too much data (> 50 MB/s) (current value: {{ $value }})"
  - alert: UnusualDiskWriteRate
    expr: sum by (instance) (irate(node_disk_bytes_written[2m])) / 1024 / 1024 > 50
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Unusual disk write rate (instance {{ $labels.instance }})"
      description: "Disk is probably writing too much data (> 50 MB/s) (current value: {{ $value }})"
  - alert: UnusualDiskReadLatency
    expr: rate(node_disk_read_time_ms[1m]) / rate(node_disk_reads_completed[1m]) > 100
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Unusual disk read latency (instance {{ $labels.instance }})"
      description: "Disk latency is growing (read operations > 100ms) (current value: {{ $value }})"
  - alert: UnusualDiskWriteLatency
    expr: rate(node_disk_write_time_ms[1m]) / rate(node_disk_writes_completed[1m]) > 100
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Unusual disk write latency (instance {{ $labels.instance }})"
      description: "Disk latency is growing (write operations > 100ms) (current value: {{ $value }})"
- name: http_status
  rules:
  - alert: ProbeFailed
    expr: probe_success == 0
    for: 1m
    labels:
      severity: error
    annotations:
      summary: "Probe failed (instance {{ $labels.instance }})"
      description: "Probe failed (current value: {{ $value }})"
  - alert: StatusCode
    expr: probe_http_status_code <= 199 OR probe_http_status_code >= 400
    for: 1m
    labels:
      severity: error
    annotations:
      summary: "Status Code (instance {{ $labels.instance }})"
      description: "HTTP status code is not 200-399 (current value: {{ $value }})"
  - alert: SslCertificateWillExpireSoon
    expr: probe_ssl_earliest_cert_expiry - time() < 86400 * 30
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "SSL certificate will expire soon (instance {{ $labels.instance }})"
      description: "SSL certificate expires within 30 days (current value: {{ $value }})"
  - alert: SslCertificateHasExpired
    expr: probe_ssl_earliest_cert_expiry - time() <= 0
    for: 5m
    labels:
      severity: error
    annotations:
      summary: "SSL certificate has expired (instance {{ $labels.instance }})"
      description: "SSL certificate has expired already (current value: {{ $value }})"
  - alert: BlackboxSlowPing
    expr: probe_icmp_duration_seconds > 2
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Blackbox slow ping (instance {{ $labels.instance }})"
      description: "Blackbox ping took more than 2s (current value: {{ $value }})"
  - alert: BlackboxSlowRequests
    expr: probe_http_duration_seconds > 2
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Blackbox slow requests (instance {{ $labels.instance }})"
      description: "Blackbox request took more than 2s (current value: {{ $value }})"
  - alert: PodCpuUsagePercent
    expr: sum(sum(label_replace(irate(container_cpu_usage_seconds_total[1m]),"pod","$1","container_label_io_kubernetes_pod_name", "(.*)"))by(pod) / on(pod) group_right kube_pod_container_resource_limits_cpu_cores *100 )by(container,namespace,node,pod,severity) > 80
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Pod cpu usage percent has exceeded 80% (current value: {{ $value }}%)"
EOF
```
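Some thresholds in these rules are easier to check as plain arithmetic: `86400 * 30` is the 30-day window of SslCertificateWillExpireSoon in seconds, and `/ 1024 / 1024 > 100` in the network rules means more than 100 MiB/s. The bash sketch below computes both and reports which SSL rule expressions hold for a given certificate expiry time. It ignores the `for: 5m` delay, and the epoch values are made up.

```shell
#!/usr/bin/env bash
ssl_window=$(( 86400 * 30 ))         # SslCertificateWillExpireSoon window, in seconds
net_limit=$(( 100 * 1024 * 1024 ))   # bytes/s behind "/ 1024 / 1024 > 100"
echo "SSL warning window: $ssl_window s"
echo "network threshold:  $net_limit bytes/s"

# ssl_alerts EXPIRY_EPOCH NOW_EPOCH: names of the SSL rules whose expression
# (probe_ssl_earliest_cert_expiry - time() compared with a limit) is true.
ssl_alerts() {
    local remaining=$(( $1 - $2 ))
    local fired=()
    if (( remaining < ssl_window )); then fired+=(SslCertificateWillExpireSoon); fi
    if (( remaining <= 0 )); then fired+=(SslCertificateHasExpired); fi
    echo "${fired[*]:-none}"
}

ssl_alerts 1600000000 1599000000   # about 11.6 days left
ssl_alerts 1600000000 1601000000   # already expired
```

The expired case reports both names: a certificate that has already expired also satisfies the "less than 30 days left" expression, so both alerts fire together. Where promtool is available (it ships with Prometheus), `promtool check rules /data/nfs-volume/prometheus/etc/rules.yml` catches syntax errors before Prometheus loads the file.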

(7) Add the following to prometheus.yml

```yaml
alerting:
  alertmanagers:
    - static_configs:
        - targets: ["alertmanager"]
rule_files:
  - "/data/etc/rules.yml"
```
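The target "alertmanager" carries no port, so Prometheus falls back to port 80 for plain HTTP, which matches the Service's port 80 (forwarding to 9093); the short name also only resolves when Prometheus runs in the same namespace as the Service (infra). Once Prometheus has loaded the updated configuration, its HTTP API shows whether both pieces took effect. A sketch, assuming a Prometheus 2.x release that provides these endpoints; PROM_URL and CURL are hypothetical override variables (CURL=echo for a dry run):

```shell
#!/usr/bin/env bash
# Ask Prometheus which Alertmanagers it discovered and which rule groups it loaded.
PROM_URL=${PROM_URL:-http://prometheus.od.com}
CURL=${CURL:-curl}

check_alerting() {
    $CURL -s "$PROM_URL/api/v1/alertmanagers" &&
        $CURL -s "$PROM_URL/api/v1/rules"
}
```

In the first response the alertmanager pod should appear under `activeAlertmanagers`; the second should list the hostStatsAlert and http_status groups.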

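The modified prometheus.yml is as follows (the heredoc delimiter is quoted so that `$1`, `$2` and `${1}` in the relabel rules are written literally):

[root@mfyxw50 ~]# cat > /data/nfs-volume/prometheus/etc/prometheus.yml << 'EOF'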

global:

scrape_interval: 15s

evaluation_interval: 15s

scrape_configs:

- job_name: 'etcd'

tls_config:

ca_file: /data/etc/ca.pem

cert_file: /data/etc/client.pem

key_file: /data/etc/client-key.pem

scheme: https

static_configs:

- targets:

- '192.168.80.20:2379'

- '192.168.80.30:2379'

- '192.168.80.40:2379'

- job_name: 'kubernetes-apiservers'

kubernetes_sd_configs:

- role: endpoints

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

action: keep

regex: default;kubernetes;https

- job_name: 'kubernetes-pods'

kubernetes_sd_configs:

- role: pod

relabel_configs:

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]

action: keep

regex: true

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

- source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]

action: replace

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

target_label: __address__

- action: labelmap

regex: __meta_kubernetes_pod_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_pod_name]

action: replace

target_label: kubernetes_pod_name

- job_name: 'kubernetes-kubelet'

kubernetes_sd_configs:

- role: node

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- source_labels: [__meta_kubernetes_node_name]

regex: (.+)

target_label: __address__

replacement: ${1}:10255

- job_name: 'kubernetes-cadvisor'

kubernetes_sd_configs:

- role: node

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- source_labels: [__meta_kubernetes_node_name]

regex: (.+)

target_label: __address__

replacement: ${1}:4194

- job_name: 'kubernetes-kube-state'

kubernetes_sd_configs:

- role: pod

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_pod_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_pod_name]

action: replace

target_label: kubernetes_pod_name

- source_labels: [__meta_kubernetes_pod_label_grafanak8sapp]

regex: .*true.*

action: keep

- source_labels: ['__meta_kubernetes_pod_label_daemon', '__meta_kubernetes_pod_node_name']

regex: 'node-exporter;(.*)'

action: replace

target_label: nodename

- job_name: 'blackbox_http_pod_probe'

metrics_path: /probe

kubernetes_sd_configs:

- role: pod

params:

module: [http_2xx]

relabel_configs:

- source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]

action: keep

regex: http

- source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port, __meta_kubernetes_pod_annotation_blackbox_path]

action: replace

regex: ([^:]+)(?::d+)?;(d+);(.+)

replacement: $1:$2$3

target_label: __param_target

- action: replace

target_label: __address__

replacement: blackbox-exporter.kube-system:9115

- source_labels: [__param_target]

target_label: instance

- action: labelmap

regex: __meta_kubernetes_pod_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_pod_name]

action: replace

target_label: kubernetes_pod_name

- job_name: 'blackbox_tcp_pod_probe'

metrics_path: /probe

kubernetes_sd_configs:

- role: pod

params:

module: [tcp_connect]

relabel_configs:

- source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]

action: keep

regex: tcp

- source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port]

action: replace

regex: ([^:]+)(?::d+)?;(d+)

replacement: $1:$2

target_label: __param_target

- action: replace

target_label: __address__

replacement: blackbox-exporter.kube-system:9115

- source_labels: [__param_target]

target_label: instance

- action: labelmap

regex: __meta_kubernetes_pod_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_pod_name]

action: replace

target_label: kubernetes_pod_name

- job_name: 'traefik'

kubernetes_sd_configs:

- role: pod

relabel_configs:

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scheme]

action: keep

regex: traefik

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

- source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]

action: replace

regex: ([^:]+)(?::d+)?;(d+)

replacement: $1:$2

target_label: __address__

- action: labelmap

regex: __meta_kubernetes_pod_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_pod_name]

action: replace

target_label: kubernetes_pod_name

alerting:

alertmanagers:

- static_configs:

- targets: ["alertmanager"]

rule_files:

- "/data/etc/rules.yml"

EOF
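The `__address__`, `__param_target` and `nodename` rules in this file are the least obvious part. For each rule, Prometheus joins the `source_labels` values with `;` (the default separator), matches the result against `regex` as a fully anchored RE2 expression, and writes the expanded `replacement` (default `$1`) into `target_label`. If the regex does not match, nothing is replaced. The bash sketch below mimics three of these rules purely for illustration. The function names and sample IPs/ports are hypothetical. Because bash `[[ =~ ]]` uses POSIX ERE (no `(?:...)`, no `\d`), the patterns are hand-translated equivalents, not the exact strings Prometheus evaluates.

```shell
#!/usr/bin/env bash
# Illustration only: mimics Prometheus "replace" relabeling for three rules in
# prometheus.yml. Function names are hypothetical. The ERE patterns are
# hand-translated from the RE2 originals (bash =~ has no (?:...) or \d), so the
# port is capture group 3 here instead of $2.

# kubernetes-pods / traefik (blackbox_tcp_pod_probe uses the same pattern,
# but writes into __param_target):
#   source_labels: [__address__, <prometheus_io_port annotation>]
#   regex: ([^:]+)(?::\d+)?;(\d+)   replacement: $1:$2
relabel_port() {
  local re='^([^:]+)(:[0-9]+)?;([0-9]+)$'
  local joined="$1;$2"            # values joined with the default ";" separator
  if [[ $joined =~ $re ]]; then
    echo "${BASH_REMATCH[1]}:${BASH_REMATCH[3]}"   # pod IP + annotated port
  else
    echo "$1"                     # no match: __address__ is left unchanged
  fi
}

# blackbox_http_pod_probe:
#   source_labels: [__address__, blackbox_port annotation, blackbox_path annotation]
#   regex: ([^:]+)(?::\d+)?;(\d+);(.+)   replacement: $1:$2$3
relabel_http_target() {
  local re='^([^:]+)(:[0-9]+)?;([0-9]+);(.+)$'
  local joined="$1;$2;$3"
  if [[ $joined =~ $re ]]; then
    echo "${BASH_REMATCH[1]}:${BASH_REMATCH[3]}${BASH_REMATCH[4]}"
  fi                              # no match: this rule sets no __param_target
}

# kubernetes-kube-state:
#   source_labels: [__meta_kubernetes_pod_label_daemon, __meta_kubernetes_pod_node_name]
#   regex: node-exporter;(.*)   target_label: nodename (default replacement $1)
relabel_nodename() {
  local re='^node-exporter;(.*)$'
  local joined="$1;$2"
  if [[ $joined =~ $re ]]; then
    echo "${BASH_REMATCH[1]}"     # node name, only for node-exporter pods
  fi
}
```

For example, `relabel_port 10.10.30.11:20880 12346` prints `10.10.30.11:12346`: the container port discovered by Kubernetes is swapped for the port named in the annotation. `relabel_http_target 10.10.30.11:8080 8080 /hello` prints `10.10.30.11:8080/hello`, which becomes the `target` parameter sent to blackbox-exporter.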

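(8) Reload Prometheus gracefully

Look up which node runs the Prometheus pod (mfyxw30.mfyxw.com here), then send SIGHUP to the Prometheus process on that node. On SIGHUP, Prometheus re-reads its configuration file without restarting.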

[root@mfyxw30 ~]# kubectl get pod -n infra -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

alertmanager-6754975dbf-csd49 1/1 Running 0 12m 10.10.40.13 mfyxw40.mfyxw.com <none> <none>

apollo-portal-57bc86966d-khxvw 1/1 Running 7 34d 10.10.30.12 mfyxw30.mfyxw.com <none> <none>

dubbo-monitor-6676dd74cc-tcx6w 1/1 Running 9 31d 10.10.30.11 mfyxw30.mfyxw.com <none> <none>

grafana-5b64c9d6d-k4dqm 1/1 Running 1 21h 10.10.40.10 mfyxw40.mfyxw.com <none> <none>

jenkins-b99776c69-49dnr 1/1 Running 10 37d 10.10.40.9 mfyxw40.mfyxw.com <none> <none>

prometheus-7d466f8c64-d9g99 1/1 Running 2 29d 10.10.30.16 mfyxw30.mfyxw.com <none> <none>

[root@mfyxw30 ~]#
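Before signalling Prometheus, it can be worth validating the new file. A configuration that fails to load on reload is rejected, and Prometheus keeps running with the previous one. The sketch below assumes that `promtool` is on the container's PATH (the official prom/prometheus image ships it as /bin/promtool) and that the file is visible in the pod at /data/etc/prometheus.yml, the path passed to `--config.file`. The function names are hypothetical, and `DRY_RUN=1` only prints the command.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: validate prometheus.yml inside the running pod.
# Assumes promtool is on the container's PATH and the config is at
# /data/etc/prometheus.yml (the path given to --config.file).
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"        # dry run: print the command instead of executing it
  else
    "$@"
  fi
}

check_prom_config() {
  local pod=$1       # the prometheus-... pod from `kubectl get pod -n infra`
  run kubectl -n infra exec "$pod" -- promtool check config /data/etc/prometheus.yml
}
```

Usage: `check_prom_config prometheus-7d466f8c64-d9g99`. promtool also checks the rule files listed under `rule_files`, so a missing /data/etc/rules.yml shows up here rather than at reload time.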

[root@mfyxw30 ~]# ps aux | grep prometheus

root 4128 2.0 3.8 886200 397848 ? Ssl 09:33 6:50 /bin/prometheus --config.file=/data/etc/prometheus.yml --storage.tsdb.path=/data/prom-db --storage.tsdb.min-block-duration=10m --storage.tsdb.retention=72h

root 29306 1.0 0.6 185524 65932 ? Ssl 15:09 0:00 traefik traefik --api --kubernetes --logLevel=INFO --insecureskipverify=true --kubernetes.endpoint=https://192.168.80.100:7443 --accesslog --accesslog.filepath=/var/log/traefik_access.log --traefiklog --traefiklog.filepath=/var/log/traefik.log --metrics.prometheus

root 29766 0.0 0.0 112720 2216 pts/0 R+ 15:10 0:00 grep --color=auto prometheus

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kill -SIGHUP 4128

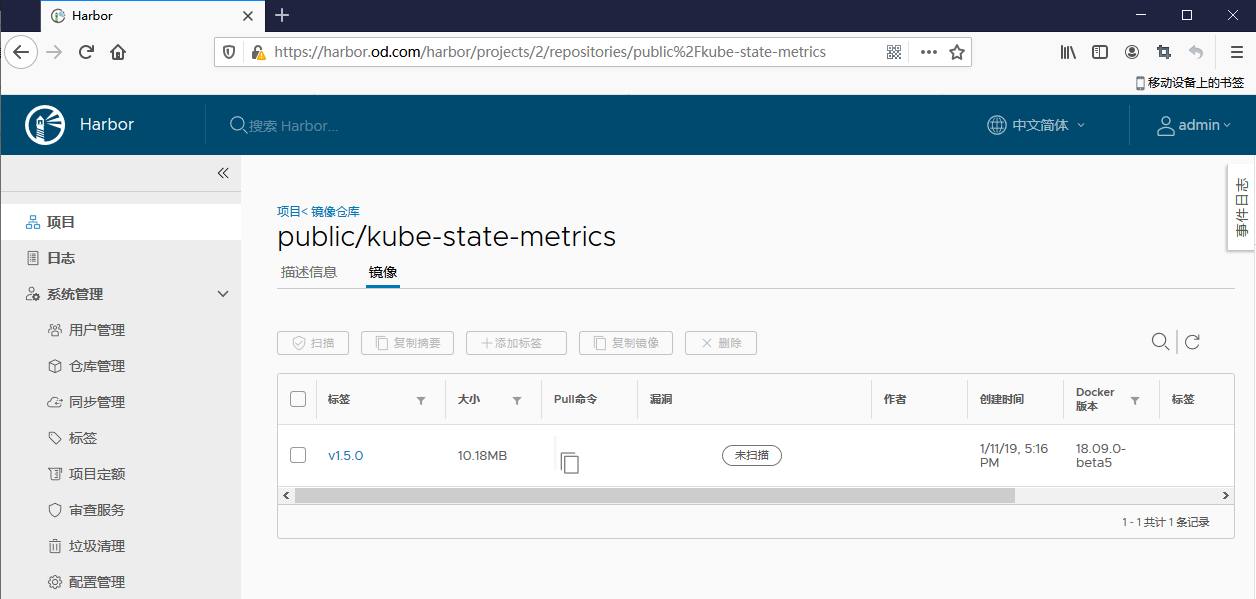
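Note that `ps aux | grep prometheus` also matches the traefik process (its arguments contain `--metrics.prometheus`) and the grep itself, so picking the PID by eye is error-prone. The sketch below selects only the process whose executable is /bin/prometheus. The function names are hypothetical, and it has to run on the node hosting the Prometheus pod. `pgrep -f '^/bin/prometheus'` is a shorter alternative. Reloading over HTTP (`POST /-/reload`) would require the `--web.enable-lifecycle` flag, which this deployment does not pass.

```shell
#!/usr/bin/env bash
# Print the Prometheus server PID from `ps aux` output read on stdin.
# In `ps aux`, field 11 is the executable; matching it exactly skips the
# traefik process (args contain --metrics.prometheus) and the grep process.
prom_pid_from_ps() {
  awk '$11 == "/bin/prometheus" { print $2 }'
}

# Send SIGHUP to the first matching process so it re-reads --config.file.
reload_prometheus() {
  local pid
  pid=$(ps aux | prom_pid_from_ps | head -n 1)
  if [ -z "$pid" ]; then
    echo "no /bin/prometheus process on this node" >&2
    return 1
  fi
  kill -HUP "$pid"
}
```

Applied to the `ps aux` output above, `prom_pid_from_ps` yields 4128, the same PID that was signalled by hand.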

(9) Test sending alert emails

Delete the pods of dubbo-demo-service (the provider):

[root@mfyxw30 ~]# kubectl delete -f http://k8s-yaml.od.com/dubbo-demo-service/deployment.yaml

[root@mfyxw30 ~]# kubectl delete -f http://k8s-yaml.od.com/dubbo-demo-consumer/deployment.yaml

Alternatively, open the dashboard and scale the dubbo-demo-service Deployment down to 0 replicas.
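The dashboard step can also be done with kubectl. This is a minimal sketch that assumes the Deployment created from dubbo-demo-service/deployment.yaml is named dubbo-demo-service. The namespace is not shown in this section, so it is passed in as an argument. The function names are hypothetical. Restoring to 1 replica is only a default, so use the replica count the Deployment originally had. `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the alert test. Assumes the Deployment is named
# dubbo-demo-service; the namespace must be supplied by the caller.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"        # dry run: print the command instead of executing it
  else
    "$@"
  fi
}

# Scale the provider to 0, the CLI equivalent of the dashboard step.
stop_provider() {
  local ns=$1
  run kubectl -n "$ns" scale deployment dubbo-demo-service --replicas=0
}

# Bring the provider back once the alert email has arrived.
restore_provider() {
  local ns=$1 replicas=${2:-1}   # default of 1 replica is an assumption
  run kubectl -n "$ns" scale deployment dubbo-demo-service --replicas="$replicas"
}
```

For example, `DRY_RUN=1 stop_provider <namespace>` shows the command first. Drop `DRY_RUN` to execute it, and run `restore_provider <namespace>` after the test.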