Managing Application Configuration with ConfigMap

Splitting the Environments

| Hostname | Role | IP address |
|---|---|---|
| mfyxw10.mfyxw.com | zk1.od.com (Test environment) | 192.168.80.10 |
| mfyxw20.mfyxw.com | zk2.od.com (Prod environment) | 192.168.80.20 |
| mfyxw30.mfyxw.com | None; ZooKeeper on this host is stopped for now | 192.168.80.30 |

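1. Stop the running ZooKeeper instances and modify their configuration files

Run the following on each of mfyxw10, mfyxw20, and mfyxw30:

[root@mfyxw10 ~]# /opt/zookeeper/bin/zkServer.sh stop   # mfyxw10 shown as an example; the other two hosts are similar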

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

Remove all files and directories under /data/zookeeper/data and /data/zookeeper/logs (mfyxw10 shown as an example; the other two hosts are similar):

[root@mfyxw10 ~]# rm -fr /data/zookeeper/data/*

[root@mfyxw10 ~]# rm -fr /data/zookeeper/logs/*

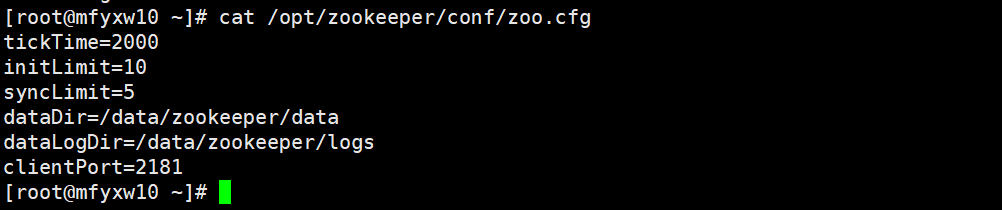
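Because `rm -fr <dir>/*` is unforgiving if the path is mistyped, the cleanup step can be wrapped in a small guard. The following is only a sketch: `clear_dir` is a hypothetical helper name, not part of ZooKeeper or of the original procedure. Unlike the glob-based `rm` above, it also removes hidden files.

```shell
# Hypothetical helper: empty a directory but keep the directory itself.
clear_dir() {
    local dir="$1"
    # Refuse an empty argument and the filesystem root.
    if [ -z "$dir" ] || [ "$dir" = "/" ]; then
        echo "refusing to clear '$dir'" >&2
        return 1
    fi
    # Refuse anything that is not an existing directory.
    if [ ! -d "$dir" ]; then
        echo "not a directory: $dir" >&2
        return 1
    fi
    # -mindepth 1 leaves $dir in place and deletes everything below it.
    find "$dir" -mindepth 1 -delete
}
```

On each host it could then be called as `clear_dir /data/zookeeper/data` and `clear_dir /data/zookeeper/logs`.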

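On mfyxw10 and mfyxw20, rewrite the ZooKeeper configuration file (mfyxw10 shown as an example). The new file defines no server.N entries, so each instance runs in standalone mode: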

[root@mfyxw10 ~]# cat > /opt/zookeeper/conf/zoo.cfg << EOF

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper/data

dataLogDir=/data/zookeeper/logs

clientPort=2181

EOF

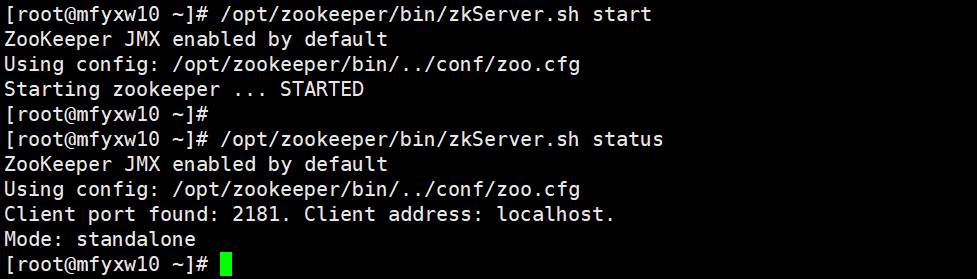
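Before starting ZooKeeper, it may be worth confirming that the rewritten file contains what is expected. The sketch below assumes a plain `key=value` file such as the zoo.cfg above; `cfg_get` and `is_standalone_cfg` are hypothetical helper names introduced here for illustration.

```shell
# Hypothetical helper: print the value of a key from a key=value file.
# $1 = key, $2 = file
cfg_get() {
    awk -F= -v key="$1" '$1 == key { print substr($0, length(key) + 2); exit }' "$2"
}

# Hypothetical helper: succeed only if the file is readable and
# defines no server.N entries (that is, no ensemble members).
is_standalone_cfg() {
    [ -r "$1" ] || return 2
    ! grep -Eq '^server\.[0-9]+=' "$1"
}
```

For example, `cfg_get clientPort /opt/zookeeper/conf/zoo.cfg` should print the client port, and `is_standalone_cfg /opt/zookeeper/conf/zoo.cfg && echo standalone` reports whether any ensemble entries remain.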

On mfyxw10 and mfyxw20, start ZooKeeper and check its status (mfyxw10 shown as an example):

[root@mfyxw10 ~]# /opt/zookeeper/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@mfyxw10 ~]#

[root@mfyxw10 ~]# /opt/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: standalone

[root@mfyxw10 ~]#
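The status check above can be scripted by extracting the `Mode:` line from the output. This is a minimal sketch; `zk_mode` is a hypothetical helper name, and it assumes the output format shown above.

```shell
# Hypothetical helper: read `zkServer.sh status` output on stdin
# and print the value of the first "Mode:" line.
zk_mode() {
    awk -F': ' '/^Mode:/ { print $2; exit }'
}
```

A possible use on each host: `/opt/zookeeper/bin/zkServer.sh status 2>&1 | zk_mode`, which should print `standalone` after the configuration change in this step.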

2. Delete the dubbo-monitor pod

Run this on either master node (mfyxw30 or mfyxw40):

[root@mfyxw30 ~]# kubectl delete -f http://k8s-yaml.od.com/dubbo-monitor/Deployment.yaml

deployment.extensions "dubbo-monitor" deleted

On the operations host (mfyxw50), recreate the Deployment and ConfigMap resource manifests for dubbo-monitor.

The Deployment manifest (saved as dp.yaml) is as follows:

[root@mfyxw50 ~]# cat > /data/k8s-yaml/dubbo-monitor/dp.yaml << EOF

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-monitor
  namespace: infra
  labels:
    name: dubbo-monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-monitor
  template:
    metadata:
      labels:
        app: dubbo-monitor
        name: dubbo-monitor
    spec:
      containers:
      - name: dubbo-monitor
        image: harbor.od.com/infra/dubbo-monitor:latest
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: configmap-volume
          mountPath: /dubbo-monitor-simple/conf
      volumes:
      - name: configmap-volume
        configMap:
          name: dubbo-monitor-cm
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

EOF

The ConfigMap manifest (configmap.yaml) is as follows:

[root@mfyxw50 ~]# cat > /data/k8s-yaml/dubbo-monitor/configmap.yaml << EOF

apiVersion: v1
kind: ConfigMap
metadata:
  name: dubbo-monitor-cm
  namespace: infra
data:
  dubbo.properties: |
    dubbo.container=log4j,spring,registry,jetty
    dubbo.application.name=simple-monitor
    dubbo.application.owner=Maple
    dubbo.registry.address=zookeeper://zk1.od.com:2181
    dubbo.protocol.port=20880
    dubbo.jetty.port=8080
    dubbo.jetty.directory=/dubbo-monitor-simple/monitor
    dubbo.charts.directory=/dubbo-monitor-simple/charts
    dubbo.statistics.directory=/dubbo-monitor-simple/statistics
    dubbo.log4j.file=/dubbo-monitor-simple/logs/dubbo-monitor.log
    dubbo.log4j.level=WARN

EOF

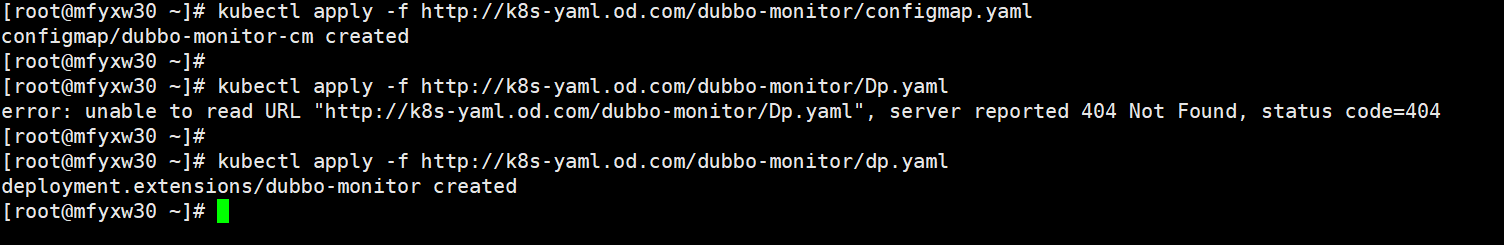
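Since the only environment-specific value in this ConfigMap is the registry address, a variant for the other environment can be derived by rewriting that one line. The sketch below is an illustration rather than part of the original procedure: `set_registry_host` is a hypothetical helper, and it assumes the address has the form `zookeeper://<host>:<port>` as in the manifest above.

```shell
# Hypothetical helper: read a manifest on stdin and write it to stdout
# with the host in dubbo.registry.address replaced by $1.
# Indentation, scheme, and port are left unchanged.
set_registry_host() {
    sed "s#^\([[:space:]]*dubbo\.registry\.address=zookeeper://\)[^:]*#\1$1#"
}
```

For instance, `set_registry_host zk2.od.com < configmap.yaml > configmap-prod.yaml` would produce a copy pointing at the Prod ZooKeeper (the output file name is an assumption). After applying a changed ConfigMap, the running pods would likely need to be restarted before the application reads the new value.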

3. Apply the dubbo-monitor resource manifests

Run this on either master node (mfyxw30 or mfyxw40):

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/configmap.yaml

configmap/dubbo-monitor-cm created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/dp.yaml

deployment.extensions/dubbo-monitor created

[root@mfyxw30 ~]# kubectl get pod -n infra

NAME READY STATUS RESTARTS AGE

dubbo-monitor-6676dd74cc-rd86g 1/1 Running 0 55s

jenkins-b99776c69-p6skp 1/1 Running 10 22d

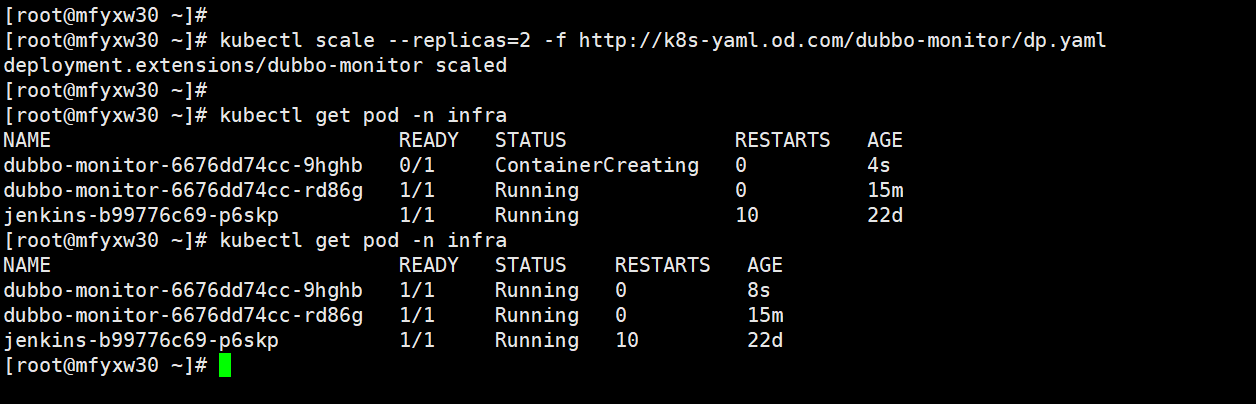

4. Scale dubbo-monitor to 2 replicas

Run this on either master node (mfyxw30 or mfyxw40):

[root@mfyxw30 ~]# kubectl scale --replicas=2 -f http://k8s-yaml.od.com/dubbo-monitor/dp.yaml

deployment.extensions/dubbo-monitor scaled

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl get pod -n infra

NAME READY STATUS RESTARTS AGE

dubbo-monitor-6676dd74cc-9hghb 0/1 ContainerCreating 0 4s

dubbo-monitor-6676dd74cc-rd86g 1/1 Running 0 15m

jenkins-b99776c69-p6skp 1/1 Running 10 22d

[root@mfyxw30 ~]# kubectl get pod -n infra

NAME READY STATUS RESTARTS AGE

dubbo-monitor-6676dd74cc-9hghb 1/1 Running 0 8s

dubbo-monitor-6676dd74cc-rd86g 1/1 Running 0 15m

jenkins-b99776c69-p6skp 1/1 Running 10 22d

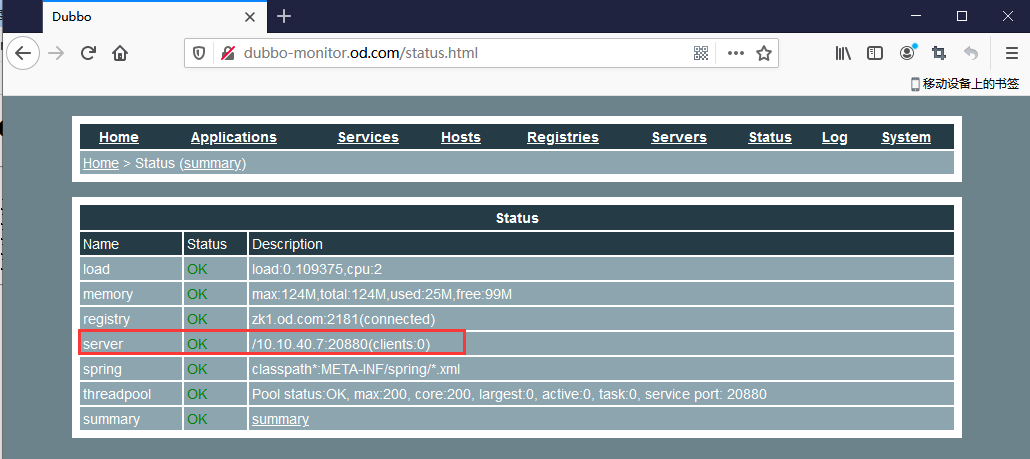
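Rather than rerunning `kubectl get pod` by hand until both replicas are up, the output can be counted in a script. This is a minimal sketch that assumes the default column layout shown above (NAME, READY, STATUS, ...); `count_ready` is a hypothetical helper name.

```shell
# Hypothetical helper: read `kubectl get pod` output on stdin and count
# the pods whose name starts with $1, whose STATUS is Running, and whose
# READY column shows all containers ready (for example 1/1).
count_ready() {
    awk -v prefix="$1" '
        index($1, prefix) == 1 && $3 == "Running" {
            split($2, r, "/")
            if (r[1] == r[2]) n++
        }
        END { print n + 0 }
    '
}
```

It could be used as `kubectl get pod -n infra | count_ready dubbo-monitor`, repeating the command until it prints 2.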

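5. Check dubbo-monitor

With two dubbo-monitor replicas running, open dubbo-monitor in a browser and refresh repeatedly; the requests can be seen switching between the two pods.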