Debugging in IDEA: submitting jobs to Hadoop as a specified user

Scenario:

While debugging a job in IDEA that operates on a remote Hadoop cluster, the job fails with an insufficient-permissions error.

Error log:

    ... 7 more
    Caused by: org.apache.hadoop.security.AccessControlException: Permission denied: user=11078, access=WRITE, inode="/hudi/flink-integration/checkpoints/flink_mysql_mysql":hanmin:hdfs:drwxr-xr-x
        at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:399)
        at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:255)
        at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkDefaultEnforcer(RangerHdfsAuthorizer.java:589)
        at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkPermission(RangerHdfsAuthorizer.java:350)
        at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:193)
        at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1857)
        at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1841)
        at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkAncestorAccess(FSDirectory.java:1800)
        at org.apache.hadoop.hdfs.server.namenode.FSDirMkdirOp.mkdirs(FSDirMkdirOp.java:59)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:3150)
        at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:1126)

Error analysis:

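The client identified itself as user 11078 (the local system user) and requested WRITE access while creating a directory (mkdirs, checkAncestorAccess) under /hudi/flink-integration/checkpoints/flink_mysql_mysql. That directory is owned by hanmin:hdfs with mode drwxr-xr-x, so only the owner hanmin may write to it. The job therefore has to access HDFS as a user that is allowed to write there.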
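The owner/group/other rule behind this message can be sketched in plain Java. This is a simplified model, assuming no superuser, no HDFS ACLs and no matching Ranger policy (the stack trace shows Ranger falling back to the default HDFS enforcer); the class and method names are hypothetical. Because the group and "other" classes both have r-x, it makes no difference whether 11078 belongs to the hdfs group.

```java
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.util.Set;

public class HdfsWriteCheck {

    // Simplified model of the owner/group/other check: exactly one class is
    // chosen (owner, else group, else other) and only that class's bits count.
    // Superuser, ACLs and Ranger policies are deliberately ignored.
    static boolean canWrite(String user, Set<String> userGroups,
                            String owner, String group, String mode) {
        // mode is the 9-character part of "drwxr-xr-x" after the type flag
        Set<PosixFilePermission> perms = PosixFilePermissions.fromString(mode);
        if (user.equals(owner)) {
            return perms.contains(PosixFilePermission.OWNER_WRITE);
        }
        if (userGroups.contains(group)) {
            return perms.contains(PosixFilePermission.GROUP_WRITE);
        }
        return perms.contains(PosixFilePermission.OTHERS_WRITE);
    }

    public static void main(String[] args) {
        // Owner, group and mode taken from the AccessControlException above.
        String owner = "hanmin", group = "hdfs", mode = "rwxr-xr-x";
        System.out.println(canWrite("11078", Set.of(), owner, group, mode));       // false
        System.out.println(canWrite("11078", Set.of("hdfs"), owner, group, mode)); // false
        System.out.println(canWrite("hanmin", Set.of(), owner, group, mode));      // true
    }
}
```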

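Source code analysis:

The following is an excerpt of the decompiled org.apache.hadoop.security.UserGroupInformation class. The part that decides which user name the client reports to HDFS is the commit() method of its login module.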

    package org.apache.hadoop.security;

    import com.google.common.annotations.VisibleForTesting;
    import java.io.File;
    import java.io.FileNotFoundException;
    import java.io.IOException;
    import java.lang.reflect.UndeclaredThrowableException;
    import java.security.AccessControlContext;
    import java.security.AccessController;
    import java.security.Principal;
    import java.security.PrivilegedAction;
    import java.security.PrivilegedActionException;
    import java.security.PrivilegedExceptionAction;
    import java.util.ArrayList;
    import java.util.Arrays;
    import java.util.Collection;
    import java.util.Collections;
    import java.util.EnumMap;
    import java.util.HashMap;
    import java.util.Iterator;
    import java.util.List;
    import java.util.Map;
    import java.util.Set;
    import java.util.concurrent.TimeUnit;
    import java.util.concurrent.atomic.AtomicBoolean;
    import java.util.concurrent.atomic.AtomicReference;
    import javax.security.auth.DestroyFailedException;
    import javax.security.auth.Subject;
    import javax.security.auth.callback.CallbackHandler;
    import javax.security.auth.kerberos.KerberosPrincipal;
    import javax.security.auth.kerberos.KerberosTicket;
    import javax.security.auth.login.AppConfigurationEntry;
    import javax.security.auth.login.LoginContext;
    import javax.security.auth.login.LoginException;
    import javax.security.auth.login.AppConfigurationEntry.LoginModuleControlFlag;
    import javax.security.auth.login.Configuration.Parameters;
    import javax.security.auth.spi.LoginModule;
    import org.apache.hadoop.classification.InterfaceAudience.LimitedPrivate;
    import org.apache.hadoop.classification.InterfaceAudience.Private;
    import org.apache.hadoop.classification.InterfaceAudience.Public;
    import org.apache.hadoop.classification.InterfaceStability.Evolving;
    import org.apache.hadoop.classification.InterfaceStability.Unstable;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.io.retry.RetryPolicies;
    import org.apache.hadoop.io.retry.RetryPolicy;
    import org.apache.hadoop.io.retry.RetryPolicy.RetryAction;
    import org.apache.hadoop.metrics2.annotation.Metric;
    import org.apache.hadoop.metrics2.annotation.Metrics;
    import org.apache.hadoop.metrics2.lib.DefaultMetricsSystem;
    import org.apache.hadoop.metrics2.lib.MetricsRegistry;
    import org.apache.hadoop.metrics2.lib.MutableGaugeInt;
    import org.apache.hadoop.metrics2.lib.MutableGaugeLong;
    import org.apache.hadoop.metrics2.lib.MutableQuantiles;
    import org.apache.hadoop.metrics2.lib.MutableRate;
    import org.apache.hadoop.security.SaslRpcServer.AuthMethod;
    import org.apache.hadoop.security.authentication.util.KerberosUtil;
    import org.apache.hadoop.security.token.Token;
    import org.apache.hadoop.security.token.TokenIdentifier;
    import org.apache.hadoop.util.PlatformName;
    import org.apache.hadoop.util.Shell;
    import org.apache.hadoop.util.StringUtils;
    import org.apache.hadoop.util.Time;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    @Public
    @Evolving
    public class UserGroupInformation {
        @VisibleForTesting
        static final Logger LOG = LoggerFactory.getLogger(UserGroupInformation.class);
        private static final float TICKET_RENEW_WINDOW = 0.8F;
        private static boolean shouldRenewImmediatelyForTests = false;
        static final String HADOOP_USER_NAME = "HADOOP_USER_NAME";
        static final String HADOOP_PROXY_USER = "HADOOP_PROXY_USER";
        static UserGroupInformation.UgiMetrics metrics = UserGroupInformation.UgiMetrics.create();
        private static UserGroupInformation.AuthenticationMethod authenticationMethod;
        private static Groups groups;
        private static long kerberosMinSecondsBeforeRelogin;
        private static Configuration conf;
        public static final String HADOOP_TOKEN_FILE_LOCATION = "HADOOP_TOKEN_FILE_LOCATION";
        private static final AtomicReference<UserGroupInformation> loginUserRef = new AtomicReference();
        private final Subject subject;
        private final User user;
        private static String OS_LOGIN_MODULE_NAME = getOSLoginModuleName();
        private static Class<? extends Principal> OS_PRINCIPAL_CLASS = getOsPrincipalClass();
        private static final boolean windows = System.getProperty("os.name").startsWith("Windows");
        private static final boolean is64Bit = System.getProperty("os.arch").contains("64") || System.getProperty("os.arch").contains("s390x");
        private static final boolean aix = System.getProperty("os.name").equals("AIX");

        ...

            public boolean commit() throws LoginException {
                if (UserGroupInformation.LOG.isDebugEnabled()) {
                    UserGroupInformation.LOG.debug("hadoop login commit");
                }
                // A user principal already attached to the subject is reused as-is.
                if (!this.subject.getPrincipals(User.class).isEmpty()) {
                    if (UserGroupInformation.LOG.isDebugEnabled()) {
                        UserGroupInformation.LOG.debug("using existing subject:" + this.subject.getPrincipals());
                    }
                    return true;
                } else {
                    // 1. Kerberos principal, if the subject has one.
                    Principal user = this.getCanonicalUser(KerberosPrincipal.class);
                    if (user != null && UserGroupInformation.LOG.isDebugEnabled()) {
                        UserGroupInformation.LOG.debug("using kerberos user:" + user);
                    }
                    String envUser;
                    // 2. Security disabled (simple auth): HADOOP_USER_NAME from the
                    //    environment first, then from the JVM system properties.
                    if (!UserGroupInformation.isSecurityEnabled() && user == null) {
                        envUser = System.getenv("HADOOP_USER_NAME");
                        if (envUser == null) {
                            envUser = System.getProperty("HADOOP_USER_NAME");
                        }
                        user = envUser == null ? null : new User(envUser);
                    }
                    // 3. Fallback: the local OS user (user=11078 in the error above).
                    if (user == null) {
                        user = this.getCanonicalUser(UserGroupInformation.OS_PRINCIPAL_CLASS);
                        if (UserGroupInformation.LOG.isDebugEnabled()) {
                            UserGroupInformation.LOG.debug("using local user:" + user);
                        }
                    }
                    if (user != null) {
                        if (UserGroupInformation.LOG.isDebugEnabled()) {
                            UserGroupInformation.LOG.debug("Using user: \"" + user + "\" with name " + ((Principal)user).getName());
                        }
                        envUser = null;
                        User userEntry;
                        try {
                            UserGroupInformation.AuthenticationMethod authMethod = user instanceof KerberosPrincipal ? UserGroupInformation.AuthenticationMethod.KERBEROS : UserGroupInformation.AuthenticationMethod.SIMPLE;
                            userEntry = new User(((Principal)user).getName(), authMethod, (LoginContext)null);
                        } catch (Exception var4) {
                            throw (LoginException)((LoginException)(new LoginException(var4.toString())).initCause(var4));
                        }
                        if (UserGroupInformation.LOG.isDebugEnabled()) {
                            UserGroupInformation.LOG.debug("User entry: \"" + userEntry.toString() + "\"");
                        }
                        // The resolved name is what the client sends to the NameNode.
                        this.subject.getPrincipals().add(userEntry);
                        return true;
                    } else {
                        UserGroupInformation.LOG.error("Can't find user in " + this.subject);
                        throw new LoginException("Can't find user name");
                    }
                }
            }

            public void initialize(Subject subject, CallbackHandler callbackHandler, Map<String, ?> sharedState, Map<String, ?> options) {
                this.subject = subject;
            }

            public boolean login() throws LoginException {
                if (UserGroupInformation.LOG.isDebugEnabled()) {
                    UserGroupInformation.LOG.debug("hadoop login");
                }
                return true;
            }

            public boolean logout() throws LoginException {
                if (UserGroupInformation.LOG.isDebugEnabled()) {
                    UserGroupInformation.LOG.debug("hadoop logout");
                }
                return true;
            }
        }

        @Metrics(
            about = "User and group related metrics",
            context = "ugi"
        )
        static class UgiMetrics {
            final MetricsRegistry registry = new MetricsRegistry("UgiMetrics");
            @Metric({"Rate of successful kerberos logins and latency (milliseconds)"})
            MutableRate loginSuccess;
            @Metric({"Rate of failed kerberos logins and latency (milliseconds)"})
            MutableRate loginFailure;
            @Metric({"GetGroups"})
            MutableRate getGroups;
            MutableQuantiles[] getGroupsQuantiles;
            @Metric({"Renewal failures since startup"})
            private MutableGaugeLong renewalFailuresTotal;
            @Metric({"Renewal failures since last successful login"})
            private MutableGaugeInt renewalFailures;

            UgiMetrics() {
            }

            static UserGroupInformation.UgiMetrics create() {
                return (UserGroupInformation.UgiMetrics)DefaultMetricsSystem.instance().register(new UserGroupInformation.UgiMetrics());
            }

            static void reattach() {
                UserGroupInformation.metrics = create();
            }

            void addGetGroups(long latency) {
                this.getGroups.add(latency);
                if (this.getGroupsQuantiles != null) {
                    MutableQuantiles[] var3 = this.getGroupsQuantiles;
                    int var4 = var3.length;
                    for (int var5 = 0; var5 < var4; ++var5) {
                        MutableQuantiles q = var3[var5];
                        q.add(latency);
                    }
                }
            }

            MutableGaugeInt getRenewalFailures() {
                return this.renewalFailures;
            }
        }
    }
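Stripped of logging and JAAS plumbing, the part of commit() that matters here is a three-step lookup. The class and method names below are hypothetical, and the Kerberos and existing-subject branches are left out; it restates the decompiled logic for illustration and is not a Hadoop API.

```java
import java.util.Map;
import java.util.Properties;

public class HadoopUserResolution {
    static final String HADOOP_USER_NAME = "HADOOP_USER_NAME";

    // Simplified restatement of the non-Kerberos branch of commit():
    // environment variable first, then JVM system property, then the OS user.
    static String resolveUser(Map<String, String> env, Properties sysProps, String osUser) {
        String user = env.get(HADOOP_USER_NAME);
        if (user == null) {
            user = sysProps.getProperty(HADOOP_USER_NAME);
        }
        return user != null ? user : osUser;
    }

    public static void main(String[] args) {
        // Hadoop takes the OS user from a JAAS OS principal; "user.name" is used
        // here only as an approximation of that value.
        String user = resolveUser(System.getenv(), System.getProperties(),
                System.getProperty("user.name"));
        System.out.println("HDFS requests would be sent as: " + user);
    }
}
```

Run from IDEA without extra settings, this should print the local user name, which is the same user that appears in the exception.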

Solution:

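The source shows that, when Kerberos security is not enabled, Hadoop determines the acting user from HADOOP_USER_NAME: it reads the environment variable first and then the JVM system property of the same name. If neither is set, it falls back to the current OS user name, which is why the request was sent as user 11078. Setting HADOOP_USER_NAME to a user with write access to the target directory (here its owner, hanmin) resolves the error.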

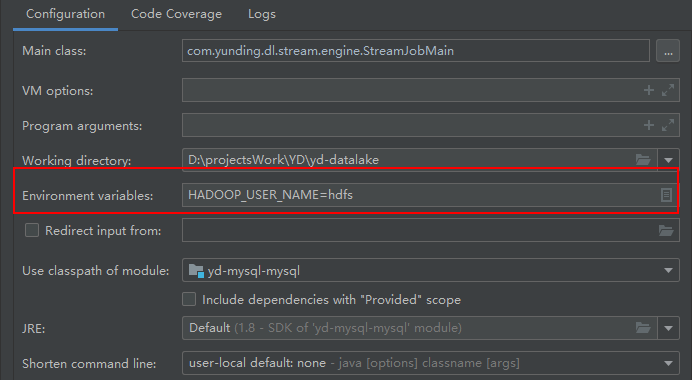

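Option 1: set the variable in the Environment variables field of the IDEA run configuration (Run > Edit Configurations), for example HADOOP_USER_NAME=hanmin.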

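Option 2: set HADOOP_USER_NAME as a system-wide environment variable. Restart IDEA afterwards so that the processes it launches inherit the new value.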
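Because commit() also falls back to the JVM system property, the same effect can be had without any environment variable: pass -DHADOOP_USER_NAME=hanmin in the run configuration's VM options, or set the property at the very start of main. A minimal sketch, assuming the job is launched from your own main method; the class name DebugJobLauncher and the helper useHadoopUser are hypothetical, and hanmin is simply the directory owner from the error. The property must be set before the first Hadoop call, since UserGroupInformation caches the login user (loginUserRef), and an existing HADOOP_USER_NAME environment variable still takes precedence.

```java
public class DebugJobLauncher {
    static final String HADOOP_USER_NAME = "HADOOP_USER_NAME";

    // Sets HADOOP_USER_NAME as a JVM system property unless it is already configured.
    static void useHadoopUser(String user) {
        // commit() checks the environment variable first, so setting the
        // property would have no effect if the variable exists.
        if (System.getenv(HADOOP_USER_NAME) == null
                && System.getProperty(HADOOP_USER_NAME) == null) {
            System.setProperty(HADOOP_USER_NAME, user);
        }
    }

    public static void main(String[] args) {
        // Must run before anything touches HDFS or UserGroupInformation.
        useHadoopUser("hanmin");
        // ... build and run the job here (elided)
        System.out.println("HADOOP_USER_NAME=" + System.getProperty(HADOOP_USER_NAME));
    }
}
```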