Configure Hadoop (master, slave1, slave2)

Setup: NameNode: master; DataNodes: slave1, slave2

--------------------------------------------------------

A. Edit the masters and slaves files on the master host

i. Configure slaves:

```
# vi hadoop/conf/slaves
```

Add the node (DataNode) IPs, one per line:

```
192.168.126.20
192.168.126.30
```

ii. Configure masters (hadoop/conf/masters) and add the master host's IP:

```
192.168.126.10
```

--------------------------------------------------------

B. Configure the .xml files on master

i. core-site.xml:

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/had/hadoop/data</value>
    <description>A base for other temporary directories.</description>
  </property>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://192.168.126.10:9000</value>
  </property>
</configuration>
```

ii. hdfs-site.xml:

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
    <description>Default block replication.
      The actual number of replications can be specified when the file is created.
      The default is used if replication is not specified in create time.
    </description>
  </property>
</configuration>
```

iii. mapred-site.xml:

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>192.168.126.10:9001</value>
  </property>
</configuration>
```

--------------------------------------------------------

C. Configure slave1 and slave2 (same as above)

i. core-site.xml

ii. mapred-site.xml

--------------------------------------------------------

D. Set up the Hadoop environment on master, slave1, and slave2

```
$ vi /home/hadoop/.bashrc
```

Add:

```
export HADOOP_HOME=/home/hadoop/hadoop-0.20.2
export HADOOP_CONF_DIR=$HADOOP_HOME/conf
export PATH=/home/hadoop/hadoop-0.20.2/bin:$PATH
```

--------------------------------------------------------
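Since the same core-site.xml and mapred-site.xml have to be repeated on slave1 and slave2 by hand, it can help to generate every conf file from one place. The following is a minimal sketch using only the Python standard library; the function names are hypothetical, the values are the ones from sections A and B, and copying the files to the slaves (scp, rsync, etc.) is left out. `replication` is a parameter so it can be matched to the cluster: with only two DataNodes, HDFS has nowhere to put a third replica.

```python
import os
import xml.etree.ElementTree as ET

# Header lines used by the stock Hadoop 0.20 config files.
HEADER = ('<?xml version="1.0"?>\n'
          '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>\n')


def build_configuration(props):
    """Render a {name: value} dict as a Hadoop <configuration> document."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return HEADER + ET.tostring(root, encoding="unicode") + "\n"


def read_configuration(xml_text):
    """Parse a <configuration> document back into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def render_conf_files(master_ip, slave_ips,
                      tmp_dir="/home/had/hadoop/data", replication=3):
    """Return {file name: contents} for hadoop/conf (hypothetical helper)."""
    core = {
        "hadoop.tmp.dir": tmp_dir,
        "fs.default.name": f"hdfs://{master_ip}:9000",
    }
    hdfs = {"dfs.replication": str(replication)}
    mapred = {"mapred.job.tracker": f"{master_ip}:9001"}
    return {
        "masters": master_ip + "\n",
        "slaves": "".join(ip + "\n" for ip in slave_ips),  # one host per line
        "core-site.xml": build_configuration(core),
        "hdfs-site.xml": build_configuration(hdfs),
        "mapred-site.xml": build_configuration(mapred),
    }


def write_conf_dir(conf_dir, files):
    """Write the rendered files into conf_dir, e.g. hadoop/conf."""
    os.makedirs(conf_dir, exist_ok=True)
    for name, text in files.items():
        with open(os.path.join(conf_dir, name), "w", encoding="utf-8") as f:
            f.write(text)
```

Usage: `write_conf_dir("hadoop/conf", render_conf_files("192.168.126.10", ["192.168.126.20", "192.168.126.30"]))`, then copy the XML files to the same directory on each slave.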

Initialize (format) the file system:

$ bin/hadoop namenode -format

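Note: the following error sometimes appears:

...

11/08/18 17:02:35 INFO ipc.Client: Retrying connect to server: localhost/192.168.126.10:9000. Already tried 0 time(s).

Bad connection to FS. command aborted.

In that case, delete the contents of the tmp directory under the root directory, then format the file system again.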
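The cleanup step can be sketched in Python as below. This is a minimal sketch, not the author's procedure: `clear_directory` is a hypothetical helper, and the path is a parameter so you can pass the tmp directory described above or whatever hadoop.tmp.dir points to (/home/had/hadoop/data in core-site.xml). It erases all HDFS metadata and data stored there, so run it only with the daemons stopped (bin/stop-all.sh), and reformat afterwards with bin/hadoop namenode -format. If the DataNodes then refuse to start, the same directory on slave1 and slave2 may still hold data from the previous format.

```python
import os
import shutil


def clear_directory(path):
    """Remove everything inside `path`, keeping `path` itself.

    Returns the sorted names of the removed entries, or [] if `path`
    is not an existing directory.
    """
    if not os.path.isdir(path):
        return []
    with os.scandir(path) as it:
        entries = list(it)  # snapshot the listing before deleting anything
    for entry in entries:
        if entry.is_dir(follow_symlinks=False):
            shutil.rmtree(entry.path)  # real subdirectory, e.g. dfs/
        else:
            os.remove(entry.path)  # files and symlinks (the link, not its target)
    return sorted(e.name for e in entries)
```

Usage: `clear_directory("/home/had/hadoop/data")` on master, then format again.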

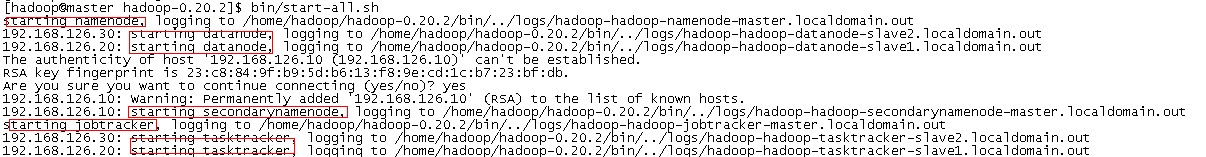

Start Hadoop:

$ bin/start-all.sh

When startup finishes, run a test:

Test:

```
$ bin/hadoop fs -put ./README.txt test1
$ bin/hadoop fs -ls
Found 1 items
drwxr-xr-x - hadoop supergroup 0 2013-07-14 00:51 /user/hadoop/test1
$ hadoop jar hadoop-0.20.2-examples.jar wordcount /user/hadoop/test1/README.txt output1
```

The job then failed with the problems described below.

Note: you may see some errors while testing. The following are several problems I ran into during installation.

1. org.apache.hadoop.ipc.RemoteException: org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot delete /home/hadoop/hadoop-datastore/hadoop-hadoop/mapred/system/job_201307132331_0005. Name node is in safe mode.

Fix: turn off safe mode:

```
$ bin/hadoop dfsadmin -safemode leave
```

2. org.apache.hadoop.ipc.RemoteException: java.io.IOException: File /user/hadoop/test1/README.txt could only be replicated to 0 nodes, instead of 1

Case 1: the disk that holds hadoop.tmp.dir is out of space. Fix: switch to a disk with enough free space.

Case 2: a firewall is blocking traffic. Check the firewall status and, if necessary, stop it:

```
/etc/init.d/iptables status
/etc/init.d/iptables stop   # turn off the firewall entirely
```

Case 3: start the NameNode and the DataNodes one after the other (this was my situation). Reference: http://sjsky.iteye.com/blog/1124545
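For case 1, a quick way to see how much room is left on the disk behind hadoop.tmp.dir is a small Python check like the one below. It is a sketch: the path comes from core-site.xml in this setup, while the helper names and the 1 GiB threshold are hypothetical choices, not Hadoop settings. It is most useful on slave1 and slave2, because DataNode block storage (dfs.data.dir) defaults to a directory under hadoop.tmp.dir.

```python
import os
import shutil

# Path from core-site.xml above; change it if hadoop.tmp.dir differs.
HADOOP_TMP_DIR = "/home/had/hadoop/data"


def nearest_existing(path):
    """Walk up from `path` until an existing path is found."""
    path = os.path.abspath(path)
    while not os.path.exists(path):
        parent = os.path.dirname(path)
        if parent == path:  # reached the filesystem root
            break
        path = parent
    return path


def free_space_gib(path):
    """Free space (GiB) available to non-root users on the filesystem holding `path`."""
    return shutil.disk_usage(nearest_existing(path)).free / 1024 ** 3


def has_enough_space(path, min_free_gib=1.0):
    """Hypothetical threshold check; 1 GiB is an arbitrary default."""
    return free_space_gib(path) >= min_free_gib


if __name__ == "__main__":
    print(f"{HADOOP_TMP_DIR}: {free_space_gib(HADOOP_TMP_DIR):.1f} GiB free")
```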

The final run looks like this:

View the HDFS status (web): http://192.168.126.10:50070/dfshealth.jsp

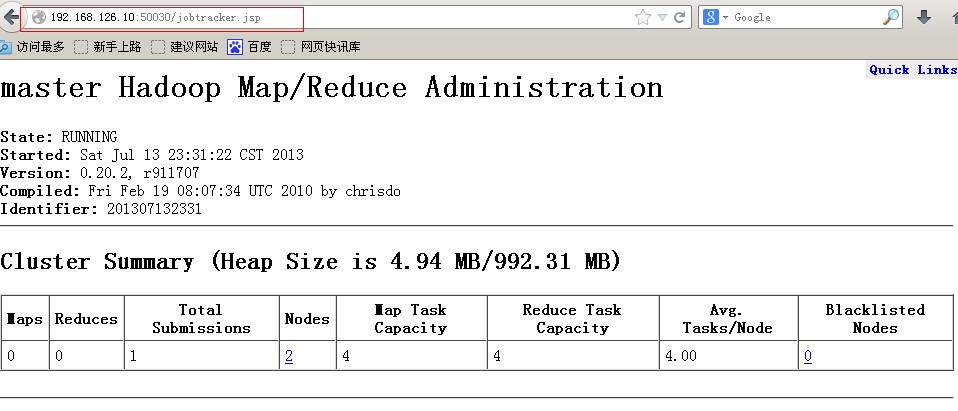

View MapReduce job information (web): http://192.168.126.10:50030/jobtracker.jsp
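The "Retrying connect to server ... 9000" error earlier and the two web pages above all depend on a port on master being reachable from the machine you are on. A minimal Python sketch that probes them with plain TCP connects is shown below; the ports come from fs.default.name, mapred.job.tracker, and the two URLs, while the `SERVICES` labels and function names are hypothetical. It only shows that something accepts a TCP connection on each port, not that it is the right Hadoop daemon.

```python
import socket

# Addresses from this setup; the labels are only for readability.
SERVICES = {
    "NameNode RPC": ("192.168.126.10", 9000),
    "JobTracker RPC": ("192.168.126.10", 9001),
    "HDFS web UI": ("192.168.126.10", 50070),
    "JobTracker web UI": ("192.168.126.10", 50030),
}


def is_port_open(host, port, timeout=2.0):
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def check_services(services=SERVICES, timeout=2.0):
    """Return {label: reachable?} for every (host, port) in `services`."""
    return {name: is_port_open(host, port, timeout)
            for name, (host, port) in services.items()}
```

Running `check_services()` from a slave is a quick way to tell a firewall problem (case 2 above) apart from a daemon that never started.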

This completes the Hadoop cluster setup.
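To sanity-check the wordcount test once it succeeds, the same counts can be computed locally and compared with the job output. This Python sketch is an approximation, not the example job itself: the WordCount example splits lines with java.util.StringTokenizer on its default delimiters (space, tab, newline, carriage return, form feed), whereas `str.split()` also treats a few other characters (such as vertical tab and Unicode spaces) as whitespace. The sorted "word<TAB>count" layout assumes a single reducer; the function names are hypothetical.

```python
from collections import Counter


def word_count(text):
    """Count whitespace-separated tokens, approximating WordCount's tokenizer."""
    return Counter(text.split())


def format_like_hadoop(counts):
    """Render counts as 'word<TAB>count' lines sorted by word.

    Sorting str by code point matches sorting UTF-8 bytes, which is how
    Hadoop orders Text keys.
    """
    return "".join(f"{word}\t{n}\n" for word, n in sorted(counts.items()))
```

Usage: compute `format_like_hadoop(word_count(open("README.txt").read()))` locally and compare it with the output of, e.g., `bin/hadoop fs -cat output1/part-*`.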