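Before you start

I built this environment on Docker with docker-compose, so install Docker and docker-compose first and get a basic feel for how both are used.

Preface

Q: What is ELK?

A: ELK stands for Elasticsearch + Logstash + Kibana.

Q: What is ELK used for?

A: ELK collects and analyzes logs. It gives you centralized log management, so developers and operations staff can analyze logs and find problems quickly.

It has many other useful features as well, which are left for you to explore.

Here Filebeat collects the logs and ships them to ELK.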

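es:

Elasticsearch is a distributed, RESTful search and analytics engine.

kibana:

Kibana is the window into the Elastic Stack. It lets you visually explore and analyze data in Elasticsearch in real time.

logstash:

Logstash is an open source, server-side data processing pipeline that ingests data from multiple sources at the same time, transforms it, and then sends it to your favorite "stash".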

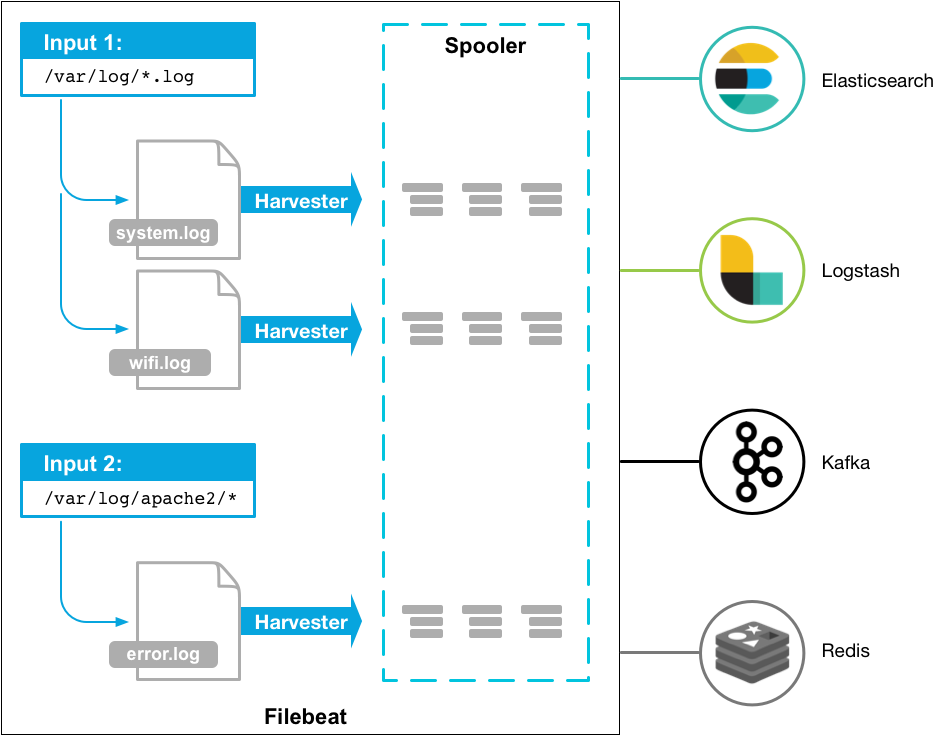

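filebeat:

A lightweight log shipper that can send the logs it collects to es, Logstash, Kafka, or Redis.

[Figure: Filebeat overview diagram]

[Figure: ELK log collection sequence diagram]

Now let's get hands-on.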

Preparation

$ mkdir ELK_pro
$ cd ELK_pro
$ touch docker-compose.yml
$ touch Dockerfile
$ touch filebeat.yml
$ touch kibana.yml
$ touch logstash-pipeline.conf
$ touch logstash.yml

1. Elasticsearch setup

I wrote docker-compose.yml directly from the example on the official site and made a few small changes. Here is my configuration after those changes:

version: "3"
services:
  es01:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.6.1
    container_name: es01
    environment:
      - node.name=es01
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es02,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - xpack.security.enabled=true
      - xpack.security.authc.accept_default_password=true
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.security.transport.ssl.keystore.path=/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
      - xpack.security.transport.ssl.truststore.path=/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data01:/usr/share/elasticsearch/data
      - ./elastic-certificates.p12:/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
    ports:
      - 9200:9200
    networks:
      - falling_wind
  es02:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.6.1
    container_name: es02
    environment:
      - node.name=es02
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - xpack.security.enabled=true
      - xpack.security.authc.accept_default_password=true
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.security.transport.ssl.keystore.path=/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
      - xpack.security.transport.ssl.truststore.path=/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data02:/usr/share/elasticsearch/data
      - ./elastic-certificates.p12:/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
    networks:
      - falling_wind
  es03:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.6.1
    container_name: es03
    environment:
      - node.name=es03
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es02
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - xpack.security.enabled=true
      - xpack.security.authc.accept_default_password=true
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.security.transport.ssl.keystore.path=/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
      - xpack.security.transport.ssl.truststore.path=/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data03:/usr/share/elasticsearch/data
      - ./elastic-certificates.p12:/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
    networks:
      - falling_wind

volumes:
  data01:
    driver: local
  data02:
    driver: local
  data03:
    driver: local

networks:
  falling_wind:
    driver: bridge

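This configuration uses certificate authentication.

The certificate is generated as follows:

- Enter the container (es01):

$ docker ps
$ docker exec -it CONTAINER_ID_OR_NAME /bin/sh

- Generate the certificate and copy it out:

$ cd bin
$ elasticsearch-certutil ca
$ elasticsearch-certutil cert --ca elastic-stack-ca.p12
$ exit
$ docker cp CONTAINER_ID:/usr/share/elasticsearch/elastic-certificates.p12 .

# Note: do not forget the trailing dot.
# The compose file above configures no keystore password, so this assumes the certificate password prompts are left empty.

- Set the passwords on es01:

$ docker ps
$ docker exec -it CONTAINER_ID_OR_NAME /bin/sh
$ cd bin
$ elasticsearch-setup-passwords interactive

# Follow the prompts to set the passwords.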
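Once the passwords are set, one way to confirm that the cluster is up and that authentication works is to query the cluster health endpoint. The following is a minimal sketch using only the Python standard library. It assumes you run it on the Docker host, where port 9200 is published by the compose file, and that you log in as the built-in `elastic` user with the password you just chose. The function names `build_health_request` and `fetch_cluster_health` are made up for this example.

```python
import base64
import json
import urllib.request


def build_health_request(base_url, username, password):
    """Build a GET request for _cluster/health that carries HTTP basic auth."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(f"{base_url.rstrip('/')}/_cluster/health")
    request.add_header("Authorization", f"Basic {token}")
    return request


def fetch_cluster_health(base_url, username, password, timeout=10):
    """Send the request and return the parsed JSON response body."""
    request = build_health_request(base_url, username, password)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Example call (assumed host and placeholder password; needs a running cluster):
# health = fetch_cluster_health("http://localhost:9200", "elastic", "your password")
# print(health["status"], health["number_of_nodes"])
```

If all three nodes have joined, the `number_of_nodes` field in the response should be 3.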

2. Kibana setup

Configure Kibana

docker-compose.yml

  kibana:
    image: docker.elastic.co/kibana/kibana:7.6.1
    container_name: kibana_7_61
    ports:
      - "5601:5601"
    volumes:
      - ./kibana.yml:/usr/share/kibana/config/kibana.yml
    networks:
      - falling_wind
    depends_on:
      - es01

kibana.yml

server.name: kibana
server.host: "0"
elasticsearch.hosts: ["http://172.18.114.219:9200"]
xpack.monitoring.ui.container.elasticsearch.enabled: true
elasticsearch.username: your username
elasticsearch.password: your password

3. Logstash setup

Configure Logstash

docker-compose.yml

  logstash:
    image: docker.elastic.co/logstash/logstash:7.6.1
    container_name: logstash_7_61
    ports:
      - "5044:5044"
    volumes:
      - ./logstash.yml:/usr/share/logstash/config/logstash.yml
      - ./logstash-pipeline.conf:/usr/share/logstash/conf.d/logstash-pipeline.conf
    networks:
      - falling_wind

logstash.yml

path.config: /usr/share/logstash/conf.d/*.conf
path.logs: /var/log/logstash

logstash-pipeline.conf

input {
  beats {
    port => 5044
    codec => json
  }
  tcp {
    port => 8000
    codec => json
  }
}

output {
  elasticsearch {
    hosts => ["172.18.114.219:9200"]
    index => "falling-wind"
    user => "your username"
    password => "your password"
  }
  stdout {
    codec => rubydebug
  }
}
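Besides the Beats input, the pipeline also listens for JSON over TCP on port 8000. As a rough sketch of how an application could push one event to that input, the snippet below serializes a dictionary as a single line of JSON and writes it to a socket. Several things here are assumptions rather than part of the setup above: the compose file only publishes 5044, so you would have to add a `"8000:8000"` port mapping yourself; sending one newline-terminated JSON object per event is a convention chosen for this example; and the names `encode_event` and `send_event` are invented.

```python
import json
import socket


def encode_event(event):
    """Serialize one event as a single line of UTF-8 JSON ending in a newline."""
    return (json.dumps(event, ensure_ascii=False) + "\n").encode("utf-8")


def send_event(host, port, event, timeout=5):
    """Open a TCP connection, send one encoded event, then close the socket."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(encode_event(event))


# Example call (assumes port 8000 has been published and Logstash is running):
# send_event("localhost", 8000, {"message": "hello from python", "level": "INFO"})
```

Because the pipeline also has a `stdout` output with the `rubydebug` codec, an event that arrives should show up in `docker logs logstash_7_61` as well as in the `falling-wind` index.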

4. Filebeat setup

Configure Filebeat

docker-compose.yml

  filebeat:
    container_name: filebeat_7_61
    build:
      context: .
      dockerfile: Dockerfile
    volumes:
      - /var/logs:/usr/share/filebeat/logs
    networks:
      - falling_wind

Dockerfile

FROM docker.elastic.co/beats/filebeat:7.6.1
COPY filebeat.yml /usr/share/filebeat/filebeat.yml
USER root
RUN chown root:filebeat /usr/share/filebeat/filebeat.yml
RUN chown root:filebeat /usr/share/filebeat/data/meta.json

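Note: the Dockerfile on the official site also ends with USER filebeat. In theory that should cause no problems, but startup kept failing with a permission error on /usr/share/filebeat/data/meta.json, so for now I simply removed that line.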

filebeat.yml

filebeat.inputs:
  - type: log
    paths:
      - /usr/share/filebeat/logs/falling-wind/*.log
    multiline.pattern: '^[[:space:]]'
    multiline.negate: false
    multiline.match: after
    tags: ["falling-wind"]
  - type: log
    paths:
      - /usr/share/filebeat/logs/celery/*.log
    multiline.pattern: '^[[:space:]]'
    multiline.negate: false
    multiline.match: after
    tags: ["celery"]
  - type: log
    paths:
      - /usr/share/filebeat/logs/gunicorn/*.log
    multiline.pattern: '^[[:space:]]'
    multiline.negate: false
    multiline.match: after
    tags: ["gunicorn"]
  - type: log
    paths:
      - /usr/share/filebeat/logs/supervisor/*.log
    tags: ["supervisor"]

#============================= Filebeat modules ===============================
filebeat.config.modules:
  # Glob pattern for configuration loading
  path: ${path.config}/modules.d/*.yml
  # Set to true to enable config reloading
  reload.enabled: true

output.logstash:
  hosts: ["172.18.114.219:5044"]

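Pay attention to the settings that merge multi-line messages.

To merge a stack trace into a single event, every line that begins with whitespace is appended to the line before it:

multiline.pattern: '^[[:space:]]'
multiline.negate: false
multiline.match: after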
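To make the effect of these three settings concrete, here is a small Python simulation of the merging rule. It is only an approximation for illustration and is not Filebeat's implementation: Python's `re` module has no POSIX `[[:space:]]` class, so the sketch uses `^\s` as a stand-in, and the function name `merge_multiline` is hypothetical.

```python
import re

# Stand-in for multiline.pattern '^[[:space:]]': the line starts with whitespace.
CONTINUATION = re.compile(r"^\s")


def merge_multiline(lines):
    """Append each line that starts with whitespace to the event before it.

    This mirrors negate: false (lines that match are continuations) and
    match: after (continuations are attached after the preceding line).
    """
    events = []
    for line in lines:
        if events and CONTINUATION.match(line):
            events[-1] += "\n" + line
        else:
            events.append(line)
    return events


sample = [
    "ERROR request failed",
    "    at a.b(C.java:1)",
    "    at d.e(F.java:2)",
    "INFO next request",
]
merged = merge_multiline(sample)
print(len(merged))  # 2
```

The two indented `at ...` lines are folded into the `ERROR` event, while the unindented `INFO` line starts a new one. Note that any continuation line that does not begin with whitespace, such as the final exception line of a Python traceback, becomes its own event under this pattern.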

Summary

The configuration files needed in total:

- docker-compose.yml

- Dockerfile: builds the Filebeat image

- elastic-certificates.p12: the certificate file

- filebeat.yml

- kibana.yml

- logstash-pipeline.conf

- logstash.yml
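Before bringing the stack up, it can help to check that every file in the list above really exists, because Docker tends to create an empty directory in place of a missing bind-mounted file. The following is a small sketch of such a check; the helper name `missing_files` and the idea of running it from the ELK_pro directory are assumptions made for this example.

```python
from pathlib import Path

# The file names come from the summary list above.
REQUIRED_FILES = [
    "docker-compose.yml",
    "Dockerfile",
    "elastic-certificates.p12",
    "filebeat.yml",
    "kibana.yml",
    "logstash-pipeline.conf",
    "logstash.yml",
]


def missing_files(project_dir):
    """Return the required names that are not regular files in project_dir."""
    root = Path(project_dir)
    return [name for name in REQUIRED_FILES if not (root / name).is_file()]


# Example call from inside the project directory:
# print(missing_files("."))
```

Using `is_file()` rather than `exists()` also flags the case where a directory was created under one of these names by mistake.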

The complete docker-compose.yml:

version: "3"
services:
  es01:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.6.1
    container_name: es01
    environment:
      - node.name=es01
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es02,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - xpack.security.enabled=true
      - xpack.security.authc.accept_default_password=true
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.security.transport.ssl.keystore.path=/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
      - xpack.security.transport.ssl.truststore.path=/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data01:/usr/share/elasticsearch/data
      - ./elastic-certificates.p12:/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
    ports:
      - 9200:9200
    networks:
      - falling_wind
  es02:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.6.1
    container_name: es02
    environment:
      - node.name=es02
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - xpack.security.enabled=true
      - xpack.security.authc.accept_default_password=true
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.security.transport.ssl.keystore.path=/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
      - xpack.security.transport.ssl.truststore.path=/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data02:/usr/share/elasticsearch/data
      - ./elastic-certificates.p12:/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
    networks:
      - falling_wind
  es03:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.6.1
    container_name: es03
    environment:
      - node.name=es03
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es02
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - xpack.security.enabled=true
      - xpack.security.authc.accept_default_password=true
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.security.transport.ssl.keystore.path=/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
      - xpack.security.transport.ssl.truststore.path=/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data03:/usr/share/elasticsearch/data
      - ./elastic-certificates.p12:/usr/share/elasticsearch/config/certificates/elastic-certificates.p12
    networks:
      - falling_wind
  kibana:
    image: docker.elastic.co/kibana/kibana:7.6.1
    container_name: kibana_7_61
    ports:
      - "5601:5601"
    volumes:
      - ./kibana.yml:/usr/share/kibana/config/kibana.yml
    networks:
      - falling_wind
    depends_on:
      - es01
  logstash:
    image: docker.elastic.co/logstash/logstash:7.6.1
    container_name: logstash_7_61
    ports:
      - "5044:5044"
    volumes:
      - ./logstash.yml:/usr/share/logstash/config/logstash.yml
      - ./logstash-pipeline.conf:/usr/share/logstash/conf.d/logstash-pipeline.conf
    networks:
      - falling_wind
  filebeat:
    container_name: filebeat_7_61
    build:
      context: .
      dockerfile: Dockerfile
    volumes:
      - /var/logs:/usr/share/filebeat/logs
    networks:
      - falling_wind

volumes:
  data01:
    driver: local
  data02:
    driver: local
  data03:
    driver: local

networks:
  falling_wind:
    driver: bridge

Enjoy your code!