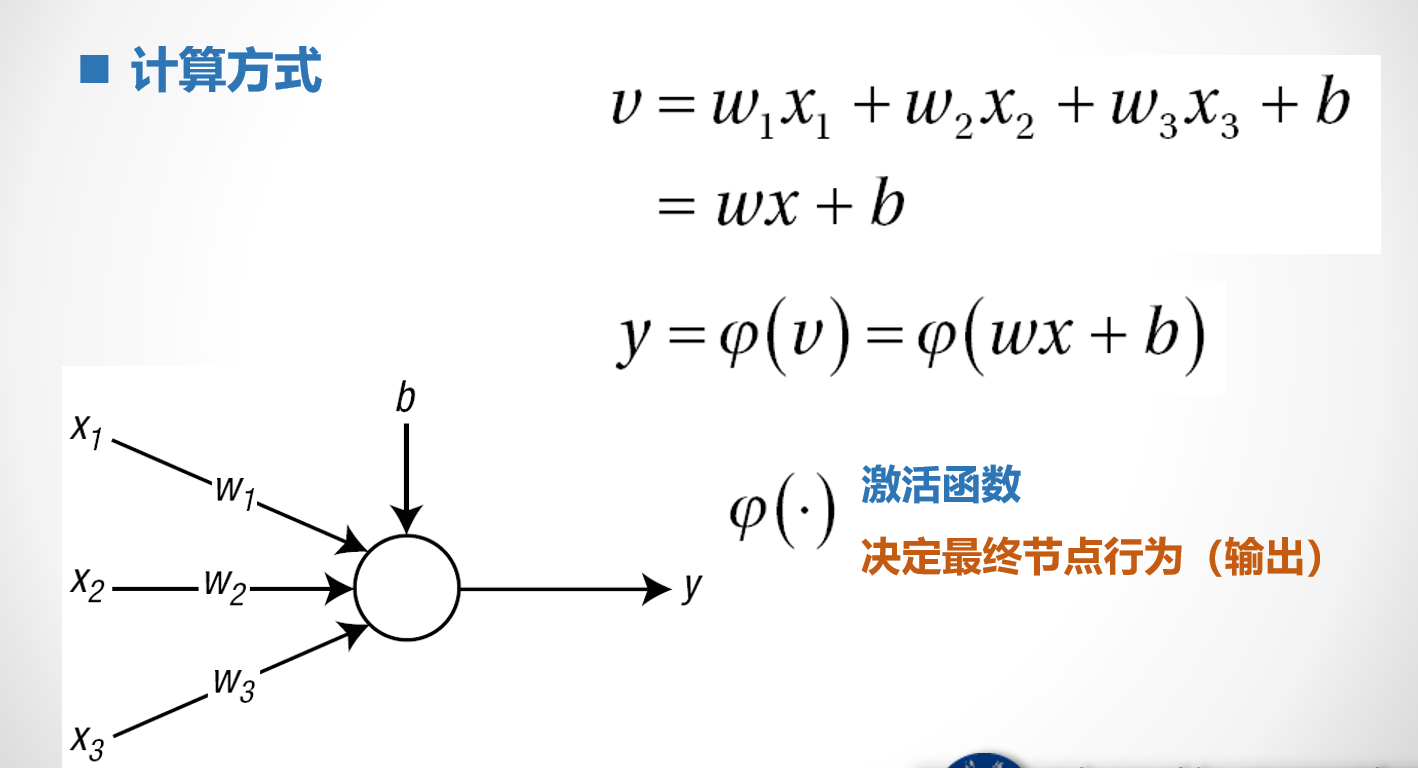

The basic elements of a neural network and its computation flow:
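As a worked form of this flow, the computation at a single node can be written as below. This is a sketch inferred from the code later in these notes (`v = W*x; y = sigmoid(v)`). The symbols $w_j$, $x_j$, $v$, $y$ and $\varphi$ are notation assumed here, and the bias is taken to be folded in as a constant input of 1, as in the third column of the sample matrix `X`.

$$
v = \sum_{j} w_j x_j, \qquad y = \varphi(v)
$$

Here $v$ is the weighted sum of the inputs and $\varphi$ is the activation function applied to it.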

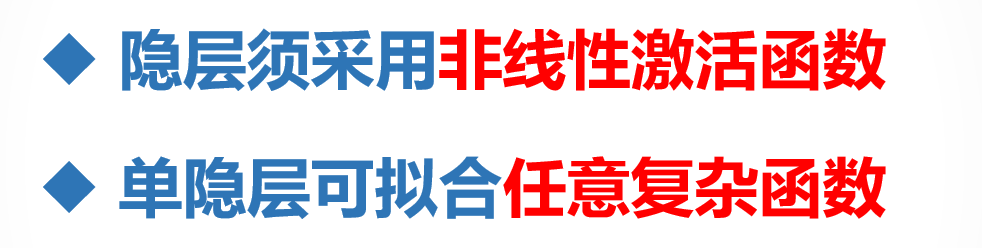

Basic principles of network construction:

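Basic steps of supervised learning for a neural network:

- Initialize the weights.

- Take one sample, feed it into the network, and compare the network's output with the correct output to get the error.

- Adjust the weights to reduce that error. The method of adjustment is what distinguishes one learning rule from another.

- Repeat the second and third steps until every sample has been processed or the error falls within an acceptable range.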
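The four steps above can be sketched as a single training loop. This is only an illustration under stated assumptions: `TrainUntilTolerance`, `tol` and `maxEpochs` are hypothetical names that do not appear in these notes, and the weight adjustment in step 3 borrows the sigmoid-based Delta rule used by the code later on.

```matlab
% Hypothetical helper (not part of the original notes): trains a single
% sigmoid node with per-sample updates and stops early once the sum of
% squared errors over one pass through the samples drops below tol.
function [W, epochs] = TrainUntilTolerance(X, D, alpha, tol, maxEpochs)
    W = 2*rand(1, size(X, 2)) - 1;           % step 1: random weights in [-1, 1]
    for epochs = 1:maxEpochs
        sse = 0;                             % squared error accumulated this pass
        for i = 1:size(X, 1)
            x = X(i, :)';                    % step 2: take one sample
            y = 1 / (1 + exp(-W*x));         %         network output
            e = D(i) - y;                    %         error against the correct output
            W = W + alpha * y*(1-y)*e * x';  % step 3: adjust the weights
            sse = sse + e^2;
        end
        if sse < tol                         % step 4: stop once the error is tolerable
            break;
        end
    end
end
```

A possible call, using the sample data from the test function in these notes (the tolerance and epoch limit are arbitrary choices):

```matlab
X = [0 0 1; 0 1 1; 1 0 1; 1 1 1];
D = [0 0 1 1];
[W, epochs] = TrainUntilTolerance(X, D, 0.9, 0.01, 10000);
```

The epoch limit guards against looping forever when the tolerance is never reached.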

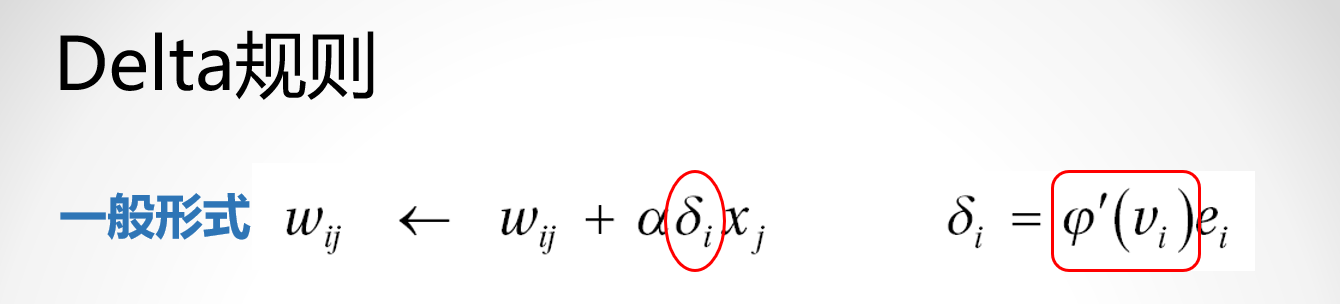

Delta rule (a rule for updating the weights):
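In its commonly stated form for a single-layer network (an assumption here, since these notes do not spell the formula out), the Delta rule adjusts each weight in proportion to the output error and to the input that fed it:

$$
w_{ij} \leftarrow w_{ij} + \alpha\, e_i\, x_j, \qquad e_i = d_i - y_i
$$

Here $\alpha$ is the learning rate (`alpha` in the code), $x_j$ is the $j$-th input, and $d_i$ and $y_i$ are the correct and actual outputs of node $i$. The index notation is assumed for illustration.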

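Delta rule with the sigmoid function: the advantage is that it lends itself to classification problems. This is a matter of choosing the activation function.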
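With a sigmoid activation the update gains a derivative factor. The form below is read off the code in these notes (`dy = y*(1-y)*e; dW = alpha*dy*x'`); the symbols $\delta$, $\varphi$ and $w_j$ are assumed notation.

$$
\varphi(v) = \frac{1}{1 + \exp(-v)}, \qquad \varphi'(v) = \varphi(v)\bigl(1 - \varphi(v)\bigr)
$$

$$
\delta = \varphi'(v)\, e = y(1 - y)\, e, \qquad w_j \leftarrow w_j + \alpha\, \delta\, x_j
$$

Because the sigmoid output always lies between 0 and 1, it can be compared directly with a 0/1 class label, which is what makes this variant convenient for classification.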

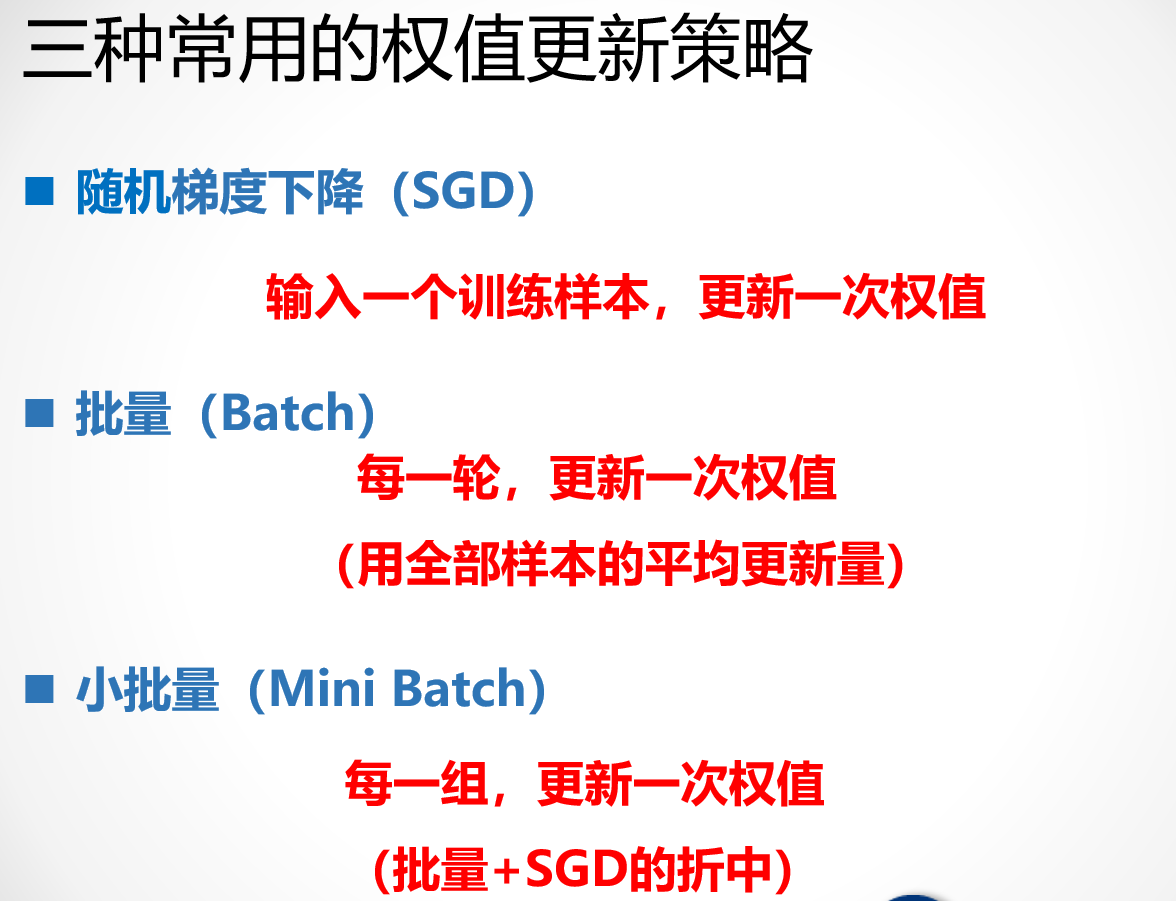

Several common weight-update strategies:
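The three strategies demonstrated by the code in these notes differ only in when the per-sample updates are applied. Writing $\Delta w^{(k)} = \alpha\, \delta^{(k)} x^{(k)}$ for the update computed from sample $k$ (notation assumed here), with $N$ samples and mini-batches of size $M$:

$$
\begin{aligned}
\text{SGD:} \quad & w \leftarrow w + \Delta w^{(k)} && \text{after every sample } k \\
\text{Batch:} \quad & w \leftarrow w + \frac{1}{N} \sum_{k=1}^{N} \Delta w^{(k)} && \text{once per epoch} \\
\text{Mini-batch:} \quad & w \leftarrow w + \frac{1}{M} \sum_{k \in B} \Delta w^{(k)} && \text{once per mini-batch } B,\ |B| = M
\end{aligned}
$$

With $N = 4$ and $M = 2$ as in the code, one epoch makes four, one, and two weight updates respectively.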

Code demonstrating the three update strategies (SGD, batch, mini-batch):

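```matlab
function W = DeltaSGD(W,X,D)
    alpha = 0.9;              % learning rate
    N = 4;                    % number of training samples
    for i = 1:N
        x = X(i,:)';          % i-th sample as a column vector
        d = D(i);             % correct output for this sample
        v = W*x;              % weighted sum
        y = sigmoid(v);       % node output
        e = d - y;            % output error
        dy = y*(1-y)*e;       % delta: sigmoid derivative times error
        dW = alpha*dy*x';     % weight update from this sample
        W = dW + W;           % apply the update immediately
    end
end
```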

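```matlab
function W = DeltaBatch(W,X,D)
    alpha = 0.9;                  % learning rate
    N = 4;                        % number of training samples
    dWSum = zeros(1,3);           % accumulated update over the whole batch
    for i = 1:N
        x = X(i,:)';
        d = D(i);
        v = W*x;
        y = sigmoid(v);
        e = d - y;
        dy = y*(1-y)*e;
        dW = alpha*dy*x';
        dWSum = dWSum + dW;       % accumulate; W stays fixed during the pass
    end
    dWavg = dWSum/N;              % average update over all samples
    W = W + dWavg;                % single update per epoch
end
```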

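```matlab
function W = DeltaMiniBatch(W,X,D)
    alpha = 0.9;                  % learning rate
    N = 4;                        % number of training samples
    M = 2;                        % mini-batch size
    for j = 1:(N/M)
        dWSum = zeros(1,3);       % accumulated update for this mini-batch
        for q = 1:M
            i = (j-1)*M + q;      % batch j covers samples (j-1)*M+1 .. j*M
            x = X(i,:)';
            d = D(i);
            v = W*x;
            y = sigmoid(v);
            e = d - y;
            dy = y*(1-y)*e;
            dW = alpha*dy*x';
            dWSum = dWSum + dW;
        end
        dWavg = dWSum/M;          % average update over the mini-batch
        W = W + dWavg;            % one update per mini-batch
    end
end
```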

```matlab
function y = sigmoid(x)
    y = 1/(1+exp(-x));
end
```

```matlab
function D = DeltaTest()
    close all;                          % close all open figure windows

    X = [0 0 1; 0 1 1; 1 0 1; 1 1 1];   % input samples
    D = [0 0 1 1];                      % correct output for each sample

    % Sum of squared errors per epoch, one vector per method
    E1 = zeros(1000,1);
    E2 = zeros(1000,1);
    E3 = zeros(1000,1);

    % Start all three methods from the same random weights
    W1 = 2*rand(1,3) - 1;
    W2 = W1;
    W3 = W1;

    % Train each method for 1000 epochs, recording the error after every epoch
    for epoch = 1:1000
        % One epoch of training for each method
        W1 = DeltaSGD(W1,X,D);
        W2 = DeltaBatch(W2,X,D);
        W3 = DeltaMiniBatch(W3,X,D);

        % Sum of squared errors after this epoch
        N = 4;
        for i = 1:N
            x = X(i,:)';
            d = D(i);

            % E1: SGD
            v1 = W1*x;
            y1 = sigmoid(v1);
            E1(epoch) = E1(epoch) + (d - y1)^2;

            % E2: batch
            v2 = W2*x;
            y2 = sigmoid(v2);
            E2(epoch) = E2(epoch) + (d - y2)^2;

            % E3: mini-batch
            v3 = W3*x;
            y3 = sigmoid(v3);
            E3(epoch) = E3(epoch) + (d - y3)^2;
        end
    end

    % Print the output of the SGD-trained weights for each sample
    for i = 1:4
        x = X(i,:)';
        v = W1*x;
        y = sigmoid(v)                  % no semicolon, so the value is displayed
    end

    % Plot the error curves of the three strategies for comparison
    plot(E1,'r'); hold on
    plot(E2,'b:');
    plot(E3,'k-');
    xlabel('Epoch');
    ylabel('Sum of Squares of Training Error');
    legend('SGD','Batch','MiniBatch');
end
```