Install OpenCV and the other required packages (pygame, NumPy, TensorFlow) on Ubuntu, then run the following code:

```python
#!/usr/bin/python
# -*- coding: utf-8 -*-

import sys
import random
from collections import deque

import numpy as np
import pygame
from pygame.locals import *
import cv2  # http://blog.topspeedsnail.com/archives/4755
# The listing uses the TensorFlow 1.x graph API (placeholders, sessions);
# on TensorFlow 2.x it needs the compat.v1 module with v2 behavior disabled.
import tensorflow.compat.v1 as tf  # http://blog.topspeedsnail.com/archives/10116
tf.disable_v2_behavior()

BLACK = (0, 0, 0)
WHITE = (255, 255, 255)

SCREEN_SIZE = [320, 400]
BAR_SIZE = [50, 5]
BALL_SIZE = [15, 15]

# Outputs of the neural network (one-hot encoded actions)
MOVE_STAY = [1, 0, 0]
MOVE_LEFT = [0, 1, 0]
MOVE_RIGHT = [0, 0, 1]


class Game(object):
    def __init__(self):
        pygame.init()
        self.clock = pygame.time.Clock()
        self.screen = pygame.display.set_mode(SCREEN_SIZE)
        pygame.display.set_caption('Simple Game')

        # Integer division keeps the Rect coordinates integral
        self.ball_pos_x = SCREEN_SIZE[0] // 2 - BALL_SIZE[0] // 2
        self.ball_pos_y = SCREEN_SIZE[1] // 2 - BALL_SIZE[1] // 2

        self.ball_dir_x = -1  # -1 = left, 1 = right
        self.ball_dir_y = -1  # -1 = up, 1 = down
        self.ball_pos = pygame.Rect(self.ball_pos_x, self.ball_pos_y, BALL_SIZE[0], BALL_SIZE[1])

        self.bar_pos_x = SCREEN_SIZE[0] // 2 - BAR_SIZE[0] // 2
        self.bar_pos = pygame.Rect(self.bar_pos_x, SCREEN_SIZE[1] - BAR_SIZE[1], BAR_SIZE[0], BAR_SIZE[1])

    # action is one of MOVE_STAY, MOVE_LEFT, MOVE_RIGHT.
    # The AI moves the bar left or right; step() returns the reward and the screen pixels
    # (pixels -> reward -> reinforce moving the bar toward higher reward).
    def step(self, action):
        if action == MOVE_LEFT:
            self.bar_pos_x = self.bar_pos_x - 2
        elif action == MOVE_RIGHT:
            self.bar_pos_x = self.bar_pos_x + 2
        # Keep the bar inside the screen
        if self.bar_pos_x < 0:
            self.bar_pos_x = 0
        if self.bar_pos_x > SCREEN_SIZE[0] - BAR_SIZE[0]:
            self.bar_pos_x = SCREEN_SIZE[0] - BAR_SIZE[0]

        self.screen.fill(BLACK)
        self.bar_pos.left = self.bar_pos_x
        pygame.draw.rect(self.screen, WHITE, self.bar_pos)

        self.ball_pos.left += self.ball_dir_x * 2
        self.ball_pos.bottom += self.ball_dir_y * 3
        pygame.draw.rect(self.screen, WHITE, self.ball_pos)

        # Bounce off the top edge, the bar line and the side walls
        if self.ball_pos.top <= 0 or self.ball_pos.bottom >= (SCREEN_SIZE[1] - BAR_SIZE[1] + 1):
            self.ball_dir_y = self.ball_dir_y * -1
        if self.ball_pos.left <= 0 or self.ball_pos.right >= SCREEN_SIZE[0]:
            self.ball_dir_x = self.ball_dir_x * -1

        reward = 0
        if self.bar_pos.top <= self.ball_pos.bottom and (self.bar_pos.left < self.ball_pos.right and self.bar_pos.right > self.ball_pos.left):
            reward = 1   # Reward for hitting the ball
        elif self.bar_pos.top <= self.ball_pos.bottom and (self.bar_pos.left > self.ball_pos.right or self.bar_pos.right < self.ball_pos.left):
            reward = -1  # Penalty for missing the ball

        # Grab the pixels of the game screen
        screen_image = pygame.surfarray.array3d(pygame.display.get_surface())
        pygame.display.update()
        # Return the reward and the screen pixels
        return reward, screen_image


# Discount factor (gamma) for future rewards in the Q-learning target
GAMMA = 0.99
# Epsilon-greedy exploration rate, annealed linearly from INITIAL to FINAL
INITIAL_EPSILON = 1.0
FINAL_EPSILON = 0.05
# Number of steps over which epsilon is annealed
EXPLORE = 500000
# Number of steps spent only filling the replay memory before training starts
OBSERVE = 50000
# Size of the replay memory of past experiences
REPLAY_MEMORY = 500000
# Minibatch size
BATCH = 100

output = 3  # Output neurons, one per action: MOVE_STAY [1, 0, 0], MOVE_LEFT [0, 1, 0], MOVE_RIGHT [0, 0, 1]
input_image = tf.placeholder("float", [None, 80, 100, 4])  # Stack of the 4 most recent preprocessed frames


# CNN (convolutional neural network); see http://blog.topspeedsnail.com/archives/10451
def convolutional_neural_network(input_image):
    # Small random weights break symmetry; if every weight and bias starts at zero,
    # every ReLU outputs 0 and only the output bias ever receives a gradient.
    def weight(shape):
        return tf.Variable(tf.truncated_normal(shape, stddev=0.01))

    def bias(shape):
        return tf.Variable(tf.constant(0.01, shape=shape))

    weights = {'w_conv1': weight([8, 8, 4, 32]),
               'w_conv2': weight([4, 4, 32, 64]),
               'w_conv3': weight([3, 3, 64, 64]),
               'w_fc4': weight([3456, 784]),
               'w_out': weight([784, output])}

    biases = {'b_conv1': bias([32]),
              'b_conv2': bias([64]),
              'b_conv3': bias([64]),
              'b_fc4': bias([784]),
              'b_out': bias([output])}

    conv1 = tf.nn.relu(tf.nn.conv2d(input_image, weights['w_conv1'], strides=[1, 4, 4, 1], padding="VALID") + biases['b_conv1'])
    conv2 = tf.nn.relu(tf.nn.conv2d(conv1, weights['w_conv2'], strides=[1, 2, 2, 1], padding="VALID") + biases['b_conv2'])
    conv3 = tf.nn.relu(tf.nn.conv2d(conv2, weights['w_conv3'], strides=[1, 1, 1, 1], padding="VALID") + biases['b_conv3'])
    # 80x100 input -> 19x24 -> 8x11 -> 6x9 feature maps; 6 * 9 * 64 = 3456
    conv3_flat = tf.reshape(conv3, [-1, 3456])
    fc4 = tf.nn.relu(tf.matmul(conv3_flat, weights['w_fc4']) + biases['b_fc4'])

    output_layer = tf.matmul(fc4, weights['w_out']) + biases['b_out']
    return output_layer


def preprocess(image):
    # Downscale to 80x100, convert to grayscale, then binarize (pixels brighter than 1 become 255).
    # pygame.surfarray returns a (width, height, 3) RGB array, so the 80x100 result is the
    # screen transposed; that is fine as long as every frame is processed the same way.
    image = cv2.cvtColor(cv2.resize(image, (100, 80)), cv2.COLOR_RGB2GRAY)
    _, image = cv2.threshold(image, 1, 255, cv2.THRESH_BINARY)
    return image


# Intro to deep reinforcement learning: https://www.nervanasys.com/demystifying-deep-reinforcement-learning/
# Train the neural network
def train_neural_network(input_image):
    predict_action = convolutional_neural_network(input_image)

    argmax = tf.placeholder("float", [None, output])  # One-hot encoding of the action taken
    gt = tf.placeholder("float", [None])               # Q-learning target values

    # Predicted Q-value of the action that was actually taken
    q_action = tf.reduce_sum(tf.multiply(predict_action, argmax), axis=1)
    cost = tf.reduce_mean(tf.square(q_action - gt))
    optimizer = tf.train.AdamOptimizer(1e-6).minimize(cost)

    game = Game()
    D = deque(maxlen=REPLAY_MEMORY)  # Replay memory; the oldest experience is dropped when full

    _, image = game.step(MOVE_STAY)
    image = preprocess(image)
    input_image_data = np.stack((image, image, image, image), axis=2)

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())

        saver = tf.train.Saver()

        n = 0
        epsilon = INITIAL_EPSILON
        while True:
            action_t = predict_action.eval(feed_dict={input_image: [input_image_data]})[0]

            # Epsilon-greedy: explore with probability epsilon, otherwise take the best predicted action
            argmax_t = np.zeros([output], dtype=int)
            if random.random() < epsilon:
                maxIndex = random.randrange(output)
            else:
                maxIndex = np.argmax(action_t)
            argmax_t[maxIndex] = 1
            # Anneal epsilon linearly toward FINAL_EPSILON
            if epsilon > FINAL_EPSILON:
                epsilon -= (INITIAL_EPSILON - FINAL_EPSILON) / EXPLORE

            # Process window events; without an event loop the window stays white on macOS
            for event in pygame.event.get():
                if event.type == QUIT:
                    pygame.quit()
                    sys.exit()
            reward, image = game.step(list(argmax_t))

            image = np.reshape(preprocess(image), (80, 100, 1))
            # The newest frame goes first; the oldest of the previous 4 frames is dropped
            input_image_data1 = np.append(image, input_image_data[:, :, 0:3], axis=2)

            D.append((input_image_data, argmax_t, reward, input_image_data1))

            if n > OBSERVE:
                # Train on a random minibatch of past experiences
                minibatch = random.sample(D, BATCH)
                input_image_data_batch = [d[0] for d in minibatch]
                argmax_batch = [d[1] for d in minibatch]
                reward_batch = [d[2] for d in minibatch]
                input_image_data1_batch = [d[3] for d in minibatch]

                gt_batch = []

                out_batch = predict_action.eval(feed_dict={input_image: input_image_data1_batch})

                # Q-learning target: r + gamma * max_a' Q(s', a')
                for i in range(0, len(minibatch)):
                    gt_batch.append(reward_batch[i] + GAMMA * np.max(out_batch[i]))

                optimizer.run(feed_dict={gt: gt_batch, argmax: argmax_batch, input_image: input_image_data_batch})

            input_image_data = input_image_data1
            n = n + 1

            if n % 10000 == 0:
                saver.save(sess, 'game.cpk', global_step=n)  # Save the model

            print(n, "epsilon:", epsilon, " ", "action:", maxIndex, " ", "reward:", reward)


train_neural_network(input_image)
```
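The hard-coded 3456 in `tf.reshape(conv3, [-1, 3456])` (and the first dimension of `w_fc4`) follows from the 80×100 input and the kernel sizes and strides of the three convolutions. The sketch below recomputes it with the usual output-size formula for `VALID` (unpadded) convolutions, out = floor((in - kernel) / stride) + 1; the helper names `conv_output_size` and `trace_shapes` are hypothetical and not part of the listing.

```python
def conv_output_size(size, kernel, stride):
    """Length of one spatial dimension after a 'VALID' (unpadded) convolution."""
    return (size - kernel) // stride + 1


def trace_shapes(height=80, width=100):
    """Return the (height, width, channels) shape after each convolutional layer."""
    # (kernel, stride, output channels) of conv1, conv2, conv3 in the listing
    layers = [(8, 4, 32), (4, 2, 64), (3, 1, 64)]
    shapes = []
    for kernel, stride, channels in layers:
        height = conv_output_size(height, kernel, stride)
        width = conv_output_size(width, kernel, stride)
        shapes.append((height, width, channels))
    return shapes


shapes = trace_shapes()
h, w, c = shapes[-1]
print(shapes)     # [(19, 24, 32), (8, 11, 64), (6, 9, 64)]
print(h * w * c)  # 3456
```

If the input resolution or any kernel or stride is changed, both the reshape size and the first dimension of `w_fc4` have to be updated to the new product.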

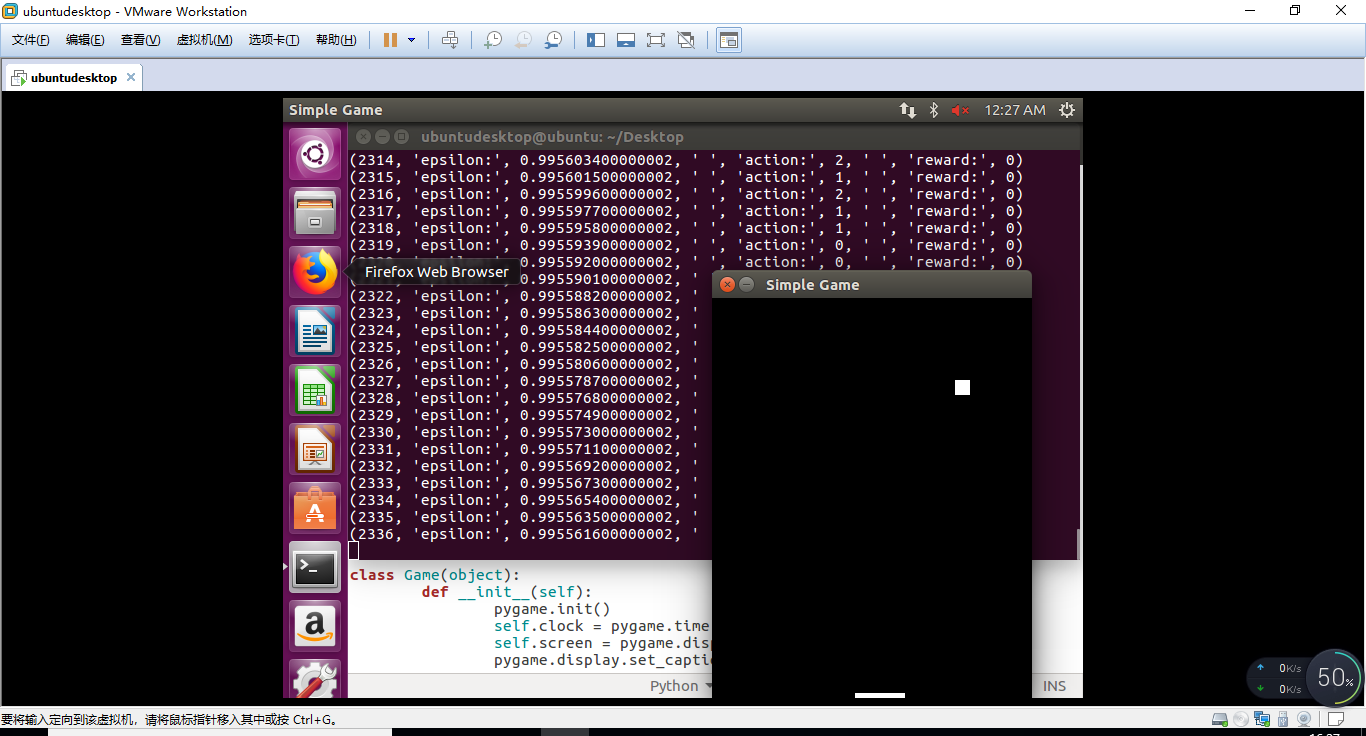
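Two decisions are made on every step of the training loop: the action is picked epsilon-greedily, and the regression target for the chosen action's Q-value is built as r + γ·max Q(s', a'). The sketch below isolates both from TensorFlow and pygame so they can be checked on their own. It assumes the hyperparameters of the listing (epsilon annealed from 1.0 to 0.05 over 500000 steps, discount factor 0.99); the function names are hypothetical. It also clamps epsilon at its final value and vectorizes the per-sample target loop with NumPy.

```python
import random

import numpy as np

# Assumed to match the constants in the listing
INITIAL_EPSILON = 1.0
FINAL_EPSILON = 0.05
EXPLORE = 500000
GAMMA = 0.99  # discount factor for future rewards


def anneal(epsilon):
    """Decrease epsilon by one linear step, clamped at FINAL_EPSILON."""
    step = (INITIAL_EPSILON - FINAL_EPSILON) / EXPLORE
    return max(FINAL_EPSILON, epsilon - step)


def choose_action(q_values, epsilon, rng=random):
    """Epsilon-greedy choice: a random action with probability epsilon,
    otherwise the action with the highest predicted Q-value.
    Returns the action index and its one-hot encoding."""
    if rng.random() < epsilon:
        index = rng.randrange(len(q_values))
    else:
        index = int(np.argmax(q_values))
    one_hot = np.zeros(len(q_values), dtype=int)
    one_hot[index] = 1
    return index, one_hot


def q_targets(rewards, next_q_values, gamma=GAMMA):
    """Q-learning targets r + gamma * max_a' Q(s', a') for a whole minibatch.
    next_q_values has shape (batch, n_actions)."""
    rewards = np.asarray(rewards, dtype=float)
    return rewards + gamma * np.max(np.asarray(next_q_values, dtype=float), axis=1)


# Example: a purely greedy choice, and targets for a minibatch of two transitions
index, one_hot = choose_action([0.1, 0.7, 0.2], epsilon=0.0)
targets = q_targets([1, -1], [[0.5, 2.0, 0.0], [0.0, 0.0, 1.0]])
print(index, one_hot, targets)
```

Passing a seeded `random.Random` instance as `rng` makes the exploration branch reproducible in tests.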

The output of a run is shown below: