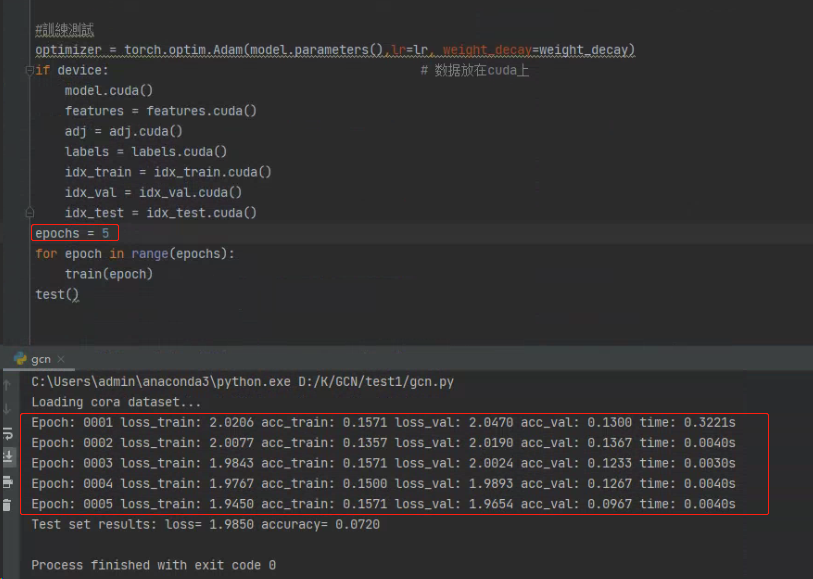

```python
# Imports
import math
import time

import numpy as np
import scipy.sparse as sp
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.nn.modules.module import Module
from torch.nn.parameter import Parameter

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
hidden = 16         # number of hidden units
dropout = 0.5
lr = 0.01
weight_decay = 5e-4
fastmode = False    # if True, skip the separate eval-mode pass used for validation

# Load data
def encode_onehot(labels):
    classes = sorted(set(labels))  # sort so the class-to-index mapping is reproducible
    classes_dict = {c: np.identity(len(classes))[i, :] for i, c in enumerate(classes)}
    labels_onehot = np.array(list(map(classes_dict.get, labels)), dtype=np.int32)
    return labels_onehot

def normalize(mx):
    """Row-normalize a sparse matrix (divide each row by its sum)."""
    rowsum = np.array(mx.sum(1))
    r_inv = np.power(rowsum, -1).flatten()
    r_inv[np.isinf(r_inv)] = 0.
    r_mat_inv = sp.diags(r_inv)
    mx = r_mat_inv.dot(mx)
    return mx

def sparse_mx_to_torch_sparse_tensor(sparse_mx):
    """Convert a scipy sparse matrix to a torch sparse COO tensor."""
    sparse_mx = sparse_mx.tocoo().astype(np.float32)
    indices = torch.from_numpy(
        np.vstack((sparse_mx.row, sparse_mx.col)).astype(np.int64))
    values = torch.from_numpy(sparse_mx.data)
    shape = torch.Size(sparse_mx.shape)
    # torch.sparse.FloatTensor is deprecated; sparse_coo_tensor is the current constructor
    return torch.sparse_coo_tensor(indices, values, shape)

def load_data(path="./", dataset="cora"):
    """Load citation network dataset (cora only for now)"""
    print('Loading {} dataset...'.format(dataset))
    # Each line of .content: <paper_id> <word features ...> <class_label>
    idx_features_labels = np.genfromtxt("{}{}.content".format(path, dataset),
                                        dtype=np.dtype(str))
    features = sp.csr_matrix(idx_features_labels[:, 1:-1], dtype=np.float32)  # node features
    labels = encode_onehot(idx_features_labels[:, -1])  # one-hot encoded labels
    idx = np.array(idx_features_labels[:, 0], dtype=np.int32)
    idx_map = {j: i for i, j in enumerate(idx)}  # paper ID -> row index
    edges_unordered = np.genfromtxt("{}{}.cites".format(path, dataset),  # edge list
                                    dtype=np.int32)
    edges = np.array(list(map(idx_map.get, edges_unordered.flatten())),
                     dtype=np.int32).reshape(edges_unordered.shape)
    adj = sp.coo_matrix((np.ones(edges.shape[0]), (edges[:, 0], edges[:, 1])),
                        shape=(labels.shape[0], labels.shape[0]),
                        dtype=np.float32)
    # Symmetrize: turn the directed citation graph into an undirected one
    adj = adj + adj.T.multiply(adj.T > adj) - adj.multiply(adj.T > adj)
    features = normalize(features)  # row-normalize features
    adj = normalize(adj + sp.eye(adj.shape[0]))  # add self-loops, then row-normalize

    idx_train = range(140)        # training set
    idx_val = range(200, 500)     # validation set
    idx_test = range(500, 1500)   # test set

    features = torch.FloatTensor(np.array(features.todense()))
    labels = torch.LongTensor(np.where(labels)[1])  # one-hot -> class index
    adj = sparse_mx_to_torch_sparse_tensor(adj)  # torch sparse adjacency matrix

    idx_train = torch.LongTensor(idx_train)
    idx_val = torch.LongTensor(idx_val)
    idx_test = torch.LongTensor(idx_test)

    return adj, features, labels, idx_train, idx_val, idx_test

adj, features, labels, idx_train, idx_val, idx_test = load_data()

# Define the graph convolutional network model
class GraphConvolution(Module):
    def __init__(self, in_features, out_features, bias=True):
        super(GraphConvolution, self).__init__()
        self.in_features = in_features
        self.out_features = out_features
        self.weight = Parameter(torch.FloatTensor(in_features, out_features))
        if bias:
            self.bias = Parameter(torch.FloatTensor(out_features))
        else:
            self.register_parameter('bias', None)
        self.reset_parameters()

    def reset_parameters(self):
        stdv = 1. / math.sqrt(self.weight.size(1))
        self.weight.data.uniform_(-stdv, stdv)
        if self.bias is not None:
            self.bias.data.uniform_(-stdv, stdv)

    def forward(self, input, adj):  # simplified form, as in Section 3.2
        support = torch.mm(input, self.weight)  # (2708, 16) = (2708, 1433) X (1433, 16)
        output = torch.spmm(adj, support)       # (2708, 16) = (2708, 2708) X (2708, 16)
        if self.bias is not None:
            return output + self.bias  # add bias (2708, 16)
        else:
            return output  # (2708, 16)

    def __repr__(self):
        return self.__class__.__name__ + ' (' \
            + str(self.in_features) + ' -> ' \
            + str(self.out_features) + ')'

class GCN(nn.Module):  # two-layer GCN
    def __init__(self, nfeat, nhid, nclass, dropout):
        super(GCN, self).__init__()
        self.gc1 = GraphConvolution(nfeat, nhid)
        self.gc2 = GraphConvolution(nhid, nclass)
        self.dropout = dropout

    def forward(self, x, adj):
        x = F.relu(self.gc1(x, adj))
        x = F.dropout(x, self.dropout, training=self.training)
        x = self.gc2(x, adj)
        return F.log_softmax(x, dim=1)  # log-softmax over classes for each node

model = GCN(nfeat=features.shape[1], nhid=hidden,
            nclass=labels.max().item() + 1, dropout=dropout)

# Training function
def accuracy(output, labels):
    preds = output.max(1)[1].type_as(labels)
    correct = preds.eq(labels).double()
    correct = correct.sum()
    return correct / len(labels)

def train(epoch):
    t = time.time()
    model.train()
    optimizer.zero_grad()  # clear gradients
    output = model(features, adj)
    loss_train = F.nll_loss(output[idx_train], labels[idx_train])  # loss function
    acc_train = accuracy(output[idx_train], labels[idx_train])  # training accuracy
    loss_train.backward()  # backpropagation
    optimizer.step()  # update parameters

    if not fastmode:
        # Re-run in eval mode so dropout is disabled for validation
        model.eval()
        output = model(features, adj)

    loss_val = F.nll_loss(output[idx_val], labels[idx_val])
    acc_val = accuracy(output[idx_val], labels[idx_val])
    print('Epoch: {:04d}'.format(epoch + 1),
          'loss_train: {:.4f}'.format(loss_train.item()),
          'acc_train: {:.4f}'.format(acc_train.item()),
          'loss_val: {:.4f}'.format(loss_val.item()),
          'acc_val: {:.4f}'.format(acc_val.item()),
          'time: {:.4f}s'.format(time.time() - t))

# Test function
def test():
    model.eval()
    output = model(features, adj)  # features: (2708, 1433), adj: (2708, 2708)
    loss_test = F.nll_loss(output[idx_test], labels[idx_test])
    acc_test = accuracy(output[idx_test], labels[idx_test])
    print("Test set results:",
          "loss= {:.4f}".format(loss_test.item()),
          "accuracy= {:.4f}".format(acc_test.item()))

# Train and test
# Move the model and data to the selected device (GPU if available, else CPU)
model = model.to(device)
features = features.to(device)
adj = adj.to(device)
labels = labels.to(device)
idx_train = idx_train.to(device)
idx_val = idx_val.to(device)
idx_test = idx_test.to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=lr, weight_decay=weight_decay)

epochs = 500
for epoch in range(epochs):
    train(epoch)
test()
```
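
The preprocessing in `load_data` symmetrizes the citation graph, adds self-loops, and row-normalizes the result, i.e. A_hat = D^-1 (A + I). This is a simplification: the GCN paper by Kipf and Welling uses the symmetric form D^-1/2 (A + I) D^-1/2. A minimal sketch of the same three steps on a hypothetical 3-node graph (the toy edges are made up for illustration):

```python
import numpy as np
import scipy.sparse as sp

def normalize(mx):
    """Row-normalize a sparse matrix: each row is divided by its sum (D^-1 * M)."""
    rowsum = np.array(mx.sum(1))
    r_inv = np.power(rowsum, -1.0).flatten()
    r_inv[np.isinf(r_inv)] = 0.0  # rows that sum to zero stay zero
    return sp.diags(r_inv).dot(mx)

# Hypothetical toy graph: directed edges 0->1 and 1->2 (a 3-node path).
edges = np.array([[0, 1], [1, 2]])
n = 3
adj = sp.coo_matrix((np.ones(len(edges)), (edges[:, 0], edges[:, 1])),
                    shape=(n, n), dtype=np.float32)

# Same symmetrization as load_data: keep the larger of A[i, j] and A[j, i].
adj = adj + adj.T.multiply(adj.T > adj) - adj.multiply(adj.T > adj)

# Add self-loops, then row-normalize: A_hat = D^-1 (A + I).
adj_hat = normalize(adj + sp.eye(n))
print(adj_hat.toarray())  # each row sums to 1; the middle row is [1/3, 1/3, 1/3]
```

Because every row of A_hat sums to 1, multiplying it by the feature matrix averages each node's features with those of its neighbours.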

Dataset files: https://linqs.soe.ucsc.edu/data

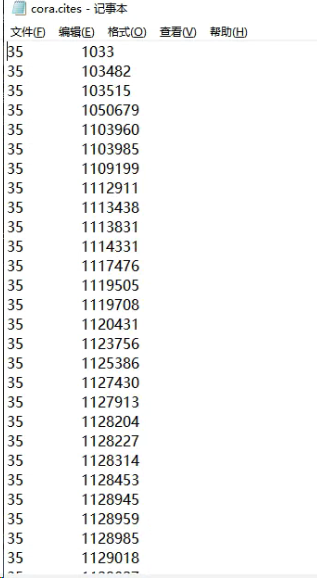

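The dataset consists of two files:

`cora.cites`: the edge information (citation links, one pair of paper IDs per line)

`cora.content`: the node information (one paper per line: paper ID, word features, class label)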
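
To make the two file layouts concrete, here is a minimal sketch that parses a tiny excerpt in each format with the same `np.genfromtxt` calls and ID-to-row mapping used in `load_data`. The paper IDs, features, and class names below are hypothetical placeholders, not real Cora rows.

```python
import io
import numpy as np

# Hypothetical .content excerpt: <paper_id> <binary word features ...> <class_label>
content_text = "101 0 1 0 ClassA\n202 1 0 1 ClassB\n303 0 0 1 ClassA\n"
# Hypothetical .cites excerpt: two paper IDs per line
cites_text = "101 202\n303 101\n"

rows = np.genfromtxt(io.StringIO(content_text), dtype=np.dtype(str))
paper_ids = rows[:, 0].astype(np.int32)       # first column: paper ID
features = rows[:, 1:-1].astype(np.float32)   # middle columns: word features
labels = rows[:, -1]                          # last column: class label

edges = np.genfromtxt(io.StringIO(cites_text), dtype=np.int32).reshape(-1, 2)

# Map paper IDs to row indices, as idx_map does in load_data
id_to_row = {pid: i for i, pid in enumerate(paper_ids)}
edge_rows = np.array(list(map(id_to_row.get, edges.flatten())),
                     dtype=np.int32).reshape(edges.shape)
print(edge_rows.tolist())
```

The edge list ends up expressed in row indices, which is what `sp.coo_matrix` needs to build the adjacency matrix.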

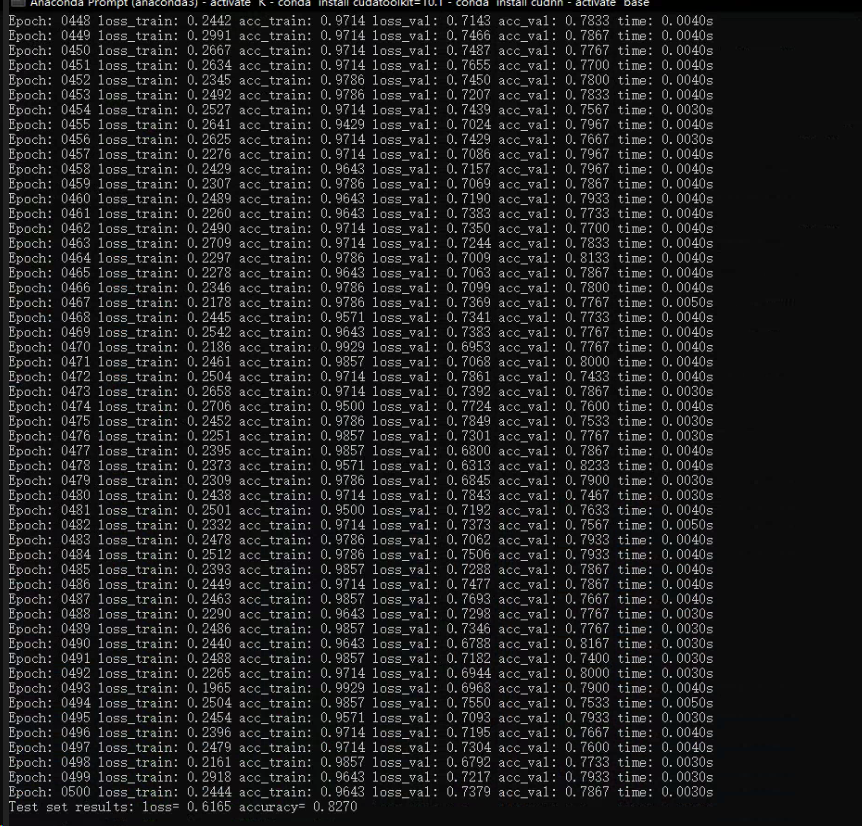

Results of the first run of GCN on the Cora dataset:

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

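Each epoch prints six values: the epoch number (`Epoch`), training loss (`loss_train`), training accuracy (`acc_train`), validation loss (`loss_val`), validation accuracy (`acc_val`), and the time taken by that epoch (`time`).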
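
The loss values come from `F.nll_loss` applied to the log-softmax output of the model, and the accuracy values from the `accuracy` helper (fraction of nodes whose argmax class matches the label). A minimal sketch on made-up scores for four nodes and three classes; the numbers are hypothetical:

```python
import torch
import torch.nn.functional as F

def accuracy(output, labels):
    # Predicted class = index of the largest score in each row
    preds = output.max(1)[1].type_as(labels)
    return preds.eq(labels).double().sum() / len(labels)

# Hypothetical raw scores (4 nodes x 3 classes) and true labels
logits = torch.tensor([[2.0, 0.1, 0.1],
                       [0.1, 2.0, 0.1],
                       [0.1, 0.1, 2.0],
                       [2.0, 0.1, 0.1]])
labels = torch.tensor([0, 1, 2, 1])  # the last node is misclassified

log_probs = F.log_softmax(logits, dim=1)  # what GCN.forward returns
loss = F.nll_loss(log_probs, labels)      # mean negative log-likelihood
acc = accuracy(log_probs, labels)         # 3 of 4 correct
print("loss: {:.4f}  acc: {:.4f}".format(loss.item(), acc.item()))
```

Pairing `log_softmax` with `nll_loss` is equivalent to applying `F.cross_entropy` to the raw scores.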

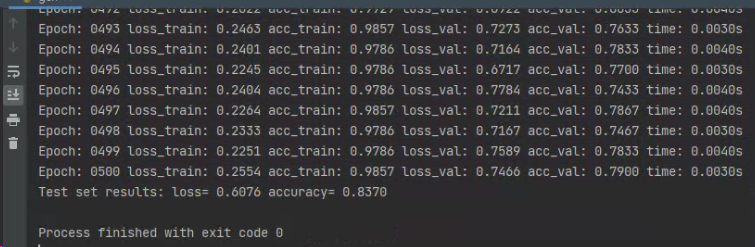

Hyperparameter tuning:

Results with `epochs` set to 5:

-------------------------------------------------------------------------

Results when run from the command line:
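
The script hard-codes its hyperparameters, so a run such as the 5-epoch one requires editing the file. A minimal sketch of exposing them as command-line flags with the standard `argparse` module follows; the flag names and the script name `gcn_cora.py` are hypothetical and simply mirror the variables in the script. It also shows what `'store_true'` means: it is an `argparse` action under which a flag defaults to `False`, so assigning the string `'store_true'` directly to a variable makes it always truthy.

```python
import argparse

def build_parser():
    """Hypothetical CLI mirroring the hard-coded hyperparameters in the script."""
    parser = argparse.ArgumentParser(description="Train a two-layer GCN on Cora")
    parser.add_argument("--epochs", type=int, default=500, help="number of training epochs")
    parser.add_argument("--lr", type=float, default=0.01, help="Adam learning rate")
    parser.add_argument("--weight_decay", type=float, default=5e-4, help="L2 weight decay")
    parser.add_argument("--hidden", type=int, default=16, help="number of hidden units")
    parser.add_argument("--dropout", type=float, default=0.5, help="dropout probability")
    # action="store_true": the flag is False unless it appears on the command line
    parser.add_argument("--fastmode", action="store_true",
                        help="validate without a separate eval-mode forward pass")
    return parser

# Equivalent of running: python gcn_cora.py --epochs 5
args = build_parser().parse_args(["--epochs", "5"])
print(args.epochs, args.fastmode)
```

In an actual script, `parse_args()` would be called with no argument so it reads `sys.argv`, and `args.epochs`, `args.lr`, and so on would replace the module-level constants.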