本地模式问题系列:

问题一:会报如下很多NoClassDefFoundError的错误,原因缺少相关依赖包

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/fs/FSDataInputStream at org.apache.spark.SparkConf.loadFromSystemProperties(SparkConf.scala:76) at org.apache.spark.SparkConf.<init>(SparkConf.scala:71) at org.apache.spark.SparkConf.<init>(SparkConf.scala:58) at com.hadoop.sparkPi$.main(sparkPi.scala:9) at com.hadoop.sparkPi.main(sparkPi.scala) Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FSDataInputStream at java.net.URLClassLoader.findClass(URLClassLoader.java:381) at java.lang.ClassLoader.loadClass(ClassLoader.java:424) at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:335) at java.lang.ClassLoader.loadClass(ClassLoader.java:357) ... 5 more

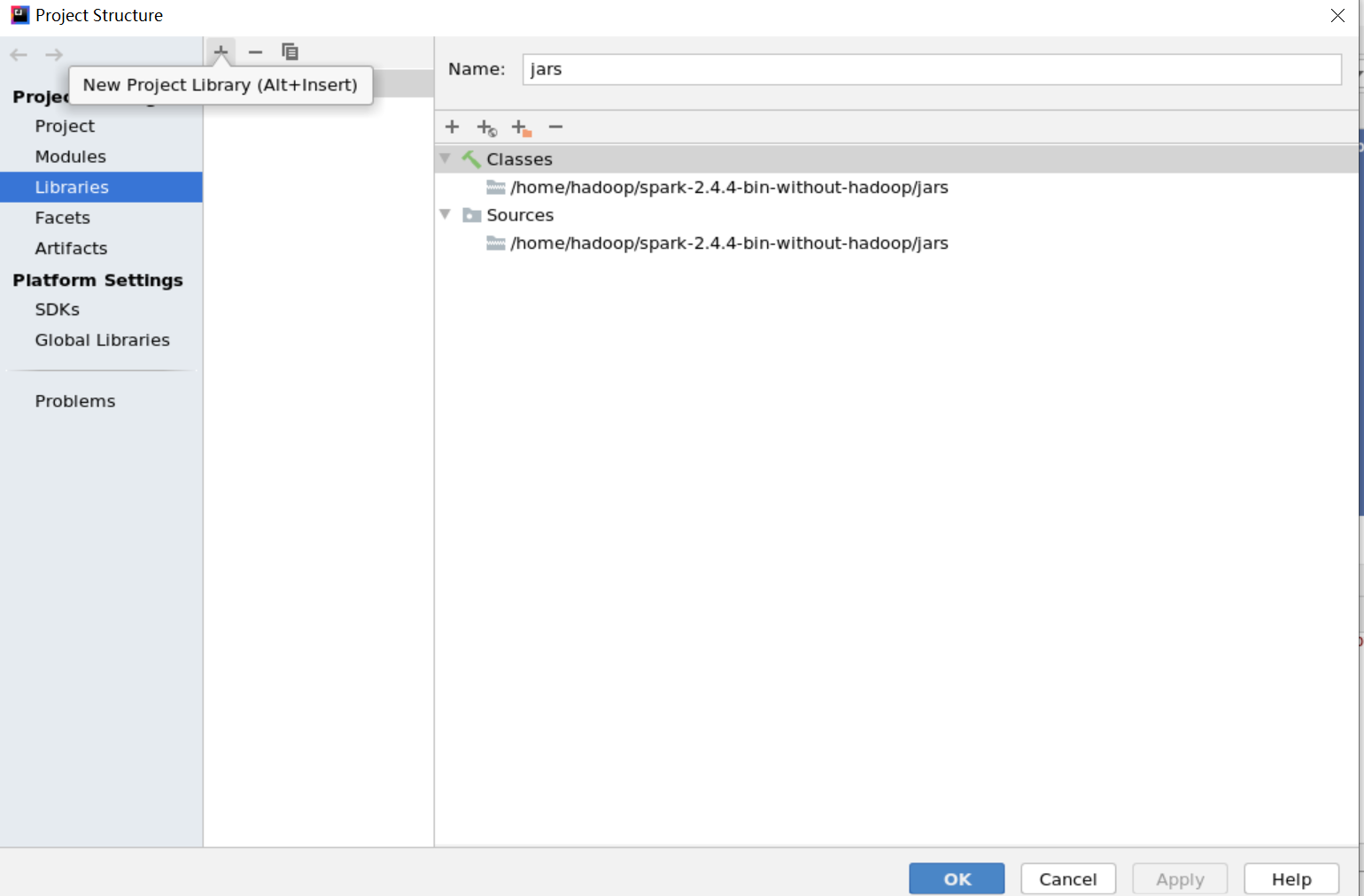

解决办法:下载相关缺少的依赖包,并在idea工程界面加入依赖包,路径为:file -- project structure -- libraries 中,点击左上角“+”符号添加依赖包的路径

问题二:Spark是非常依赖内存的计算框架,在虚拟环境下使用local模式时,实际上是使用多线程的形式模拟集群进行计算,因而对于计算机的内存有一定要求,这是典型的因为计算机内存不足而抛出的异常。

Exception in thread "main" java.lang.IllegalArgumentException: System memory 425197568 must be at least 471859200.

Please increase heap size using the --driver-memory option or spark.driver.memory in Spark configuration.

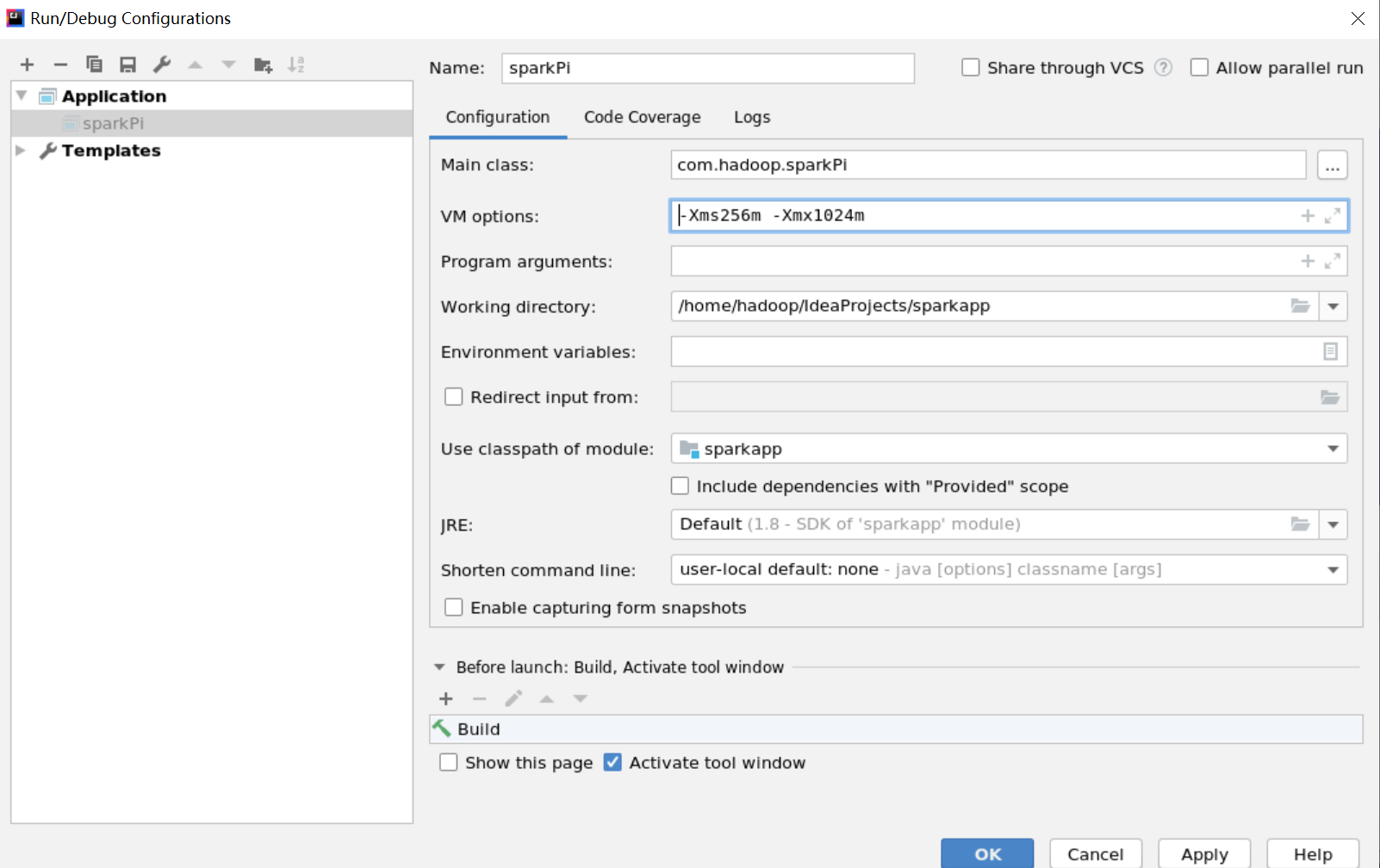

解决办法:修改代码或者设置-Xms256m -Xmx1024m

val conf = new SparkConf().setMaster("local").setAppName("sparkPi") //修改之前

val conf = new SparkConf().setMaster("local").setAppName("sparkPi").set("spark.testing.memory","2147480000") //修改之后

或