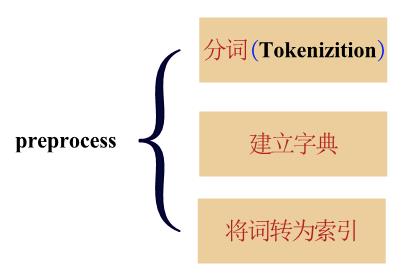

Text preprocessing typically consists of the following steps:

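- Read in the raw text

- Tokenize the text (tokenization)

- Build a vocabulary (vocab) that maps each word to a unique index

- Use the vocabulary to convert each text sequence into an index sequence that can be fed to the model

- Build the word embedding matrix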
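The sketch below runs all five steps on a toy English sentence. It uses whitespace tokenization and random vectors in place of pretrained embeddings, and every name in it is illustrative. Like the code later in this note, it reserves index 0 for padding.

```python
import random

# Step 1: read the text (an in-memory string stands in for a file)
raw_text = "the cat sat on the mat"

# Step 2: tokenize; a whitespace split only works for space-delimited languages
tokens = raw_text.split()

# Step 3: build the vocabulary in first-seen order; index 0 is reserved for padding
word2idx = {}
for token in tokens:
    if token not in word2idx:
        word2idx[token] = len(word2idx) + 1

# Step 4: convert the token sequence into an index sequence
indices = [word2idx[token] for token in tokens]

# Step 5: one embedding row per index, including row 0 for padding;
# random values stand in for pretrained word vectors
embed_dim = 4
random.seed(0)
embedding_matrix = [[random.random() for _ in range(embed_dim)]
                    for _ in range(len(word2idx) + 1)]
```

Here `indices` is `[1, 2, 3, 4, 1, 5]`. The repeated word "the" maps to the same index both times.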

Reading the text

```python
from collections import Counter


class ZOLDatesetReader:
    @staticmethod
    def __data_Counter__(fnames):
        # Counters and length statistics
        jieba_counter = Counter()  # note: update() with a str counts individual characters
        label_counter = Counter()
        max_length_text, min_length_text = 0, 1000
        max_length_img, min_length_img = 0, 1000
        lengths_text, lengths_img = [], []

        for fname in fnames:
            with open(fname, 'r', encoding='utf-8', newline='\n', errors='ignore') as fin:
                lines = fin.readlines()
            # Each sample spans 4 lines: text, image list, aspect, polarity
            for i in range(0, len(lines), 4):
                text_raw = lines[i].strip()
                imgs = lines[i + 1].strip()[1:-1].split(',')  # "[a.jpg,b.jpg]" -> ['a.jpg', 'b.jpg']
                aspect = lines[i + 2].strip()
                polarity = lines[i + 3].strip()

                length_text = len(text_raw)
                length_img = len(imgs)
                max_length_text = max(max_length_text, length_text)
                min_length_text = min(min_length_text, length_text)
                lengths_text.append(length_text)
                max_length_img = max(max_length_img, length_img)
                min_length_img = min(min_length_img, length_img)
                lengths_img.append(length_img)

                jieba_counter.update(text_raw)
                label_counter.update([polarity])

        print(label_counter)
```
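The reader above expects each sample to span four lines: the text, a bracketed comma-separated image list, the aspect, and the polarity. The sketch below parses an in-memory file in that layout and collects the same statistics. The sample content and the polarity labels are made up for illustration, and `read_records` is a hypothetical helper.

```python
import io
from collections import Counter

# Hypothetical two-sample file in the assumed 4-lines-per-sample layout
sample = io.StringIO(
    "屏幕很清晰\n[a.jpg,b.jpg]\n屏幕\n1\n"
    "电池不耐用\n[c.jpg]\n电池\n0\n"
)


def read_records(fin):
    lines = fin.readlines()
    for i in range(0, len(lines), 4):
        yield {
            'text': lines[i].strip(),
            'imgs': lines[i + 1].strip()[1:-1].split(','),
            'aspect': lines[i + 2].strip(),
            'polarity': lines[i + 3].strip(),
        }


records = list(read_records(sample))
label_counter = Counter(r['polarity'] for r in records)
text_lengths = [len(r['text']) for r in records]  # character counts
img_counts = [len(r['imgs']) for r in records]
```

One caveat applies to the parsing pattern shared by both versions. An empty list `[]` becomes `['']`, which is counted as one image.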

Removing stopwords

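```python
import nltk
from nltk.corpus import stopwords

nltk.download('stopwords')
stopwords_set = set(stopwords.words('english'))  # set for fast membership tests

text = " What? You don't love python?"
# Build a new list instead of calling remove() while iterating, which skips
# the element after each removed word. Lowercase before comparing because
# NLTK's list is lowercase; punctuation such as "What?" is still not stripped.
words = [word for word in text.split() if word.lower() not in stopwords_set]
```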
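The original loop called `text.remove(word)` while iterating over `text`. That pattern silently skips words: after each removal, the next element shifts into the slot the iterator has already passed. The sketch below shows the effect with a small hand-picked stopword set (an assumption standing in for NLTK's list).

```python
STOPWORDS = {"a", "the", "is"}


def remove_in_place(words):
    # Buggy pattern: mutating the list while iterating over it
    for w in words:
        if w in STOPWORDS:
            words.remove(w)
    return words


def remove_with_comprehension(words):
    # Safe pattern: build a new list
    return [w for w in words if w not in STOPWORDS]


tokens = "the the cat is a pet".split()
buggy = remove_in_place(list(tokens))
fixed = remove_with_comprehension(tokens)
```

With this input, `buggy` still contains `'the'` and `'a'`, while `fixed` is `['cat', 'pet']`.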

Using a custom stopword list

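```python
import jieba


def jieba_cut(text):
    text = dp_txt(text)  # dp_txt: preprocessing helper defined elsewhere in the original code (not shown)
    # Load the custom stopword list, one word per line, into a set
    with open('./datasets/stopwords.txt', encoding='utf-8') as f:
        stopwords = {line.rstrip() for line in f}
    # Segment in precise mode (cut_all=False) and drop stopwords
    segs = jieba.cut(text, cut_all=False)
    final = ''.join(seg for seg in segs if seg not in stopwords)
    # Re-segment the filtered text and join the tokens with spaces
    seg_list = jieba.cut(final, cut_all=False)
    text_cut = ' '.join(seg_list)
    return text_cut
```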
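The stopword-file handling can be tried without jieba. In the sketch below, the stopword file is an in-memory stand-in and the tokens are hand-written to look like segmenter output (both are assumptions). `load_stopwords` and `filter_tokens` are hypothetical helpers.

```python
import io


def load_stopwords(fin):
    # One stopword per line; strip trailing newlines and skip blank lines
    return {line.rstrip() for line in fin if line.strip()}


def filter_tokens(tokens, stopwords):
    return [t for t in tokens if t not in stopwords]


stopword_file = io.StringIO("的\n了\n很\n\n")
stopwords = load_stopwords(stopword_file)
# Tokens as a segmenter such as jieba might produce them (hand-written here)
tokens = ["这个", "手机", "的", "屏幕", "很", "清晰"]
result = " ".join(filter_tokens(tokens, stopwords))
```

Skipping blank lines keeps the empty string out of the set. The `{}.fromkeys(...)` form in the original would include it whenever the file contains a blank line.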

Building the vocabulary

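```python
# In Tokenizer.__init__: index 0 is reserved for padding, so real words start at 1
self.word2idx = {}
self.idx2word = {}
self.idx = 1


# Tokenizer method: add every unseen word to the vocabulary in first-seen order
def fit_on_text(self, text):
    if self.lower:
        text = text.lower()
    words = text.split()
    for word in words:
        if word not in self.word2idx:
            self.word2idx[word] = self.idx
            self.idx2word[self.idx] = word
            self.idx += 1
```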
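`fit_on_text` is a method of a `Tokenizer` class whose constructor is not shown. The sketch below wraps the same logic in a hypothetical `MiniTokenizer` with only a `lower` flag, so it can run on its own. Calling it on several texts extends one shared vocabulary.

```python
class MiniTokenizer:
    """Hypothetical stand-in for the Tokenizer class the snippet above belongs to."""

    def __init__(self, lower=True):
        self.lower = lower
        self.word2idx = {}
        self.idx2word = {}
        self.idx = 1  # 0 is reserved for padding

    def fit_on_text(self, text):
        if self.lower:
            text = text.lower()
        for word in text.split():
            if word not in self.word2idx:
                self.word2idx[word] = self.idx
                self.idx2word[self.idx] = word
                self.idx += 1


tok = MiniTokenizer()
tok.fit_on_text("The screen is clear")
tok.fit_on_text("the battery is weak")
```

Because of lowercasing, "The" and "the" share index 1. The repeated "is" keeps the index it received first.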

Mapping text to index sequences

```python
def text_to_sequence(self, text, isaspect=False, reverse=False):
    if self.lower:
        text = text.lower()
    words = text.split()
    # Indices 1..len(word2idx) are known words; out-of-vocabulary words get len(word2idx) + 1
    unknownidx = len(self.word2idx) + 1
    sequence = [self.word2idx.get(w, unknownidx) for w in words]
    if len(sequence) == 0:
        sequence = [0]  # 0 is the padding index
    pad_and_trunc = 'post'  # use post padding together with torch.nn.utils.rnn.pack_padded_sequence
    if reverse:
        sequence = sequence[::-1]
    # Aspect terms and full sentences are padded/truncated to different lengths
    max_len = self.max_aspect_len if isaspect else self.max_seq_len
    return Tokenizer.pad_sequence(sequence, max_len, dtype='int64',
                                  padding=pad_and_trunc, truncating=pad_and_trunc)
```
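`Tokenizer.pad_sequence` is called above but its code is not shown. The sketch below is a hypothetical stand-in. It assumes Keras-style `pad_sequences` semantics, where `'pre'`/`'post'` choose which end is padded or truncated. It returns a plain list rather than an `int64` array and assumes `maxlen >= 1`.

```python
def pad_sequence(sequence, maxlen, padding='post', truncating='post', value=0):
    """Hypothetical stand-in for Tokenizer.pad_sequence (not shown in the source)."""
    if truncating == 'pre':
        seq = sequence[max(len(sequence) - maxlen, 0):]  # drop items from the front
    else:
        seq = sequence[:maxlen]  # drop items from the end
    pad = [value] * (maxlen - len(seq))
    return seq + pad if padding == 'post' else pad + seq
```

Post padding keeps the real tokens at the start of every row. That is the layout `pack_padded_sequence` expects: given each sequence's true length, it reads only that many leading elements.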

Building the embedding matrix

```python
import os
import pickle

import numpy as np


def build_embedding_matrix(word2idx, embed_dim, type):
    embedding_matrix_file_name = '{0}_{1}_embedding_matrix.dat'.format(str(embed_dim), type)
    if os.path.exists(embedding_matrix_file_name):
        # Reuse the matrix cached by a previous run
        print('loading embedding_matrix:', embedding_matrix_file_name)
        with open(embedding_matrix_file_name, 'rb') as f:
            embedding_matrix = pickle.load(f)
    else:
        print('loading word vectors...')
        # +2 rows: index 0 (padding) and len(word2idx)+1 (unknown word).
        # np.random.rand fills every row uniformly in [0, 1), so these two rows and any word
        # missing from the pretrained vectors keep random values, not all-zeros.
        embedding_matrix = np.random.rand(len(word2idx) + 2, embed_dim)
        # 300-d uses Chinese word vectors; other sizes use GloVe
        if embed_dim != 300:
            fname = '../../datasets/GloveData/glove.6B.' + str(embed_dim) + 'd.txt'
        else:
            fname = ('../../datasets/ChineseWordVectors/sgns.target.word-character.'
                     'char1-2.dynwin5.thr10.neg5.dim' + str(embed_dim) + '.iter5')
        word_vec = load_word_vec(fname, word2idx=word2idx)  # helper defined elsewhere (not shown)
        print('building embedding_matrix:', embedding_matrix_file_name)
        for word, i in word2idx.items():
            vec = word_vec.get(word)
            if vec is not None:
                embedding_matrix[i] = vec
        with open(embedding_matrix_file_name, 'wb') as f:
            pickle.dump(embedding_matrix, f)
    return embedding_matrix
```
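`load_word_vec` is called above but not shown. The sketch below is a hypothetical version that reads an open text file with one word followed by its vector values per line, space-separated. That is GloVe's text layout; word2vec-style text files add a header line first. The sketch differs from the original call in two ways: it takes a file object instead of a path, and it takes an explicit `embed_dim`, which it uses to skip header or malformed lines. The vectors below are made up. The matrix here is zero-initialized for simplicity, whereas the original uses random initialization.

```python
import io


def load_word_vec(fin, embed_dim, word2idx=None):
    """Hypothetical sketch of the load_word_vec helper (the original is not shown)."""
    word_vec = {}
    for line in fin:
        parts = line.rstrip().split(' ')
        word, values = parts[0], parts[1:]
        # Skip header or malformed lines whose vector size is wrong
        if len(values) != embed_dim:
            continue
        # Keep only words that appear in the vocabulary to save memory
        if word2idx is None or word in word2idx:
            word_vec[word] = [float(v) for v in values]
    return word_vec


# Toy vectors with a word2vec-style header line (made-up values)
vectors_file = io.StringIO("3 3\ncat 0.1 0.2 0.3\ndog 0.4 0.5 0.6\nfish 0.7 0.8 0.9\n")
word2idx = {"cat": 1, "dog": 2, "bird": 3}
word_vec = load_word_vec(vectors_file, embed_dim=3, word2idx=word2idx)

# Rows: 0 = padding, 1..3 = vocabulary, 4 = unknown word
embedding_matrix = [[0.0] * 3 for _ in range(len(word2idx) + 2)]
for word, i in word2idx.items():
    vec = word_vec.get(word)
    if vec is not None:
        embedding_matrix[i] = vec
```

"bird" has no pretrained vector, so its row keeps the initial value. "fish" is never loaded because it is not in the vocabulary.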