Let's see how to load balance a Node.js server across all CPU cores with the built-in `cluster` module.

Before we start with the solution, let's measure how many concurrent requests a single Node.js process on your machine can handle.

This is our server.js:

```javascript
// server.js
const http = require('http');
const pid = process.pid;

http.createServer((req, res) => {
  for (let i = 0; i < 1e7; i++); // simulate CPU work
  res.end(`Handled by process ${pid}`);
}).listen(8000, () => {
  console.log(`Started process ${pid}`);
});
```
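A single Node.js process runs your JavaScript on one thread, so while the busy loop in the handler runs, every other request waits. As a rough sketch (not part of the original setup), you can time that loop and turn it into an upper bound on requests per second for one process. The helper names are hypothetical, and since the JIT may optimize an empty loop, treat the figure as a ballpark only:

```javascript
// Estimate the cost of the "simulate CPU work" loop from server.js
// and the throughput ceiling it implies for one single-threaded process.
const { performance } = require('perf_hooks');

// Same busy loop as the request handler in server.js
function simulateCpuWork() {
  for (let i = 0; i < 1e7; i++);
}

// Hypothetical helper: average loop time in milliseconds over several runs
function averageWorkMs(runs = 20) {
  simulateCpuWork(); // warm-up run before timing
  const start = performance.now();
  for (let r = 0; r < runs; r++) simulateCpuWork();
  return (performance.now() - start) / runs;
}

const workMs = averageWorkMs();
// One thread can run at most 1000 / workMs handlers per second,
// ignoring HTTP parsing and network overhead.
const maxRequestsPerSecond = 1000 / workMs;

console.log(`Loop takes ~${workMs.toFixed(2)} ms per request`);
console.log(`Upper bound for one process: ~${Math.round(maxRequestsPerSecond)} req/s`);
```

Comparing this bound with the `ab` result shows how much of each request's time goes to the busy loop rather than to HTTP handling.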

Use ApacheBench (`ab`) to send 200 concurrent requests (`-c200`) for 10 seconds (`-t10`):

```sh
ab -c200 -t10 http://localhost:8000/
```

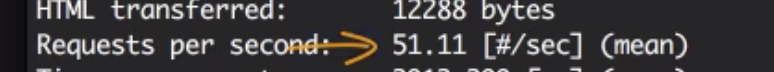

A single Node.js server process handled about 51 requests/second.

Create cluster.js:

```javascript
// cluster.js
const cluster = require('cluster');
const os = require('os');

// The first time this file runs, it is the master process and forks the workers.
// Each worker runs this file again and loads server.js.
// (Since Node.js 16, cluster.isPrimary is preferred; isMaster is a deprecated alias.)
if (cluster.isMaster) {
  const cpus = os.cpus().length;
  console.log(`Forking for ${cpus} CPUs`);
  for (let i = 0; i < cpus; i++) {
    cluster.fork();
  }
} else {
  require('./server');
}
```

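The first time cluster.js runs, it is the master process (`cluster.isMaster` is `true`), so it forks as many workers as there are CPU cores. Each worker runs cluster.js again, this time with `cluster.isMaster` set to `false`, so it just requires server.js. All workers listen on the same port 8000. That's it, simple enough!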
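Although every worker calls `listen(8000)`, by default (on every platform except Windows) the master process owns the port: it accepts each connection and passes it to the workers in round-robin order. This is the `cluster.SCHED_RR` policy, which can be changed through `cluster.schedulingPolicy` or the `NODE_CLUSTER_SCHED_POLICY` environment variable. Below is a toy model of the rotation order only, not Node's internal scheduler (which also tries to avoid overloading a busy worker); the `RoundRobin` class is hypothetical:

```javascript
// Toy model of round-robin connection distribution across workers.
class RoundRobin {
  constructor(workerIds) {
    if (workerIds.length === 0) throw new Error('need at least one worker');
    this.workerIds = workerIds;
    this.next = 0;
  }

  // Return the worker id that should receive the next connection
  pick() {
    const id = this.workerIds[this.next];
    this.next = (this.next + 1) % this.workerIds.length;
    return id;
  }
}

const scheduler = new RoundRobin([1, 2, 3, 4]);
const assigned = [];
for (let conn = 0; conn < 6; conn++) {
  assigned.push(scheduler.pick());
}
console.log(assigned); // [ 1, 2, 3, 4, 1, 2 ]
```

Setting `cluster.schedulingPolicy = cluster.SCHED_NONE` before the first `cluster.fork()` leaves the distribution to the operating system instead.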

Running:

```sh
node cluster.js
```

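When you refresh the page, you should see that requests are handled by different workers (different process IDs). A browser that reuses a keep-alive connection may stay on the same worker for several refreshes, because a connection stays with the worker that received it.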

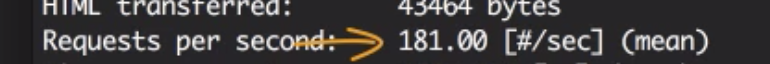

Now run the same `ab` test again:

```sh
ab -c200 -t10 http://localhost:8000/
```

This time the result is 181 requests/second, about 3.5 times the single-process figure!
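The improvement is simply the ratio of the two measured throughputs. This sketch only restates the numbers from this post; on other machines the ratio will differ, and it is likely to stay below the number of workers because the master process, the OS and (if run on the same machine) `ab` itself also need CPU time:

```javascript
// Throughput figures reported by ab earlier in this post
const singleProcessRps = 51; // node server.js
const clusterRps = 181;      // node cluster.js

// Speedup = cluster throughput / single-process throughput
const speedup = clusterRps / singleProcessRps;
console.log(`Speedup: ${speedup.toFixed(2)}x`); // Speedup: 3.55x
```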

Sometimes the master process and the worker processes need to communicate with each other.

In cluster.js, the master can send a message to each worker:

```javascript
// cluster.js
const cluster = require('cluster');
const os = require('os');

// The first time this file runs, it is the master process and forks the workers.
if (cluster.isMaster) {
  const cpus = os.cpus().length;
  console.log(`Forking for ${cpus} CPUs`);
  for (let i = 0; i < cpus; i++) {
    cluster.fork();
  }

  // cluster.workers is an object of Worker instances keyed by worker id
  console.dir(cluster.workers, { depth: 0 });

  // Send a message to every worker over its IPC channel
  Object.values(cluster.workers).forEach((worker) => {
    worker.send(`Hello Worker ${worker.id}`);
  });
} else {
  require('./server');
}
```

In server.js, each worker listens for the `message` event on `process`:

```javascript
// server.js
const http = require('http');
const pid = process.pid;

http.createServer((req, res) => {
  for (let i = 0; i < 1e7; i++); // simulate CPU work
  res.end(`Handled by process ${pid}`);
}).listen(8000, () => {
  console.log(`Started process ${pid}`);
});

// Messages sent by the master with worker.send() arrive here
process.on('message', (msg) => {
  console.log(`Message from master: ${msg}`);
});
```
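Once the master sends more than one kind of message (greeting strings here, `{ usersCount }` objects in the next example), a single `message` listener that dispatches on a field keeps the handling in one place. A minimal sketch, assuming a hypothetical convention where every message is an object with a `type` field, so the master would send `{ type: 'greeting', text: ... }` instead of a bare string:

```javascript
// Hypothetical message convention: { type: string, ...payload }
const state = { usersCount: undefined, greetings: [] };

// One handler per message type; a Map avoids clashes with Object.prototype keys
const handlers = new Map([
  ['greeting', (msg) => { state.greetings.push(msg.text); }],
  ['usersCount', (msg) => { state.usersCount = msg.value; }],
]);

// Route a message to its handler; returns false for unknown shapes
function handleMessage(msg) {
  const handler = msg !== null && typeof msg === 'object' ? handlers.get(msg.type) : undefined;
  if (!handler) {
    console.warn('Ignoring unknown message:', msg);
    return false;
  }
  handler(msg);
  return true;
}

// In server.js you would register it once:
//   process.on('message', handleMessage);

// Simulated messages, shaped as the master might send them with worker.send()
handleMessage({ type: 'greeting', text: 'Hello Worker 1' });
handleMessage({ type: 'usersCount', value: 36 });
handleMessage('plain string'); // not an object, so it is ignored

console.log(state); // { usersCount: 36, greetings: [ 'Hello Worker 1' ] }
```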

A practical example is counting users with a database query:

```javascript
// cluster.js
const cluster = require('cluster');
const os = require('os');

/**
 * Mock DB call: returns a different (growing) user count on each call
 */
let count = 6;
const numberOfUsersDB = () => {
  count = count * count;
  return count;
};

// The first time this file runs, it is the master process and forks the workers.
if (cluster.isMaster) {
  const cpus = os.cpus().length;
  console.log(`Forking for ${cpus} CPUs`);
  for (let i = 0; i < cpus; i++) {
    cluster.fork();
  }

  // Query the "DB" once in the master and push the result to every worker
  const updateWorkers = () => {
    const usersCount = numberOfUsersDB();
    Object.values(cluster.workers).forEach((worker) => {
      worker.send({ usersCount });
    });
  };

  updateWorkers();
  setInterval(updateWorkers, 10000); // refresh every 10 seconds
} else {
  require('./server');
}
```

Here the master process runs the (mock) database query and sends the result to all workers, once right away and then every 10 seconds. The query therefore runs once per interval instead of once in every worker.
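A worker that has crashed or is shutting down can no longer receive messages, so a broadcast helper might check `worker.isConnected()` (a real `cluster.Worker` method) before calling `worker.send()`. A minimal sketch; `broadcast` and `makeFakeWorker` are hypothetical names, and the fake workers only stand in for `cluster.Worker` objects so the snippet runs on its own:

```javascript
// Send one payload to every worker with an open IPC channel;
// returns how many workers it was sent to.
function broadcast(workers, payload) {
  let sent = 0;
  for (const worker of Object.values(workers)) {
    if (worker && worker.isConnected()) {
      worker.send(payload);
      sent++;
    }
  }
  return sent;
}

// In cluster.js you would call: broadcast(cluster.workers, { usersCount });
// Fake workers with the two methods broadcast() uses
const makeFakeWorker = (connected) => ({
  inbox: [],
  isConnected: () => connected,
  send(msg) { this.inbox.push(msg); },
});

const fakeWorkers = { 1: makeFakeWorker(true), 2: makeFakeWorker(false), 3: makeFakeWorker(true) };
const delivered = broadcast(fakeWorkers, { usersCount: 36 });
console.log(delivered); // 2
```

A worker forked after a broadcast would not see the count until the next interval; sending the current value from a `cluster.on('online', ...)` listener is one possible way to cover that.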

Then in server.js, each worker just stores the latest count it receives and includes it in its responses:

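```javascript
// server.js
const http = require('http');
const pid = process.pid;

// Latest value pushed by the master (undefined until the first message)
let usersCount;

http.createServer((req, res) => {
  for (let i = 0; i < 1e7; i++); // simulate CPU work
  res.write(`Users ${usersCount}\n`);
  res.end(`Handled by process ${pid}`);
}).listen(8000, () => {
  console.log(`Started process ${pid}`);
});

process.on('message', (msg) => {
  usersCount = msg.usersCount;
});
```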
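`usersCount` stays `undefined` until the worker has received a message from the master, so a worker that misses a broadcast (for example, one forked later to replace a crashed worker, which this post does not do) would answer `Users undefined` until the next update. A small sketch of a response builder with a placeholder; `renderBody` is a hypothetical helper:

```javascript
// Build the response body, with a placeholder until the master has sent a count
function renderBody(usersCount, pid) {
  const users = usersCount === undefined ? 'unknown (waiting for master)' : usersCount;
  return `Users ${users}\nHandled by process ${pid}`;
}

// Inside the request handler you could then write:
//   res.end(renderBody(usersCount, pid));

console.log(renderBody(undefined, 1234));
console.log(renderBody(1296, 1234));
```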